Immersion

Description

Week 5: February 13 – 19

The experience of entering a multi-sensory representation of three-dimensional space. An exploration of the evolution of virtual reality and 3D virtual space: multimedia as an immersive experience that engages all the senses. We will survey the research of VR artists and scientists dating back to the 1950s, including Morton Heilig, Ivan Sutherland, Scott Fisher, Jenny Holzer, Jeffrey Shaw, and Char Davies, who pioneered the tools and aesthetics of virtual reality, stereoscopic imaging, and telepresence that led to the creation of digital, immersive environments.

Assignments

Due in two weeks: February 27 (there will be no class next week)

Reading

- Constance Lewallen, “Still Subversive After All These Years” (introduction to the next essay)

- Michael Sorkin, “Sex, Drugs, Rock and Roll, Cars, Dolphins, and Architecture,” in Ant Farm (1968-1978), Berkeley: UC Press, 2004

Viewing

Chip Lord, live from the NMC Media Lounge at the College Art Association conference ::: Friday, February 23, 12:00pm-1:00pm (Pacific Standard Time, US) (UTC-8) ::: Networked Conversations is hosted by Randall Packer

View the recording:

Research Critique

Each student will be assigned one of the following works by the media collective Ant Farm to research and critique:

- Cadillac Ranch (1974)

- Media Burn (1975)

- The Eternal Frame (1975)

Write an extended 500-word essay about your assigned work. Incorporate the readings (see above) and, where relevant, the interview with Chip Lord into your research post, using at least one quote from each reading to support your own research and analysis.

Note that I have provided an analysis of the second reading, which some of you may find challenging because of its colloquialisms; however, I feel its tone and approach are important to understanding Ant Farm, their ironic humor, and their unique approach to the critique of American culture.

The goal of the research critique is to conduct independent research by reviewing the online documentation of the work, visiting the artists’ website, and searching online for any other relevant information about the artists and their work. You will give a presentation of your research in class, and we will discuss how it relates to the topic of the week: The Media Collective & Interdisciplinary Forms.

Here are instructions for the research critique:

- Create a new post on your blog incorporating relevant hyperlinks, images, video, etc.

- Be sure to reference and quote from the reading to provide context for your critique

- Apply the “Research” category

- Apply appropriate tags

- Add a featured image

- Post a comment on at least one other research post prior to the following class

- Be sure your post is formatted correctly, is readable, and that all media and quotes are DISCUSSED in the essay, not just used as introductory material.

Be prepared to synthesize and present your summary for class discussion in two weeks.

Outline

Discussion of Readings

Ivan Sutherland, “The Ultimate Display,” 1965, Wired Magazine

A display connected to a digital computer gives us a chance to gain familiarity with concepts not realizable in the physical world. It is a looking glass into a mathematical wonderland.

How did Ivan Sutherland, in the 1960s, envision a “mathematical wonderland,” like something out of Lewis Carroll’s Alice in Wonderland: an imaginary place that defies the laws of the physical world? How can we draw on our understanding of today’s virtual reality and augmented reality to understand what Sutherland imagined more than 50 years ago?

Displays being sold now generally have built in line-drawing capability.

Ivan Sutherland’s earlier contribution to computer graphics was Sketchpad (1963), a pioneering program that enabled the user to draw directly on the screen with a light pen. By today’s standards, of course, it was very simple.

It is equally possible for a computer to construct a picture made up of colored areas. Knowlton’s movie language, BEFLIX is an excellent example of how computers can produce area-filling pictures.

Ken Knowlton of Bell Labs had just created one of the first computer animations. Knowlton developed the BEFLIX (Bell Flicks) programming language for bitmap computer-produced movies.

Tomorrow’s computer user will interact with a computer through a typewriter.

Research into interactive computing was just getting underway at the Stanford Research Institute, in the lab led by Douglas Engelbart. That work would lead to the graphical user interface developed at Xerox PARC, where Alan Kay was a leading figure.

A variety of other manual-input devices are possible. The light pen or RAND Tablet stylus serve a very useful function in pointing to displayed items and in drawing or printing for input to the computer.

This is a reference to the light pen that was used with Sketchpad. Sutherland was thinking about all the various ways the user could interact with the screen and with data, including the typewriter, the light pen, knobs, joysticks, push buttons, mobile keypad, voice input, and the movement of the head. He was anticipating the dataglove and other tracking devices that were later popularized with VR. Clearly he was interested in extending the notion of the user interface long before the technologies had been realized, as we saw with Vannevar Bush.

If the task of the display is to serve as a looking-glass into the mathematical wonderland constructed in computer memory, it should serve as many senses as possible.

He also foresaw that focusing exclusively on the visual sense was insufficient, and that the computer interface needed to take the other senses into account, such as sound, touch, and perhaps even smell and taste! This had already been the research of cinematographer Morton Heilig in the 1950s. It would become the focus of Scott Fisher’s work in the 1980s, which aimed to realize a multi-sensory tracking apparatus that became the foundation of modern-day VR.

The force required to move a joystick could be computer controlled, just as the actuation force on the controls of a Link Trainer are changed to give the feel of a real airplane.

In fact, an early form of VR was the flight simulator, such as the Link Trainer. It allowed pilots to gain flying experience in a simulated cockpit that gave the sensation of movement, direction, and speed. Does this remind us of Michael Naimark’s Aspen Movie Map and the idea of surrogate travel?

The computer can easily sense the positions of almost any of our body muscles.

This is a quantum leap in thinking about the human-computer interface: instead of the user merely typing on a keyboard, the computer responds to muscular movement. This idea formed the basis of VR, in which users move their bodies to control the experience. The touch screen on mobile devices, with its swiping and other gestures, can likewise be traced back to Ivan Sutherland’s research into new ways of translating body movement into the display and control of information and media.

Our eye dexterity is very high also. Machines to sense and interpret eye motion data can and will be built.

He also foresaw eye tracking, another important feature of VR and of wearable displays such as Google Glass. Eye tracking is also used extensively by people with paralysis to control their computers.

The user of one of today’s visual displays can easily make solid objects transparent – he can “see through matter!”

This idea anticipates augmented reality applications that let the viewer trigger graphics overlaid on physical space. The result is a blending of the real and the virtual that uses GPS coordinates, shapes, colors, and other cues to extend reality into the digital sphere. This was recently popularized by Pokémon Go and related applications that brought gaming into augmented reality.

The ultimate display would, of course, be a room within which the computer can control the existence of matter. A chair displayed in such a room would be good enough to sit in. Handcuffs displayed in such a room would be confining, and a bullet displayed in such a room would be fatal. With appropriate programming such a display could literally be the Wonderland into which Alice walked.

What is Ivan Sutherland imagining here? Is this the world we live in today? Have we embraced VR to the point that it has become our new reality?

Scott Fisher, “Virtual Environments,” 1989, Multimedia: From Wagner to Virtual Reality

PART 1. MEDIA TECHNOLOGY AND SIMULATION OF FIRST-PERSON EXPERIENCE

“Watch out for a remarkable new process called SENSORAMA! It attempts to engulf the viewer in the stimuli of reality. Viewing of the color stereo film is replete with binaural sound, colors, winds, and vibration. The original scene is recreated with remarkable fidelity. At this time, the system comes closer to duplicating reality than any other system we have seen!”

As you can see, Scott Fisher was influenced by Morton Heilig’s Sensorama, which served as a prototype for the multi-sensory virtual experience.

Evaluation of image realism should also be based on how closely the presentation medium can simulate dynamic, multimodal perception in the real world… The image would move beyond simple photo-realism to immerse the viewer in an interactive, multi-sensory display environment.

What he is referring to here is the phenomenon of the “suspension of disbelief.” Think about how Wagner activated the experience of the imaginary: not necessarily through realism, but by heightening and expanding total sensory awareness through immersion and, additionally, interactivity. Immersion and interactivity are the keys to creating “realism,” not by merely copying reality, but by augmenting, extending, and simulating it.

… the medium of television, as most experience it, plays to a passive audience. It has little to do with the nominal ability to ‘see at a distance’ other than in a vicarious sense…

Fisher was pointing beyond the passive telematics of television, a word that literally means “to see at a distance.” Seeing can be extended through interactivity, immersion, and touch, a condition referred to as “telepresence” (presence at a distance). In this way, one’s sense of presence is extended more believably to the remote location.

A key feature of these display systems (and of more expensive simulation systems) is that the viewer’s movements are non-programmed; that is, they are free to choose their own path through available information rather than remain restricted to passively watching a ‘guided-tour’.

The purpose of including interactivity in the immersive experience is to bring the suspension of disbelief into new narrative possibilities. We saw this with Lynn Hershman’s Deep Contact, in which a touch screen was used to take the viewer on a journey through a fantasy world. Fisher envisions VR as a medium for storytelling, which of course has materialized in the form of arcades, games, augmented reality, and artworks such as Char Davies’ Osmose.

For these systems to operate effectively, a comprehensive information database must be available to allow the user sufficient points of view.

Scott Fisher was also involved in the Aspen Movie Map at MIT. He understood the importance of linking a database of information, such as video clips of drives through Aspen, Colorado, with an interactive interface. This article is clearly based, at least in part, on that project’s results in enabling the viewer to navigate through a virtual space.

PART 2: THE EVOLUTION OF VIRTUAL ENVIRONMENTS

Matching visual display technology as closely as possible to human cognitive and sensory capabilities in order to better represent ‘direct experience’ has been a major objective in the arts, research, and industry for decades.

Early stereoscopic imaging from the 1950s

Flight simulators

The idea of sitting inside an image has been used in the field of aerospace simulation for many decades to train pilots and astronauts to safely control complex, expensive vehicles through simulated mission environments

This 30-second video from the early 1960s shows NASA flight test pilot Milt O. Thompson training in the X-15 flight simulator at the NASA Flight Research Center.

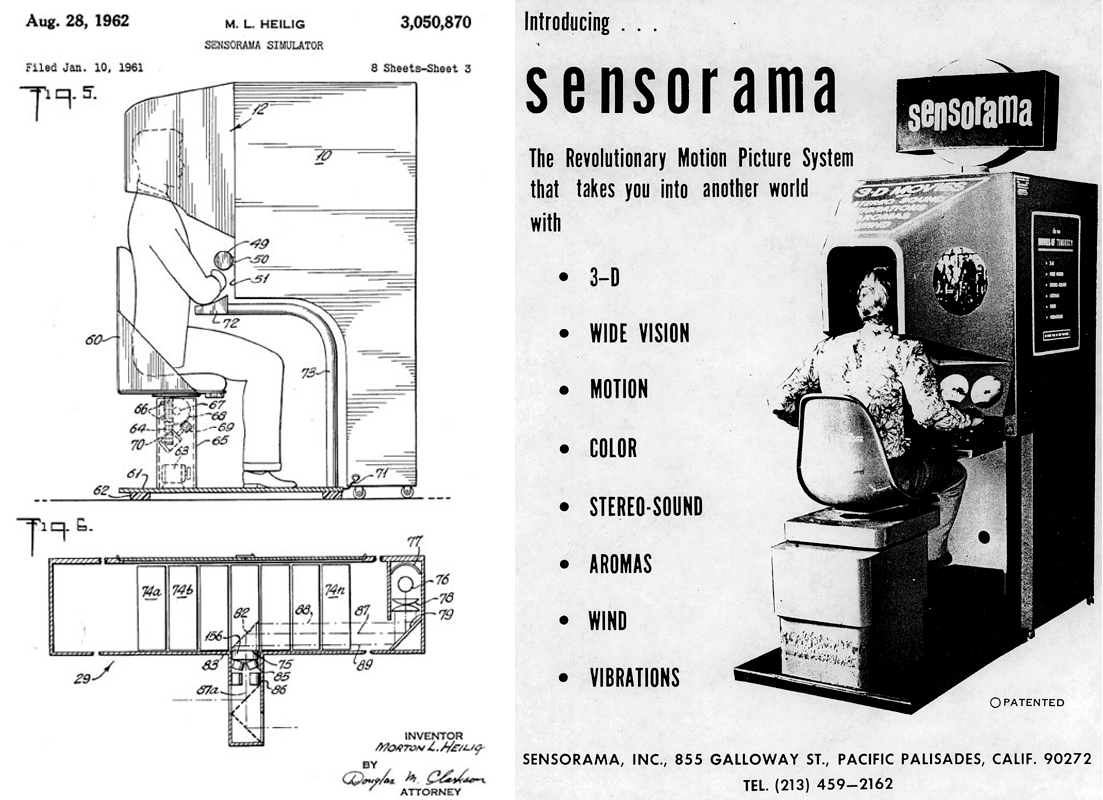

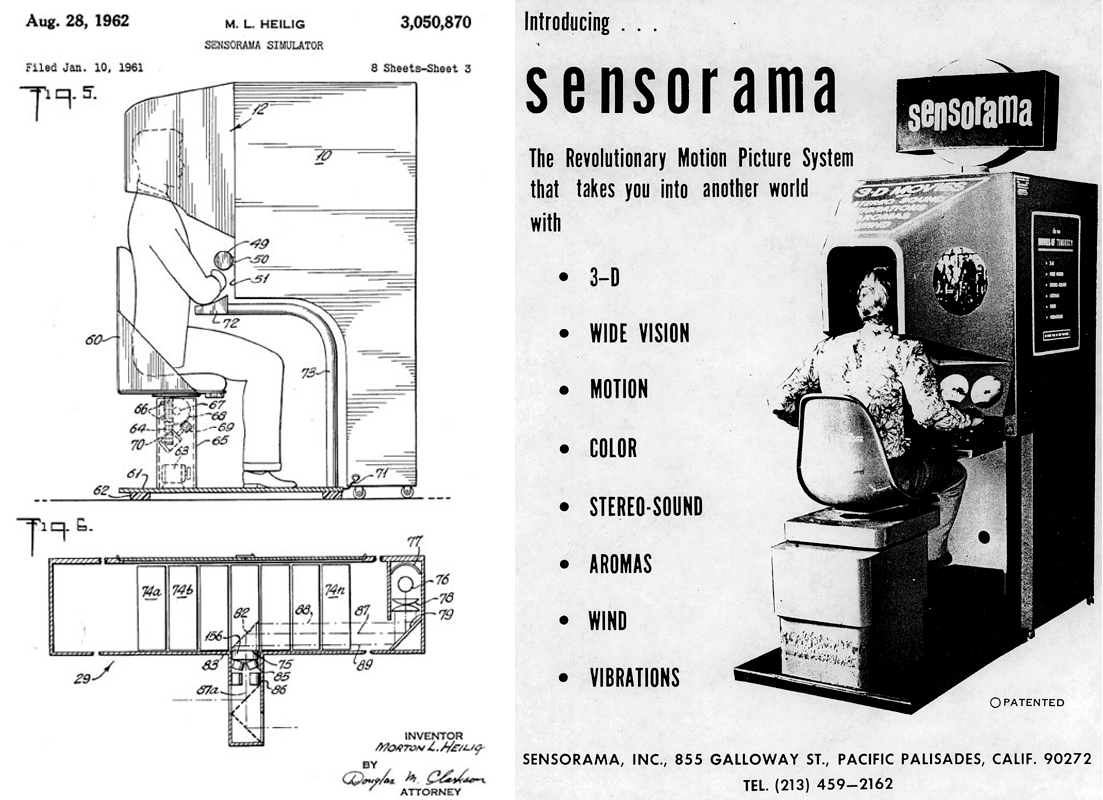

Sensorama (1960)

When you put your head up to a binocular viewing optics system, you saw a first-person viewpoint, stereo film loop of a motorcycle ride through New York City

Cinerama from the 1960s

Ivan Sutherland’s Head-Mounted Display (1969)

This helmet-mounted display had a see-through capability so that computer-generated graphics could be viewed superimposed onto the real environment. As the viewer moved around, those objects would appear to be stable within that real environment, and could be manipulated with various input devices that they also developed.

Aspen Movie Map

The Movie Map gave the operators the capability of sitting in front of a touch-sensitive display screen and driving through the town of Aspen at their own rate, taking any route they chose, by touching the screen, indicating what turns they wanted to make, and what buildings they wanted to enter.

More recent Omnimax

3. VIEW: THE NASA/AMES VIRTUAL ENVIRONMENT WORKSTATION

At NASA’s Ames Research Center, an interactive Virtual Interface Environment Workstation (VIEW) has been developed as a new kind of media-based display and control environment that is closely matched to human sensory and cognitive capabilities. The VIEW system provides a virtual auditory and stereoscopic image surround that is responsive to inputs from the operator’s position, voice and gestures.

Here we see the VIEW system at NASA Ames, in which the user is entirely immersed through sight (stereoscopic), sound (binaural), touch (data glove), and even voice (speech recognition). Taste and smell, however, have largely remained beyond the reach of VR research.

Scott Fisher describes the system:

The current Virtual Interface Environment Workstation system consists of: a wide-angle stereoscopic display unit, glove-like devices for multiple degree-of-freedom tactile input, connected speech recognition technology, gesture tracking devices, 3D auditory display and speech-synthesis technology, and computer graphic and video image generation equipment.

This was arguably the first complete, digitally immersive virtual reality system. As Scott Fisher has described, the intent was to make the total sensory experience believable and to activate the “suspension of disbelief.” This was achieved not necessarily by copying reality, but by deeply immersing the viewer in a virtual reality, captivating the senses, and giving the user interactive control.

The gloves provide interactive manipulation of virtual objects in virtual environments that are either synthesized with 3D computer-generated imagery, or that are remotely sensed by user-controlled, stereoscopic video camera configurations.

The dataglove is the tactile device that gives the user the sensation of being able to touch, control, and activate objects, as one would with a mouse or trackpad but well beyond it. It is as though the user truly crosses the screen, which Lynn Hershman could only suggest in Deep Contact.

For realtime video input of remote environments, two miniature CCD video cameras are used to provide stereoscopic imagery.

Stereoscopic imaging is now done in real time, much faster than in Ivan Sutherland’s early prototype. The real-time speed of the imaging creates the sensation that one can visually navigate the virtual space, as with the BOOM system.

4. VIRTUAL ENVIRONMENT APPLICATIONS

TELEPRESENCE – The VIEW system is currently used to interact with a simulated telerobotic task environment.

Again, telepresence refers to being “present at a distance.” Through the control of robotic arms, one gains an extended reach to touch, lift, and move virtual or even real objects. NASA used this technology to simulate underwater and space tasks, where it was difficult or even impossible to place real human beings.

DATASPACE – Advanced data display and manipulation concepts for information management are being developed with the VIEW system technology.

This is the ability to control or manipulate data within a spatial orientation, much like the Aspen Movie Map project, in which the viewer could navigate the virtual environment by activating data (videos) of the streets of Aspen.

5. PERSONAL SIMULATION: ARCHITECTURE, MEDICINE, ENTERTAINMENT

In addition to remote manipulation and information management tasks, the VIEW system also may be a viable interface for several commercial applications.

This has included VR arcades, flight simulation, space simulation, and underwater simulation. It has also included far-reaching applications such as telesurgery, in which doctors can operate at a distance, artistic projects such as Char Davies’ Osmose, and augmented reality games such as Pokémon Go, along with many other VR and augmented reality projects. Here is an example of a virtual tour of the Sapporo Brewery in Japan:

6. TELE-COLLABORATION THROUGH VIRTUAL PRESENCE

A major near-term goal for the Virtual Environment Workstation Project is to connect at least two of the current prototype interface systems to a common virtual environment database.

This refers to the multi-user environment, which requires users connected telematically from various locations. Although the topic is beyond the scope of this lecture, there are numerous games, Web conferencing platforms (such as Skype), research projects, and artworks that place viewers in a shared environment, or ‘third space.’ There, by joining the local and the remote, they can interact in real time in networked space. The CAVE (Cave Automatic Virtual Environment) system (1991) is one such environment: it allowed for tele-collaboration and immerses the viewer without requiring a head-mounted display.

The possibilities of virtual realities, it appears, are as limitless as the possibilities of reality. It provides a human interface that disappears – a doorway to other worlds.

This is the vision: a doorway to other worlds that allows us to create the unimaginable. It offers the poetics of virtual construction and shared experience that fully extend what Wagner had envisioned in his 19th-century operas.

Works for Review

Morton Heilig, artwork: Sensorama, 1960

Introduction to The Cinema of the Future (1955) by Morton Heilig, Multimedia: From Wagner to Virtual Reality

“Thus, individually and collectively, by thoroughly applying the methodology of art, the cinema of the future will become the first art form to reveal the new scientific world to man in the full sensual vividness and dynamic vitality of his consciousness.” – Morton Heilig

Morton Heilig, through a combination of ingenuity, determination, and sheer stubbornness, was the first person to attempt to create what we now call virtual reality. In the 1950s it occurred to him that all the sensory splendor of life could be simulated with “reality machines.” Heilig was a Hollywood cinematographer, and it was as an extension of cinema that he thought such a machine might be achieved. With his inclination, albeit amateur, toward the ontological aspirations of science, Heilig proposed that an artist’s expressive powers would be enhanced by a scientific understanding of the senses and perception. His premise was simple but striking for its time: if an artist controlled the multisensory stimulation of the audience, he could provide them with the illusion and sensation of first-person experience, of actually “being there.” Inspired by short-lived curiosities such as Cinerama and 3-D movies, it occurred to Heilig that a logical extension of cinema would be to immerse the audience in a fabricated world that engaged all the senses. He believed that by expanding cinema to involve not only sight and sound but also taste, touch, and smell, the traditional fourth wall of film and theater would dissolve, transporting the audience into an inhabitable, virtual world: a kind of “experience theater.” Unable to find support in Hollywood for his extraordinary ideas, Heilig moved to Mexico City in 1954, finding himself in a fertile mix of artists, filmmakers, writers, and musicians. There he elaborated on the multidisciplinary concepts found in this remarkable essay, “The Cinema of the Future.” Though not widely read, it served as the basis for two important inventions that Heilig patented in the 1960s. The first was the Telesphere Mask. The second, a quirky, nickelodeon-style arcade machine Heilig aptly dubbed Sensorama, catapulted viewers into multisensory excursions through the streets of Brooklyn, and offered other adventures in surrogate travel.
While neither device became a popular success, they influenced a generation of engineers fascinated by Heilig’s vision of inhabitable movies.

Myron Krueger, Videoplace, 1970

Originally trained as a computer scientist, Myron Krueger, under the influence of John Cage’s experiments in indeterminacy and audience participation, pioneered human-computer interaction in the context of physical environments. Beginning in 1969, he collaborated with artist and engineer colleagues to create artworks that responded to the movement and gesture of the viewer through an elaborate system of sensing floors, graphic tables, and video cameras.

At the heart of Krueger’s contribution to interactive computer art was the notion of the artist as a “composer” of intelligent, real-time computer-mediated spaces, or “responsive environments,” as he called them. Krueger “composed” environments, such as Videoplace from 1970, in which the computer responded to the gestures of the audience by interpreting, and even anticipating, their actions. Audience members could “touch” each other’s video-generated silhouettes, as well as manipulate the odd, playful assortment of graphical objects and animated organisms that appeared on the screen, imbued with the presence of artificial life.

From Wikipedia:

In the mid-1970s, Myron Krueger established an artificial reality laboratory called the Videoplace. His idea with the Videoplace was the creation of an artificial reality that surrounded the users, and responded to their movements and actions, without being encumbered by the use of goggles or gloves. The work done in the lab would form the basis of his much cited 1983 book Artificial Reality. The Videoplace (or VIDEOPLACE as Krueger would have it), was the culmination of several iterations of artificial reality systems: GLOWFLOW, METAPLAY, and PSYCHIC SPACE; each offering improvements over the previous installation until VIDEOPLACE was a full blown artificial reality lab at the University of Connecticut.

The Videoplace used projectors, video cameras, special purpose hardware, and onscreen silhouettes of the users to place the users within an interactive environment. Users in separate rooms in the lab were able to interact with one another through this technology. The movements of the users recorded on video were analyzed and transferred to the silhouette representations of the users in the Artificial Reality environment. By the users being able to visually see the results of their actions on screen, through the use of the crude but effective colored silhouettes, the users had a sense of presence while interacting with onscreen objects and other users even though there was no direct tactile feedback available. The sense of presence was enough that users pulled away when their silhouettes intersected with those of other users. (Kalawsky 1993; Rheingold 1992). The Videoplace is now on permanent display at the State Museum of Natural History located at the University of Connecticut. (Sturman and Zeltzer 1994).

From MediaNet

Two people in different rooms, each containing a projection screen and a video camera, were able to communicate through their projected images in a «shared space» on the screen. No computer was involved in the first Environment in 1975.

In order to realize his ideas of an «artificial reality» he [Krueger] started to develop his own computer system in the years up to 1984, mastering the technical problems of image recognition, image analysis and response in real time. This system meant that he could now combine live video images of visitors with graphic images, using various programs to modify them.

When «Videoplace» is shown today, visitors can interact with 25 different programs or interaction patterns. A switch from one program to another usually takes place when a new person steps in front of the camera. But the «Videoplace» team has still not achieved its ultimate aim of developing a program capable of learning independently.

Myron Krueger (Wikipedia)

Myron Krueger (born 1942 in Gary, Indiana) is an American computer artist who developed early interactive works. He is also considered to be one of the first-generation virtual reality and augmented reality researchers.

He envisioned the art of interactivity, as opposed to art that happens to be interactive. That is, the idea that exploring the space of interactions between humans and computers was interesting. The focus was on the possibilities of interaction itself, rather than on an art project, which happens to have some response to the user. Though his work was somewhat unheralded in mainstream VR thinking for many years as it moved down a path that culminated in the “goggles ‘n gloves” archetype, his legacy has experienced greater interest as more recent technological approaches (such as CAVE and Powerwall implementations) move toward the unencumbered interaction approaches championed by Krueger.

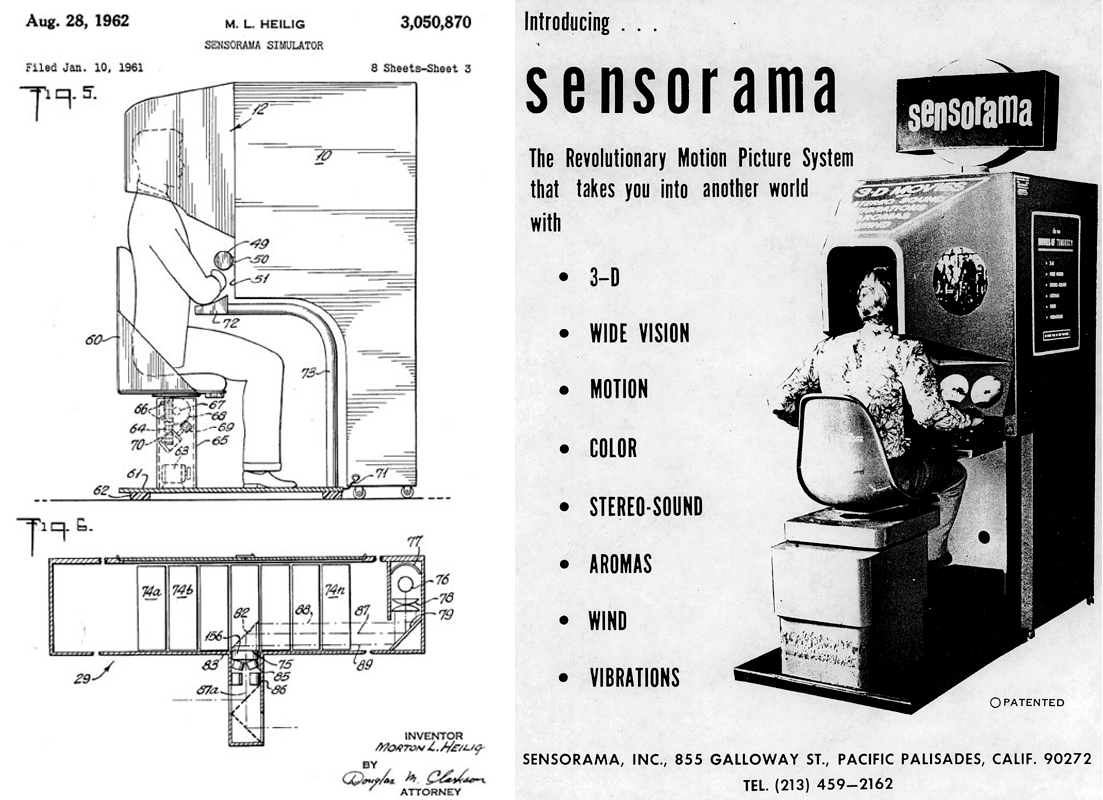

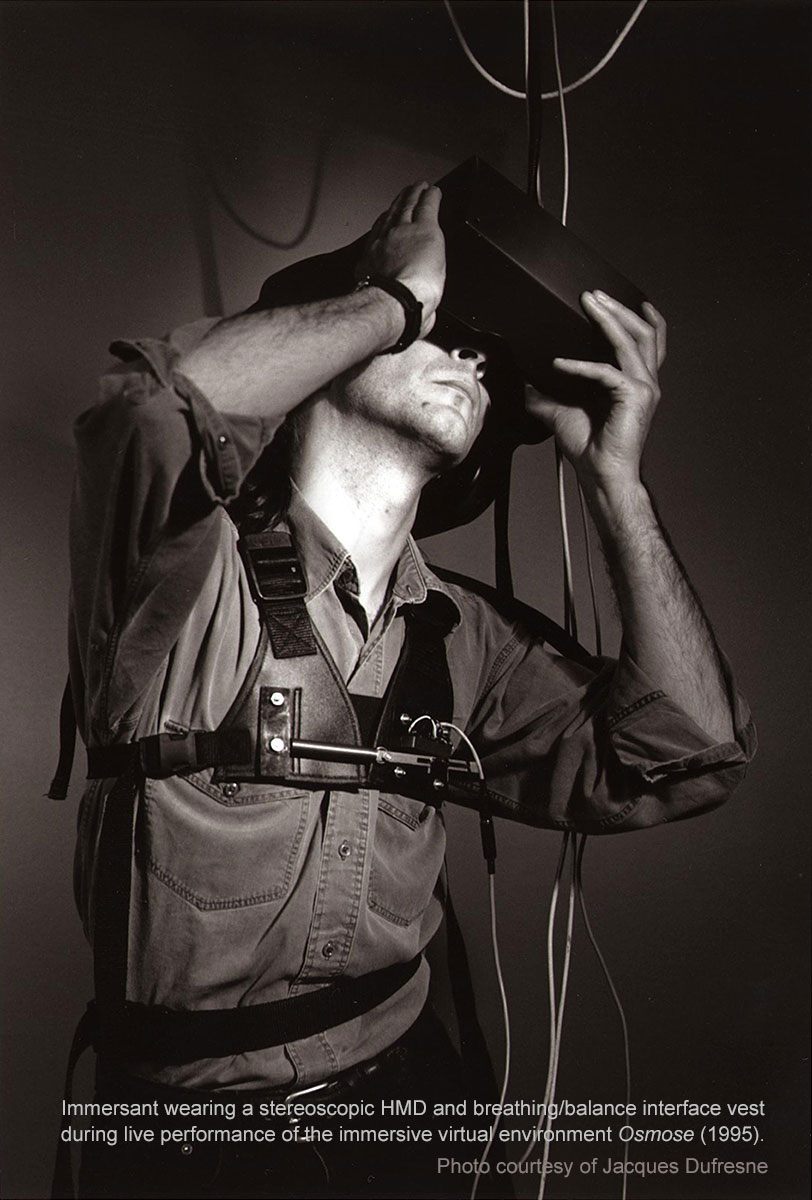

Char Davies, Osmose, 1995

“By changing space, by leaving the space of one’s usual sensibilities, one enters into communication with a space that is psychically innovating. … For we do not change place, we change our nature.” – Gaston Bachelard, The Poetics of Space, 1964

Osmose (1995) is an immersive interactive virtual-reality environment installation with 3D computer graphics and interactive 3D sound, a head-mounted display and real-time motion tracking based on breathing and balance. Osmose is a space for exploring the perceptual interplay between self and world, i.e., a place for facilitating awareness of one’s own self as consciousness embodied in enveloping space.

Introduction to “Changing Space: Virtual Reality as an Arena of Embodied Being” (1997) – Multimedia: From Wagner to Virtual Reality

Canadian artist Char Davies established herself as a painter and filmmaker before achieving international recognition for pioneering artworks that employ the technologies of virtual reality. Her transition to digital media began in the mid-1980s, when she first explored how three-dimensional computer imaging techniques could give depth to the traditional picture plane. In 1987 she became a founding director of Softimage, a 3-D software company that created special effects for Hollywood films, including the industry landmark Jurassic Park. While leading the company’s visual research team, Davies adopted the Softimage imaging program for her own artistic purposes. These efforts culminated in the immersive interactive multimedia work Osmose, which premiered at the Musée d’art contemporain de Montréal in 1995 and has since been shown in museums around the world.

In her paintings, Davies had already expressed an interest in deep-sea diving and in the specific ways that people encounter nature. For Osmose, Davies and her team of engineers devised an innovative interface that evokes deep-sea diving by allowing the user to “float” through a series of virtual worlds. This interface incorporates the head-mounted display, as developed by Scott Fisher at NASA. To this ensemble Davies’s team added sensors that enable the user to navigate through breath and balance. This dramatically expanded the way in which the participant (or “immersant,” to use Davies’s term) interacts with and inhabits a virtual environment. With Osmose, she introduced the functioning of the body as a source for gestural commands in human-computer interaction. This enabled the immersant to experience a computer-generated space in an immediate, visceral way, particularly one that evokes otherworldly scenes of nature or compelling abstract settings. As Davies says, her intent is to “reaffirm the role of the subjectively experienced, ‘felt’ body in cyberspace.”

Osmose makes the physical self—rather than the conscious mind—the locus for user interaction. By doing so, Davies allows us to be affected by a virtual space in the same subtle way that we are shaped by our unconscious apprehension of our actual, physical environment. As she quotes from Gaston Bachelard’s The Poetics of Space, “By changing space, by leaving the space of one’s usual sensibilities … we change our nature.” Davies’s achievement was to incorporate the intimate, emotional territory of the body into the encounter with virtual worlds. Through this work she discovered, as Bachelard suggests, that a shift in environment can trigger powerful emotional and psychological responses.

From the artist’s Website

Immersion in Osmose begins with the donning of the head-mounted display and motion-tracking vest. The first virtual space encountered is a three-dimensional Cartesian Grid which functions as an orientation space. With the immersant’s first breaths, the grid gives way to a clearing in a forest. There are a dozen world-spaces in Osmose, most based on metaphorical aspects of nature. These include Clearing, Forest, Tree, Leaf, Cloud, Pond, Subterranean Earth, and Abyss. There is also a substratum, Code, which contains much of the actual software used to create the work, and a superstratum, Text, a space consisting of quotes from the artist and excerpts of relevant texts on technology, the body and nature. Code and Text function as conceptual parentheses around the worlds within.

Through use of their own breath and balance, immersants are able to journey anywhere within these worlds as well as hover in the ambiguous transition areas in between. After fifteen minutes of immersion, the LifeWorld appears and slowly but irretrievably recedes, bringing the session to an end.

In Osmose, Char Davies challenges conventional approaches to virtual reality. In contrast to the hard-edged realism of most 3D-computer graphics, the visual aesthetic of Osmose is semi-representational/semi-abstract and translucent, consisting of semi-transparent textures and flowing particles. Figure/ground relationships are spatially ambiguous, and transitions between worlds are subtle and slow. This mode of representation serves to ‘evoke’ rather than illustrate and is derived from Davies’ previous work as a painter. The sounds within Osmose are spatially multi-dimensional and have been designed to respond to changes in the immersant’s location, direction and speed: the source of their complexity is a sampling of a male and female voice.