Project Soli: Controlling devices using hand gestures

Project Soli is a gesture-sensing technology for human-computer interaction developed by Google ATAP (Advanced Technology and Projects group). It uses miniature radar sensors to track the gestures made by human hands and fingers at high speed and with high accuracy. This allows a rich variety of interactions. For example, sliding the thumb could indicate scrolling, and tapping the thumb could indicate selecting an object. Such interactions could be relevant in areas such as computer gaming and the control of household appliances. Soli has the potential to move users away from physical controllers and let them interact with their technological environment using only gestures of their hands and fingers.
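The gesture examples above can be pictured as a simple lookup from a recognized gesture to an action. The sketch below is only an illustration of that idea: the names `Gesture`, `Action`, `GESTURE_TO_ACTION`, and `action_for` are hypothetical and are not part of any Soli API, and the radar-based recognition step itself is assumed to have already happened.

```python
from enum import Enum


class Gesture(Enum):
    """Hypothetical gesture labels, assumed to come from a recognizer."""
    THUMB_SLIDE = "thumb_slide"
    THUMB_TAP = "thumb_tap"
    UNKNOWN = "unknown"


class Action(Enum):
    """Hypothetical actions an application might respond with."""
    SCROLL = "scroll"
    SELECT = "select"


# Mapping taken from the examples in the text:
# sliding the thumb scrolls, tapping the thumb selects.
GESTURE_TO_ACTION = {
    Gesture.THUMB_SLIDE: Action.SCROLL,
    Gesture.THUMB_TAP: Action.SELECT,
}


def action_for(gesture):
    """Return the action mapped to a gesture, or None if it is unmapped."""
    return GESTURE_TO_ACTION.get(gesture)
```

Keeping the mapping in a plain dictionary means an application could, in principle, reassign gestures to different actions without changing any other code.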

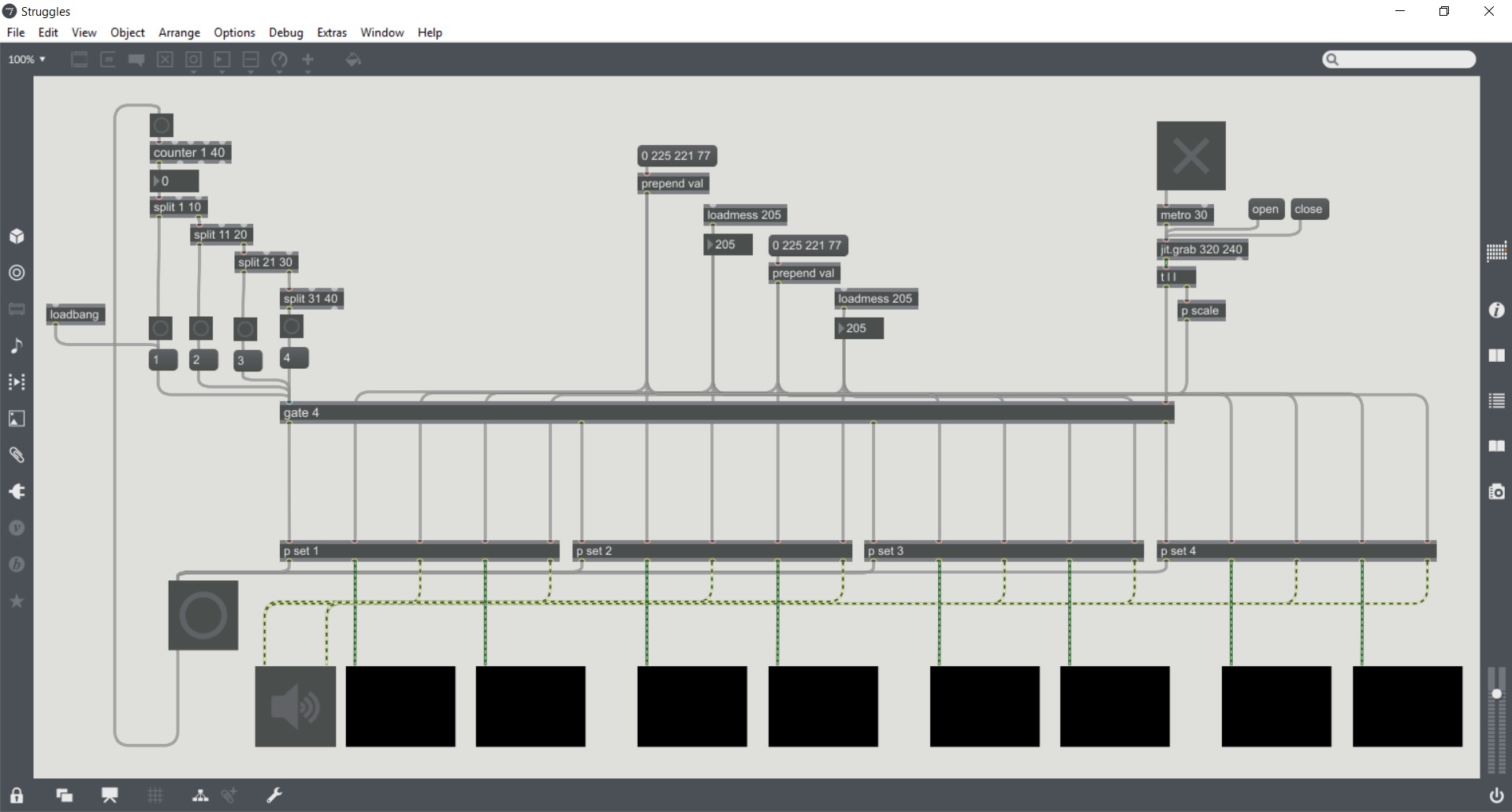

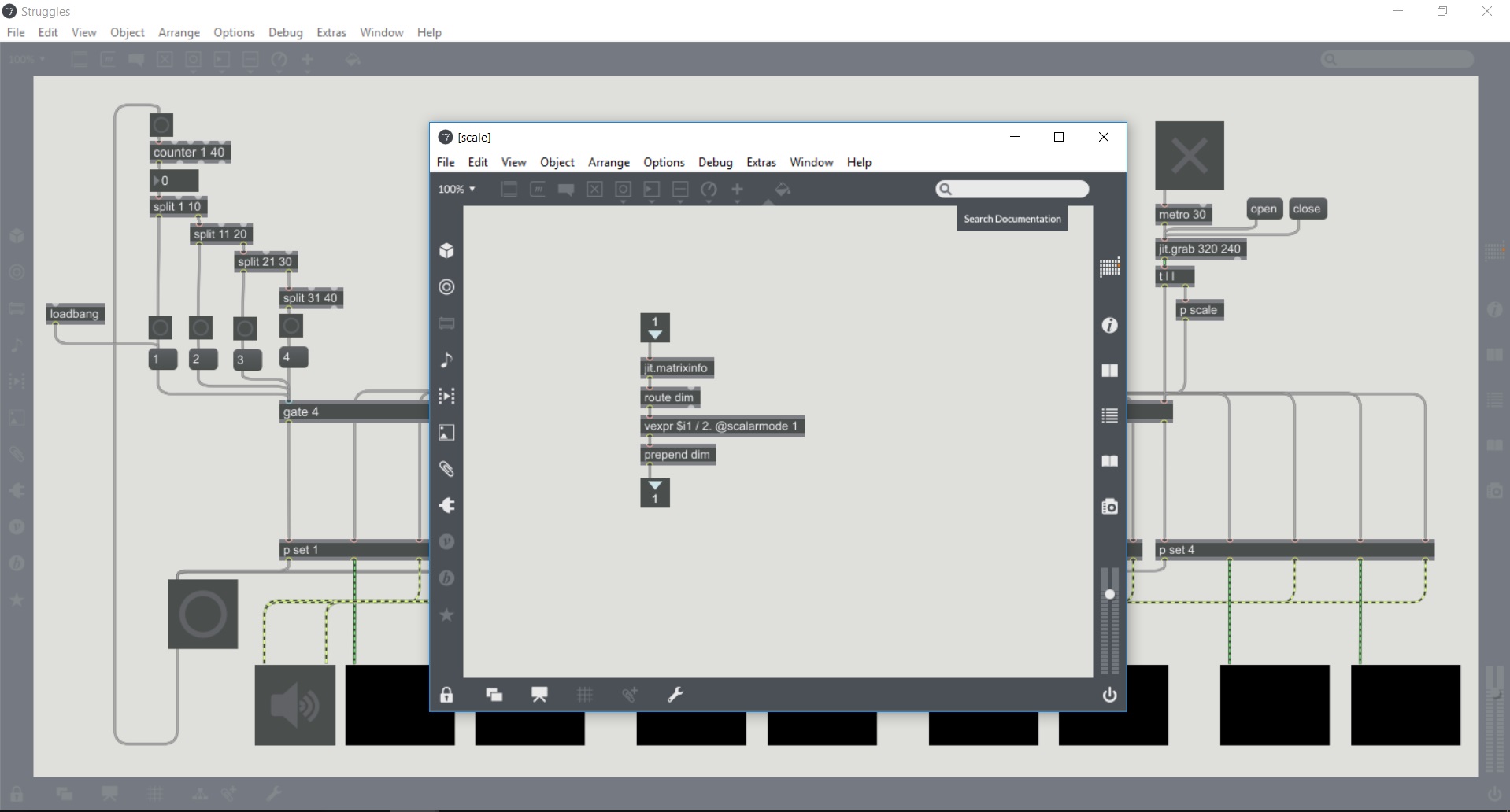

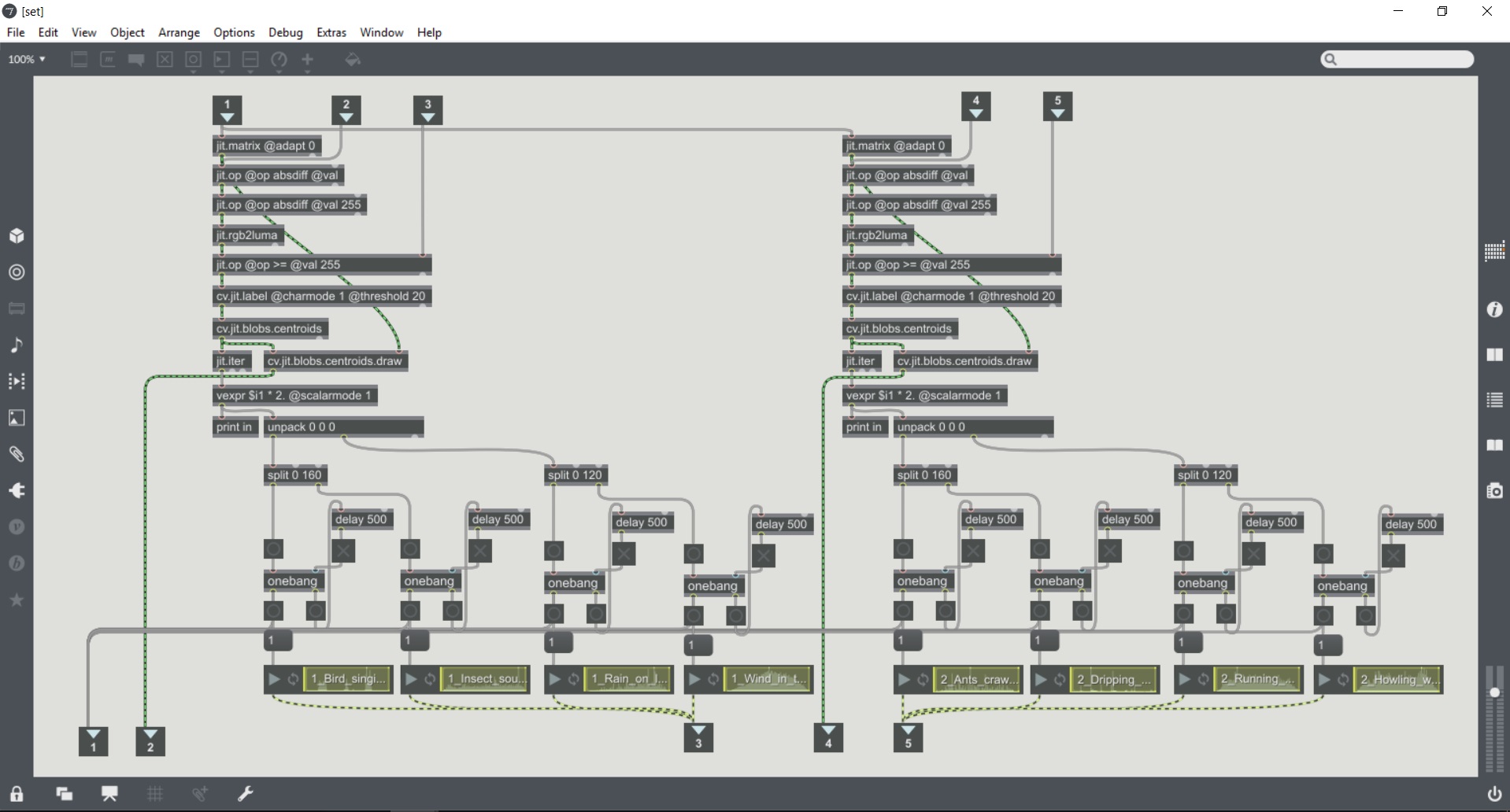

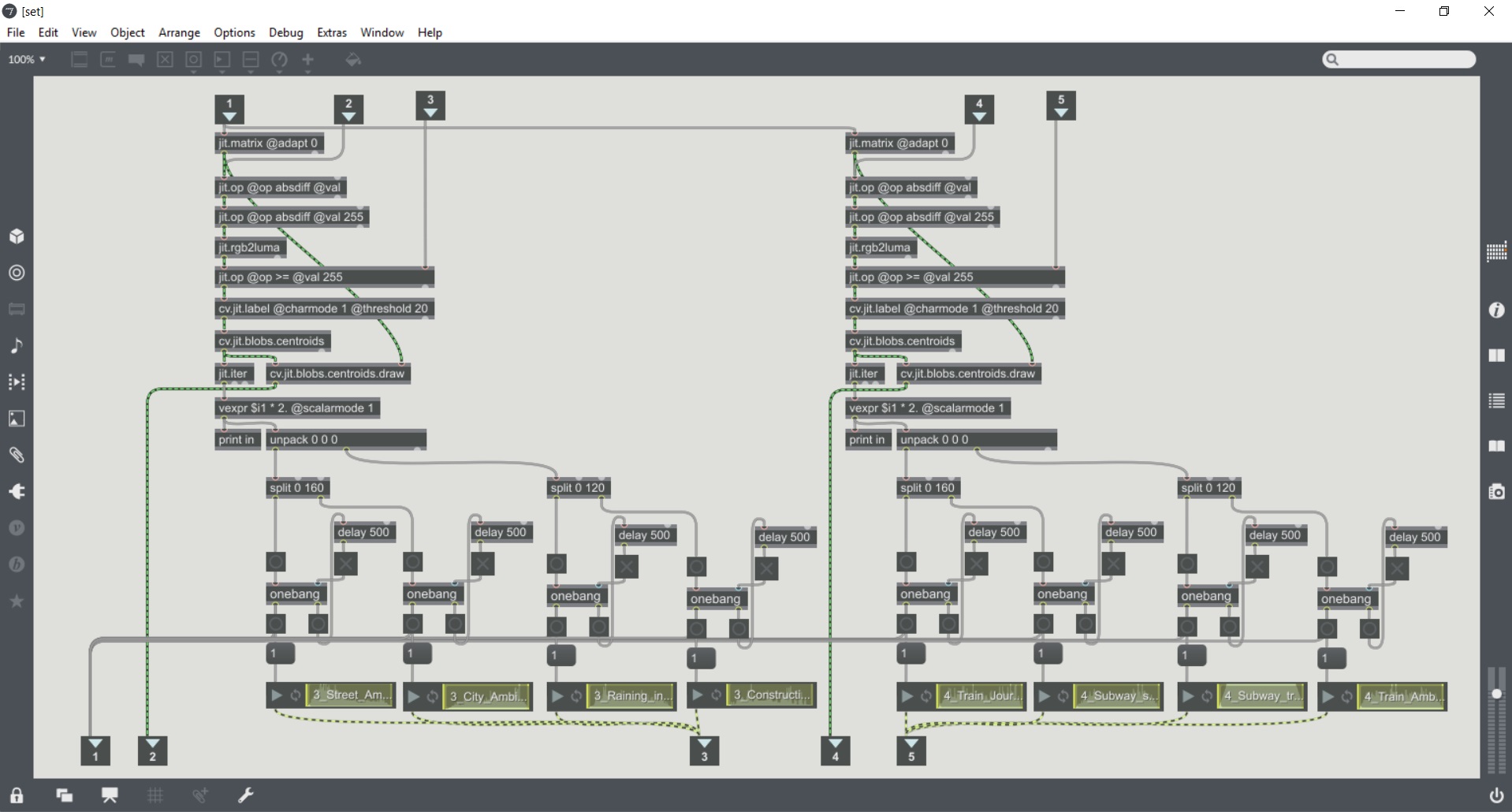

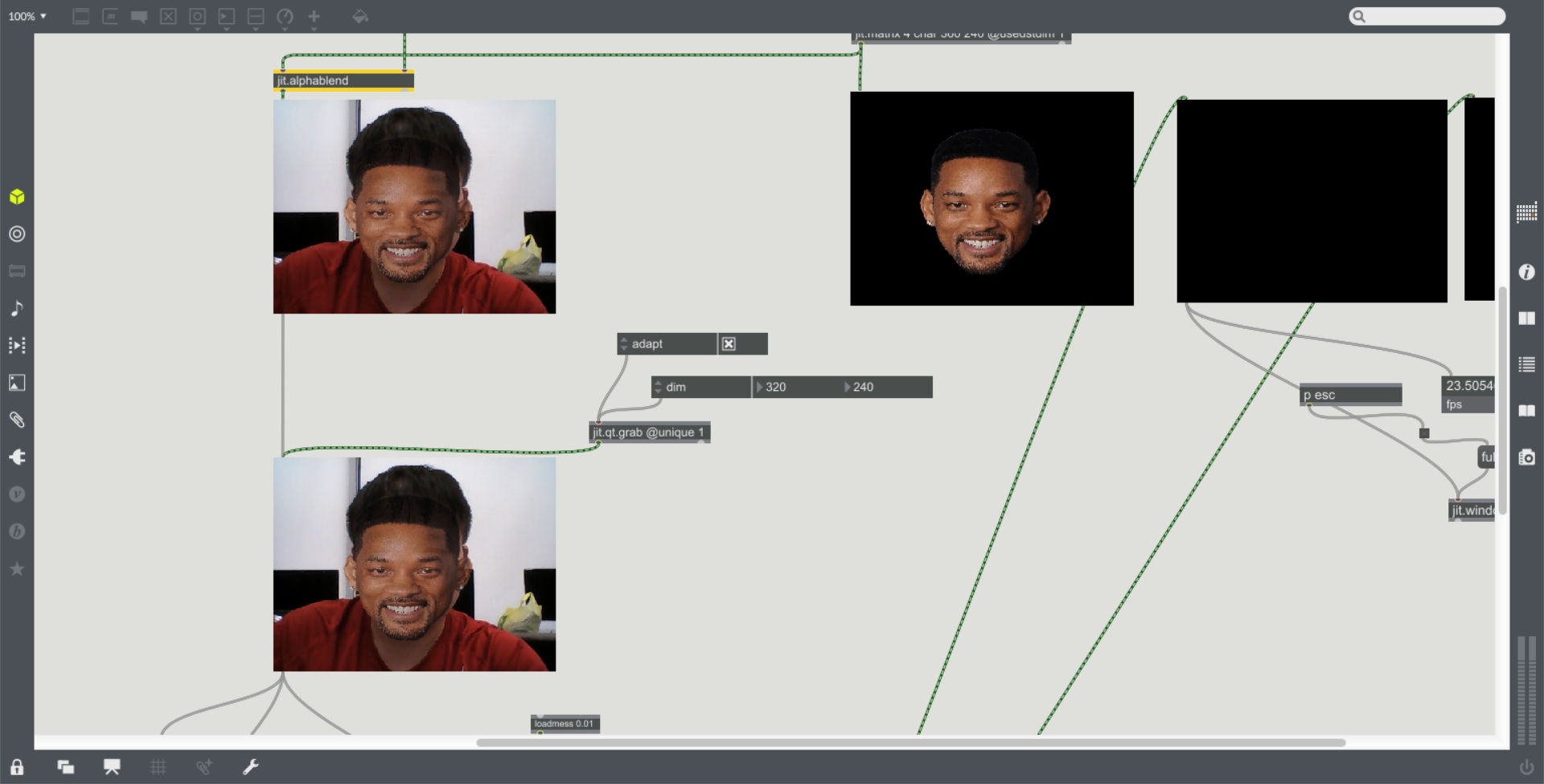

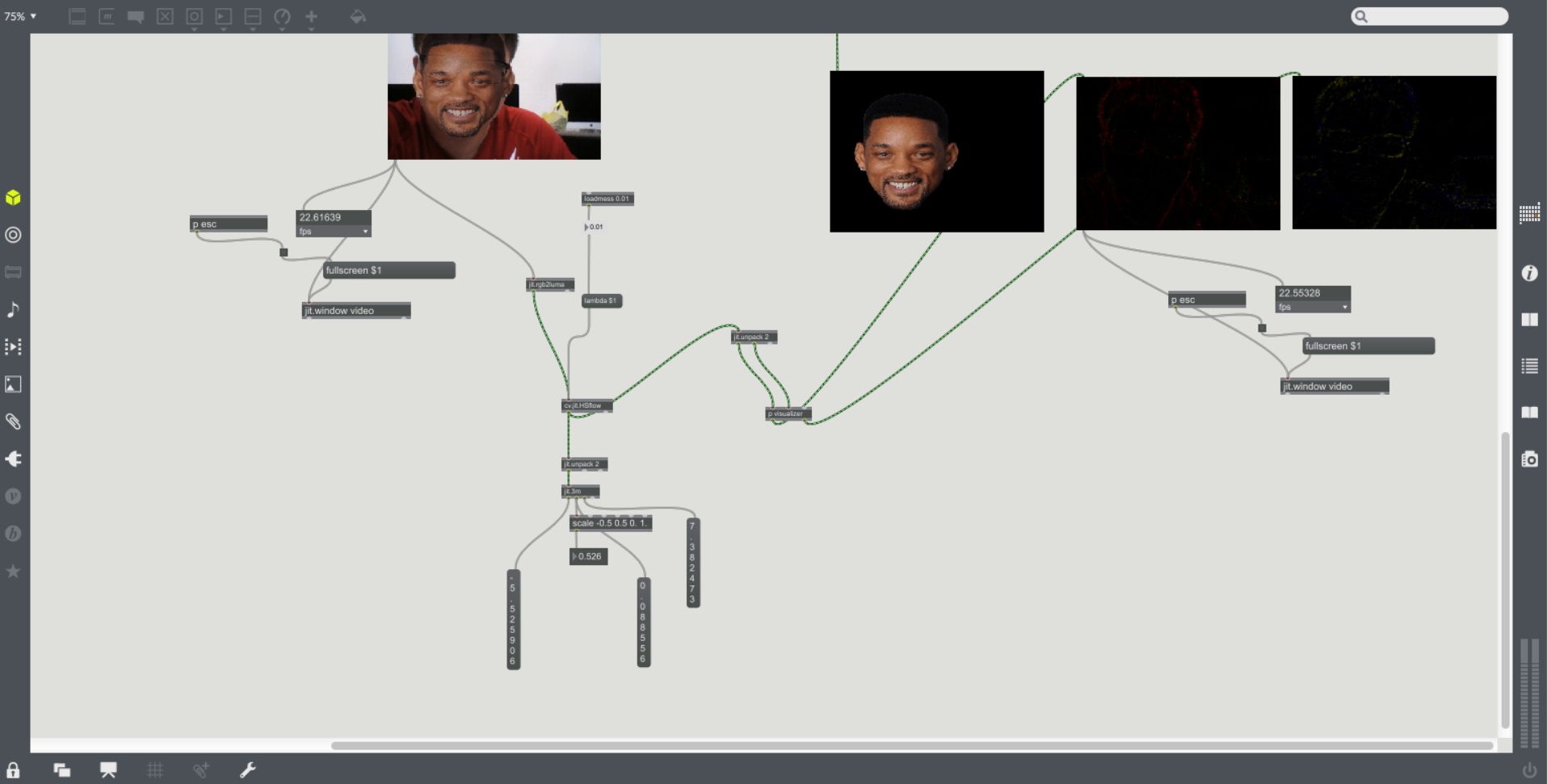

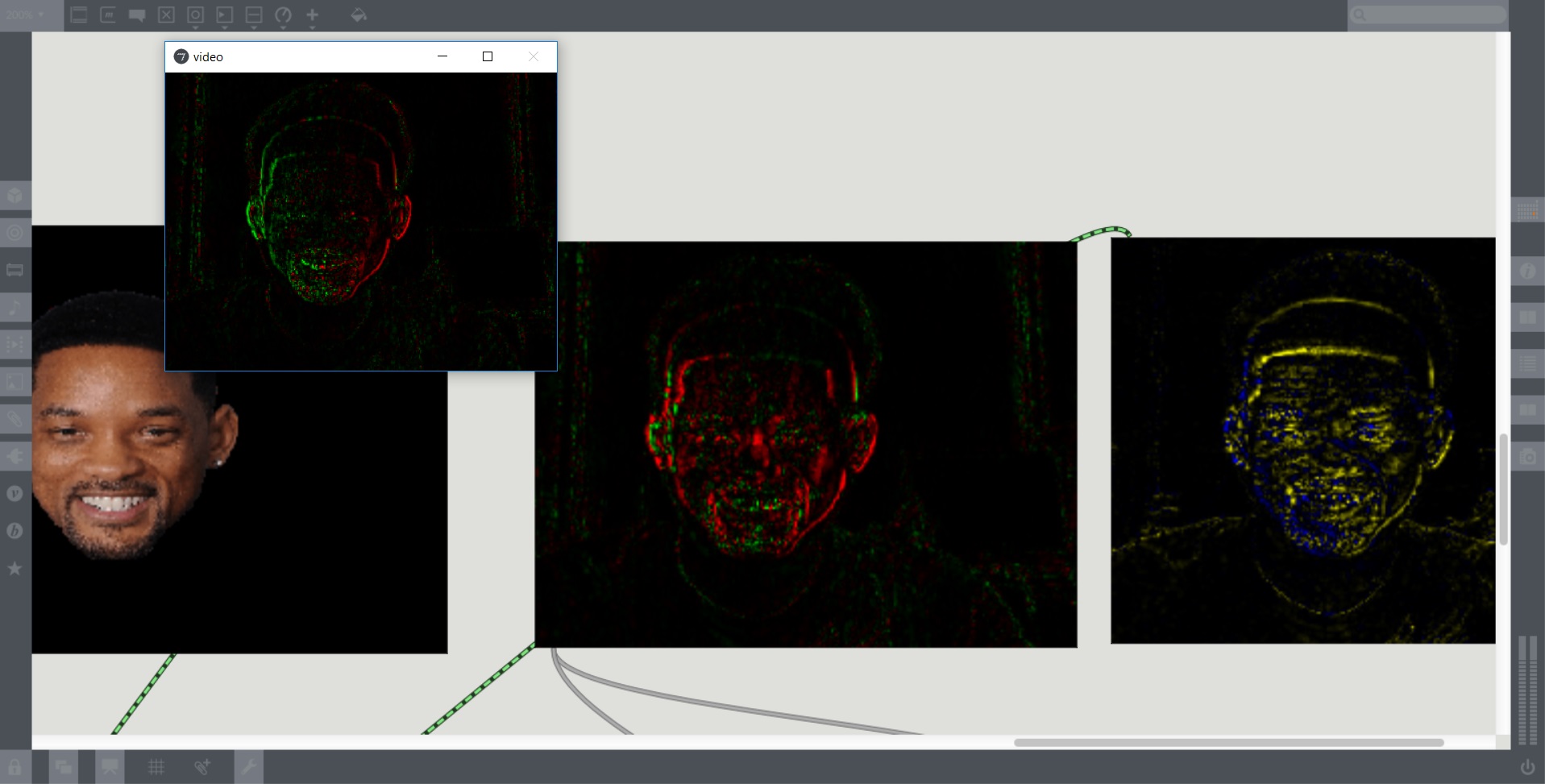

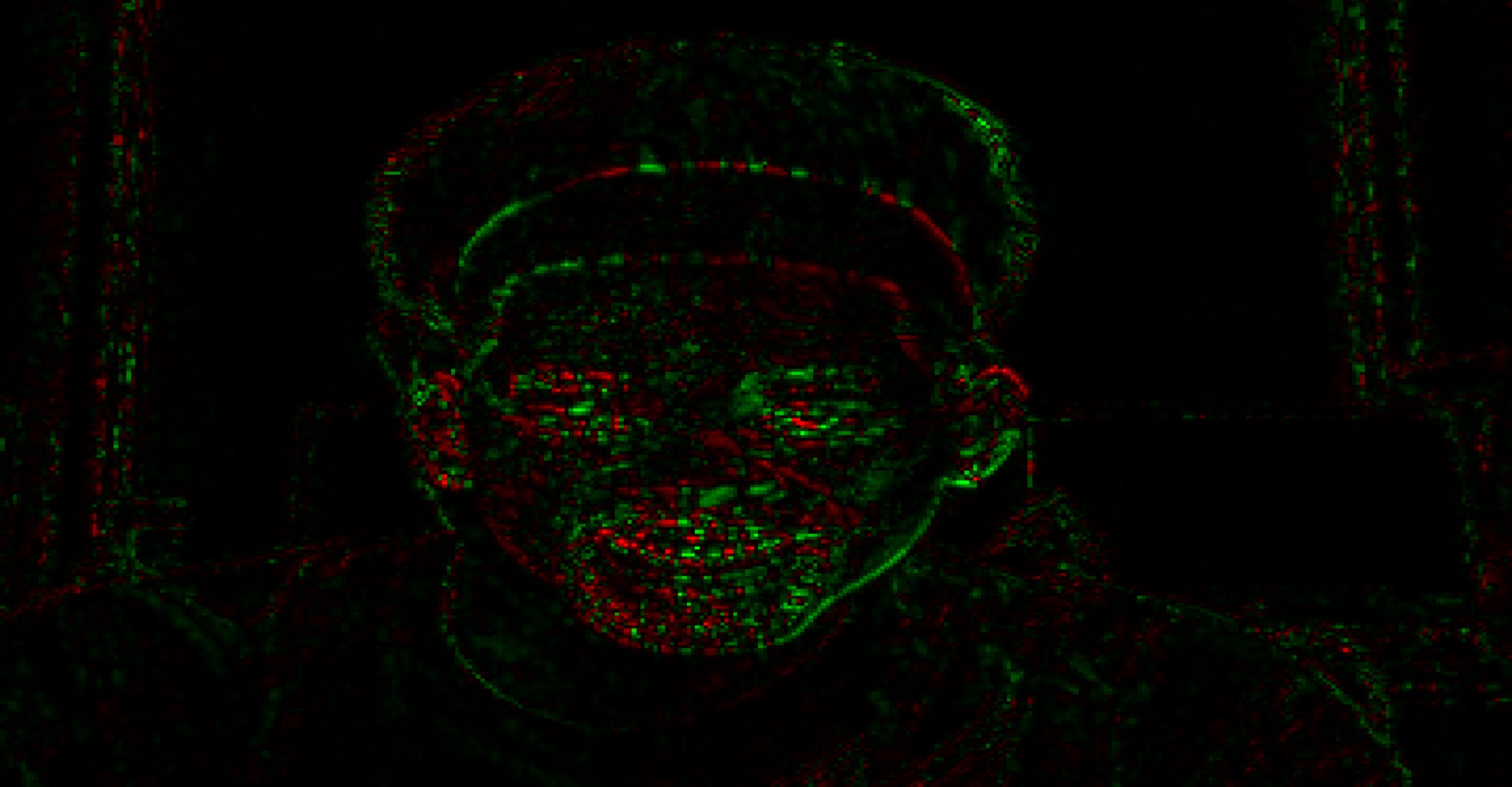

Product Visualisation

Pros and Cons of the Device

Pros

1. This technology lets people move away from controllers and other physical gadgets, which opens up many possibilities. It could have a huge impact on areas such as virtual reality (VR) and augmented reality (AR), where users would have a more immersive experience because they no longer need to hold a physical input device.

2. The sensor itself is tiny (see the photos for reference). This makes it a good candidate for integration into wearable and mobile devices such as smartwatches and smartphones. Given its small size, it could also be embedded in non-wearable devices or even everyday household objects.

Cons

1. The gestures made by the hands and fingers of different individuals naturally vary. It will be a challenge for Soli to determine the intention behind the same gesture when it is performed differently by different people. It should also take into consideration users with hand and/or finger disabilities.

2. Interaction through physical mediums such as controllers has been around for a long time. Adopting Soli would mean removing the physical element from our interaction with the technological environment. As this is still a new and developing technology, its effect on the user's experience of interaction is unknown and will only become clear in the long run.

Suggestion for alternate use and/or modification

Though Soli is still at an experimental stage of its development, its small size and flexibility offer plenty of possibilities. I believe that, as time passes and technology progresses, its favorable interactive characteristics could see it integrated into our technological environment. With ATAP planning to offer a development kit to developers, it will be up to the collective effort of ATAP and those developers to turn the idea into a finished product and bring it into our homes.