Project Description

a liminal space observes the way we unconsciously pursue spaces that reconfirm our own biases: echo chambers. I identified interstices as liminal too, as in-between spaces at a threshold. I took the liberty of interpreting this further and defined these spaces as echo chambers, metaphorical places where truths are rehashed and confirmation biases are asserted.

The project uses interactive sound and visual elements. Immersion was important because I wanted to create a literal echo chamber. This would allow the participant to realize how one’s voice, as a relay of truths and communication, can be manipulated ever so easily by re-pitching and layering. The result is an illusion of polyphony: individuals believe they are hearing a complex set of voices, but it is derived from only one voice.

Modules are laid out across the room, and participants are encouraged to experiment with them. Each module detects the amplitude of a sound the participant generates, and that amplitude corresponds to a change in pitch. The pitch-shifting concept becomes relevant as a participant traverses the space and hears a looped recording of speech that is constantly shifting in pitch.

Concept

Using the reverberations and delays that exist in a literal echo chamber, a liminal space surveys the metaphorical concept of an echo chamber by recreating the sonic experience of one.

A liminal space is a gap in which we as human beings behave: an interstice of unconscious interaction.

Participants are encouraged to explore the space and interrogate how their interactions inflect their looped speech. Movement, in this respect, refers directly to the act of seeking information: the pursuit of reconfirming your biases.

Relation to Interstices as the Thematic Concept

Interstices, in this sense, explores the psychological gap in which we as human beings behave and consequently form social structures. The concept of an echo chamber narrows down to the notion of a subconscious interaction: confirmation bias. Is it, therefore, normal to find justifications for our own means and our own ends?

By understanding how our own unconscious has ultimately led to the creation of such chambers, we realize that these spaces are, somehow, interstices too. They are symbolic of the gaps in our imperfect human nature, and they are also spaces that are not literal. By this definition, echo chambers are interstitial spaces made subconsciously, even if the truth within them is only a reconstructed image of the occupants’ own truth. Interstices, in this respect, draws back to the meta-understanding that an echo chamber is, in a way, a voluntary yet subconscious act of cohabiting your own gaps. An interstitial space thus symbolizes the gap between truth and falsehood.

Project Inspiration

Somewhat unorthodoxly, I am very much inspired by sound and music. For this project, I found the music of electronic musician Caterina Barbieri especially inspiring.

Her music focuses on minimalism and the repetition of patterns, which one can hear across the one-hour performance. I found it fascinating because these loops are simply musical bars repeated to create patterns. She reinforces the idea of a systematic, pattern-based approach and explores within the limitations she has set for herself.

“the harmonic oscillator is a monophonic oscillator… but it generates the first eight partials….tries to create the illusion of polyphony”

In this video, she discusses the use of a monophonic oscillator: an oscillator that produces a single pitch at a time. The illusion of polyphony that we hear in her work comes from experimenting with the concept of fundamental frequencies.

The fundamental frequency is the lowest frequency in a periodic waveform. Such a waveform can be described as a sum of sinusoids, known as partials. When each partial sits at a positive integer multiple of the fundamental (n × f, where f is the fundamental and n = 1, 2, 3, …), the partials are called harmonic. A series of frequencies such as 100 Hz, 200 Hz, 300 Hz, 400 Hz, … is a series of harmonic partials, with 100 Hz being the fundamental.
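The harmonic series described here can be sketched in a few lines of Python. This is only an illustration of the arithmetic; the function name `harmonic_partials` is hypothetical and is not part of the project’s patch.

```python
def harmonic_partials(fundamental_hz, count):
    """Return the first `count` harmonic partials of a fundamental frequency.

    Partial n sits at n times the fundamental, so partial 1 is the
    fundamental itself.
    """
    if fundamental_hz <= 0:
        raise ValueError("fundamental_hz must be positive")
    if count < 0:
        raise ValueError("count must not be negative")
    return [fundamental_hz * n for n in range(1, count + 1)]


print(harmonic_partials(100, 4))  # [100, 200, 300, 400]
```

Asking for eight partials instead of four gives the same number of partials that the quoted harmonic oscillator is said to generate.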

Through this concept of varying pitch, I wanted to explore how I could create a polyphonic experience from a human voice. Our voice comprises many sinusoids and frequencies, yet it is predominantly monophonic and single-pitched. That became a point of departure for exploring how voices can be distorted and, in turn, how truths can be distorted. I wanted to bring this concept into an echo chamber: a space that exists literally as an echo chamber and whose truths are constantly distorted, though the distortion is fundamentally an illusion. The sound is a single note, a single voice.

From my initial research, it was clear to me that the project required the following:

- An audio-reactive interaction

- An audio-reactive projection

- Elements of interactivity through movement

- An additional layer of interactivity through inputting speech

Building on Caterina Barbieri’s musical output, I also wanted to interrogate the musical side of things and create a musical element out of the participant’s own voice. I felt that this could make the piece more interesting and highly immersive.

Project Developments

Software Side

I began this project by creating the MAX MSP patch.

Audio flows top to bottom and left to right. It goes from the pink section to the gizmo~ object and down into the brown section.

The patch includes a buffer that records the audio. The recording is then fed into the gizmo~ object, where the magic happens. The gizmo~ object modifies the recorded speech so that it is output as multiple voices.

To control the speech, I used Arduino modules as instruments, or mics, that pick up audio and then drive the modulation in the Gizmo subpatcher.

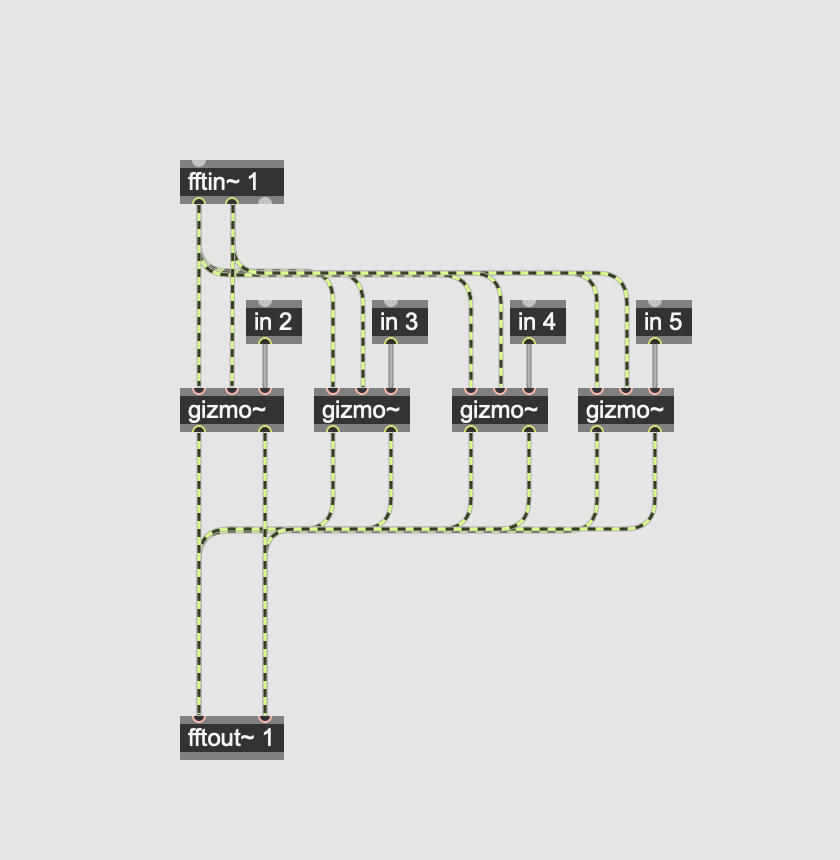

This is the Gizmo subpatcher. Each gizmo~ object corresponds to one voice; there are four here, and therefore four voices. The “in” objects feed in numbers from the PITCH subpatcher (below), ranging from 0.1 upwards with no upper limit. A value of 1 means no pitch modulation, which is why I use a four-track system: In 2 carries the unmodulated voice, while In 3 to In 5 carry a range of pitch modulation, from lower to higher pitches. Each Arduino transmission module controls one In. Pitch shifting is done via the fast Fourier transform (FFT).
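To make the ratio values concrete: a pitch ratio multiplies frequency, so 1 leaves a tone unchanged, 0.5 lowers it by an octave, and 2 raises it by an octave. The sketch below applies four ratios to one source pitch, loosely mirroring the four voices. The ratio values and the function names are assumptions chosen for illustration, and the code only does the arithmetic; it does not perform FFT pitch shifting.

```python
# Hypothetical ratios for four voices: one unmodulated, three shifted.
VOICE_RATIOS = [1.0, 0.5, 1.5, 2.0]


def shifted_frequency(frequency_hz, ratio):
    """Return the frequency that results from applying a pitch ratio."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return frequency_hz * ratio


def voice_frequencies(frequency_hz, ratios=VOICE_RATIOS):
    """Return one shifted frequency per voice for a single source pitch."""
    return [shifted_frequency(frequency_hz, ratio) for ratio in ratios]


print(voice_frequencies(200))  # [200.0, 100.0, 300.0, 400.0]
```

Every output here is derived from the same 200 Hz input, which is the sense in which the layered result is still a single voice.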

This subpatcher, called PITCH, is the control center for the Arduino data values. Data flows through here and then into the Gizmo subpatcher (image above). The scale objects are what I use to control the pitch.

This subpatcher is called arduino2max. It receives data from the Arduino app via the serial monitor. On the left, you can see the values from each Arduino transmission node. Each node has its own unique identifier, which is sent as a String.
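As a rough illustration of what a scale object does, the sketch below linearly maps a sensor reading onto a pitch ratio. The input range (0 to 1023, a typical Arduino analog reading) and the output range (0.5 to 2.0) are assumptions for the example, not the values used in the patch. The names `scale_value` and `amplitude_to_pitch_ratio` are hypothetical, and the clamping step is an addition of this sketch.

```python
def scale_value(value, in_low, in_high, out_low, out_high):
    """Linearly map `value` from the input range onto the output range."""
    if in_high == in_low:
        raise ValueError("input range must not be empty")
    position = (value - in_low) / (in_high - in_low)
    return out_low + position * (out_high - out_low)


def amplitude_to_pitch_ratio(reading):
    """Map an assumed 0-1023 amplitude reading to an assumed 0.5-2.0 ratio."""
    # Clamp first so that an out-of-range reading cannot produce an
    # extreme or negative pitch ratio.
    clamped = max(0, min(1023, reading))
    return scale_value(clamped, 0, 1023, 0.5, 2.0)


print(amplitude_to_pitch_ratio(0))     # 0.5
print(amplitude_to_pitch_ratio(1023))  # 2.0
```

With this mapping, a quiet sound pulls the voice down and a loud one pushes it up; swapping the two output bounds would invert that relationship.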

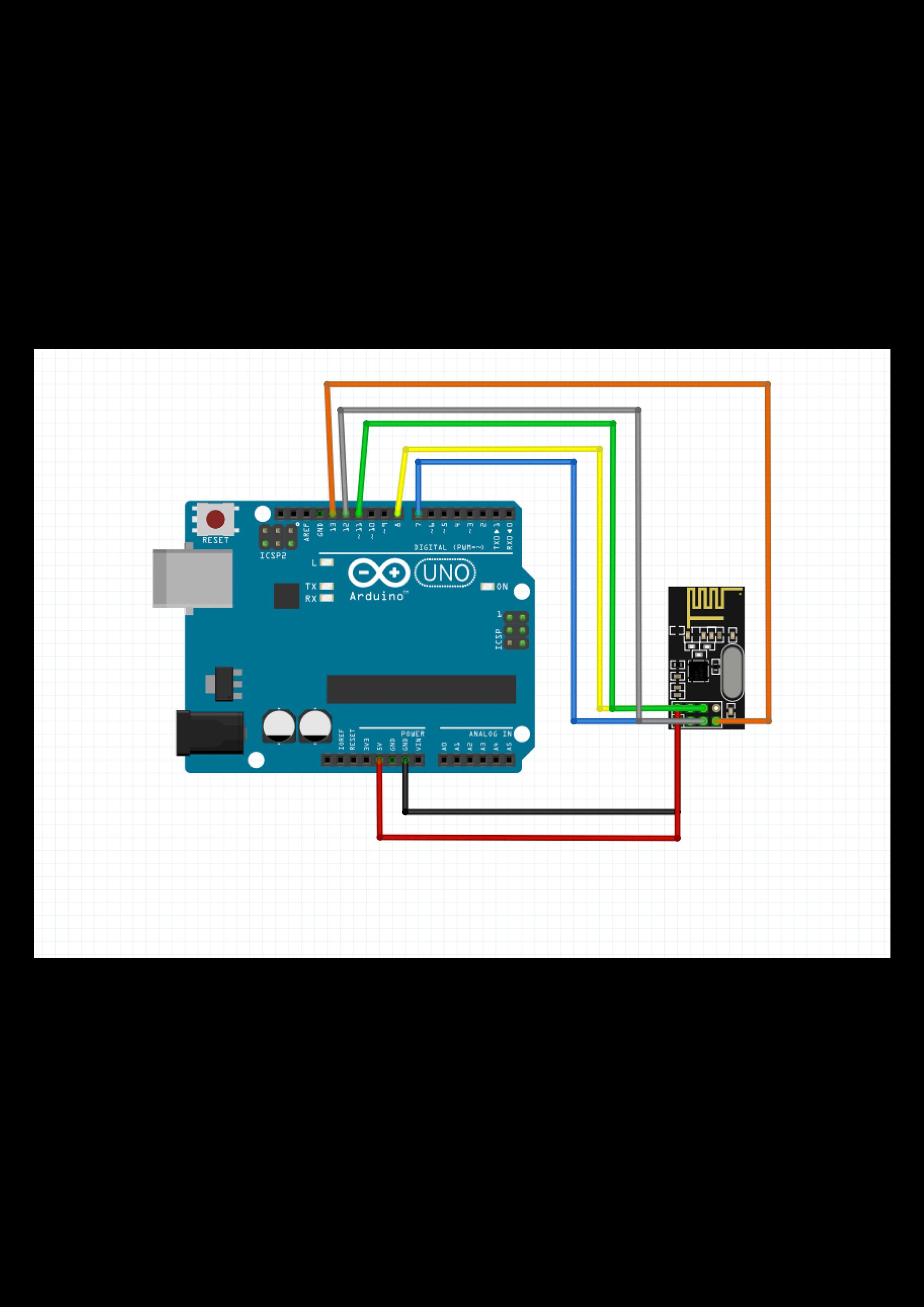

Here is the Arduino sample code. I used three Arduinos (see the schematics for more details and layouts): one is deployed as the receiving node and two are deployed as transmission nodes.

Receiving Node Sketch

Transmission Node Sketch

Each transmission node encodes its unique ID. For instance, Node1 will send a value of X15 while Node2 will transmit Z50. X and Z are the unique identifiers, which I later filter in MAX to divert the data to each specific voice. One transmission node controls the modulation of one voice in the Gizmo subpatcher.

The colored portion of the arduino2max subpatcher filters the stream of data from the serial monitor and splits it by identifier: X for transmission Node 1 and Z for transmission Node 2. Outlet 1 and outlet 2 then feed the values into the subpatcher with the scale objects (shown in the image above).
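The filtering step can be sketched outside MAX as follows. This assumes each message is a single identifier letter followed by an integer, as in the X15 and Z50 examples; the actual serial format may differ. The names `NODE_BY_PREFIX`, `route_message`, and `latest_values` are hypothetical.

```python
# Identifier letter -> transmission node number, as described in the text.
NODE_BY_PREFIX = {"X": 1, "Z": 2}


def route_message(message):
    """Split a message such as 'X15' into (node number, value).

    Returns None when the message does not match the assumed format.
    """
    message = message.strip()
    if len(message) < 2:
        return None
    prefix, digits = message[0], message[1:]
    if prefix not in NODE_BY_PREFIX or not digits.isdecimal():
        return None
    return NODE_BY_PREFIX[prefix], int(digits)


def latest_values(messages):
    """Keep only the most recent value seen for each node."""
    latest = {}
    for message in messages:
        routed = route_message(message)
        if routed is not None:
            node, value = routed
            latest[node] = value
    return latest


print(latest_values(["X15", "Z50", "X20", "bad"]))  # {1: 20, 2: 50}
```

Prefixing every reading with its node letter lets both nodes share one serial stream while still reaching separate voices.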

Audio Visual Section

To create the audio-visual projection, I first attempted something simple, derived from the concept of sound waves.

The p pitch-shift/arduino2max object, as seen above, drives the values from the Arduino transmission nodes into the dim object. This then creates audio-reactive visuals.

In the patch above, the Arduino transmission nodes drive the modulation of the projection: one controls the north-south direction while the other drives the east-west direction. This became clear once I had managed to create a sonic landscape from simple Cartesian coordinates.

I thought it would be interesting to create a 3D version of this illustration by artist Peter Saville. I used it as a main source of inspiration for my audio-visual mapping, to create a sonic landscape that goes beyond simple sound waves. It is like seeing sound waves, but in 3D.

In the words of artist Tarek Atoui,

Sound is a physical phenomena

Hardware Developments

The Receiving Node will be connected to the MacBook. Because the entire space will be pitch black, there is no reason for it to be kept in a box.

I placed transmission Node1 and Node2 in these boxes from Daiso, which I spray-painted black. Holes are cut for the microphone, and a slit is made for the USB connection to the power bank.

I also removed the LED banks. I found them too distracting, and they did not make much of a difference. Furthermore, using a projection is far more interesting and immersive. The LED matrix also draws a lot of USB power, which at times caused the Arduino to stop working.

Schematics

Below are the schematics for the Arduinos and the floor plan layout.

Receiving Node

Transmission Node Schematic

This is the layout schematic for the presentation. Equipment is listed.

Characteristics of Interaction

a liminal space requires a participant to actively interact with the Arduino modules. The viewer has nearly 100% control over modulating the pitch by generating their own sound (and thus loudness, which determines pitch modulation). The viewer or participant can also input whatever they want into the MAX patch and then experiment with it. The interaction therefore sits almost at the far left of the interactivity scale, where almost nothing is passive. Each interaction is unique because of the microscopic differences in how a person claps: each clap may sound similar, but its amplitude (loudness) will differ.

Final Thoughts

I think a lot more can be improved in this project. Although the overall feedback was overwhelmingly positive, I had envisioned this project occupying the entire studio space. A chamber is not just a rectangular, partitioned space but one that is huge and overwhelming.

Furthermore, I found working with Arduino hardware highly finicky, although the programming was quite easy. Perhaps I am a fast learner, but I spent more time fixing connections and troubleshooting Arduino circuits that had been working well the previous day.

In addition, the sound from the speakers was feeding back into the Arduino detection microphones, which was not what I had intended.

At some point, I had to lead the interaction and encourage experimentation. It was important that individuals tried different ways of generating sound, from blowing into the mic to tapping on the pedestals. Somehow or other, it was difficult to encourage people to try new things.

I think the concept is very relevant to me and speaks volumes as to how society operates. Overall, I am 80% happy with this project. Nonetheless, to me this is a work in progress, and I can foresee more varied interpretations of this piece over time. To me, the heart of this project is in the MAX MSP patcher, as it is extremely powerful.

Overall, as a photography major delving into Interactive classes, I’ve found it extremely fun. It’s very much hands-on, and it encourages me to think differently from how I would approach concepts in photography.