One of the most undeniable statements in the reading is that these principles are “general tendencies” rather than “absolute laws”. Where our project has limited functionality (and/or may not require a given feature), these principles do not always manifest fully.

The use of a computerised system in itself mandates numerical representation. This is seen most clearly in how serial printing is possible (and crucial!) to the project. Where the project relies on sound, the numerical values of amplitude (volume) and frequency (pitch) are necessary. Furthermore, the LED output relies on code that identifies colour through numerical values, e.g. rgb(255, 255, 255).

As shown in the video, almost all of the inputs and outputs must be reduced to numbers to allow for analysis and conversion.
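To illustrate what reducing inputs and outputs to numbers can look like, here is a minimal Python sketch, not the project's actual code. The sensor ranges (`AMP_MAX`, `FREQ_MIN`, `FREQ_MAX`) and the function names are hypothetical, and mapping pitch to hue with the standard library's `colorsys` module is an assumed choice among many possible mappings.

```python
import colorsys

# Hypothetical sensor ranges; the project's real hardware limits are not stated.
AMP_MAX = 1023                      # assumed 10-bit amplitude reading
FREQ_MIN, FREQ_MAX = 100.0, 2000.0  # assumed pitch band of interest, in Hz


def clamp(x, lo, hi):
    """Keep a reading inside the assumed range."""
    return max(lo, min(hi, x))


def sound_to_rgb(amplitude, frequency):
    """Map amplitude (volume) to brightness and frequency (pitch) to hue."""
    brightness = clamp(amplitude, 0, AMP_MAX) / AMP_MAX
    f = clamp(frequency, FREQ_MIN, FREQ_MAX)
    # Hue runs from 0 (red) at the lowest pitch to 2/3 (blue) at the highest.
    hue = (f - FREQ_MIN) / (FREQ_MAX - FREQ_MIN) * (2 / 3)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, brightness)
    return round(r * 255), round(g * 255), round(b * 255)


def serial_line(amplitude, frequency):
    """Format one reading as comma-separated numbers, as a serial monitor might show it."""
    r, g, b = sound_to_rgb(amplitude, frequency)
    return f"{amplitude},{frequency},{r},{g},{b}"
```

For example, `serial_line(1023, 100)` gives `"1023,100,255,0,0"`: the loudest, lowest sound becomes full-brightness red, and every stage in between is a number that can be printed and inspected.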

This is also relevant to the book’s claim that factory-based standardisation is both a reason for, and a consequence of, the tendency towards discrete representation. Evidently, the same kind of sound input will lead to the same kind of light output. This raises an intriguing question: is it truly right for our project to reduce subjective human experience to something so objective?

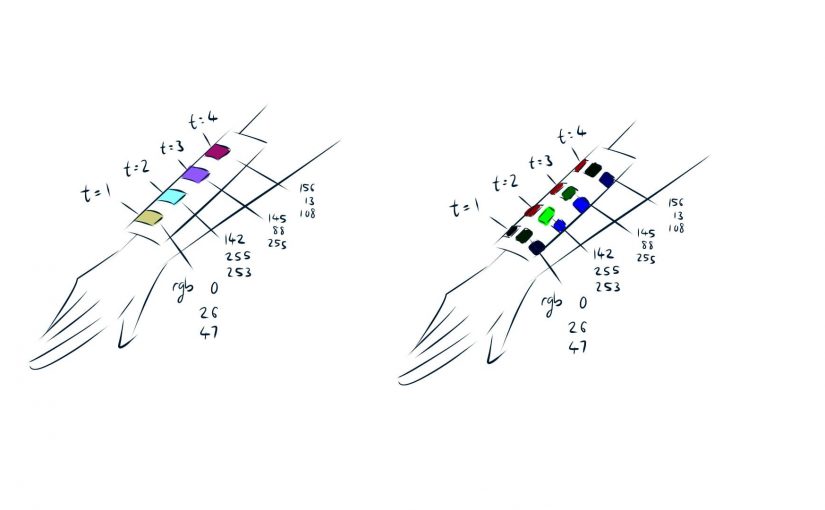

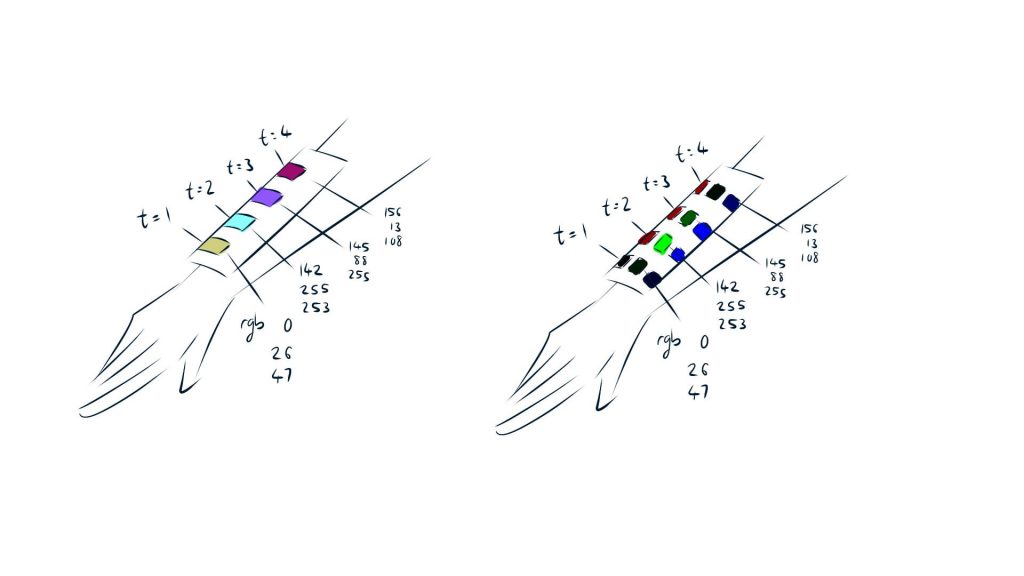

The book also addresses various ways in which modularity manifests, such as in artificial intelligence and in media access and organisation. Our system does indeed have a foundation based on modularity, with separable output components such as brightness, red, green, blue and time. However, this reading has made me wonder whether this flexibility is something we ought to highlight. Currently, brightness and RGB values are all combined into a single LED pixel. Would it be better to separate these values across multiple pixels, so that colour shifts can be seen both individually and collectively? Or would it be better to keep them all in the same pixel, and show only the collective colour, either as individual slices of time or as a collective temporal space?
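The layouts in question can be compared in a minimal Python sketch, assuming each captured moment is stored as separable components. `LightSample`, `combined_pixel`, `separated_pixels` and `collective_pixel` are hypothetical names, not part of the project, and scaling RGB by brightness is an assumed way of folding brightness into a single pixel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LightSample:
    """One captured moment, kept as separable components (hypothetical structure)."""
    brightness: float  # 0.0 to 1.0
    red: int           # 0 to 255
    green: int         # 0 to 255
    blue: int          # 0 to 255
    time: float        # seconds since capture started


def combined_pixel(s):
    """Option A: fold every component into one pixel colour."""
    return tuple(round(c * s.brightness) for c in (s.red, s.green, s.blue))


def separated_pixels(s):
    """Option B: give each component its own pixel so it can be read individually."""
    level = round(255 * s.brightness)
    return [
        (level, level, level),  # brightness shown as white intensity
        (s.red, 0, 0),
        (0, s.green, 0),
        (0, 0, s.blue),
    ]


def collective_pixel(samples):
    """Option C: average combined colours over several samples (a window of time)."""
    pixels = [combined_pixel(s) for s in samples]
    n = len(pixels)
    return tuple(round(sum(p[i] for p in pixels) / n) for i in range(3))
```

Because the components stay separate until rendering, choosing between one combined pixel, several separated pixels, or a time-averaged pixel only changes the final step.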

Automation is another principle that our project engages with by default. The only required action is providing input (creating noise, or moving around to find noises); the algorithm does everything else, from receiving the input, converting it to numbers, associating it with other numbers and registering the time of capture, to creating outputs. Unlike with the previous principle, though, I see no way in which increased automation could improve our project.

It is less clear whether the principle of variability is sufficiently engaged. Our project clearly displays it, as brightness and hue vary with amplitude and frequency. The extent of this variation, however, still seems rather limited to me. After all, those two inputs alone are insufficient to adequately represent the variances of a soundscape. Including timbre or direction-based inputs and outputs might provide more relevant variances and bring out the message more clearly.

An example of light varying according to variance in sound.

Another interesting point concerns customisation to the user. While our project does account for the active user, a large portion of it concerns passive “users” (the environment), so that variance is not tied only to the main participant. Would this be considered a negative point for variability in our case?

The last principle, transcoding, is very debatable in my opinion. Manovich suggests that there are two layers, a computer layer and a cultural layer, which influence each other in terms of systems of organisation. For example, our cultural association of red with strength might lead us to code lower pitch = more strength = more red. The cultural layer here is clear.
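The two layers in this example can be made visible in a minimal Python sketch of the “lower pitch = more strength = more red” mapping. The pitch range and function names are hypothetical, and a linear mapping is assumed; the point is that each cultural association becomes its own numeric function, which the computer then composes into one.

```python
# Hypothetical pitch range; the project's real range is not stated.
FREQ_MIN, FREQ_MAX = 100.0, 2000.0


def pitch_to_strength(frequency):
    """Cultural step: treat lower pitch as 'stronger' (0.0 weakest, 1.0 strongest)."""
    f = max(FREQ_MIN, min(FREQ_MAX, frequency))
    return (FREQ_MAX - f) / (FREQ_MAX - FREQ_MIN)


def strength_to_red(strength):
    """Cultural step: associate strength with red, as an 8-bit channel value."""
    return round(255 * strength)


def pitch_to_red(frequency):
    """Computer layer: the cultural chain collapsed into a single numeric function."""
    return strength_to_red(pitch_to_strength(frequency))
```

Here `pitch_to_red(100)` returns 255 and `pitch_to_red(2000)` returns 0. Replacing `strength_to_red` with a different association (strength as blue, say) would change the cultural layer while leaving the numeric structure untouched.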

On a personal level, however, I’m confused about the computer layer. After all, humans made computers. If we built computers in a way comprehensible to us, wouldn’t the computer layer be merely a specialised part of the cultural layer? The only defence I can really suggest for the computer layer is that it encourages a culture of efficiency, where the computer has much better syntax and organisation than we do.

External Sources

- Manovich, L. (2001). Principles of New Media. In *The Language of New Media* (pp. 27-48). Cambridge, Massachusetts: MIT Press.

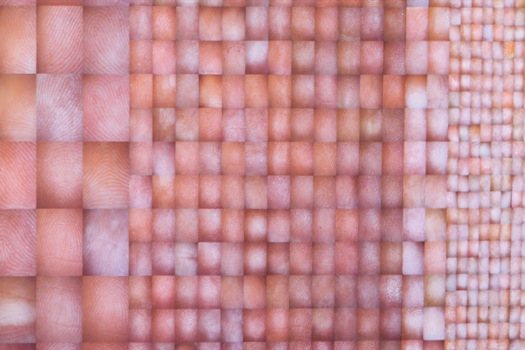

- Pulse Index (2010)

- Binary Number Conversion (Instructables)