https://docs.google.com/presentation/d/1IowUZXYGBmztpKPxHIs5FTLRFykJRSg80ReEppnHzuQ/edit?usp=sharing

19/03/2021

Into image-making and creating spaces

Creative Portfolio (IDS_Interactive Media) – Ling Pei Yi, Alina

https://www.dropbox.com/sh/dx5d7n612d5cwnr/AAD-EKT9s7OI8S1LiFKWhnP1a?dl=0

LIGHT TEXTURES CLASSIFICATION

Framework for perceptual experience and understanding of light

LIGHT

– Atmosphere

– Luminosity framework – Spatial structure, Appearance, Source

LIGHT SOURCE

INTERACTIVE SPACE

– Transform the way light is experienced in a space -> induce/ trigger a sense of touch/ haptic feedback

Using vibration/ proprioceptive muscle response:

LIGHT IN SPACE – ARCHITECTURE

Explore the difference in visual textures due to the interaction of light and materials

ARTISTS

http://www.phonomena.net/ilinx/

Doug Wheeler

SPACE AND PLACE – Yi-Fu Tuan

“What sensory organs and experiences enable human beings to have their strong feeling for space and for spatial qualities? Kinaesthesia, sight and touch”

CHAPTER 4 – Body, Personal Relations and Spatial Values

Fundamental principles of spatial organization:

1. The posture and structure of the human body

2. The relations (whether close or distant) between human beings

DIRECTION FOR FINAL FORM – Interactive Space

– Other interesting aspects to consider:

1. The physicality of space vs. the intangibility of light – Interactions

2. Movement of bodies and bodily actions – walking vs. reaching out

3. Tactile sensations/ vibration – real or artificial?

4. The dimension of time – Can spatial awareness be translated by the relative amount of time spent in a space?

> What can be an indication of time?

5. Temperature – Can it give a new sensory experience?

6. Time/ Site-specificity of light – sunlight

Over the next 2 weeks:

‘Light textures’ Classification framework

Sensory experiments/ study + results > Creating light atmospheres with different qualities

Prototype of finger tactile sensory substitution (TSS) device to sense ambient light (space)
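
The sensing step of the TSS prototype can be thought of as a simple mapping from a light reading to a vibration intensity. The sketch below only illustrates that idea in Python and is not the device firmware: the 10-bit sensor range (0 to 1023), the 8-bit motor range (0 to 255) and the function name `light_to_vibration` are all assumptions made for this example.

```python
def light_to_vibration(reading, sensor_max=1023, motor_max=255, invert=False):
    """Map an ambient-light reading to a vibration intensity.

    The sensor and motor ranges are hypothetical defaults, not measured values.
    """
    # Clamp the reading so noisy values outside the sensor range stay valid.
    clamped = max(0, min(sensor_max, reading))
    # Scale linearly from the sensor range to the motor range.
    level = round(clamped * motor_max / sensor_max)
    # Optionally let darkness, rather than brightness, give the stronger vibration.
    return motor_max - level if invert else level
```

Setting `invert=True` would make dim spaces feel "stronger" on the finger, which is one design choice worth testing in the sensory experiments.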

Deliverables for final presentation:

Dorin, Alan, Jonathan McCabe, Jon McCormack, Gordon Monro, and Mitchell Whitelaw. “A framework for understanding generative art.” Digital Creativity 23, no. 3-4 (2012): 239-259.

Aim of the Paper

In analysing and categorising generative artworks, the critical structures of traditional art do not seem to be applicable to “process based works”. The authors of the paper devised a new framework to deconstruct and classify generative systems by their components and characteristics. By breaking down the generative processes into defining components – ‘entities (initialisation, termination)’, ‘processes’, ‘environmental interaction’ and ‘sensory outcomes’ – we are able to critically characterise and compare generative artworks whose underlying generative processes, rather than their outcomes, hold points of similarity.

The paper first looked at previous approaches to classifying generative art. By highlighting the ‘process taxonomies’ of different disciplines which adopt the “perspective of processes”, the authors used a ‘reductive approach’ to direct their own framework for the field of generative art in particular. Generative perspectives and paradigms began to emerge in various seemingly unrelated disciplines, such as biology, kinetic and time-based arts, and computer science, which adopted algorithmic processes or parametric strategies to generate actions or outcomes. Previous studies explored specific criteria of emerging generative systems by “employing a hierarchy, … simultaneously facilitate high-level and low-level descriptions, thereby allowing for recognition of abstract similarities and differentiation between a variety of specific patterns” (p. 6, para. 4). In developing the critical framework for generative art, the authors took into consideration the “natural ontology” of the work, selecting a level of description appropriate to its nature. Adopting “natural language descriptions and definitions”, the framework aims to serve as a way to systematically organise and describe a range of creative works based on their generativity.

Characteristics of ‘Generative Art System’

Generative art systems can be broken down into four (seemingly) chronological components – Entities, Processes, Environmental Interaction and Sensory Outcomes. As generative artworks are not characterised by the mediums of their outcomes, the structures of comparison lie in the approach and construction of the system.

All generative systems contain independent actors, ‘Entities’, whose behaviour is mostly dependent on the mechanisms of change, ‘Processes’, designed by the artist. The behaviours of the entities, in digital or physical form, may be autonomous to an extent decided by the artist and determined by their own properties. For example, Sandbox (2009), by artist couple Erwin Driessens and Maria Verstappen, is a diorama in which a terrain of sand is continuously manipulated by a software system that controls the wind. The paper highlights how each grain of sand can be considered a primary entity in this generative system, and how the behaviour of the system as a whole depends on the physical properties of the material itself (position, velocity, mass and friction). I think the nature of the chosen entities is an important factor, especially for generative artworks that use physical materials.

The entities and the algorithms of change acting upon them also exist within a “wider environment from which they may draw information or input upon which to act” (p. 10, para. 3). The information flow between the generative processes and their operating environment can be classified as ‘Environmental Interaction’, where incoming information from external factors (human interaction or artist manipulation) can set or change the parameters during execution, leading to different sets of outcomes. These interactions can be characterised by “their frequency (how often they occur), range (the range of possible interactions or amount of information conveyed) and significance (the impact of the information acquired from the interaction of the generative system)”. The framework also classifies interactions as “higher-order” when they involve the artist or designer in the work, who can manipulate the results of the system through the intermediate generative process or by adjusting the parameters or the system itself in real time, “based on ongoing observation and evaluation of its outputs”. Higher-order interactions are made based on feedback from the generated results, which bears similarities to machine learning techniques or self-informing systems. This process results in changes to the system’s entities, interactions and outcomes and can be characterised as “filtering”. Higher-order interaction is prevalent in live coding, supported by audio generation software such as SuperCollider and Sonic Pi, where tweaking the performance/ outcome is the main creative input.

The last component of generative art systems is the ‘Sensory outcomes’, which can be evaluated based on “their relationship to perception, process and entities.” The generated outcomes could be perceived sensorially or interpreted cognitively as they are produced in different static or time-based forms (visual, sonic, musical, literary, sculptural etc). When the outcomes seem unclassifiable, they can be made sense of through a process of mapping, where the artist decides how the entities and processes of the system can be transformed into “perceptible outcomes”. “A natural mapping is one where the structure of entities, process and outcome are closely aligned.”

Case studies of generative artworks

The Great Learning, Paragraph 7 – Cornelius Cardew (1971)

Paragraph 7 is a self-organising choral work performed using a written “score” of instructions. The “agent-based, distributed model of self-organisation” produces musically varying outcomes within the same recognisable system. Although it depends on human entities and leaves room for interpretation/ error in the instructions, similarities with Reynolds’ flocking system can be observed.

Tree Drawings – Tim Knowles (2005)

The work uses natural phenomena and materials of ‘nature’: the movement of wind-blown branches creates drawings on canvas. Using the found process of natural wind as the generator of movement of the entities highlights the effect of the physical properties of the chosen materials and of the environment. “The resilience of the timber, the weight and other physical properties of the branch have significant effect on the drawings produced. Different species of tree produce visually discernible drawings.”

The element of surprise is included in the work: the system is highly autonomous, and the artist’s involvement includes the choice of location and trees as well as the duration. It brings to mind the concept of “agency” in art. Is agency still relevant in producing outcomes in generative art systems? Or is there a shift in the role of the artist when it comes to generative art?

The Framework on my Generative Artwork ‘SOUNDS OF STONE’

Visual system:

Work Details

‘Sounds of Stone’ (2020) – Generative visual and audio system

Entities

Visual: Stones, Points

Audio: Stones, Data-points, Virtual synthesizers

Initialisation/ Termination:

Initialisation and termination determined by human interaction (by placing and removing a stone within the boundary of the system)

Processes

Visual and sound states change through placement and movement of stones

Each ‘stone’ entity performs a sound, where each sound corresponds to its visual texture (Artist-defined process)

Combination of outcomes depends on the number of entities in the system

“Live” where artist or performer or audience can manipulate the outcome after listening/ observing the generated sound and visuals.

Environmental Interaction

Room acoustics

Human interaction, behaviours of the participants

Lighting

Sensory Outcomes

Real-time/ live generation of sound and visuals

Audience-defined mapping

As the work is still in progress, I cannot evaluate its sensory outcomes at this point. Based on the classic features of generative systems used to evaluate Paragraph 7 (a performative instructional piece), such as “emergent phenomena, self-organisation, attractor states and stochastic variation in their performances”, I predict that the sound compositions of ‘Sounds of Stone’ will go from being self-organised to chaotic as the participants spend more time within the system. As the work exists as a generative tool or instrumental system, I also predict that the audience will become familiar with the audio generation over time through interaction. With ‘higher order’ interactions, the audience will intuitively be able to generate ‘musical’ outcomes, converting noise into perceptible rhythms and combinations of sounds.

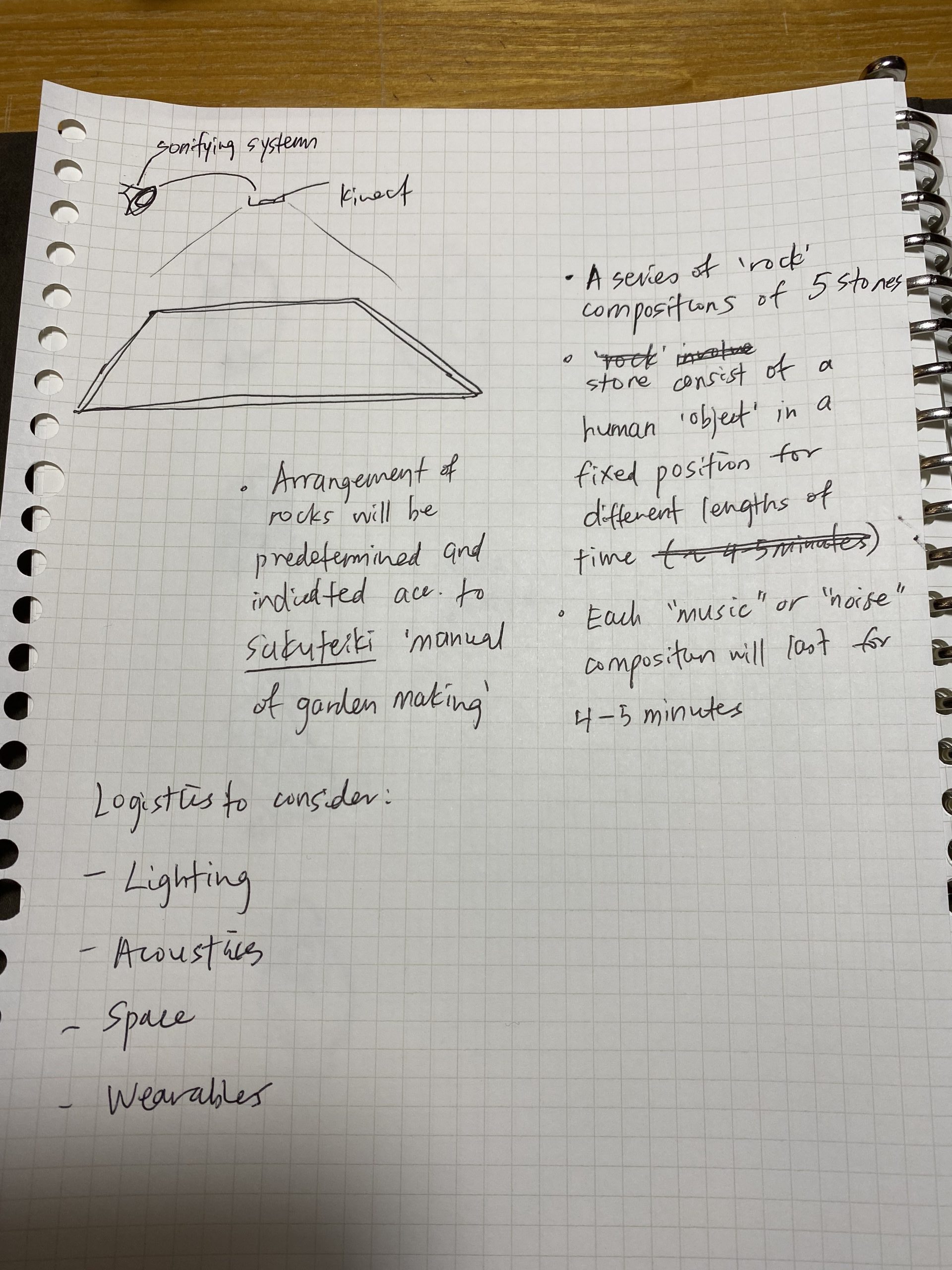

logistics

date: 10 Nov, Week 13

location: Truss room/ LT (Mounting of the Kinect/ camera system)

Week 11 – Process and Developing instructions

Week 12 – Set up and construction

Week 13 – Presentation

PROCESS

Human body position

Aim – To find out which positions the body should stay in and the duration to induce a sense of stiffness / being compressed and weighted

Instructions:

i. Use your body to imitate the form of the stone

ii. Experiment with different body positions for different time periods

– Different sitting and crouching positions

– Duration of 2, 5 and 10 minutes

2. Philosophy of zen gardens + the making

Zen Buddhism

Japanese rock gardens – “were intended to imitate the intimate essence of nature, not its actual appearance, and to serve as an aid to meditation about the true meaning of existence.”

Selection and Arrangement of rocks

The most important part is the selection and placement of rocks.

The Sakuteiki “Records of Garden Making” – manual on “setting stones” (ishi wo tateru koto). “In Japanese gardening, rocks are classified as either tall vertical, low vertical, arching, reclining, or flat. For creating “mountains”, usually igneous volcanic rocks, rugged mountain rocks with sharp edges, are used. Smooth, rounded sedimentary rocks are used for the borders of gravel “rivers” or “seashores.”

In Japanese gardens, individual rocks rarely play the starring role; the emphasis is upon the harmony of the composition.”

– Arranging rocks according to the Sakuteiki

Make sure that all the stones, right down to the front of the arrangement, are placed with their best sides showing. If a stone has an ugly-looking top you should place it so as to give prominence to its side. Even if this means it has to lean at a considerable angle, no one will notice. There should always be more horizontal than vertical stones. If there are “running away” stones there must be “chasing” stones. If there are “leaning” stones, there must be “supporting” stones.

– Sand and gravel – The act of raking the gravel into a pattern recalling waves or rippling water

Zen priests practice this raking also to help their concentration

> might omit this aspect in the project

> alternatively, I can generate “white noise” as background for the audio
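
As a rough illustration of the “white noise” idea, the sketch below generates uniformly random samples in plain Python. It is not taken from the project's audio system: the function name `white_noise`, the 44100 Hz sample rate and the amplitude values are assumptions chosen for the example.

```python
import random

def white_noise(num_samples, amplitude=0.1, seed=None):
    """Return a list of uniformly random samples in [-amplitude, amplitude]."""
    # A dedicated generator keeps the noise repeatable when a seed is given.
    rng = random.Random(seed)
    return [rng.uniform(-amplitude, amplitude) for _ in range(num_samples)]

# One second of quiet background noise at an assumed 44100 Hz sample rate.
background = white_noise(44100, amplitude=0.05, seed=1)
```

Keeping the amplitude low would let the noise sit underneath the stone sounds, much as raked gravel sits around the rocks.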

MAIN EXPLORATION: Arrangement of OBJECTS vs HUMANS

Arrangement of humans in current times

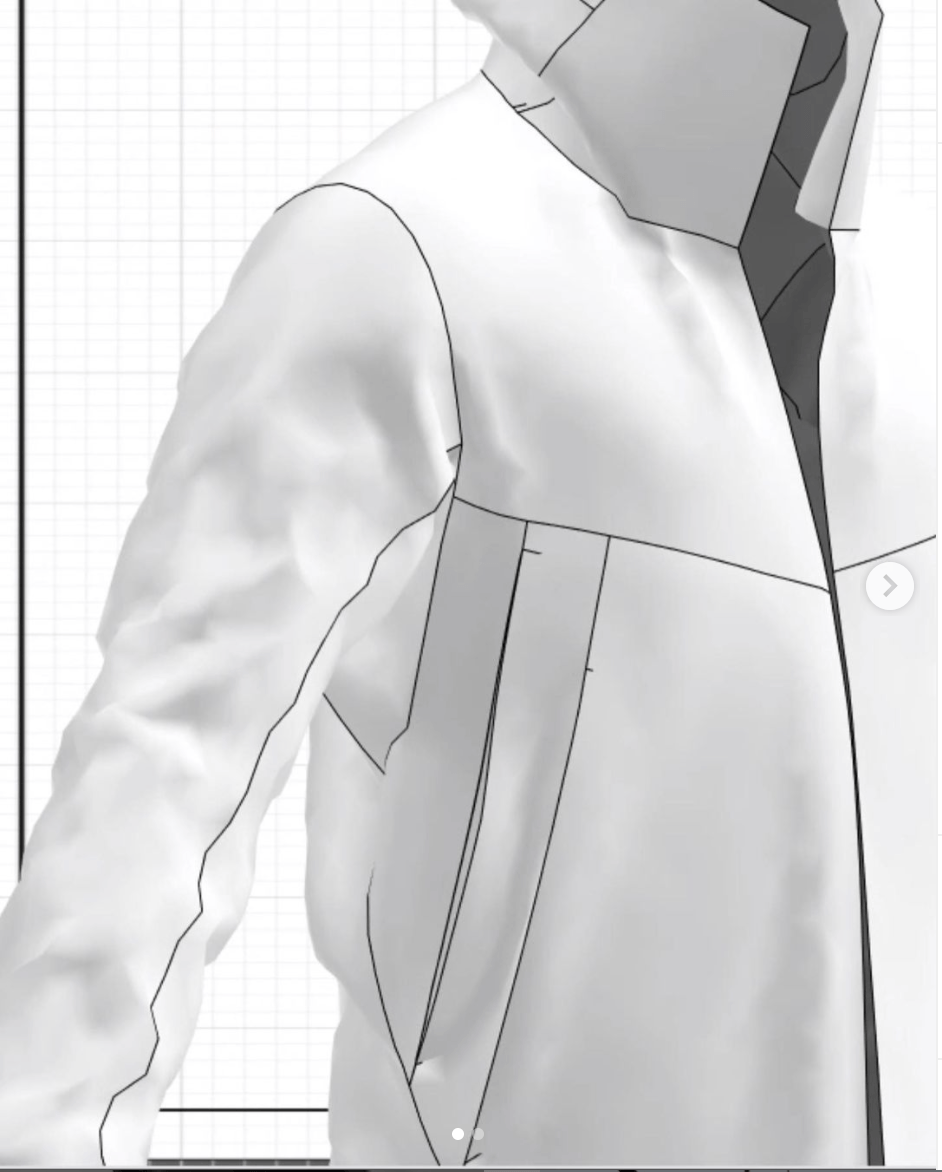

3. Textures – Wearable as embodiment/ ergonomics/ texture of form

Technical Apparel

Materials to endure nature/ harsh conditions

Cambre Jacket — Made from a durable performance denim with quick drying properties.

SET UP – FIRST DRAFT

PROGRESS

– Working on sonification system (generative art) currently

– Experiment with stone positions and wearable textures

– Aim to develop instructions for performance by the end of the week (OSS)

//

Week 10:

SENSORY EXPERIMENTS – Perceptual box

I built the perceptual box 🙂 I can now conduct my visual-tactile experiments.

Some Visual Experiments:

I experimented with two qualities of light that can be experienced visually in my perceptual box.

Some tests:

A. RHYTHM

Playing with the ‘blinking’ LED to create rhythm with light

> Varying the interval delay – Create different rhythms

B. MOTION

Using a servo motor to create a rotating wheel to vary the light source.
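
The rhythm test (A) comes down to a list of interval delays between LED toggles. The sketch below works that out in Python as a timeline of on/off events; it is not the code running on the perceptual box, and the function name `blink_schedule` and the example delays are hypothetical.

```python
def blink_schedule(intervals_ms, cycles=1):
    """Return (time_ms, state) events for an LED toggled after each interval."""
    events = []
    t = 0
    led_on = True  # the LED is assumed to start switched on at time 0
    for _ in range(cycles):
        for interval in intervals_ms:
            events.append((t, "on" if led_on else "off"))
            t += interval          # wait for the interval delay
            led_on = not led_on    # then toggle the LED
    return events

# A hypothetical "short, then long" rhythm: on 100 ms, off 100 ms, on 400 ms, off 400 ms.
pattern = blink_schedule([100, 100, 400, 400])
```

Changing only the list of delays gives a different rhythm, which makes it easy to compare several patterns in the same experiment.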

‘Sensory Modality Transition’ Experiments – Visual-Tactile (Week 12)

Aim: Associate tactile qualities with Visual Qualities of light

CONSONANCE and DISSONANCE

Light Textures Classification (Week 11-12)

Referencing ‘Sound Textures Classification’ http://alumni.media.mit.edu/~nsa/SoundTextures/

Human Perception of Sound Textures

– Perception experiments which show that people can compare sound textures, although they lack the vocabulary to formally express their perception

Texture or not?

Perceived Characteristics and Parameters:

Come up with possible characteristics and qualifiers of sound textures, and properties that determine if a sound can be called a texture.

CAN I DO THE SAME FOR HUMAN PERCEPTION FOR LIGHT TEXTURES?

> Classify light textures

> Perceived Characteristics and Parameters – Quantifiers and Qualifiers

ISEA DATA gloves workshop – Hugo Escalpalo

Input gloves

– Measures specific finger anatomic and gestural positions as input

– Haptic feedback: Not as output. The design of the glove has an in-built mechanism to incorporate proprioceptive feedback using slider sensors and springs. When pulled forward, the gloves use resistance to simulate the feeling of grabbing an object.

– Intended to include tracking components to track position in VR

https://www.youtube.com/watch?v=MP9aJ5ThwK0

https://www.youtube.com/watch?v=Be3NTYqN0vA

Week 12-13:

Direction for Week 14:

Using the sound generation system I used in my generative sketch:

RECAP for Generative Artwork:

Aiming to extract a sound from the visual textures of stone involves two aspects:

1. Subtracting three-dimensional forms of the material into visual data that can be converted into audio/ sounds

2. Designing a model that generates sounds that the audience would perceive as/ associate with the tactile qualities of the specific material.
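
To make the first aspect more concrete, here is one possible way visual data could be turned into sound, sketched in plain Python. It is an illustrative stand-in and not the sound generation system used in the generative sketch: the brightness values, the 110 to 880 Hz range, the 8000 Hz sample rate and all function names are assumptions.

```python
import math

def brightness_to_frequency(value, low_hz=110.0, high_hz=880.0):
    """Map a grayscale value (0-255) to a frequency on an exponential, pitch-like scale."""
    ratio = max(0, min(255, value)) / 255
    return low_hz * (high_hz / low_hz) ** ratio

def sine_tone(freq_hz, duration_s=0.1, sample_rate=8000):
    """Return the samples of a sine wave at the given frequency."""
    n = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def sonify(scanline):
    """Turn a row of brightness values into one short tone per value."""
    samples = []
    for value in scanline:
        samples.extend(sine_tone(brightness_to_frequency(value)))
    return samples

# Hypothetical brightness values sampled across the surface of a stone.
scanline = [0, 64, 128, 255]
audio = sonify(scanline)
```

In this sketch darker areas give lower tones and brighter areas higher ones. Whether that mapping evokes the tactile quality of the stone is the question the second aspect would need to test with an audience.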

POSSIBLE APPROACH

DIRECTION