What’s been going on for the past ??? month(s)?

3D model and 3D model testing

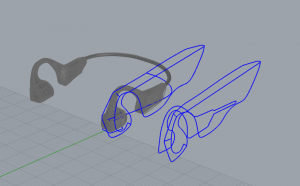

I’ve done a few iterations of 3D modelling for the headset. It was a tedious process to begin with, as I didn’t really have a sense of how to build the form in 3D, even with all the physical models I’ve made as reference.

I downloaded the trial of Rhino 7 and tried SubD, which is basically like T-Splines. I thought it would be good for modelling this since it’s more like sculpting.

Eventually I realised it’s easier to just model using the normal CAD method. Before that, I tried repurposing real products like earmuffs and visors from Daiso.

I realised that I can make everything really thin and can even try fabric instead of tough materials. More on that in the next section.

This led me to realise I can use the shape of a hairband to create the form of my device.

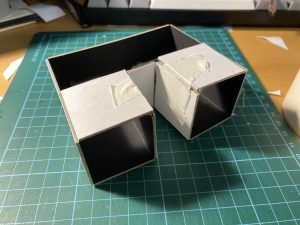

I then upped the complexity by adding all the other parts of the original design. I only made the form roughly, as this is still just a test print. I also created a low-poly version, as the file got too big for 3D printing.

Results:

I tried different materials as well. The black one is TPU, a flexible plastic. The white one is PLA, which is a more rigid, plasticky plastic.

Conclusion: I think the prints are ok. I can go ahead and refine the 3D model. But before doing so, I have some changes to my approach that I will talk more about in the next section.

Change of approach

So I’m happy that I’ve begun making stuff. But I’m scrapping a lot of the previous headset design I’d thought about. This happened after I saw earmuffs and thought that it’s really much easier to just put the headset inside a fabric thing that has a wire inside, AKA simplifying everything.

The old design will still be worked on. I’ll create separate parts and add magnets to let the different parts stick together. It will be on display instead and will only be interactive as a “display” model.

I don’t think I’ve mentioned this before, but I remembered Sputniko!’s Menstruation Machine and thought my devices could just look like that too. (I think I was also influenced by this work previously, actually.)

Anyway so next up on the to-do list specifically for Chronophone:

- Experiment with fabric version of Chronophone using earmuff design (without covering the ears)

- Refine 3D model of original design, to be displayed and marketed as “cyborg model” for those who have cybernetics already installed on their head

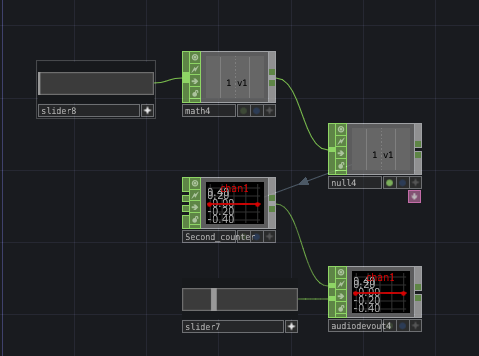

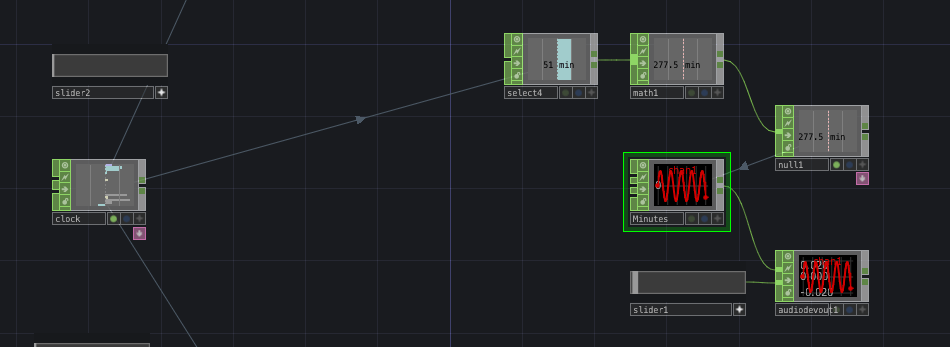

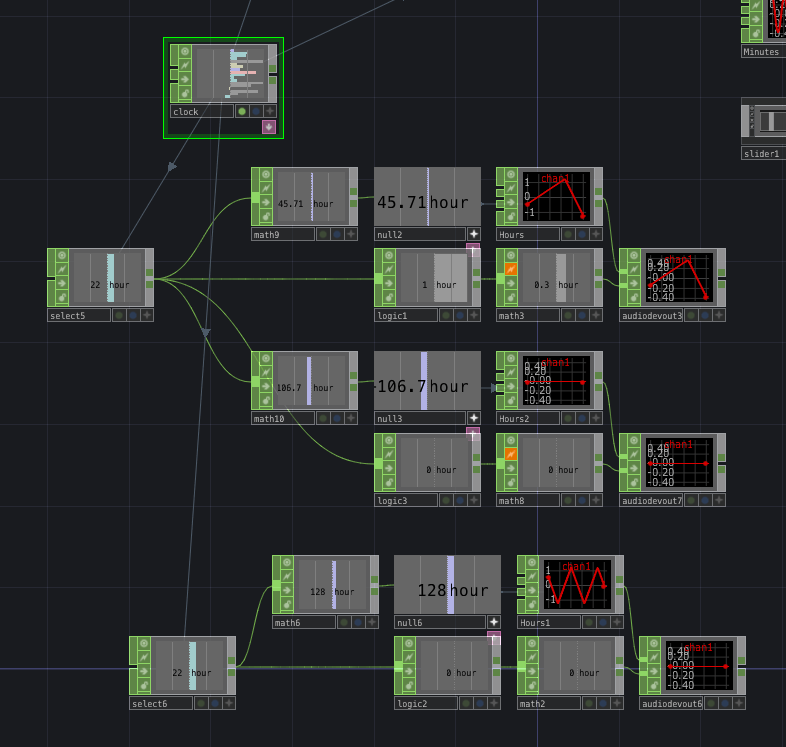

- Refine sounds and add variations so people can change and choose what they like

Concept recap

I think I’m finally happy with the whole Sensorial Futures project. Here’s my train of thought for the whole project (sorry I keep doing this cos I need to constantly remind myself).

Sensorial Futures is a speculative cybernetics augmentation pop-up store, commercialised for the mass public to upgrade their senses on the fly. The shop has a shiny neo-future interior with displays that allow you to try out different devices, placed around like Apple devices in their shops.

In this particular pop-up, the sensory devices cater to everyday people and the working population, dealing with things like time (productivity), money, and personal space (transport/mobile device use). These devices range from wearables, to implants, to cybernetics. Borrowing from cyberpunk themes, I want to make it such that the wearables are essential for certain jobs, and this pop-up store offers sensory upgrades to the most generic 9-5 jobbers. (Thanks prof. LP for giving me that idea way back 2 months ago.)

3 key devices are on display:

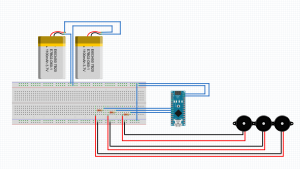

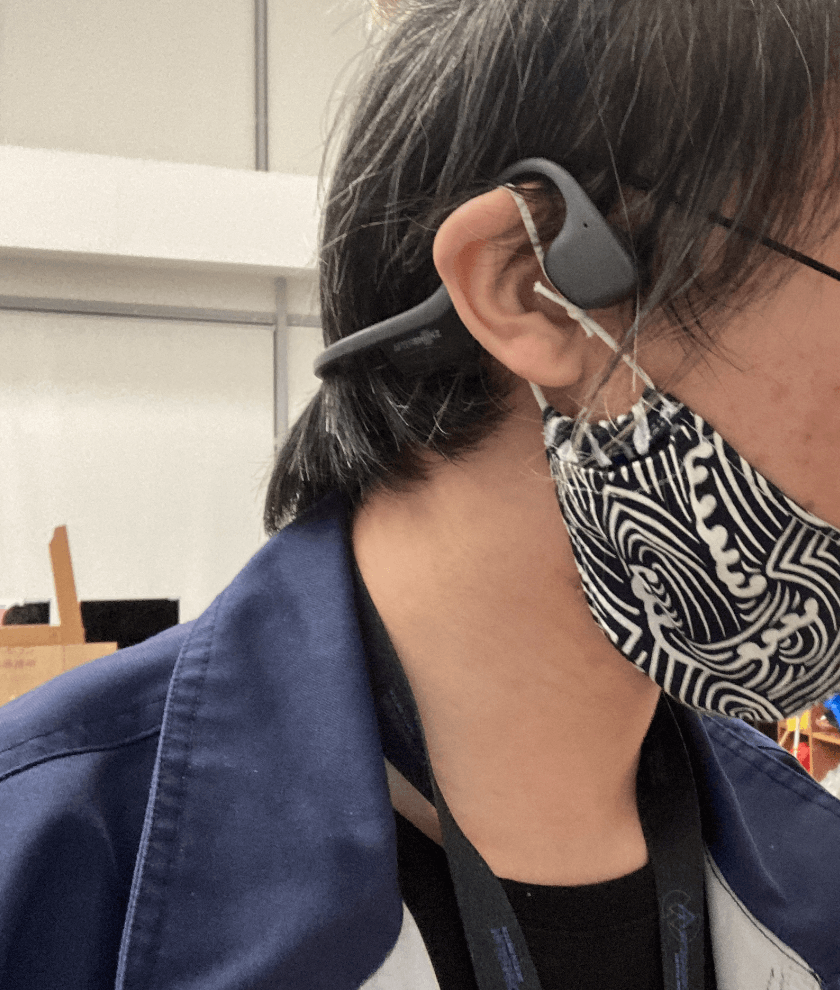

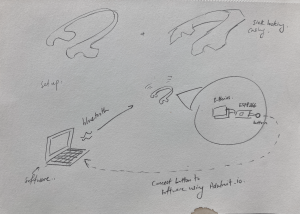

- Time-telling sound device that translates time to sound (and maybe colour). Available as a wearable (main interactive device), an implant (poster implying its existence, asking people to register for it), and a cybernetic (on display, magnetic, for cyborgs). How it will work is basically translating real-time seconds, minutes, and hours into sounds and frequencies, which are then relayed to the inner ear through bone conduction.

- Money-sensing, which is split into 2 different devices:

  - Hunger/satiation device that makes you literally hungry for money, feeding into your instinctive desire so that you keep earning money. This device will be worn on the belly (and maybe head), warming and constricting the belly while triggering salivation. The wearable is attached to a computer-like device housing a game that simulates earning money. The wearable is only for the experience; the actual thing will be a supposed implant that releases synthetic hormones that make you feel hungry, and metabolises them when money is earned (this will be explained on a poster or some interactive screen).

  - Intoxication/soberness device that makes you more intoxicated the more money you spend. This will be worn on the head, controlling the vestibular sense in your ear while also inducing mild constriction of the head. There will be a gambling game as well, simulating losing money.

- Personal-space protecting sense that senses the surroundings of the person. This will be a wearable on the neck (maybe?), with sensors all around it that allow the person to sense the 360 degrees around them. This won’t work IRL of course, looking at the technology we have, so I’m going to “cheat” by using a Kinect to detect presence. It detects depth as well, which can also be relayed. The device translates proximity information into heat and touch, allowing one to feel the proximity of others or objects around them. The device will be wearable, and if I can do it, I’ll do a cybernetic version too that’s on display like the chronophone. This device is for people who use their personal devices all the time (looking into the future, I won’t be surprised if we end up wearing Google Glass-like devices as our personal mobile devices, which will be a major distraction and as such require this kind of sense).
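To make two of the mappings above a bit more concrete, here is a minimal Python sketch of how clock time could become pitches and how a depth reading could become a heat/touch intensity. Everything specific in it is an assumption for illustration only: the function names `time_to_frequencies` and `proximity_to_intensity`, the pitch bands (110 to 880 Hz), and the 0.3 m / 2.0 m distance thresholds are all made up, and the actual sound design and sensor handling may end up looking quite different.

```python
from datetime import time


def _lerp(lo: float, hi: float, frac: float) -> float:
    """Linear interpolation between lo and hi for frac in [0, 1]."""
    return lo + (hi - lo) * frac


def time_to_frequencies(t: time) -> dict[str, float]:
    """Map hour, minute and second onto three separate pitch bands (Hz).

    The bands are hypothetical: hours sit lowest, seconds highest,
    so the three layers stay distinguishable when played together.
    """
    return {
        "hour": _lerp(110.0, 220.0, t.hour / 24),
        "minute": _lerp(220.0, 440.0, t.minute / 60),
        "second": _lerp(440.0, 880.0, t.second / 60),
    }


def proximity_to_intensity(distance_m: float,
                           near_m: float = 0.3,
                           far_m: float = 2.0) -> float:
    """Turn a distance reading into a 0.0-1.0 heat/touch intensity.

    Returns 0.0 at or beyond far_m, 1.0 at or within near_m,
    and scales linearly in between. Thresholds are assumed values.
    """
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    frac = (far_m - distance_m) / (far_m - near_m)
    return max(0.0, min(1.0, frac))


if __name__ == "__main__":
    print(time_to_frequencies(time(12, 30, 0)))
    print(proximity_to_intensity(1.0))
```

The linear mappings are just the simplest starting point. Pitch is perceived roughly logarithmically, so an exponential mapping may sound more even, and the intensity value would still need to be converted into whatever the heating or haptic hardware expects.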

The takeaway from the installation will be: do we really need these? Will we ever need these? In this project, I will explore a few themes, some more vague than others, and I hope people will catch them and think about them. Meanwhile, other than trying to get hired, I want people to have fun with my installation while thinking about the future of senses. Or even, not just the future of senses, but how they currently sense their environments and use their senses.

Timeline Changes

Yes. Too much time wasted, so here comes another update to the timeline, streamlined into more specific stuff since there’s only FOUR + MONTHS LEFT…

I’ll be working specifically on each device in 3-week intervals.

Jan week 1: wrap up chronophone prototype

Jan week 2: start on money sense

Jan week 3: finish prototype for money sense

Jan week 4: refine money sense

Feb week 1: start on propriosense

Feb week 2: finish prototype for propriosense

Feb week 3: refine propriosense

Feb week 4: super refine chronophone

Mar week 1: super refine money sense

Mar week 2: super refine propriosense

Mar week 3: finalise all products

Mar week 4: gather installation materials, work on fake products (misc stuff on display)

Apr week 1: start installation design

Apr week 2: mid installation logistics work

Apr week 3: finish installation

Apr week 4: add-ons to installation and products

May week 1: collaterals and marketing

May week 2: presentation

So next week I’ll start with money sense, while putting chronophone on hold until the end of Feb.