A while back we had a lesson where we played Her Story (2015) by Sam Barlow. I must say the game is extremely simple in its interface, yet intriguingly composed in its narrative structure.

You get access to files from a police department that has been interviewing a woman connected to what seems to be a murder case, and your goal is to find out exactly what happened. You do so by searching the database for keywords connected to the case, or for words you think might be clues. Each video is only a few minutes long, and you have to read between the lines to decide what to search for next. For example, she mentions dates, names of people, or an item during her interview, and you can then search for those things and see what turns up on the monitor. However, only the first 5 matching videos can be opened for playback.

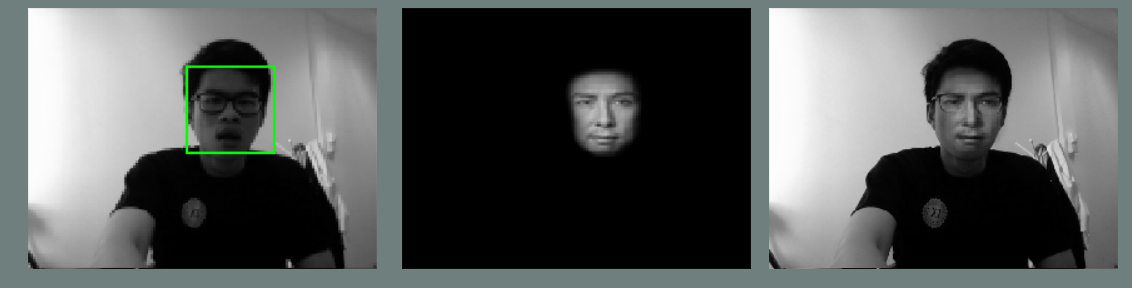

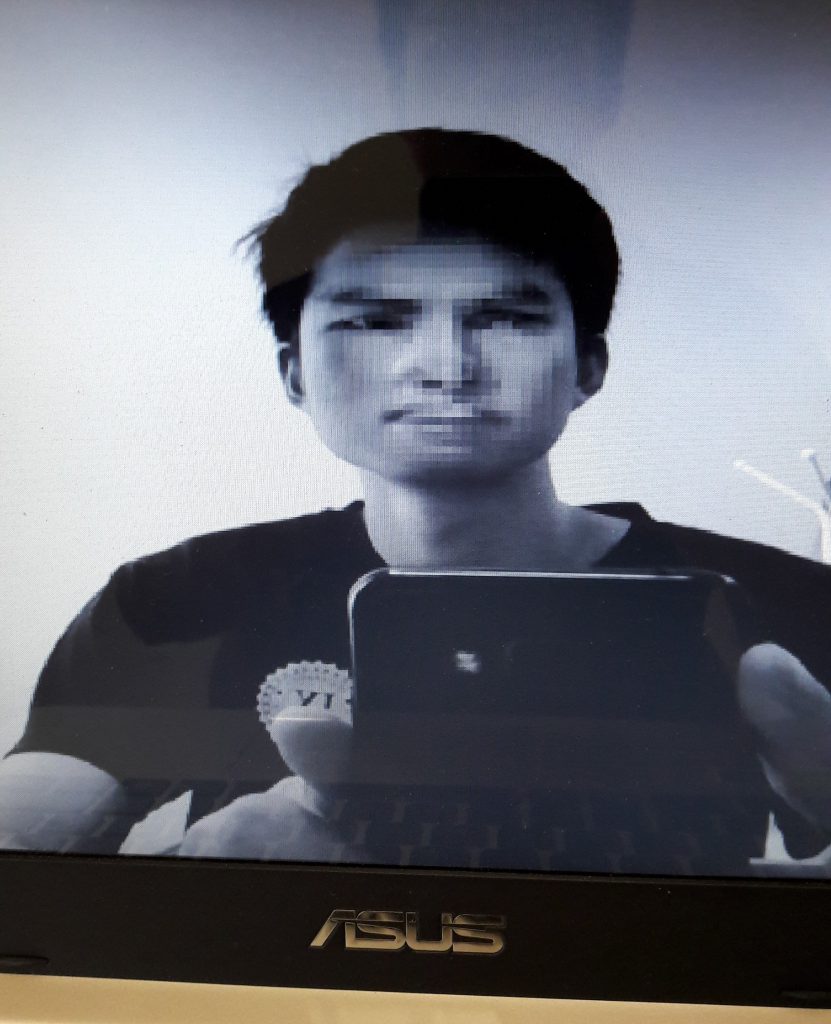

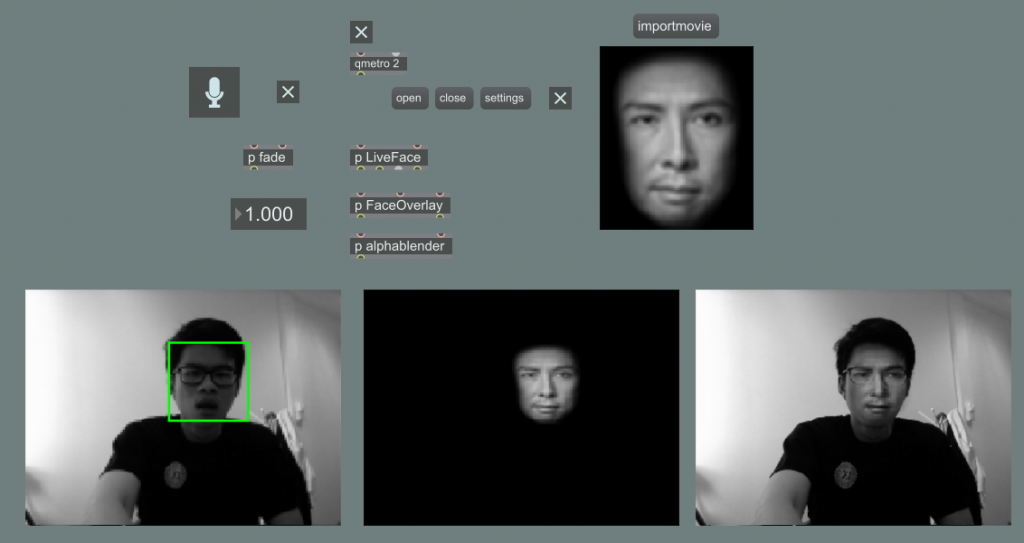

This is closely connected to the current project we are working on, and I have also created a search function within our game, although mine is rather primitive compared to Her Story's. Still, there is much we can learn from the structure and gameplay elements of Her Story. What I really hope to emulate is its elegant display of information and context. In Her Story's computer terminal, the monitor screen shows faint reflections of the lights in the room where, within the fiction of the game, the user is sitting at the computer. At times, the reflection of the user's face appears on the screen as well. I found that really immersive and was thrilled to notice this subtle detail, as it completes the look. There are also some fun extras on the side: we can close the program and open the recycle bin in the game's terminal to see which files have been deleted, which adds to the sense that we are really using this terminal to conduct the investigation.

The other notable thing about Her Story is how the narrative is cut, much like jump cuts in film. Each interview video reveals only a portion of the information, but just enough to let you form an idea or spot a clue and search for a new piece of evidence. I think this formula is handled very well in Her Story, because throughout the experience I found myself more and more eager to get to the bottom of the mystery.

I feel that Her Story is certainly inspiring to play and learn from. Although we also wish to devise our own game mechanics and structure, hopefully we can successfully apply some of these lessons to our project as well.