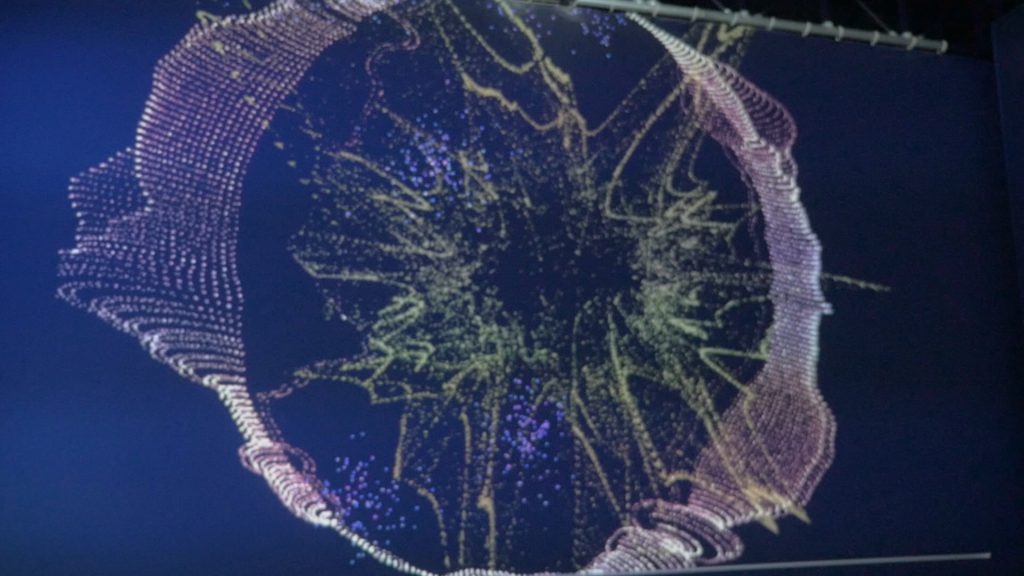

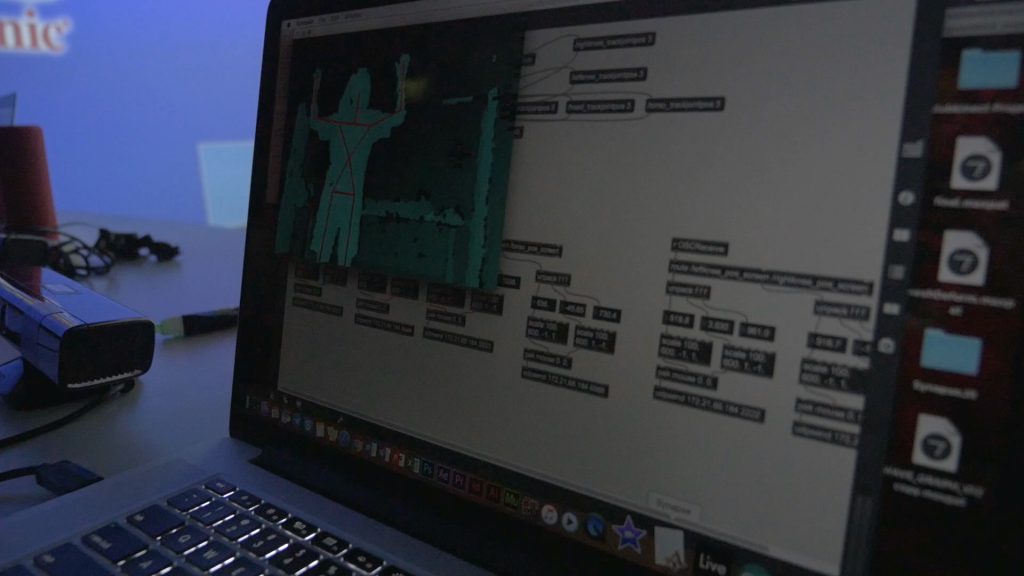

Particle Rhythm explores generating music through movement, using Kinect, Ableton Live and MAX MSP. All sounds were generated live; nothing was pre-recorded.

The installation involved 3 computers and 2 Kinects. We used a dark room to enhance the atmosphere.

The sound that was generated and warped varied with the intensity of the user's movement. We had a couple of dancer friends try out the Kinect, and the results were rather experimental and interesting.
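To give a rough idea of what "intensity of movement" can mean in practice, here is a minimal Python sketch. It is not the patch we ran in MAX MSP; it assumes the Kinect gives a list of joint positions as (x, y, z) tuples in metres for each frame, and the names `movement_intensity`, `intensity_to_amount` and the `max_speed` value are made up for illustration.

```python
import math


def movement_intensity(prev_joints, curr_joints, dt):
    """Mean joint speed (metres per second) between two frames.

    prev_joints / curr_joints: lists of (x, y, z) tuples, same order.
    dt: time between the two frames in seconds.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    if not curr_joints or len(prev_joints) != len(curr_joints):
        raise ValueError("frames must contain the same, non-zero number of joints")
    # Sum the distance each joint travelled between the two frames.
    total = sum(math.dist(p, c) for p, c in zip(prev_joints, curr_joints))
    return total / (len(curr_joints) * dt)


def intensity_to_amount(intensity, max_speed=3.0):
    """Clamp intensity into 0.0..1.0 so it can drive an effect amount.

    max_speed is an assumed ceiling, not a measured value.
    """
    return max(0.0, min(1.0, intensity / max_speed))
```

A value like the one returned by `intensity_to_amount` could then be sent on to control how strongly a sound is warped, so a still body gives 0.0 and very fast movement saturates at 1.0.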

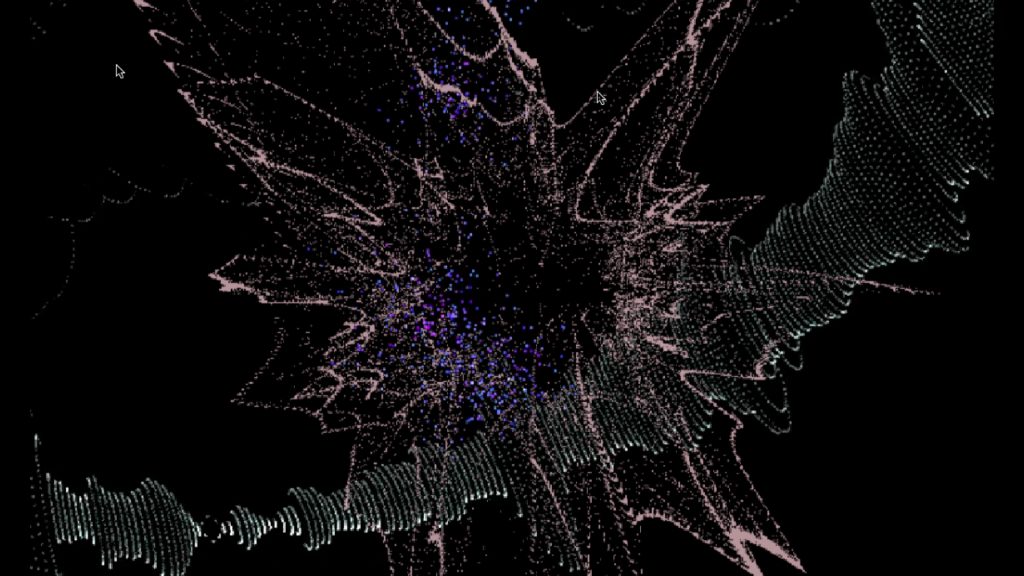

One of the major challenges was definitely computing power: the computers mostly could not keep up with the layers in MAX MSP, so the visuals were not as pleasing as we would have liked. Also, the sounds generated were rather raw and at times inaccurate because of how the coordinates were mapped. Improving the coordinate mapping is definitely something to work on.
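One possible direction for the coordinate mapping, sketched in Python rather than as a MAX MSP patch: scale a coordinate linearly into the 0 to 127 MIDI range (similar in spirit to the `scale` object in Max), clamp out-of-range readings, and smooth the jitter with a simple exponential filter. The input range, the `alpha` value and the names `scale_to_midi` and `Smoother` are assumptions for illustration, not what we used in the installation.

```python
def scale_to_midi(value, in_min, in_max):
    """Linearly map value from [in_min, in_max] to an integer in 0..127.

    Readings outside the input range are clamped instead of wrapping
    or overflowing.
    """
    if in_max <= in_min:
        raise ValueError("in_max must be greater than in_min")
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))
    return round(t * 127)


class Smoother:
    """Exponential smoothing to reduce jitter in tracked coordinates."""

    def __init__(self, alpha=0.2):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # higher alpha = faster response, less smoothing
        self.state = None

    def update(self, value):
        if self.state is None:
            # First reading: nothing to smooth against yet.
            self.state = value
        else:
            self.state += self.alpha * (value - self.state)
        return self.state


# Usage: smooth a hand's x position, then map it to a MIDI controller value.
smoother = Smoother(alpha=0.3)
for x in [0.10, 0.12, 0.55, 0.13, 0.14]:  # made-up readings in metres
    cc_value = scale_to_midi(smoother.update(x), in_min=-1.0, in_max=1.0)
```

The trade-off is latency: more smoothing gives steadier sound but makes it respond more slowly to fast gestures.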

Overall, we have learnt quite a lot about MAX MSP and Ableton Live through this final project, and we would definitely want to take it further on an artistic level. As we develop it in the future, we would like to experiment with concept and music, maybe even pure soundscapes with no EDM track. Perhaps there could even be a thematic focus, e.g. nature.