A month is a period of time that we share universally. What happens in a month? How much of the past months can we recall? When we recall a month that has passed, what comes to mind?

Perhaps it’s characterised by events: public holidays, birthdays, one-time occasions (e.g. marriages, celebrations, funerals, world events, injuries, natural disasters). Even so, I feel that a month really flies by, and the days blend together until they become indistinguishable.

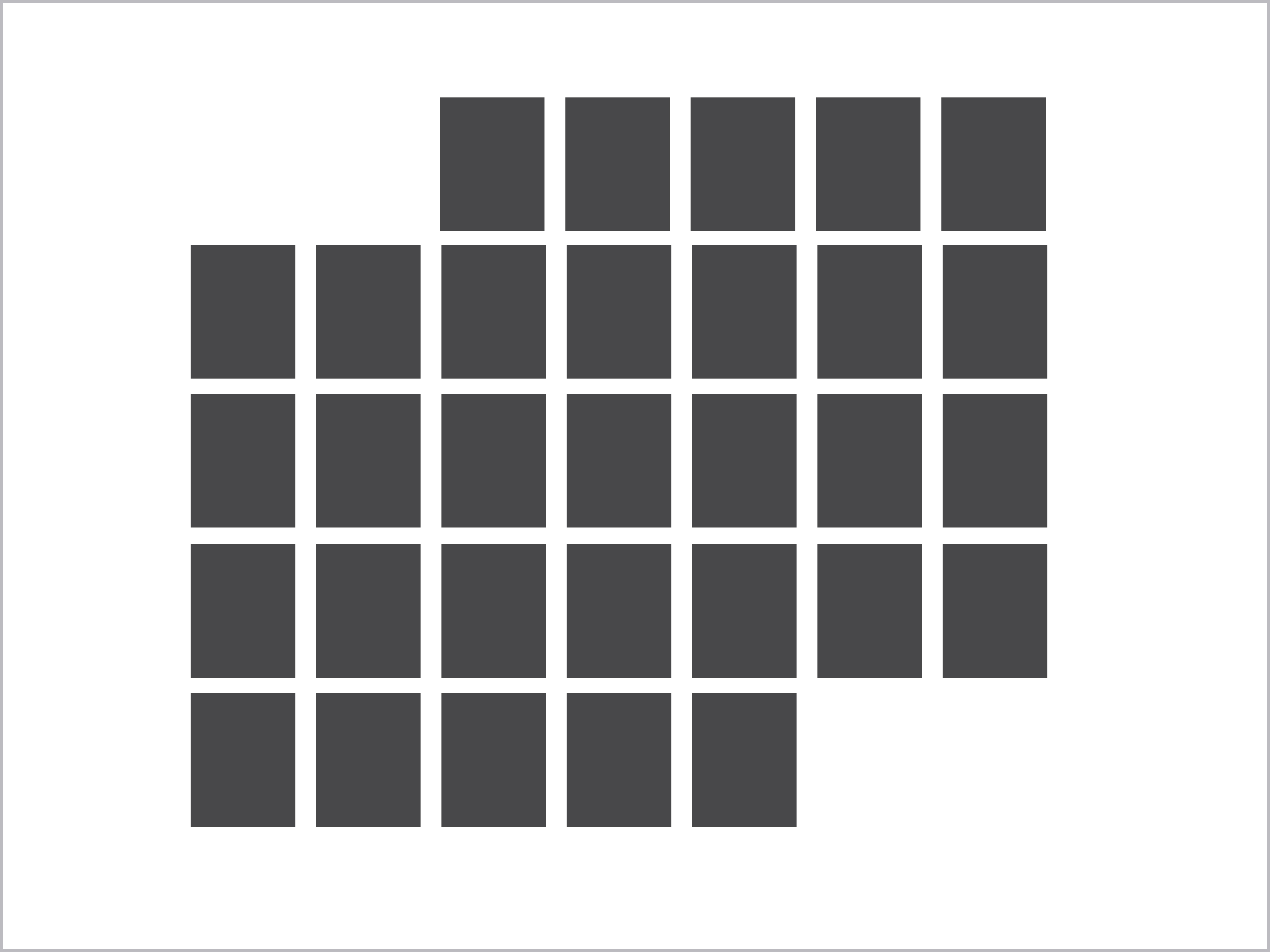

This project will invite users to document the most significant thing that happens each day. Each day, a user will record a single piece of media in any form (an image, music or sound, words, an article or headline, a website link). The system will store each day’s submission but will not show posts from other days of the month. Users will only be able to view their daily submissions at the end of the month, as an entire month and a collated experience. The arrangement of the daily posts will correspond to the layout of a calendar.
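To make the mechanics concrete, here is a minimal sketch in Python of how one user's month could be stored and revealed. Everything in it is an assumption for illustration: the `MonthJournal` class, its method names, the choice of a plain string as the media reference, and the rule that a month becomes viewable on the first day after it ends are all hypothetical, not a settled design.

```python
import calendar
from dataclasses import dataclass, field
from datetime import date


@dataclass
class MonthJournal:
    """One user's month: at most one piece of media per day (hypothetical model)."""
    year: int
    month: int
    posts: dict = field(default_factory=dict)  # day of month -> media reference

    def submit(self, day: date, media: str) -> None:
        # Accept a single submission per calendar day of this month.
        if (day.year, day.month) != (self.year, self.month):
            raise ValueError("submission belongs to a different month")
        if day.day in self.posts:
            raise ValueError("a post already exists for this day")
        self.posts[day.day] = media

    def is_revealed(self, today: date) -> bool:
        # Assumption: the month becomes viewable once its last day has passed.
        last_day = calendar.monthrange(self.year, self.month)[1]
        return today > date(self.year, self.month, last_day)

    def calendar_view(self, today: date) -> list:
        # Returns weeks (Monday first) of media references. None marks a cell
        # outside the month or a day without a post.
        if not self.is_revealed(today):
            raise PermissionError("posts stay hidden until the end of the month")
        weeks = calendar.monthcalendar(self.year, self.month)
        return [[self.posts.get(d) if d else None for d in week] for week in weeks]
```

For example, a user could call `submit(date(2016, 1, 23), "blizzard.jpg")` on a `MonthJournal(2016, 1)`, where the file name is made up. Calling `calendar_view` before 1 February would raise an error, and afterwards it would return a grid of weeks with that post sitting in its calendar cell.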

Anonymity

The posts would be anonymous, with no identity, descriptions or captions. On social media, we post for an audience that knows us personally. Without that audience, and with only the happenings of a single day in view, perhaps the level of curation would drop; i.e. we would feel less urge to document our month according to certain cohesive themes/colours/forms etc., or to document only the ‘nice sexy moments’.

Scale

Ideally, the project will have users from all over the world. The database would store the ‘months’ of users around the world. People can access these ‘months’ and have a glimpse into the lives of others. These experiences may range from the intimate and personal to the collective. For example, on 23 Jan 2016, posts from Singapore may include a sound clip of a birthday celebration, a note about failing a school assignment or a picture of a large tree. On the other hand, many people in the US may post pictures of the blizzard that hit the East Coast, showing snow-covered cars and streets. The significant-happening-of-the-day can be something that shook you personally, or an event that shook the larger global community.

On a side note, experiences and happenings seem to dictate the tone of the month. Hmm… I wonder if other more subtle aspects can be recorded, like feelings and moods.