I spent quite a lot of time watching tutorials to figure out what to do.

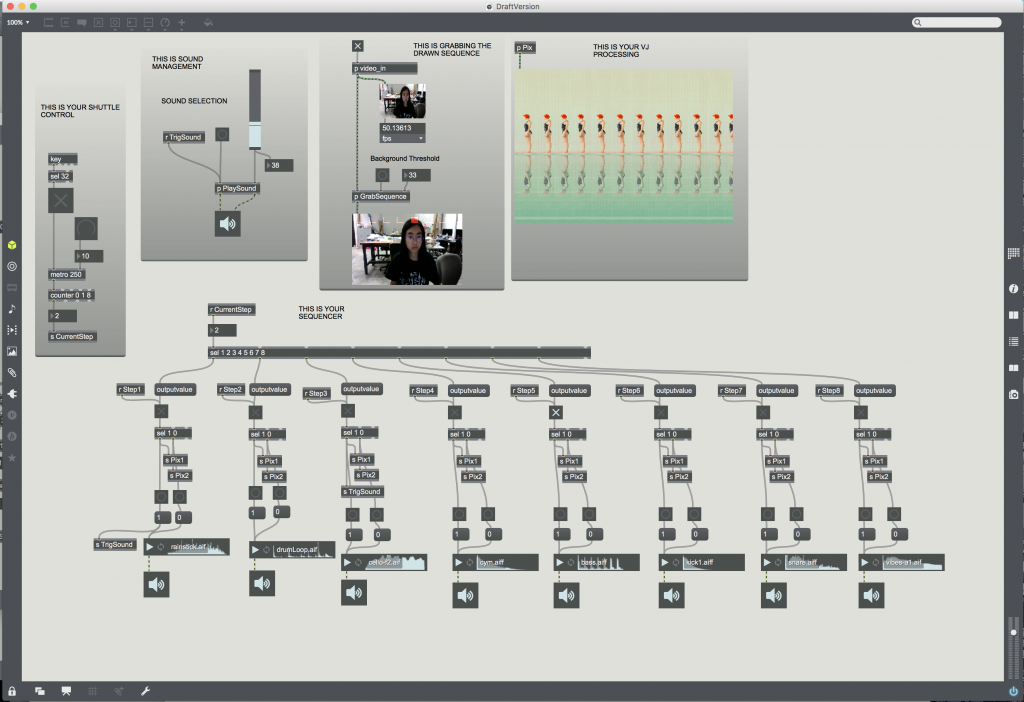

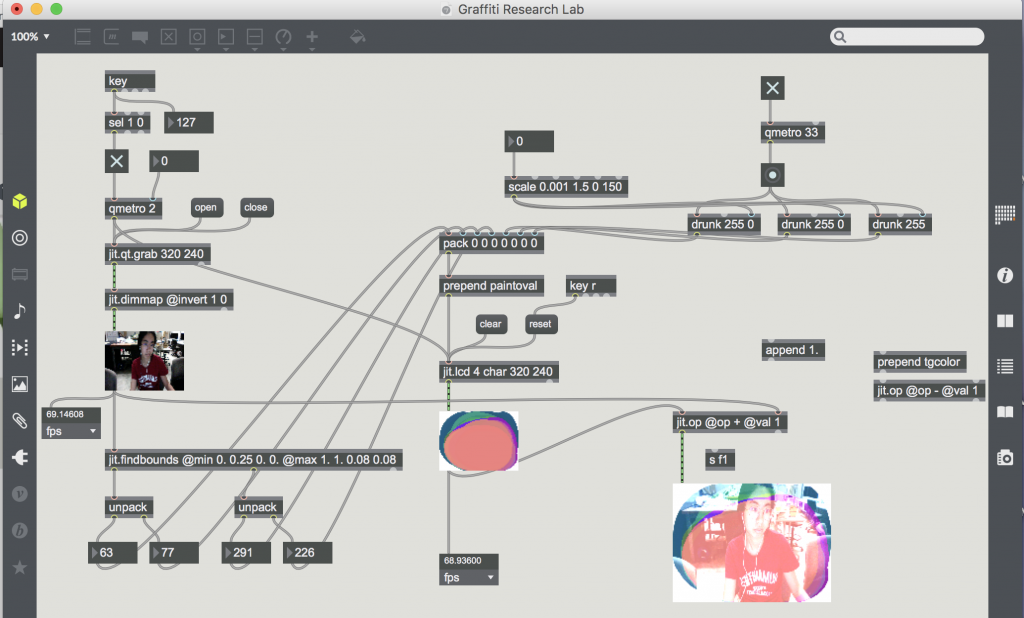

I think what I’m supposed to do is draw a moving line of sorts on a jit.lcd, based on the camera detecting a moving laser light or object. I don’t really understand the code from the Dropbox, so I tried building a different one.

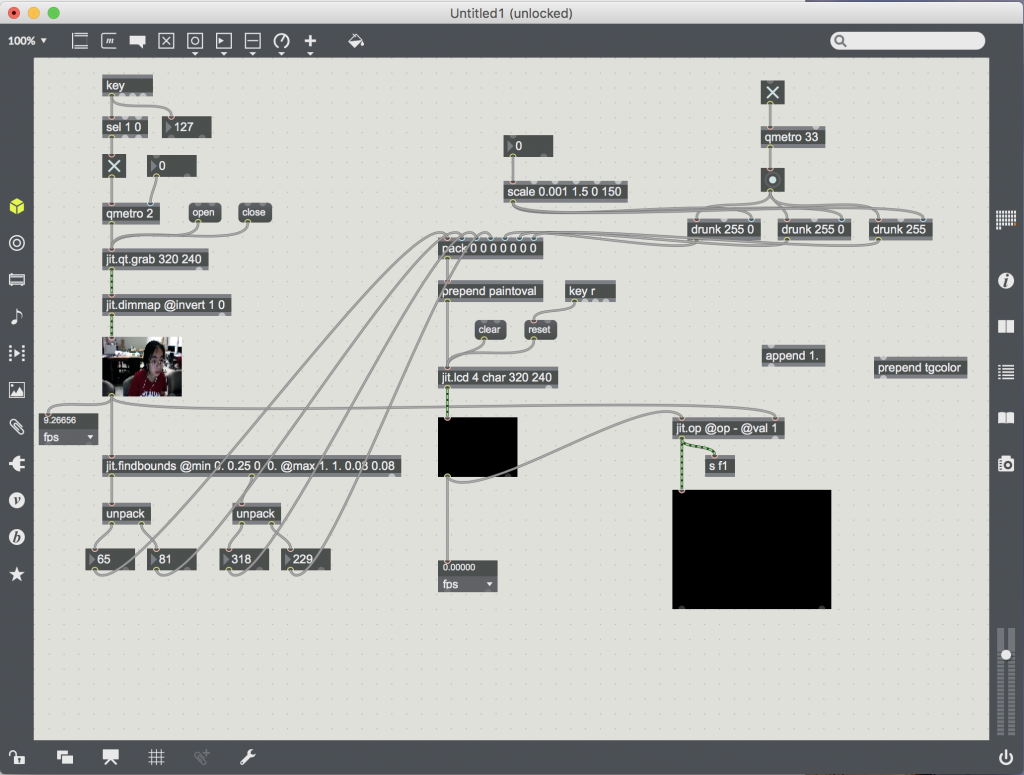

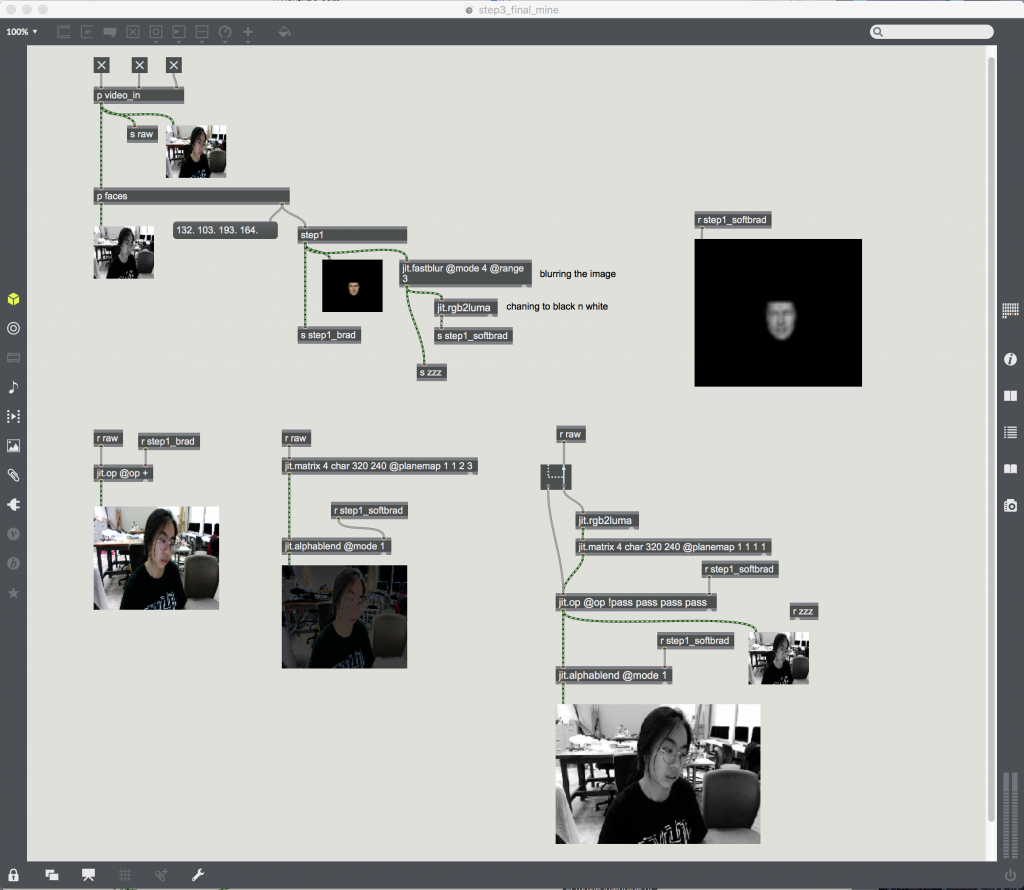

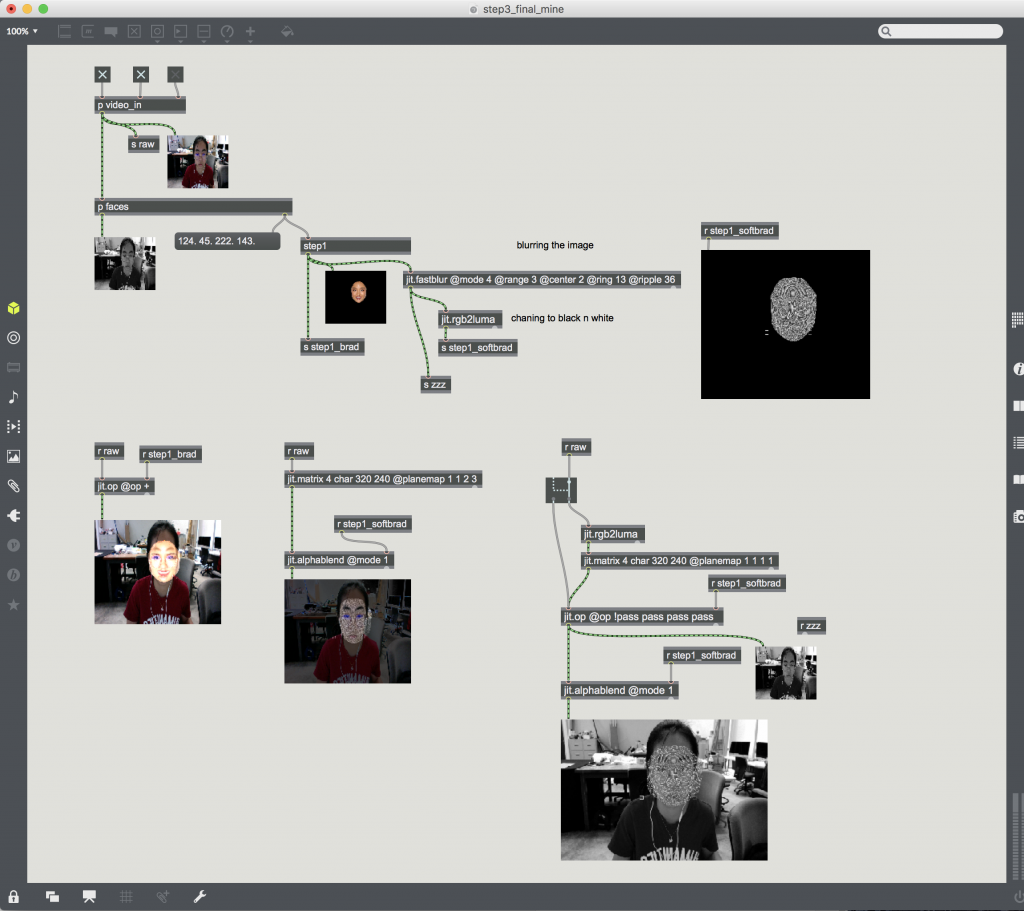

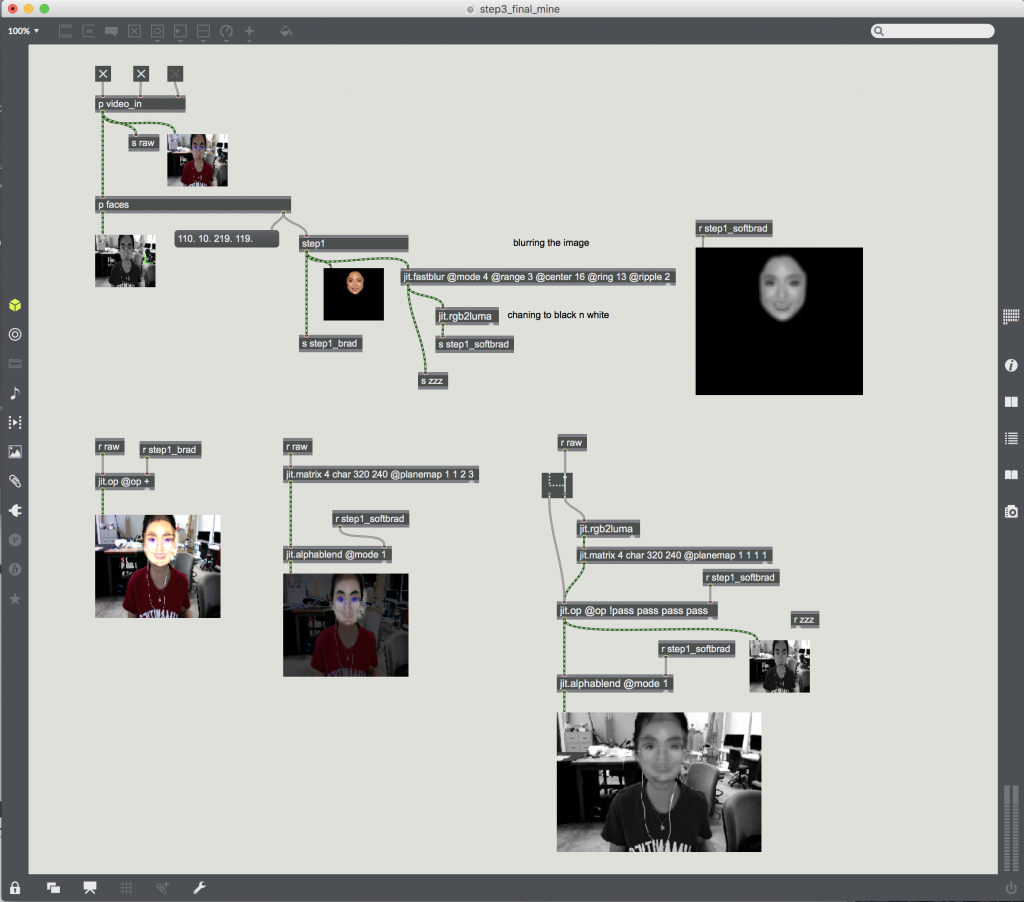

Here is what I have so far. Again, I don’t know where I went wrong, but my screens are black.

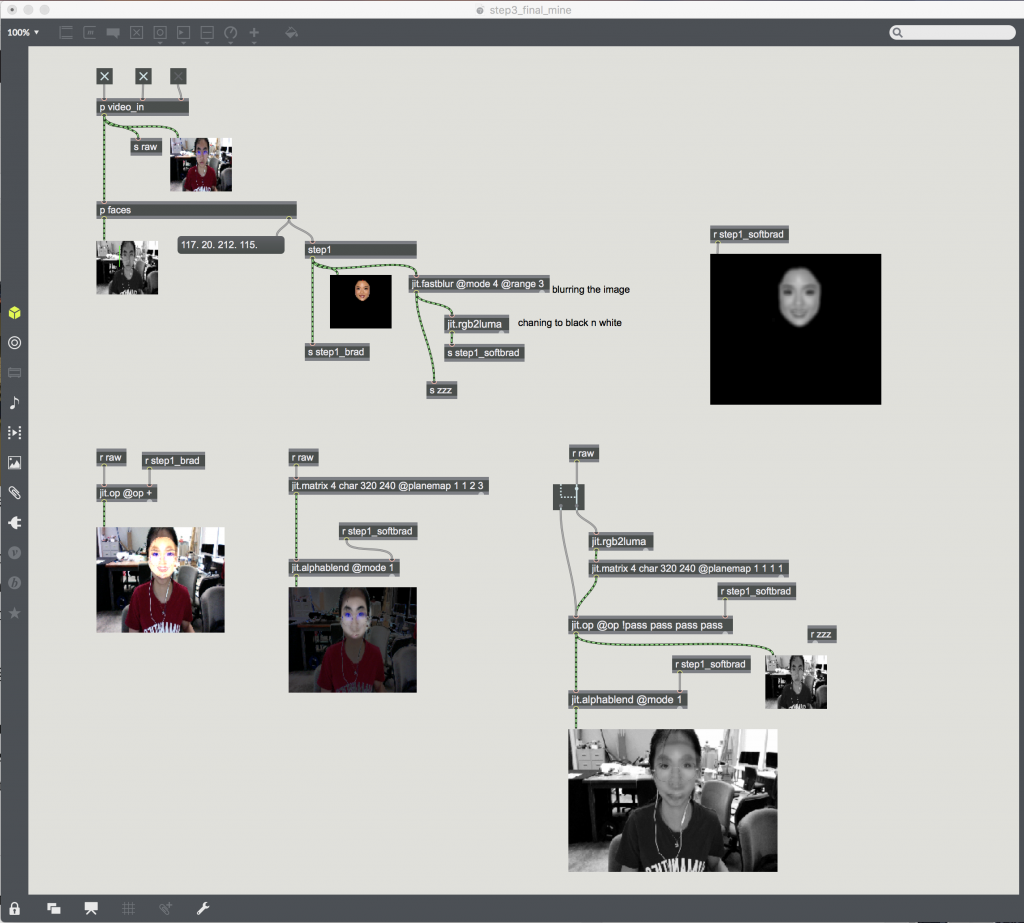

Okay, I figured out that I hadn’t connected qmetro directly to my jit.lcd, which is why the visual did not appear. It now sort of works: I think it detects my red shirt or a darker area and draws the giant oval wherever I move.
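To make the idea of "detect a colour, then draw an oval where it is" a bit more concrete, here is a rough sketch in Python rather than Max. It is not my patch: the function names `find_bounds` and `oval_around`, the colour ranges, and the tiny test frame are all made up for illustration. It assumes the tracking amounts to finding the bounding box of pixels inside a colour range and centring an oval on it.

```python
def find_bounds(frame, lo, hi):
    """Return (left, top, right, bottom) of all pixels whose RGB values
    fall inside [lo, hi] per channel, or None if no pixel matches."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if all(l <= c <= h for c, l, h in zip(pixel, lo, hi)):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


def oval_around(bounds, radius):
    """Return the rectangle (left, top, right, bottom) that encloses an
    oval of the given radius centred on the middle of the bounds."""
    left, top, right, bottom = bounds
    cx = (left + right) // 2
    cy = (top + bottom) // 2
    return cx - radius, cy - radius, cx + radius, cy + radius


# Hypothetical 8x6 "camera frame": white paper with a small red patch.
WHITE = (255, 255, 255)
RED = (200, 30, 30)
frame = [[WHITE] * 8 for _ in range(6)]
for y in range(2, 4):
    for x in range(3, 6):
        frame[y][x] = RED

# Assumed colour range for "red"; real values would need tuning.
bounds = find_bounds(frame, lo=(150, 0, 0), hi=(255, 80, 80))
if bounds is not None:
    print(oval_around(bounds, radius=2))
```

Running this once per frame, and drawing the returned rectangle as an oval each time, would leave a trail that follows the tracked colour, which is roughly what I see on screen.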

Above: Using my shirt to test.

Above: Using a giant black pen against a white paper background to test.

Above: I found it pretty cool that it can track the multiple times I moved and then stopped for a while (see the different-coloured greens/pinks for each set of movements).

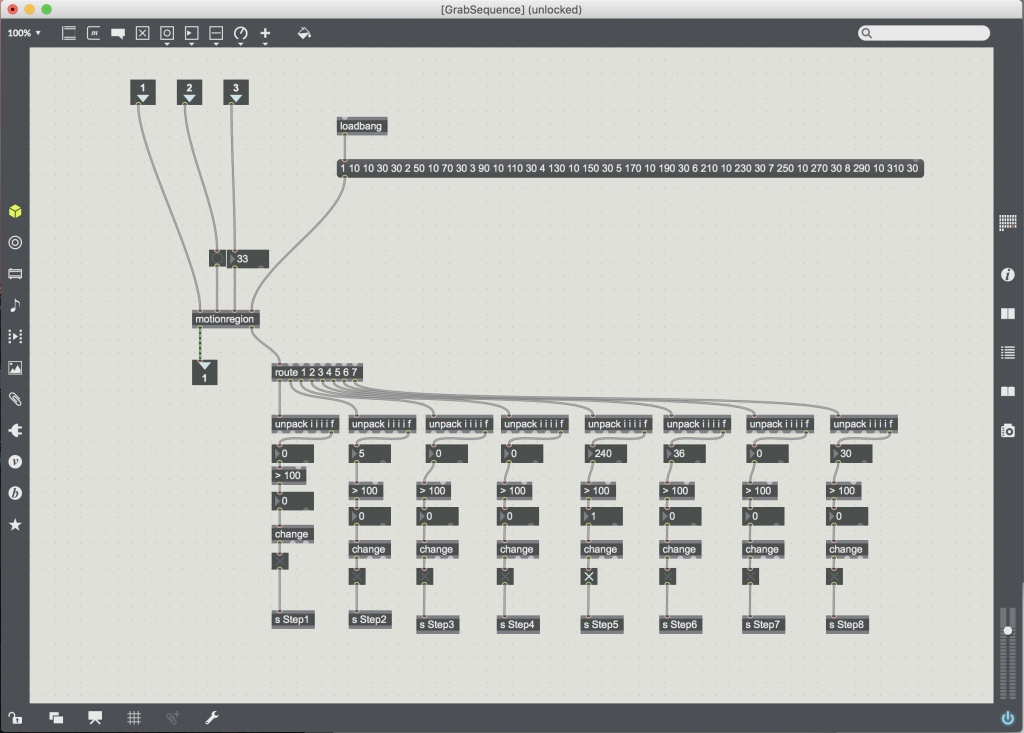

I went to clean up the code some more and took out unnecessary stuff.

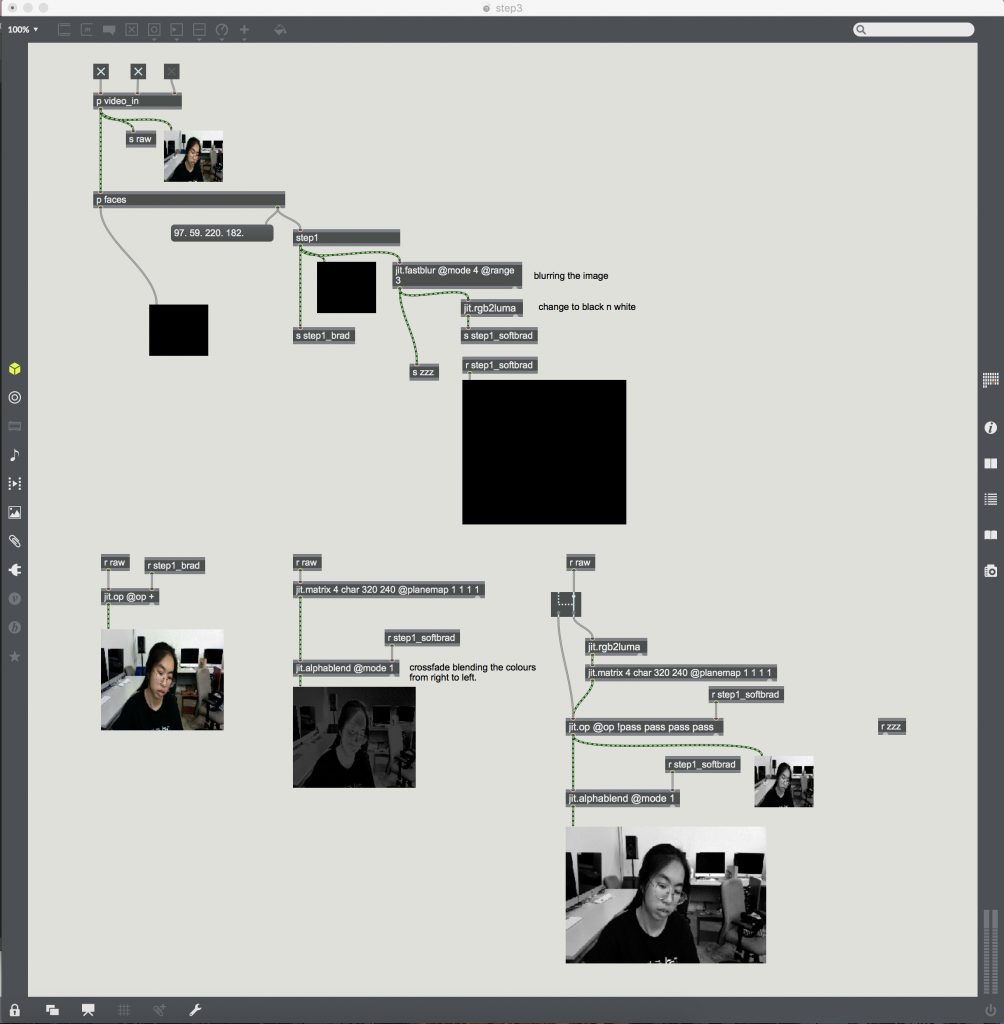

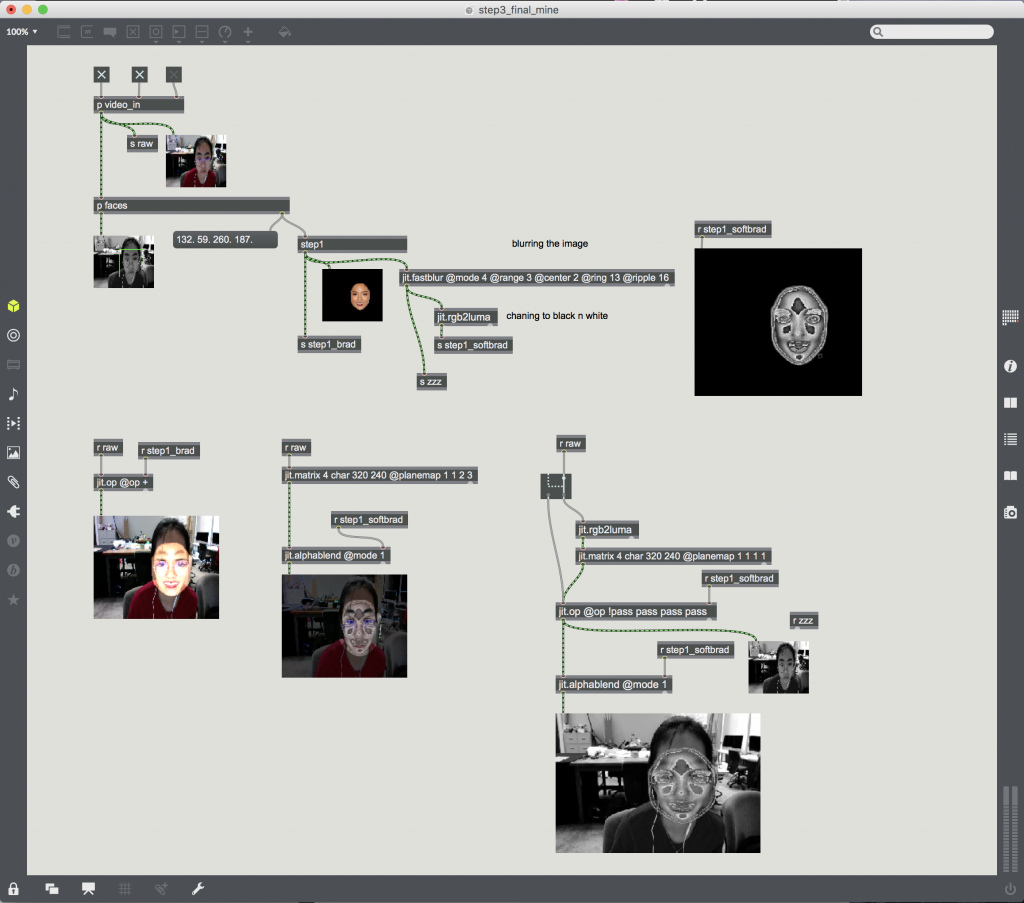

I added jit.fastblur to see if it would help. It changes the drawing to black oval “lines” for me, and it also makes the tracking more selective, in that it only detects the red of my shirt.
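My guess at why blurring makes the detection pickier is that small specks of matching colour get averaged away before the threshold, while large patches survive. The sketch below illustrates that guess in Python with a plain box blur standing in for jit.fastblur (I don't know what jit.fastblur does internally). The names `box_blur` and `threshold`, the grid, and the 0.5 level are all assumptions for illustration.

```python
def box_blur(grid, k=1):
    """Average each cell with its neighbours within k cells (clipped at edges)."""
    h, w = len(grid), len(grid[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - k), min(h, y + k + 1)):
                for xx in range(max(0, x - k), min(w, x + k + 1)):
                    total += grid[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def threshold(grid, level):
    """Return a 0/1 mask of cells at or above the given level."""
    return [[1 if v >= level else 0 for v in row] for row in grid]


# Hypothetical 7x7 "redness" map: one stray speck and one solid 3x3 patch.
redness = [[0] * 7 for _ in range(7)]
redness[1][1] = 1                      # stray speck (noise)
for y in range(3, 6):
    for x in range(3, 6):
        redness[y][x] = 1              # solid patch (the shirt or marker)

raw_mask = threshold(redness, 0.5)
blurred_mask = threshold(box_blur(redness), 0.5)

for row in blurred_mask:
    print("".join("#" if v else "." for v in row))
```

In this toy case the stray speck passes the threshold in the raw map but drops out after blurring, while the middle of the solid patch still passes.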

I realised it worked better with the lights off, against a white background. I used a red marker to achieve these results.