THE FINAL INDIVIDUAL PROJECT for Interactive 2,

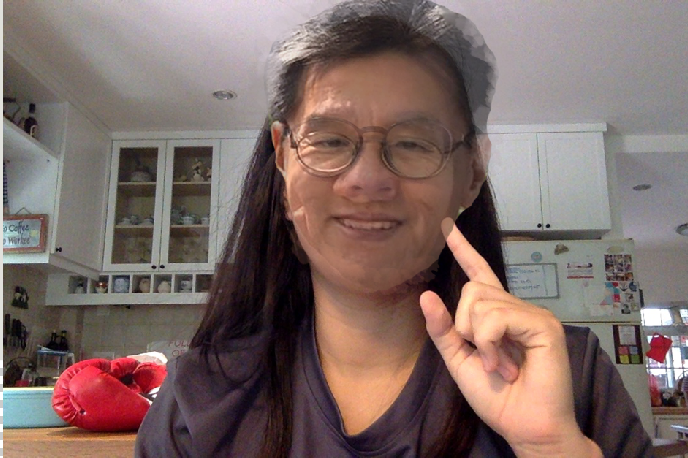

designed to confuse the blur me.

FIRST ENCOUNTER: it didn’t work at all. Huh, bummer. At first, none of the patches were sensing me.

I tried the different patches thinking that they would work, but the red regions were always highlighted and yet they never sent bang signals or played the audio input.

Then I remembered that motion region was a way to help MAX distinguish between what was there before and what we had just put into the screen input. So I looked into it and, lo and behold, once I refreshed the screenshot and adjusted the threshold (to 33), it could sense both the background and me well.
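To make the idea concrete, here is a rough Python sketch of comparing a stored reference "screenshot" against a new frame with a threshold. It is only an illustration of the general technique, not what the MAX patch does internally: the grayscale 0-255 scale, the tiny example frames, and the `changed_pixels` helper are all assumptions made up for this example.

```python
# Hypothetical sketch: frames are lists of rows of grayscale values (0-255).
THRESHOLD = 33  # the value from the post; the 0-255 scale is an assumption


def changed_pixels(reference, frame, threshold=THRESHOLD):
    """Return a grid of booleans: True where the frame differs from the
    reference by more than the threshold."""
    return [
        [abs(p - r) > threshold for p, r in zip(frame_row, ref_row)]
        for frame_row, ref_row in zip(frame, reference)
    ]


# Reference "screenshot" of the empty scene (made-up values).
reference = [
    [10, 12, 11, 10],
    [11, 10, 12, 11],
    [10, 11, 10, 12],
]

# A new frame with something bright placed in the middle row.
frame = [
    [10, 12, 11, 10],
    [11, 200, 210, 11],
    [10, 11, 40, 12],
]

mask = changed_pixels(reference, frame)
print(mask[1])  # [False, True, True, False]
```

Refreshing the screenshot corresponds to replacing `reference` with a fresh capture of the empty scene. Note that the small change in the bottom row (10 to 40) stays below the threshold, so it is ignored as noise.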

Subsequently, I looked into the more important parts of the project.

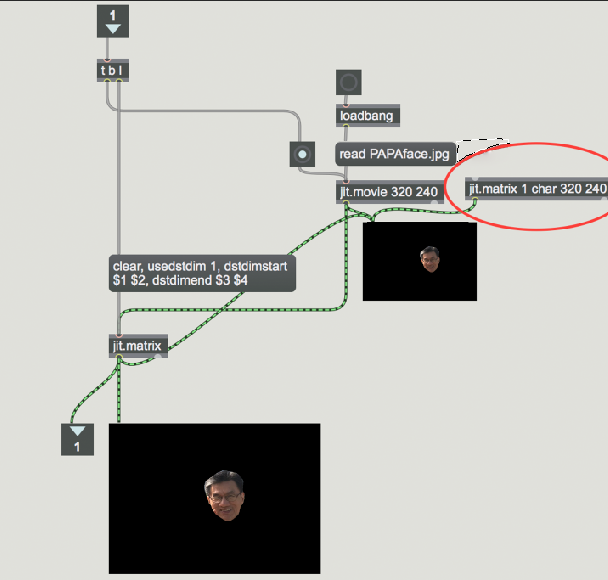

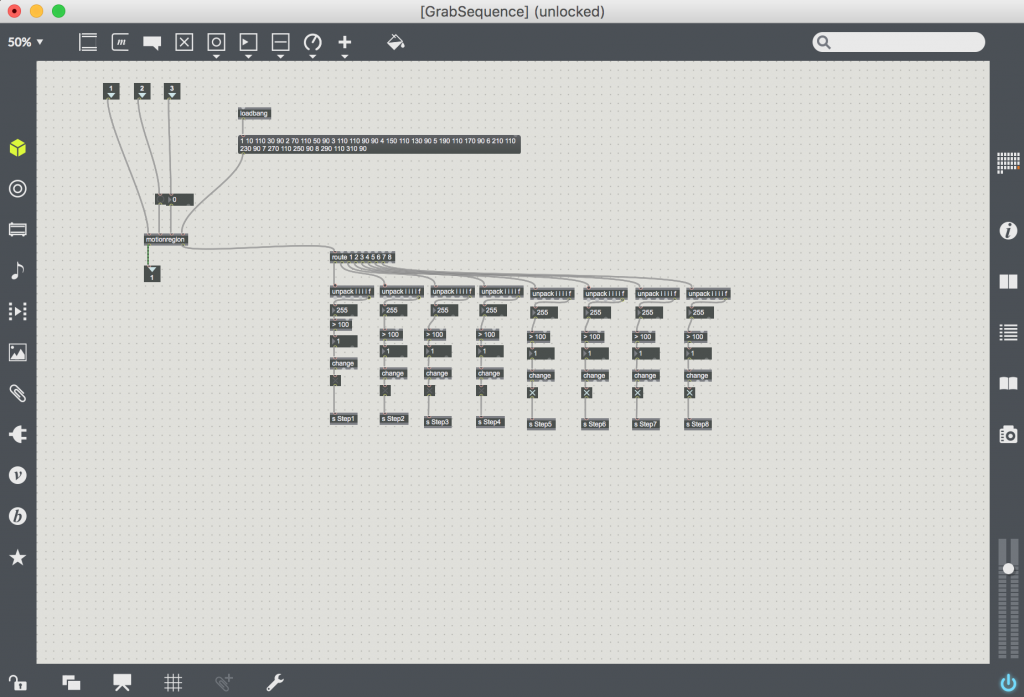

Firstly, under pGrabSequence is where the motion boxes were programmed. At first, I saw a list of numbers I could barely put a name to, and realized shortly after that they were the coordinates of the motion region boxes that would sense the different things I put in them!

I then added 4 more boxes, as there were supposed to be 8 music files to go with them.

Then came the part where I had to match the motion region boxes with the music files! I had a very hard time referring back and forth between the windows of trigsound, playsound and motionregion, so I decided to remove that function and add it to the main patch itself!
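As a rough picture of what those lists of numbers do, the Python sketch below treats each box as four coordinates and pairs it with one sound file. Everything specific here is an assumption for illustration: the `(left, top, right, bottom)` order, the box positions, the `sound1.wav` style file names, and the helper functions are hypothetical and are not taken from the patch.

```python
# Hypothetical boxes as (left, top, right, bottom), with right and bottom
# exclusive. The real coordinate order in the patch is not given in the post.
REGIONS = [(x, y, x + 2, y + 2) for y in (0, 2) for x in (0, 2, 4, 6)]

# One made-up sound file name per box: 8 boxes, 8 files.
SOUNDS = [f"sound{i + 1}.wav" for i in range(len(REGIONS))]


def region_is_active(mask, box, min_changed=1):
    """True if at least min_changed pixels inside the box are marked changed."""
    left, top, right, bottom = box
    changed = sum(
        1
        for row in mask[top:bottom]
        for cell in row[left:right]
        if cell
    )
    return changed >= min_changed


def active_sounds(mask):
    """Return the sound files whose boxes currently contain movement."""
    return [s for box, s in zip(REGIONS, SOUNDS) if region_is_active(mask, box)]


# An 8x4 grid of booleans marking which pixels changed (all quiet to start).
mask = [[False] * 8 for _ in range(4)]
mask[0][3] = True  # something moves inside the second box
mask[3][6] = True  # something moves inside the eighth box

print(active_sounds(mask))  # ['sound2.wav', 'sound8.wav']
```

Keeping the boxes and the sounds in two lists of the same length means the pairing is just by position, which is roughly the matching job described above.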

There, I added one button which would receive the messages sent by the motion region and send ‘1’ to the audio file whenever there was a threshold difference in the incoming image. However, the audios were overlapping with each other and getting very messy even though there was nothing in the frame! So the problem was getting the audios to stop running once they had started.

Then I added a second bang, which turned out to be a better addition, as the audios would then switch off by themselves: they received a ‘0’ from the bang whenever the motion regions did not detect a threshold difference in the image previewed!
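The on/off logic can be sketched in Python like this. It is a simplified stand-in for the two bangs, not a copy of the patch: the `gate_messages` name is made up, and sending a message only when the state changes (so a sound is not restarted on every frame) is my own assumption rather than something the patch is confirmed to do.

```python
def gate_messages(active_per_frame):
    """Return (frame_index, message) pairs, emitted only when the region's
    state changes: 1 when movement appears, 0 when it goes away."""
    messages = []
    previous = False  # assume the region starts out empty
    for i, active in enumerate(active_per_frame):
        if active != previous:
            messages.append((i, 1 if active else 0))
            previous = active
    return messages


# Made-up sequence: is there a threshold difference in the box on each frame?
frames = [False, False, True, True, True, False, False, True, False]

print(gate_messages(frames))  # [(2, 1), (5, 0), (7, 1), (8, 0)]
```

With only the ‘1’ message there is nothing in that output to ever stop a sound, which matches the overlapping mess from before. The ‘0’ messages are what switch each audio file off again.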

Hence the final product! I did it with my arms and some spare shampoo bottles I had lying around 🙂 Enjoy!