15 Min of Randomness in ADM

Posted by ZiFeng Ong on Thursday, 17 August 2017

Before the Live Video:

This was the first time I had done a Facebook Live video, and I felt like a caveman who didn't know how it even worked. I didn't even know how to set my profile to public, since I had set it to private years ago. I had, however, been in the audience for my friends' Live videos and kind of liked them, because people reacted almost instantly to the comments I wrote. But the idea of going live on Facebook myself was really scary, as I did not have the confidence to produce a quality video that people would like. What if there were no viewers at all? There are also people on my friend list whom I am not very well acquainted with, and I didn't feel good putting up a live video where they probably wouldn't get what was going on. But oh well~ What needs to be done must be done.

*clicks the red button*

During the Live Video:

At the start of the live video, I was really lost and did not know what to do, so I followed my classmate out of the classroom. It felt weird, as my friends were all talking happily to their phones but I had nothing to say, so I just filmed them in silence. The anxiety of putting myself live on the web slowly dwindled as soon as I saw our IM juniors. Since they are from IM, I felt a connection with them, because I knew they would be doing this next year, just like how I saw Senior Nathanael do it last year.

And then, I was surprised that my first viewer on this live video was also Senior Nathanael. I was touched that at least someone was watching, so I said hi to him in the video. At this point, I did not know about the function to switch cameras (because I'm a caveman), so I physically turned the phone around to show my face. I had no intention of showing my face at all, as I had planned to just film what was happening without showing myself, but it just happened naturally.

After exposing myself in the video (2:23), it felt OK since I had done it once and broken the barrier of filming in the third person. Suddenly my phone became something I was interacting with. (So instead of me using my phone to record and tell a story, it developed into me talking to the phone, and the phone shifted from being “part of me” into “a separate entity from me”, which is really bizarre, as all I did was show myself in the video and my perspective changed so much.)

After going around looking at what the second years were doing, semi-introducing what I was doing and filming others, it came to a point where I started doing random things and became numb to the fact that people would judge me while I was live (my anxiety before the start of the Live video). I think this is partly because:

1) People around me were doing the same thing.

2) I had been doing it for a couple of minutes and was slowly getting used to it.

3) The pace of the live video was fast and there wasn't time to think ahead about what to do.

4) The viewers were commenting on random events, which gave me the feeling that I was not alone.

5) I felt like I had some responsibility to entertain the viewers.

Therefore I did some random (disturbing) acts just because I could. (Just to clarify, I don't go around squeezing people's butts in real life.)

I thought it was funny, and looking back now, it's still kind of funny despite the creepy movements. Just what the heck am I doing. LOLLL

And then I proceeded to do normal activities like walking around, talking to people and trying to introduce ADM to viewers who are not from ADM, like my ex-classmates.

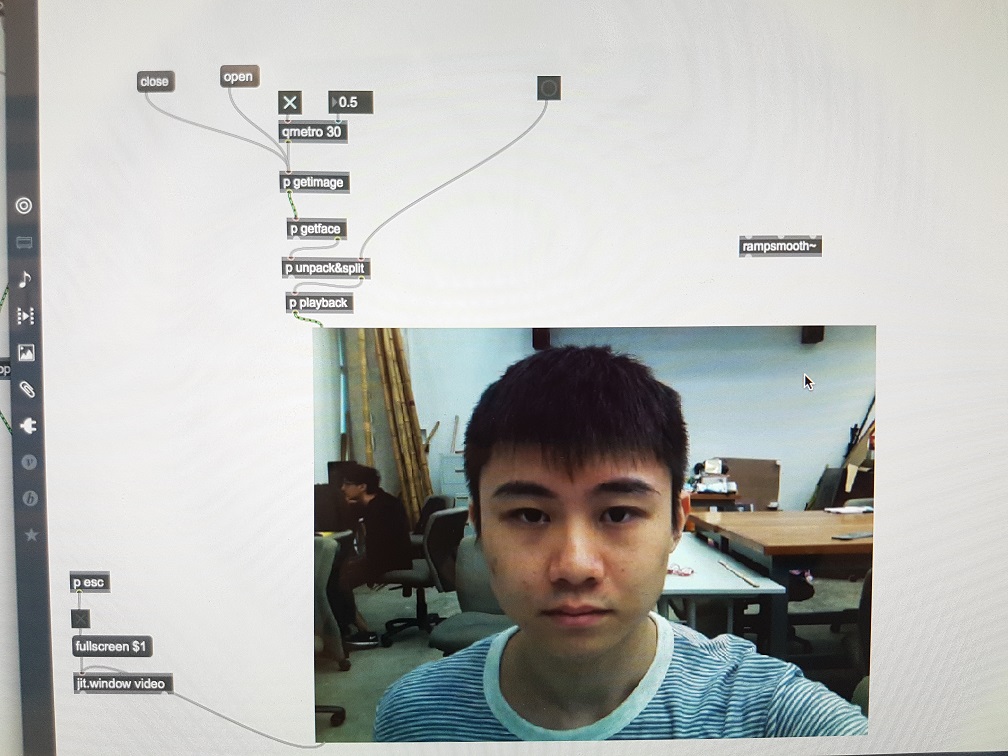

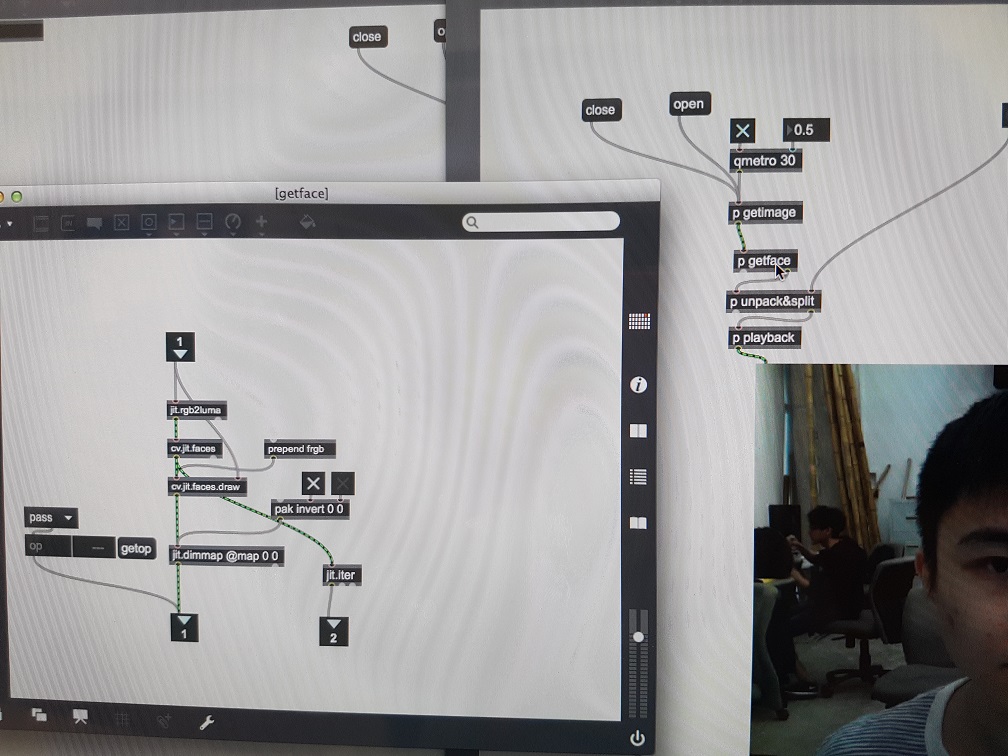

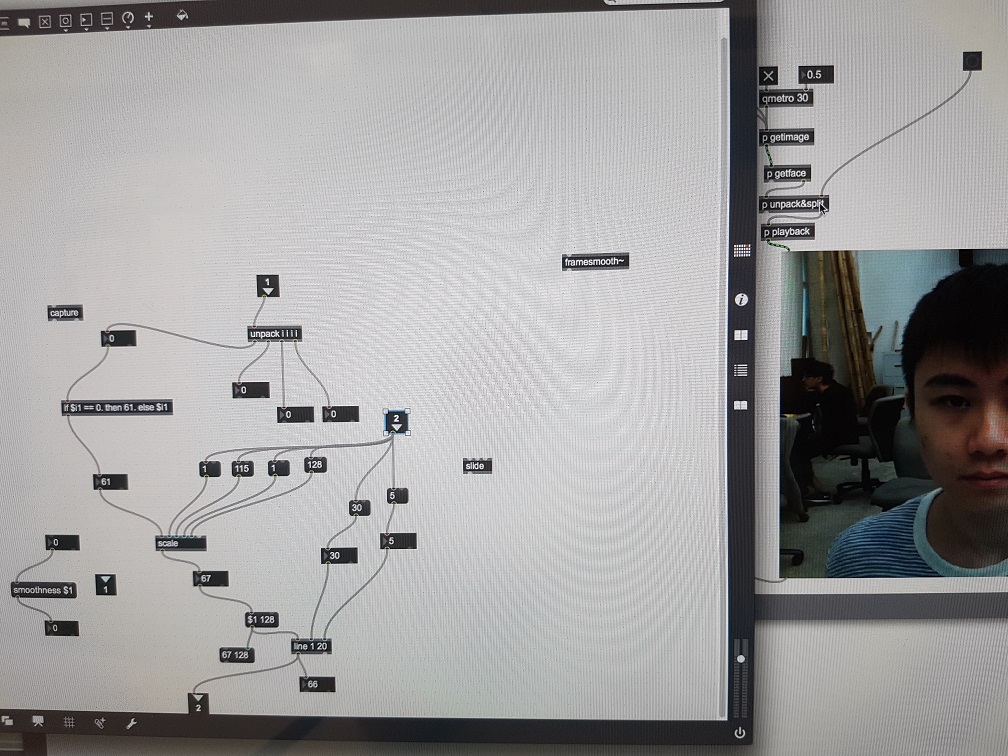

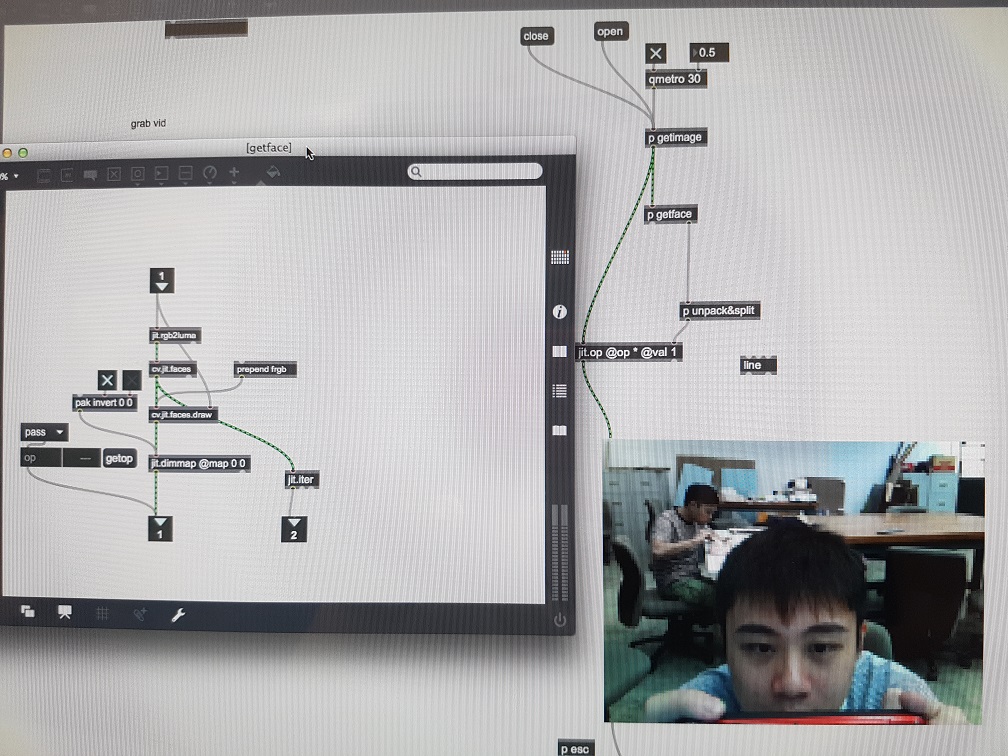

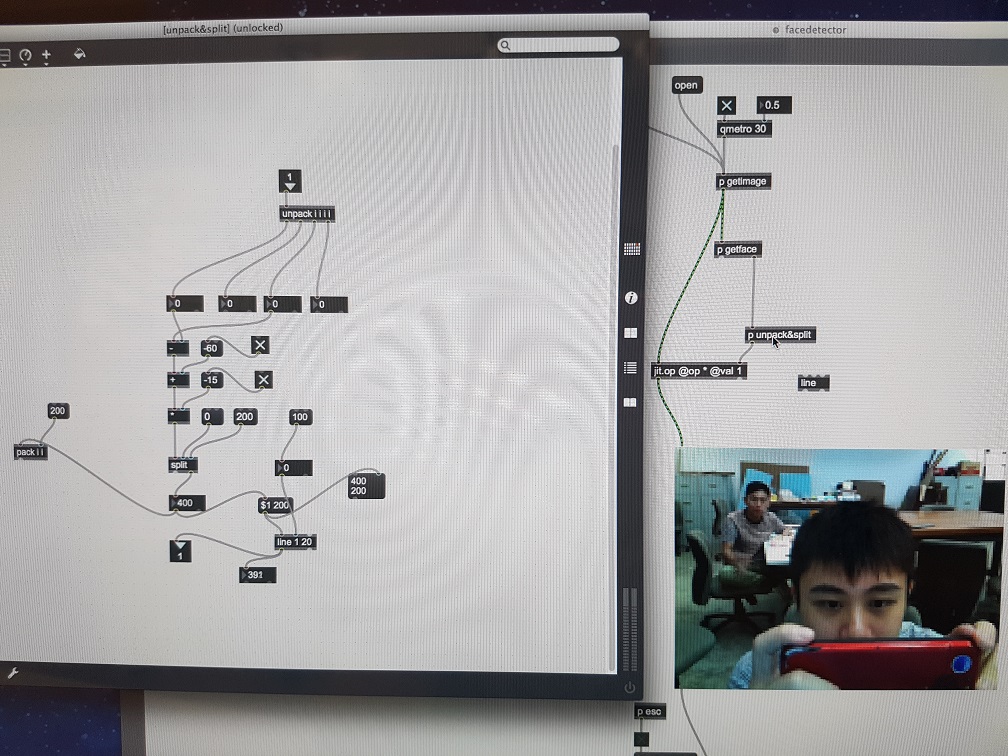

I also really like this part where I was filming what CherSee was filming. He was on the front camera while I was on the back camera, so I could see myself on his screen, and I was recording myself recording myself like a video-inception (left). But in the video he uploaded (right), it's only a one-way video, in which he only shoots my face trying to get into the center of the frame while filming him. This is really mind-boggling and cool in its own way; with both of us live videoing at the same time, it felt like real-time magic or something.

At the end of the 15 minutes, while I was walking back, I went back to the IM juniors' table, and I only noticed the difference when I rewatched the video. I was filming myself!! Compared to the start of this live video, where I was just filming the juniors, now I was in the video showing myself to the public. I was also talking much more. (At the start, I was just waving to them without saying hello and only briefly replied in short sentences about the live video; towards the end, I was just talking aimlessly.)

*Thanks Bridgel, Tisya and Sylvester for entertaining me.*

At the end of the video, I panicked slightly when I stopped it, as I was afraid that I would do something wrong and accidentally delete the footage. Since it was live, there was no way I could replicate it if it were deleted, so somehow a live video felt more precious to me than any other video I have taken.

After the Live Video:

Prof Randall showed us a 4×3 grid video wall with 12 of our videos playing at the same time. I love the way everything overlapped, yet none of the videos' timelines were in sync (the same exact event happened at different times on the video wall), and it was totally uncoordinated. In a sense, it was chaos, but there was a rhythm within the chaotic mass, which made it much more interesting than just looking at one single video at once. The visuals and audio of all 12 videos were overwhelming: just when I was focusing on a single video within the wall, I would suddenly hear something and glance around to find which video produced the sound, as there must be something interesting happening in it. This gave me a sense of treasure hunting.

In Conclusion:

I love the way that our individual 15-minute efforts were placed together to form a “something greater than yourself” piece of work. These 15 minutes of live video were really fun and enriching; they somehow changed me and gave me some confidence for the future Live videos I will be doing. At least I won't start a Live video with anxiety and get lost right after I click the red button.

Note to self: I should talk more in the live video.