Currently, the big idea is:

Playing around with Unreal:

Wanted to try out the water plugin with Unreal and also the idea of floating actors

However, at this point everything felt slightly flat, and I wanted helium-balloon-float, not water-float.

I also tried connecting Unreal with TouchDesigner via OSC, potentially for the mic input.

Space

While building my space, I wanted to just see what I could do with the free packages in the Marketplace.

The following are the ones I used:

- (mainly for the rocks) https://www.unrealengine.com/marketplace/en-US/product/soul-cave

- (pretty fireflies) https://www.unrealengine.com/marketplace/en-US/item/91e62feae7104ce6a2c471454cc4b683

- (other type of rocks) https://www.unrealengine.com/marketplace/en-US/product/paragon-agora-and-monolith-environment

- (fun torches etc) https://www.unrealengine.com/marketplace/en-US/product/a5b6a73fea5340bda9b8ac33d877c9e2

- https://www.unrealengine.com/marketplace/en-US/product/9efde82ef29746fcbb2cb0e45e714f43

And this is what I created:

I retained the water element, but wanted to build on it. During the week of building, I wanted to focus on lighting. Perhaps… because of this paragraph from a book I was reading (Steven Scott: Luminous Icons):

Obviously I am no Steven Scott, but I really like the idea of experiencing “something spiritual”, and it’s also in line with the ‘zen’ idea of my project. While my project has no direct correlation with “proportion”, I still wanted to try to incorporate this idea by building lights with the ‘correct’ colours (murky blue-ish) and manifestations (light shafts, fireflies).

Projection

You would think just trying to get it up on the screen would be easy: just make the aspect ratio the same as the 3 projectors combined… that’s what I thought too…
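As a quick sanity check on that "same aspect ratio as 3 projectors" idea, here is a rough sketch of the arithmetic in Python. The 1920×1080 resolution and the `overlap_px` parameter are assumptions for illustration; I have not stated the real projector specs here, and any edge-blend overlap would shrink the total width.

```python
from math import gcd

def combined_canvas(width, height, count, overlap_px=0):
    """Canvas size for `count` identical projectors placed side by side.

    overlap_px is the (hypothetical) edge-blend overlap between neighbours.
    """
    total_width = width * count - overlap_px * (count - 1)
    g = gcd(total_width, height)
    aspect = (total_width // g, height // g)
    return total_width, height, aspect

# Assumed example: three 1080p projectors, no overlap
print(combined_canvas(1920, 1080, 3))  # (5760, 1080, (16, 3))
```

So with those assumed numbers, the Unreal output would need to be 5760×1080, i.e. 16:3.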

Anyway summary:

Spout: doesn’t work with 4.26; the latest supported version is only 4.25

Syphon: Unreal doesn’t support it

Lightact 3.7: doesn’t Spout, and I couldn’t download it on my Mac or on the school’s PC, because it was considered unsafe due to its low number of downloads

BUT I did try nDisplay, which is built into Unreal

I could get it up on my monitor

I couldn’t get it up on the projectors, though. I think that could be down to my config files, and I need perhaps another week or so on this

Interface

With the interface, this was the main idea:

Plan 1 would have made everything seamless because it is all integrated into Unreal. But ofc

So I am back with Plan 2, via OSC.

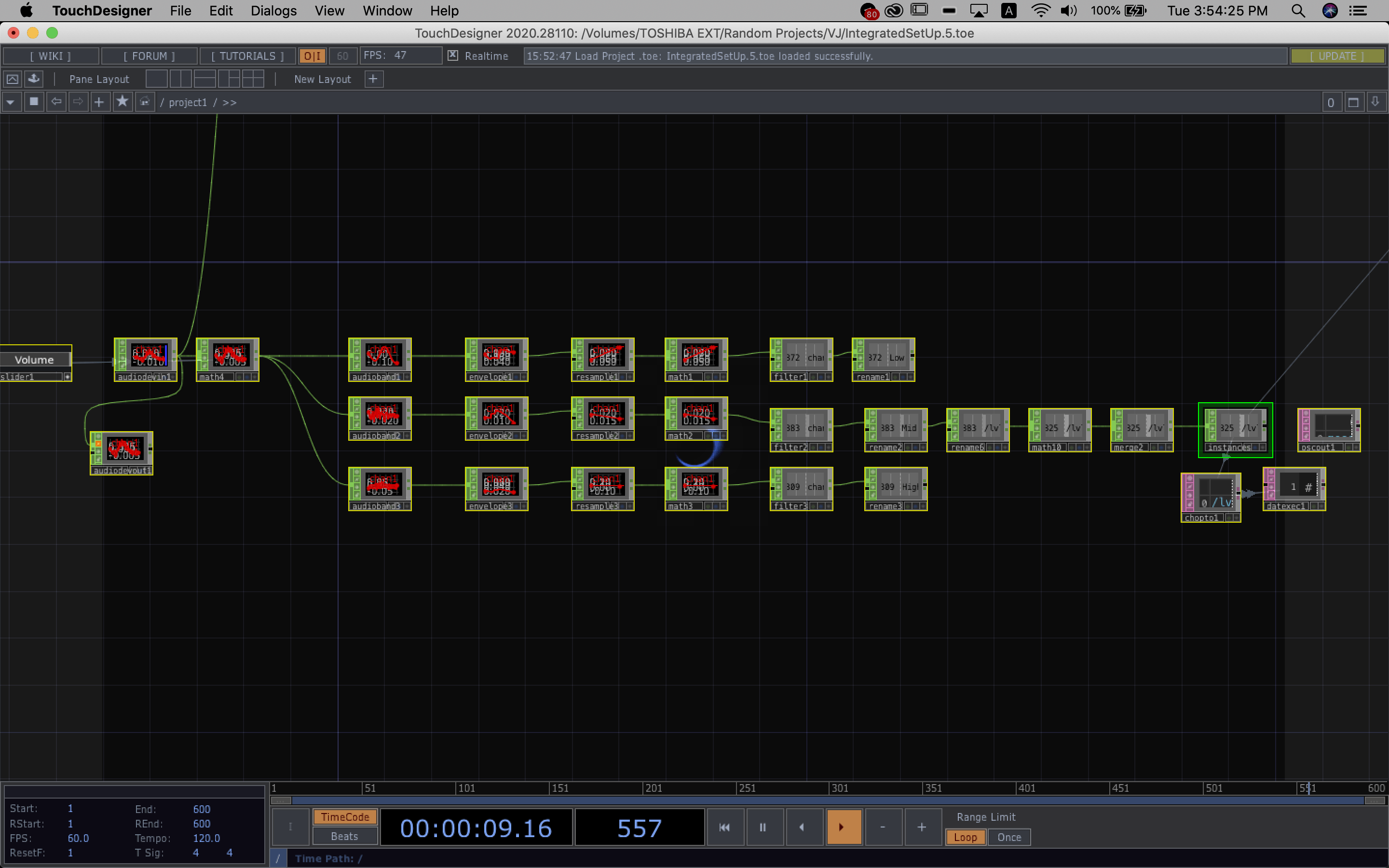
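For reference, the kind of message that travels over OSC is tiny. Below is a minimal sketch in plain Python (standard library only) of how a single float gets packed into an OSC message and sent over UDP. The address `/mic/mid`, the host and the port are made-up placeholders, not the values from my actual setup, and in practice TouchDesigner's OSC Out handles all of this for you.

```python
import socket
import struct

def osc_pad(text):
    """OSC strings are ASCII, null-terminated, and padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)

def encode_osc_float(address, value):
    """Build one OSC message carrying a single 32-bit big-endian float."""
    return osc_pad(address) + osc_pad(",f") + struct.pack(">f", value)

def send_osc_float(address, value, host="127.0.0.1", port=8000):
    """Send the message over UDP (host and port are placeholder assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc_float(address, value), (host, port))

message = encode_osc_float("/mic/mid", 0.5)
print(len(message))  # 20
```

Because OSC usually rides on UDP, there is no handshake and no delivery guarantee, which is worth keeping in mind when things look glitchy on the receiving side.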

On TouchDesigner’s end:

I have basically managed to split the audio that comes in via the mic into low, mid and high frequencies. For now, I’m only sending the mid frequency values over to Unreal because they represent the ‘voice’ values best. But I had to clamp the values because of the white noise present. I might potentially use the high and low values for something else, such as colours? We’ll see.

For now, one of the biggest issues I feel is the very obvious latency from TouchDesigner to Unreal.
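The clamping step can be sketched roughly like this in plain Python. The `noise_floor` and `ceiling` numbers are hypothetical; the real ones depend on the mic and the room, and in TouchDesigner itself this would more likely be done with CHOPs than with a script.

```python
def gate_and_clamp(value, noise_floor=0.05, ceiling=0.6):
    """Zero out anything at or below the noise floor, cap loud values,
    and rescale the rest to the 0..1 range.

    noise_floor and ceiling are assumed values for illustration only.
    """
    if value <= noise_floor:
        return 0.0
    clamped = min(value, ceiling)
    return (clamped - noise_floor) / (ceiling - noise_floor)

# Quiet hiss is dropped, a shout is capped at 1.0
print(gate_and_clamp(0.02))  # 0.0
print(gate_and_clamp(0.9))   # 1.0
```

Rescaling to 0..1 means Unreal only ever sees a clean, predictable range, whatever the mic level happens to be.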

But moving forward first, the next step would be to try getting an organic shape and also spawning the geometry only when a voice is detected.

Since I wanted the geometry to ‘grow’ in an organic way, I realised the best way to go about it would be to create a material that changes according to its parameters, rather than making an organic static mesh, because when a static mesh gets manipulated by the values it’s literally just going to scale up or down.

This is how I made the material:

Using noise to drive world displacement was the main thing that got the geometry ~shifty~. But as I think you can tell from the video, the latency and the glitching are really quite an issue. Once again moving on, the next thing is trying to spawn the actor in Unreal when a voice is detected.

For that, I am using a Button’s state in TouchDesigner. When a frequency hits a threshold, which I’m assuming comes from the voice, the button is activated. When the button is on (‘1’), the geometry should form and grow with the voice; when the button is off (‘0’), the geometry should stop growing.

Currently this is the button I have, but it’s still constantly flickering between ‘on’ and ‘off’, so I am starting to think maybe I should change it to ‘button clicks when a change in frequency is detected’ instead.
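Another option I could try for the flicker, which is not what I have built, is hysteresis: use a higher threshold to switch on and a lower one to switch off, so a value hovering around a single threshold can't keep toggling the button. A minimal sketch in plain Python, with made-up threshold values:

```python
class HysteresisGate:
    """Turns on at `on_at`, and only turns off again below `off_at`.

    Both thresholds are hypothetical; they would need tuning by ear.
    """

    def __init__(self, on_at=0.3, off_at=0.15):
        self.on_at = on_at
        self.off_at = off_at
        self.state = False

    def update(self, value):
        if not self.state and value >= self.on_at:
            self.state = True
        elif self.state and value < self.off_at:
            self.state = False
        return self.state

gate = HysteresisGate()
samples = [0.1, 0.32, 0.28, 0.31, 0.2, 0.1, 0.05]
print([gate.update(v) for v in samples])
# [False, True, True, True, True, False, False]
```

With a single threshold at 0.3, the same samples would flip on, off, on, off, which is exactly the flicker I am seeing.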
