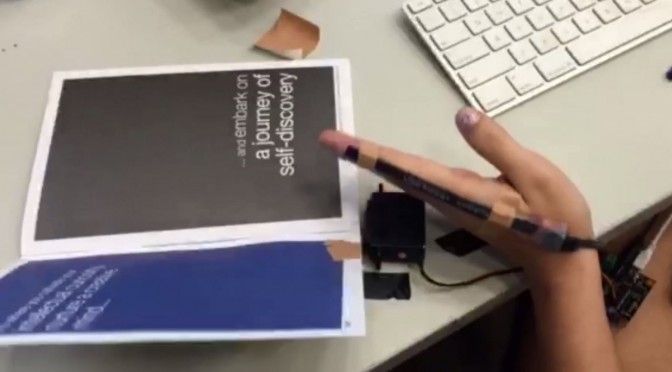

This is an experimental project that tricks people into sensing a ghost through what they see and hear when they interact with the “air”. They can only see the ghost on the screen, which shows the view from the top-down camera; they can’t see a “real” ghost beside them. This makes them skeptical of both the environment and the feedback.

https://www.youtube.com/watch?v=y7PTzvySZ4M

It’s programmed to detect the motionregion: when a person steps into a specific region, feedback is triggered, e.g. a ghost whisper, a box moving, a mannequin moving, or the ghost appearing in a different position according to where you stand. All of this feedback is triggered by where the person stands.
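To illustrate the idea of region-based triggering, here is a minimal sketch in Python. It is not the patch used in the project: it assumes simple frame differencing on small grayscale frames, and the region names, coordinates, and thresholds are hypothetical.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]           # grayscale image as rows of 0-255 values
Rect = Tuple[int, int, int, int]  # (left, top, right, bottom), right/bottom exclusive

# Hypothetical layout of an 8x8 top-down view; names and coordinates are illustrative.
REGIONS: Dict[str, Rect] = {
    "whisper": (0, 0, 4, 4),
    "box": (4, 0, 8, 4),
    "mannequin": (0, 4, 4, 8),
    "ghost": (4, 4, 8, 8),
}


def motion_fraction(prev: Frame, curr: Frame, rect: Rect, threshold: int = 30) -> float:
    """Fraction of pixels inside rect whose brightness changed by more than threshold."""
    left, top, right, bottom = rect
    changed = 0
    for y in range(top, bottom):
        for x in range(left, right):
            if abs(curr[y][x] - prev[y][x]) > threshold:
                changed += 1
    area = (right - left) * (bottom - top)
    return changed / area if area else 0.0


def triggered_regions(
    prev: Frame,
    curr: Frame,
    regions: Dict[str, Rect] = REGIONS,
    min_fraction: float = 0.25,
) -> List[str]:
    """Names of the regions where enough pixels changed between two frames."""
    return [
        name
        for name, rect in regions.items()
        if motion_fraction(prev, curr, rect) >= min_fraction
    ]


# Usage: an empty floor, then a bright blob (a person) stepping into the "box" region.
empty = [[0] * 8 for _ in range(8)]
person = [row[:] for row in empty]
for y in range(1, 3):
    for x in range(5, 7):
        person[y][x] = 200

print(triggered_regions(empty, person))
```

Each triggered name could then be mapped to its own feedback, such as playing a whisper or moving the box.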

Standing position affects how the ghost appears because, when a specific region is triggered, the dumpframe selects the corresponding part of the video and shows that specific moving image.
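The frame selection can be pictured roughly like this. It is only a sketch, assuming the ghost footage is one pre-recorded clip split into a segment per region; the segment names and frame ranges are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical segments of the pre-recorded ghost clip:
# region name -> (first_frame, last_frame), both inclusive.
SEGMENTS: Dict[str, Tuple[int, int]] = {
    "left": (0, 89),
    "centre": (90, 179),
    "right": (180, 269),
}


def frame_for(
    region: str,
    ticks_since_trigger: int,
    segments: Dict[str, Tuple[int, int]] = SEGMENTS,
) -> int:
    """Index of the clip frame to show, looping inside the triggered region's segment."""
    first, last = segments[region]
    length = last - first + 1
    return first + ticks_since_trigger % length


# Usage: five ticks after someone steps into the centre region.
print(frame_for("centre", 5))
```

Looping inside the segment keeps the ghost moving for as long as the person stays in that region.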

Alphablend: a pre-recorded video is overlaid on another video that is being recorded live. The good thing is that the colour can be adjusted to the extent that I want.
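As a rough sketch of what alpha blending does per pixel, the Python below mixes a pre-recorded ghost pixel with a live camera pixel as `alpha * ghost + (1 - alpha) * live`. The `tint` parameter is my own assumption to stand in for the colour adjustment; it is not taken from the actual patch.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def blend_pixel(
    ghost: Pixel,
    live: Pixel,
    alpha: float,
    tint: Tuple[float, float, float] = (1.0, 1.0, 1.0),
) -> Pixel:
    """Mix one ghost pixel over one live pixel; tint scales the ghost's colour channels."""
    alpha = max(0.0, min(1.0, alpha))
    mixed = []
    for g, l, t in zip(ghost, live, tint):
        value = alpha * g * t + (1.0 - alpha) * l
        mixed.append(max(0, min(255, round(value))))
    return (mixed[0], mixed[1], mixed[2])


def blend_frame(
    ghost: List[List[Pixel]],
    live: List[List[Pixel]],
    alpha: float,
    tint: Tuple[float, float, float] = (1.0, 1.0, 1.0),
) -> List[List[Pixel]]:
    """Blend two frames of the same size pixel by pixel."""
    return [
        [blend_pixel(g, l, alpha, tint) for g, l in zip(ghost_row, live_row)]
        for ghost_row, live_row in zip(ghost, live)
    ]


# Usage: a half-transparent ghost with its red channel halved.
print(blend_pixel((200, 200, 200), (100, 100, 100), 0.5, tint=(0.5, 1.0, 1.0)))
```

A lower `alpha` makes the ghost fainter against the live image, and the tint shifts its colour without touching the live footage.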

https://www.youtube.com/watch?v=cwGZaqwpCGs

A phidget and a top-down camera are also used in this project to produce the ghost’s feedback.

Done by Chou Yi Ting