Last semester, I did a reading assignment on the book Information Arts: Intersections of Art, Science and Technology by Stephen Wilson. The book has many interesting sections, but I only managed to cover one last time, so this time I have decided to do the reading assignment on another section: Robotics and Kinetics.

The section starts with the history and definition of robots, followed by the research done on them and some examples. According to the book, the term ‘robot’ was coined by Karel Capek in 1917 and comes from the Czech word ‘robota’, which means obligatory work or servitude. Capek imagined robots as artificial humanoid machines produced in great numbers as a source of cheap labour, but robots today have strayed far from that original definition. To me, robots are cool machines that can be programmed to do a variety of things, and with artificial intelligence built into them, they can seem almost invincible.

Before robots, there were automata: simple machines built to perform repetitive actions.

But with technological advancements, we now have robots like Sophia and many other lifelike humanoids.

In this section of the book, the author describes the areas of robotics research that researchers were focusing on at the time. Although the information may be a little outdated, since the book was written in 2002, I believe these areas of research led to the smarter and more lifelike robots we have today, such as Sophia. The areas were: Vision, Sophisticated Motion, Autonomy, Top-Down vs. Bottom-Up Subsumption Architectures, Social Communication between Robots, and Humanoid Robots. I was most interested in social communication between robots, so I looked up the examples given in the book.

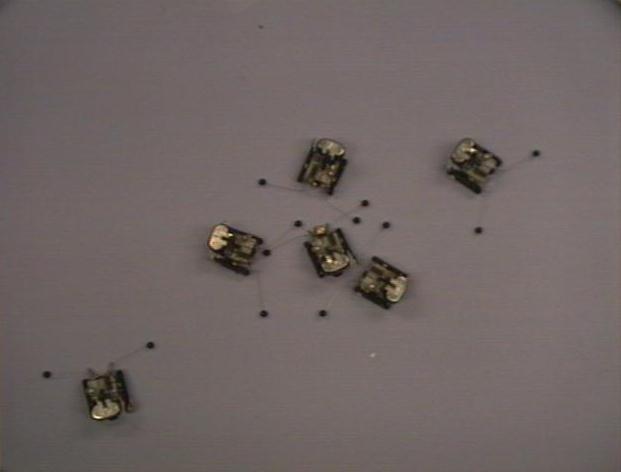

MIT’s The Ants: A Community of Microrobots

Inspired by ant colonies, James McLurkin set out to create a community of robots. By April 1995, McLurkin and his colleagues at MIT had built six robot ants that pushed the limits of microrobotics by packing many sensors and actuators into a very small body. Each ant has IR (infrared) emitters and sensors, which let the ants communicate with one another, much like real ants.

For example, the ants can be programmed so that when one ant finds ‘food’, it emits an IR signal to the ants around it. Those ants then move towards the first ant while passing the message on by emitting IR signals of their own.
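Since I mentioned making my own robot ant, here is a rough Python sketch of how the ‘food’ relay described above could work. It is only my guess at the idea, not MIT's actual code: the ant positions, the `IR_RANGE` value and the `relay_food_signal` function are all made up for illustration, and I'm treating IR as a simple circle of fixed radius. Each ant that hears the message passes it on once, so the signal spreads outward hop by hop (a breadth-first flood), and each ant remembers which neighbour it heard from so it knows which way to head.

```python
from collections import deque
from math import dist

# Hypothetical IR communication radius in centimetres (an assumption,
# not a figure from the MIT project).
IR_RANGE = 30.0


def relay_food_signal(positions, finder, ir_range=IR_RANGE):
    """Flood a 'food found' message outward from the ant `finder`.

    positions maps ant id -> (x, y). Every ant that hears the message
    re-broadcasts it exactly once. Returns a dict mapping each ant that
    received the message to (hops, heard_from), where heard_from is the
    neighbour it should move towards (None for the finder itself).
    """
    received = {finder: (0, None)}
    queue = deque([finder])
    while queue:
        sender = queue.popleft()
        hops, _ = received[sender]
        for ant, pos in positions.items():
            if ant in received:
                continue  # each ant relays the message only once
            if dist(positions[sender], pos) <= ir_range:
                received[ant] = (hops + 1, sender)
                queue.append(ant)
    return received


# Usage: ant A finds food; D is too far from everyone to hear it.
ants = {"A": (0, 0), "B": (20, 0), "C": (45, 0), "D": (200, 200)}
print(relay_food_signal(ants, "A"))
# {'A': (0, None), 'B': (1, 'A'), 'C': (2, 'B')}
```

C is out of A's range but still gets the message through B, which is the ‘passing it down’ behaviour from the book, while D never hears anything, just like a real ant outside IR range of the group.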

The ants can also be programmed to play games such as tag, where one ant starts off as ‘it’ and tries to find and bump into another ant, which then becomes ‘it’ instead. In the video above, you can see the robot ants taking turns being ‘it’ after bumping into each other.
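The rules of robot tag can be written down in a few lines too. This sketch is my own simplification with a hypothetical `play_tag` function: it acts like a referee who sees every bump, whereas the real ants would have to detect bumps with their own sensors and use IR messages to work out who is ‘it’.

```python
def play_tag(first_it, bumps):
    """Return the list of ants that were 'it', in order, starting with first_it.

    bumps is a list of (ant_a, ant_b) collisions. A bump only matters if
    one of the two ants is currently 'it'; the other ant then becomes 'it'.
    """
    it = first_it
    history = [it]
    for a, b in bumps:
        if it == a:
            it = b
        elif it == b:
            it = a
        else:
            continue  # two ants that are not 'it' bumped: nothing changes
        history.append(it)
    return history


# Usage: the C-D bump is ignored because neither of them is 'it' at that point.
print(play_tag("A", [("A", "B"), ("C", "D"), ("C", "B"), ("C", "A")]))
# ['A', 'B', 'C', 'A']
```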

Besides tag, the robot ants can also play follow the leader and manhunt.

University of Reading’s 7 Dwarf Robots

The video above shows flocking (follow the leader) behaviour in five of the seven dwarf robots. The seven dwarf robots were built by Kevin Warwick and his team between 1995 and 1999, and they communicate using IR signals. They can also learn, through reinforcement learning, to move around without bumping into objects.
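I don't know which learning algorithm the Reading team actually used, so the sketch below swaps in plain tabular Q-learning, one common form of reinforcement learning, on a made-up toy world. The robot's sensor reads either ‘clear’ or ‘obstacle’, it can either go ‘forward’ or ‘turn’, and the reward values, the `train` function and all its parameters are assumptions for illustration. Bumping into an obstacle is punished, so over time the robot should learn to turn whenever something is in front of it.

```python
import random

# Hypothetical toy world: each step the sensor reads "clear" or "obstacle"
# ahead, and the robot chooses "forward" or "turn". Not the real robots' setup.
STATES = ["clear", "obstacle"]
ACTIONS = ["forward", "turn"]


def reward(state, action):
    """Assumed rewards: progress is good, bumping is bad, turning is neutral."""
    if action == "forward":
        return 1.0 if state == "clear" else -1.0
    return 0.0


def train(episodes=5000, alpha=0.1, gamma=0.5, epsilon=0.2, p_obstacle=0.3, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy (hypothetical parameters)."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = "clear"
    for _ in range(episodes):
        # Mostly pick the best-known action, but sometimes explore at random.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])
        r = reward(state, action)
        # In this toy world the next sensor reading is random,
        # independent of the chosen action.
        next_state = "obstacle" if rng.random() < p_obstacle else "clear"
        best_next = max(q[(next_state, a)] for a in ACTIONS)
        # Q-learning update: nudge the estimate towards reward + discounted future value.
        q[(state, action)] += alpha * (r + gamma * best_next - q[(state, action)])
        state = next_state
    return q


q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}
print(policy)  # {'clear': 'forward', 'obstacle': 'turn'}
```

Nobody tells the robot the rule ‘turn when blocked’; it works that out from the rewards alone, which is the basic idea behind learning to avoid obstacles.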

In conclusion, I think it’s interesting to see how machines have progressed from simple hand-cranked automata to microrobots with multiple built-in sensors, and now to self-learning AI humanoids. With more research and development, we might one day see robots that are fully integrated into our society! For now, though, I could use what I’ve learnt about robots in my own projects, or even build my own robot ant.

References:

Wilson, Stephen. Information Arts: Intersections of Art, Science and Technology. Cambridge, MA: The MIT Press, 2002.

http://www.ai.mit.edu/projects/ants/

http://news.mit.edu/1995/ants

http://www.soc.napier.ac.uk/~bill/bcs_agents/sgai-2004.pdf

http://www.bbc.co.uk/ahistoryoftheworld/objects/0-6sufjRSnGw70a2l2TuvQ

https://blogs.3ds.com/northamerica/wp-content/uploads/sites/4/2019/08/Robots-Square-610x610.jpg