Context

Upon completing our mid-term projects, we were tasked with developing our concepts further with the help of technology for the final project. My mid-term project was titled Dis-harmony, a simple anamorphic perspective installation of a bonsai tree made out of found objects. The concept behind it was simple: to find harmony in the chaos that Covid-19 caused in 2020. The idea of realigning ourselves to the ‘correct perspective’ and relocating the balance in our lives during this pandemic period led me to develop ‘the mind, the body and the sounds around us’.

Using a similar concept but a different approach, it incorporated the idea of spatial awareness and repositioning to find harmony through audio rather than visuals. In the project description, I explained that it was an attempt to bridge physical and psychological space, where we follow our innate inclination to make sense of the sounds around us.

First Iteration

The first version revolved around the idea of transitioning found shadows according to how each person repositioned themselves in the space. This meant a smooth transition of shadows, possibly paired with background music, to give the effect of ‘harmonizing’ the space as people occupied different parts of it. The main trouble I ran into was the inability to acquire similar shadow effects that synced with one another. I first tasked myself with creating a video, leaving out the interactive portion, to get a rough understanding of how it would work, but it was difficult to source such material in the first place. Initially, I had wanted people to model the shadows for me, but with the short amount of time I had left, this was not a viable solution. As such, I had to rethink the idea and take a different approach to shadow manipulation.

Second Iteration

I wanted to make responsive generative patterns that would link the movements of the participants to the projection. The idea was that our actions would create a visual harmony in space: an interesting, almost generative method, except that the result could be predicted from the movements in the space.

I really liked this idea and would pursue it further if given more time, which was its main limitation. It did, however, also involve Processing and the Kinect, two tools that I was not particularly comfortable with. The Kinect sensor was recommended by Bryan, our Interactive Spaces work study, who suggested that it could be useful for tracking real-time positioning. As such, I spent the bulk of my time on tutorials about the Kinect and the software it can work with, including OpenFrameworks, Processing, Unity3D and many others.

The depth and possibilities of the Kinect were vast, and it opened up many opportunities to develop the project. More specifically, I was drawn to the idea of capturing raw depth data and translating it into different elements. In an online tutorial on YouTube, Daniel Shiffman used raw depth data to calculate the average of pixels and used that to create particle effects in Processing.
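To illustrate the idea, here is a minimal sketch in plain Python. It is not Processing and not Shiffman's actual code. It assumes that ‘the average of pixels’ means the average position of the pixels whose raw depth falls within a chosen range; the function name, the flat row-by-row depth list and the threshold values are all hypothetical choices made for illustration.

```python
def average_point(depth, width, min_depth, max_depth):
    """Return the mean (x, y) of pixels whose raw depth is in
    [min_depth, max_depth), or None if no pixel qualifies.

    `depth` is a flat list of raw depth values, stored row by row
    (an assumption about the layout, made for this sketch).
    """
    sum_x = 0
    sum_y = 0
    count = 0
    for i, d in enumerate(depth):
        if min_depth <= d < max_depth:
            sum_x += i % width   # column of pixel i
            sum_y += i // width  # row of pixel i
            count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


# A tiny 4x3 'frame': everything far away except two nearby pixels.
frame = [2000] * 12
frame[5] = 600  # pixel at (1, 1)
frame[6] = 600  # pixel at (2, 1)
point = average_point(frame, width=4, min_depth=300, max_depth=1000)
```

The resulting point could then serve as the position from which particles are emitted or attracted, which is roughly how a participant's body could drive a projection.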

Additionally, I found that Elliot Woods from KimchiAndChips had made significant progress in using the Kinect and calibrating it to projections, creating interesting interactions such as, but not limited to, the manipulation of light shown in this video – Virtual Light Source. Due to my lack of understanding of Processing, the coding of the Kinect and generative modules did not come to fruition.

Final Iteration

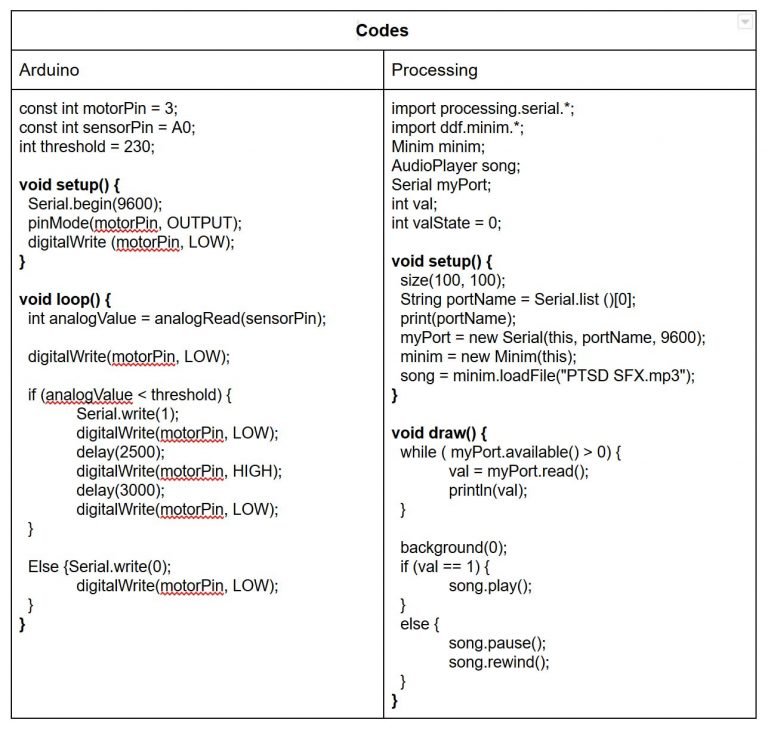

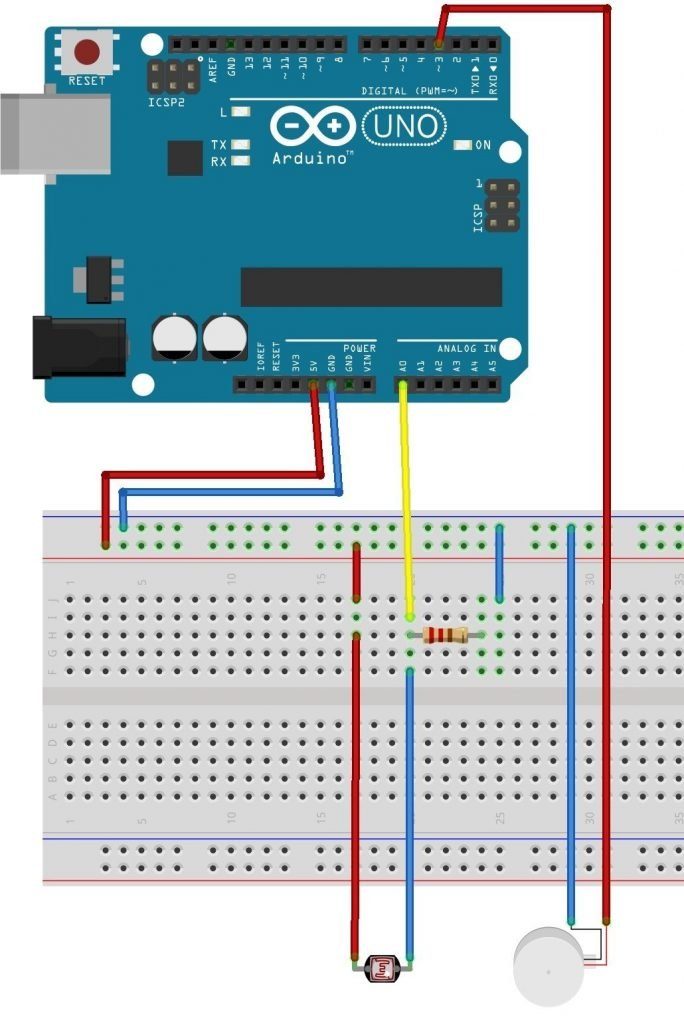

The final development was actually discovered through a series of ‘mistakes’. Whilst trying out colour schemes in Processing, instead of creating uniform colour changes, much like a rainbow effect, I accidentally made a screen of white noise by using color(random(255)). During the experimentation the effect grew on me, and I finalized it once I added the audio and found that the two went well together. I chose to add audio because it was difficult to manipulate the visuals on screen; using the Minim library, I was able to choose songs and add them to the Kinect projection. My impression was that, with the code I had written, the audio would play whenever depth data was sensed on the screen. This was a two-outcome programme: play or no play. However, after testing the code, I realized that the audio reacted to the amount of data being fed into the screen. When the screen was completely filled, the audio played seamlessly; otherwise, it was choppy and incoherent, even annoying at some points. This aligned well with my idea of ‘making sense’, and through audio I was able to create an unconventional effect that I had not initially pictured but that worked in my favour.
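One possible way to picture this behaviour is sketched below in plain Python. This is not the project's actual code and does not use Minim; it is a simplified model under my own assumptions. It supposes that the audio is allowed to play only on frames where enough of the screen contains depth data, so a fully filled screen plays continuously while a partly filled one keeps cutting in and out. The function names, the depth range and the 0.9 threshold are all hypothetical.

```python
def coverage(depth, min_depth, max_depth):
    """Fraction of pixels whose raw depth is in [min_depth, max_depth)."""
    if not depth:
        return 0.0
    hits = sum(1 for d in depth if min_depth <= d < max_depth)
    return hits / len(depth)


def playback_states(frames, min_depth, max_depth, threshold):
    """For each frame, True if the audio would be playing, else False."""
    return [coverage(f, min_depth, max_depth) >= threshold for f in frames]


def count_interruptions(states):
    """Number of times playback drops from playing to not playing."""
    return sum(1 for prev, cur in zip(states, states[1:]) if prev and not cur)


# Two hypothetical 8-pixel frames: one fully filled, one half filled.
full = [600] * 8
half = [600] * 4 + [2000] * 4

steady = playback_states([full, full, full, full], 300, 1000, threshold=0.9)
choppy = playback_states([full, half, full, half], 300, 1000, threshold=0.9)
```

In this model the steady sequence never interrupts the audio, while the alternating sequence interrupts it twice, which matches the ‘seamless versus choppy’ contrast described above. In a Processing sketch, the same gate would presumably decide when to call play or pause on the audio player.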

Music – Sæglópur by Sigur Rós

This project encourages us to slow down and reflect on the ‘sounds’ around us, the noise and discomfort caused by the adversities we currently face, and to ‘piece’ them together by acknowledging them and finding comfort and serenity through various means. Instead of running away, we hear these noises and look within ourselves for a place of solitude.