Final PowerPoint Presentation: PPT Link Here

The Uncanny Valley

What is it?

The “Uncanny Valley” is a characteristic dip in emotional response that happens when we encounter an entity that is almost, but not quite, human. It was first hypothesised in 1970 by the Japanese roboticist Masahiro Mori, who proposed that as robots became more human-like, people would find them more acceptable and appealing than their mechanical counterparts. But this only held true up to a point.

When robots were close to, but not quite, human, people developed a sense of unease and discomfort. If human-likeness increased beyond this point, so that the entity became very close to human, the emotional response became positive again.

This is a simplified graph taken from Frank E. Pollick’s paper “In Search of the Uncanny Valley”. It is this distinctive dip in the relationship between human-likeness and emotional response that is called the uncanny valley.

Mori’s theory is difficult to verify systematically. But the feelings we experience when we meet an autonomous machine are certainly tinged with both incomprehension and curiosity. So I agree with Mori’s theory of the Uncanny Valley, BUT only to a certain extent.

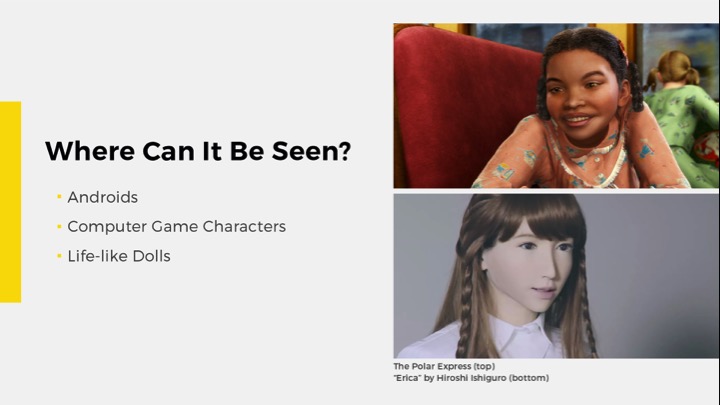

Where Can it be Seen?

Anything with a highly human-like appearance can be subject to the uncanny valley effect, but the most common examples are androids, computer game characters and life-like dolls. However, not all near-human robots are eerie. Personally, I think that even though people have come up with criteria for it, the perception of eeriness varies from person to person: what people find eerie and weird is very subjective.

What evidence exists for the effect, and what properties of near-humans might make us feel so uncomfortable? It is interesting to see how people use the Uncanny Valley graph to develop their own theories. From my reading and research, these are the three theories that seem most promising to me.

Top 3 Debate Theories

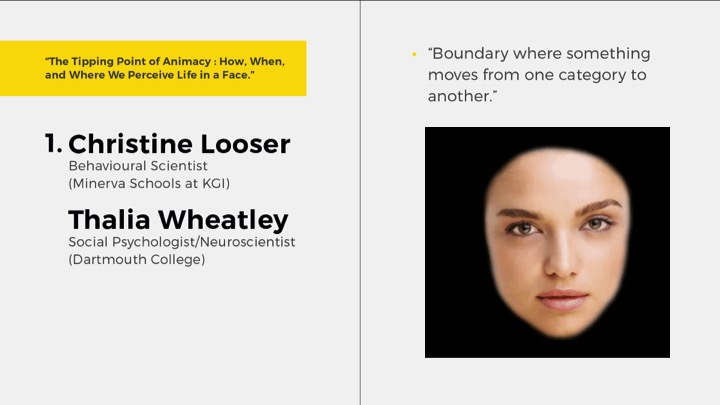

1) Christine Looser and Thalia Wheatley

First, the uncanny valley might occur at the boundary where something moves from one category to another, in this case, between non-human and human. They looked at mannequin faces that were morphed into human faces and found a valley at the point where the inanimate face started to look alive.

2) Kurt Gray and Daniel Wegner

Second, the presence of a valley may hinge on whether we believe that near-human entities possess a mind like ours. A study by Gray and Wegner found that robots were only unnerving when people thought they had the ability to sense and experience things; robots that did not seem to possess a mind were not frightening. In other words, it is the perceived capacity to feel and sense, rather than the capacity to act and do, that unsettles us.

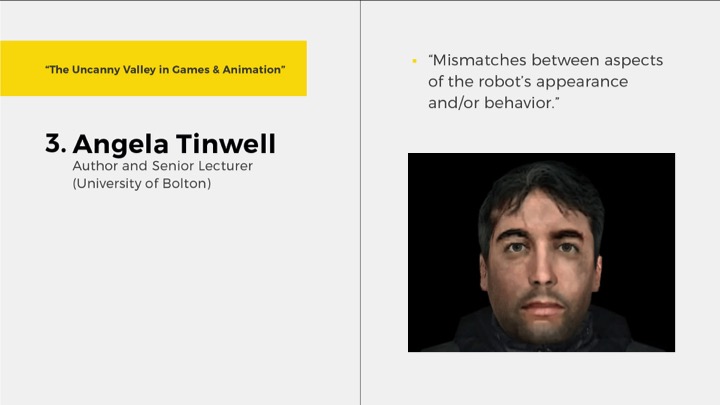

3) Dr. Angela Tinwell

A final compelling area for future research is the idea that the uncanny valley occurs because of mismatches between aspects of a robot’s appearance and/or behavior. Tinwell’s work has looked at many types of mismatch, including speech synchronisation, speech speed and facial expressions. In a 2013 study, near-human agents that reacted to a startling noise by showing surprise only in the lower part of their face (not the upper part) were found to be particularly eerie.

Case Studies

1) Geminoid by Hiroshi Ishiguro

The first case study I would like to discuss is Geminoid, a robot made by Hiroshi Ishiguro. Basically, he made a copy of himself. Ishiguro is among those who believe that androids can bridge the uncanny valley by increasing human-like appearance and motion. Aside from its realistic hair and skin texture, Geminoid was also designed to perform common involuntary human micro-movements, such as constant body shifting, blinking and breathing, to appear more natural. Air actuators, driven by an air compressor, were used to produce motion without mechanical noise.

Ishiguro controls the robot remotely, through his computer, using a microphone to capture his voice and a camera to track his face and head movements. When Ishiguro speaks, the android reproduces his intonations; when Ishiguro tilts his head, the android follows.

As technology advanced, roboticists shifted their focus away from the appearance of human-like entities and began exploring the robot’s mental capabilities as a basis for observers’ discomfort. This is where so-called “Artificial Intelligence” comes in, which brings me to my second case study.

2) Sophia by Hanson Robotics

Designed by Hanson Robotics, Sophia has incredibly dynamic facial expressions and communication skills, going further than Ishiguro’s Geminoid. Dr David Hanson suggests that robots like Sophia will be able to fill numerous job positions within human society.

Hanson is approaching Sophia with the mindset that she is AI “in its infancy”, with the next stage being artificial general intelligence, or AGI, something humanity hasn’t achieved yet.

Sophia has three different control systems: the Timeline Editor, which is basically straightforward scripting software; the Sophisticated Chat System, which allows Sophia to pick up on and respond to key words and phrases; and OpenCog, which grounds Sophia’s answers in experience and reasoning.

Comparison

Sophia has been touted as the future of AI, but it may be more of a social experiment masquerading as a PR stunt.

Is it the robot itself that is uncanny, or the way it is controlled, like a modern ventriloquist’s dummy? Let’s compare how these two robots were staged and presented to the public and the media to make them seem “real”.

1) Geminoid was introduced at Ars Electronica, where the robot was placed in front of visitors while its operator was hidden behind the scenes. The team wanted to use this real-world setting to explore the uncanny valley with Geminoid.

Regarding the visitors’ feelings, fear was the predominant emotion. This reaction is clearly related to the uncanny valley hypothesis because, unlike anger, for example, fear indicates a submissive tendency to withdraw from a threatening or unfamiliar situation. Geminoid’s lack of facial expressivity and its limited ability to produce situation-appropriate social signals (such as a smile or laughter) seem to impede a person’s ability to predict the flow of the conversation.

2) While popular in the media, Sophia has received strong criticism from the AI community. Joanna Bryson, a researcher in AI ethics at the University of Bath, called Sophia “obvious bullshit” last October, a sentiment upgraded in January to “complete bullshit” by Yann LeCun, then director of Facebook AI Research.

UN Deputy Secretary-General Amina Mohammed interviews Sophia on the floor of the UN General Assembly during a joint meeting. The dialogue is entirely scripted, but this is not disclosed during the session. It is striking that the DSG pokes and makes faces at Sophia’s hand movements, a decidedly undiplomatic gesture from a high-ranking professional diplomat. This suggests that she does not take the interaction entirely seriously.

Then there was an interview with Sophia at the Future Investment Initiative in Saudi Arabia. When the interview was over, the journalist disclosed that the conversation had been partially scripted. He then announced that Sophia had been awarded the first “Saudi citizenship for a robot”. In a clearly scripted moment, Sophia immediately delivered an acceptance speech honoring the Kingdom of Saudi Arabia.

Have you seen the video of Will Smith trying to date Sophia? It was so awkward and scripted. My own issues with Sophia center on the use of scripted, prepared remarks in her public performances without clear disclosure, which sets her apart from Geminoid. This staging is itself very uncanny.

Hanson Robotics has essentially taken an advanced facial-expression robot and presented it to investors and the mass media as something it isn’t. It is an attempt to sensationalize an important but small step as a world-changing revolution for personal gain.

Can we bridge the Uncanny Valley?

Creating near-human robots that can portray genuinely believable emotional expressions presents a highly complex technological challenge. So while this tension between appearance and emotional interaction may currently confine robots to the uncanny valley, it could also offer a way out.

An alternative would be for robot designers to avoid mimicking human faces too closely until technology has progressed enough to represent robots’ feelings realistically as well. Otherwise, a robot’s human-like appearance simply raises our expectations about its emotions, and its limited expressive ability then fails to meet them.

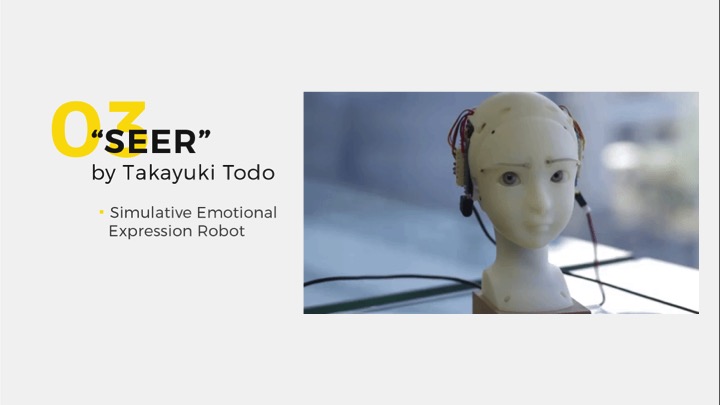

Takayuki Todo developed a new robot called “SEER” (Simulative Emotional Expression Robot), which can recognize the facial expressions of the people it interacts with and mirror those expressions back at them. To me, this is an incredibly expressive robot.

Culture may also play a part. In Japan, artificial forms are already more prevalent and accepted than they are in places like the US. There have been a couple of synthetic pop stars in Japan. Perhaps the uncanny valley can be traversed in other parts of the world via the increasing prevalence of androids. Maybe we’ll all just get used to them.

References

Kang, Minsoo. “The Ambivalent Power of the Robot.” Antenna: The Journal of Nature in Visual Culture 9 (March 2009).

Pollick, Frank E. “In Search of the Uncanny Valley.” University of Glasgow, 2009.

Stein, Jan-Philipp, and Peter Ohler. “Venturing into the Uncanny Valley of Mind: The Influence of Mind Attribution on the Acceptance of Human-Like Characters in a Virtual Reality Setting.” Cognition 160 (2017): 43-50.