Getting the scene layout right is one thing, but lighting the scene is another, and on top of that, working the camera (its focal length and its movement) is another aspect again. Here’s an example of how Scene 4 has progressed over time.

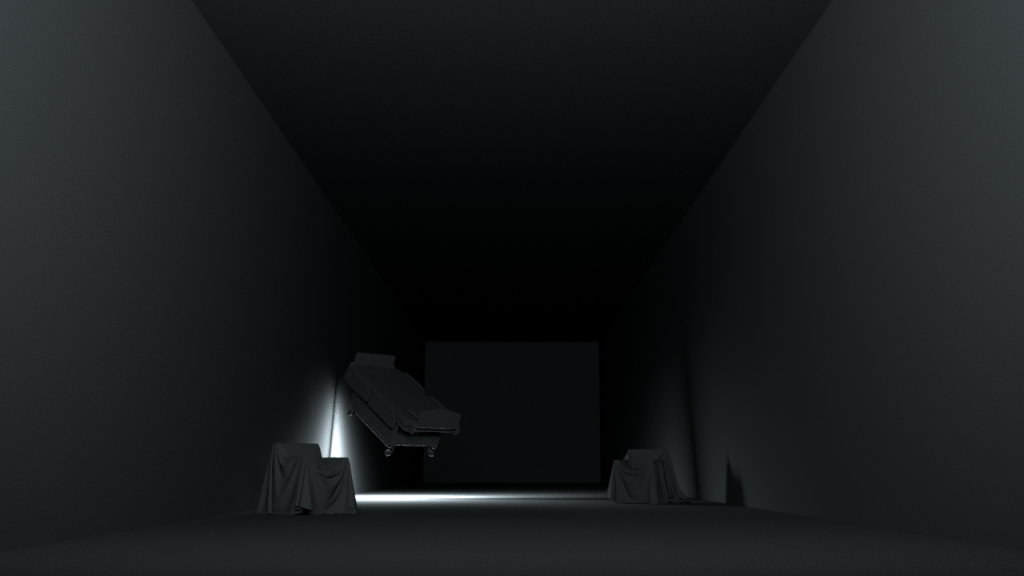

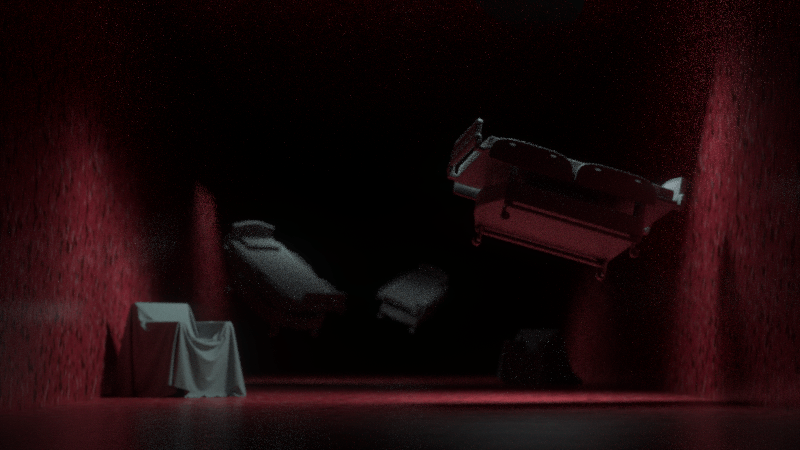

This was before any real work had been done, but there was already a concept for how the light would propagate through the room.

My initial idea was that the light would come from the back of the room, shining into the audience’s face. Shafts of light (God rays) would stream past the objects and through fog to create this grand image.

However, that did not work out the way I planned. For one, the lights kept leaving hard dark lines wherever they intersected with geometry, mainly the room. You can even see it in the image above. This made me venture into IES lights, which (surprisingly) removed that problem, but I still could not achieve the look I wanted. From this point on, IES lights were also my go-to method for lighting. In short, IES lights use real-world light distribution and falloff data to replicate specific lamps or bulbs. Mainly used in architectural renders for bulb-specific accuracy, they’ve gone on to help other CG artists achieve better realism in their images, simply because the light falloff isn’t as uniform as with default CG lights.
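To make the difference a bit more concrete, here is a minimal Python sketch, not taken from the actual scene setup. It assumes a simplified point light that emits equally in every direction with inverse-square distance falloff, and compares it with a light whose intensity depends on the angle from the lamp's axis, which is the kind of measured table an IES profile stores. The `PROFILE` values and all function names are made up for illustration.

```python
import bisect

# Hypothetical photometric table: (angle from the lamp's axis in degrees, intensity in candela).
# Real IES files carry measured tables like this; these numbers are invented for the example.
PROFILE = [(0.0, 1000.0), (15.0, 900.0), (30.0, 600.0),
           (45.0, 250.0), (60.0, 50.0), (90.0, 0.0)]


def profile_intensity(angle_deg, profile=PROFILE):
    """Linearly interpolate the intensity at angle_deg, clamping outside the table."""
    angles = [a for a, _ in profile]
    if angle_deg <= angles[0]:
        return profile[0][1]
    if angle_deg >= angles[-1]:
        return profile[-1][1]
    i = bisect.bisect_right(angles, angle_deg)
    (a0, c0), (a1, c1) = profile[i - 1], profile[i]
    t = (angle_deg - a0) / (a1 - a0)
    return c0 + t * (c1 - c0)


def uniform_light(intensity, distance):
    """Simplified point light: same intensity in every direction, inverse-square falloff."""
    return intensity / distance ** 2


def ies_like_light(angle_deg, distance, profile=PROFILE):
    """Same inverse-square falloff, but the intensity varies with the angle."""
    return profile_intensity(angle_deg, profile) / distance ** 2


if __name__ == "__main__":
    for angle in (0, 22.5, 45, 60):
        print(angle, uniform_light(1000.0, 2.0), ies_like_light(angle, 2.0))
```

With this made-up table, the uniform light gives the same value at every angle, while the profile-driven light dims as the angle moves away from the lamp's axis, which is roughly where the more natural-looking pools of light come from.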

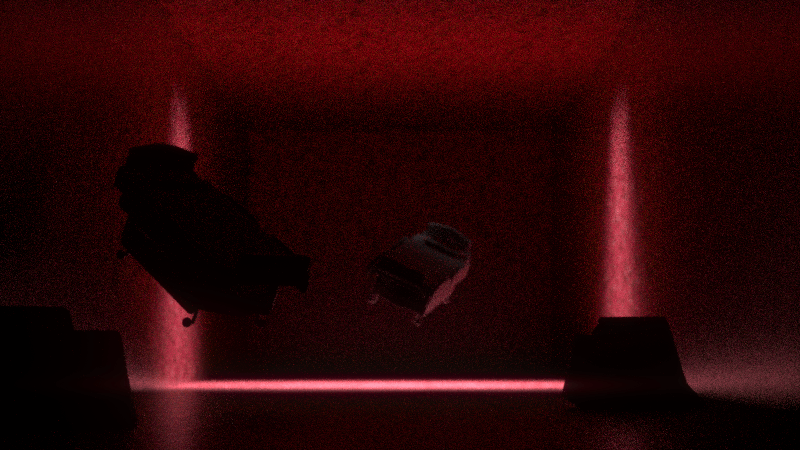

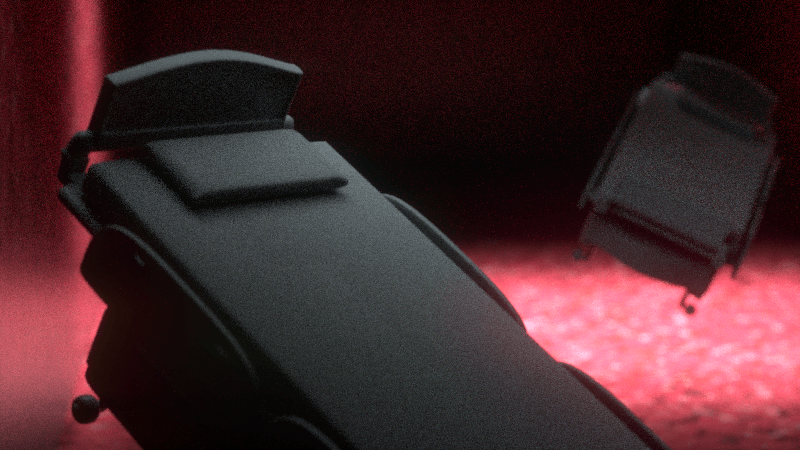

I tried to work this lovely scene for some time. The camera angles weren’t exactly working, and the lighting wasn’t where I wished it were. I didn’t even like the way the objects in the scene were laid out (especially those damn chairs). But it still had parts that I liked; for example, the light that bounces off the walls onto the middle bed looked good. Image composition was still rather poor, however; it was sorely lacking a foreground element. Hence my next move was to slap another bed into the scene. I also had to relight the new portion of the room, but this time I knew that I wanted a sort of rim light to catch the foot end of the bed frame; and by miracle of miracles, the scene just started to look better.

Additionally, I started to explore other techniques such as focal drift. I wouldn’t set the focus exactly on the object; I’d push it a bit back or forward, trying to achieve that organic look. Though I’m still wondering how I’d do that with enough control in more static shots.
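One possible way to get that kind of control, sketched in plain Python rather than as an actual C4D setup, is to generate the focus distance per frame as the target distance plus a small, slow wobble with a hard limit. Everything here (the `focal_drift` name, the parameters, the choice of two sine waves) is an assumption for illustration, not what was used in the scene.

```python
import math
import random


def focal_drift(target_distance, frames, max_offset=0.15, speed=0.02, seed=0):
    """Return one focus distance per frame, wandering around target_distance.

    max_offset: largest allowed deviation from the target (scene units, hypothetical).
    speed:      base wobble frequency in cycles per frame.
    seed:       fixes the random phases so the same drift can be reproduced.
    """
    rng = random.Random(seed)
    phase_a = rng.uniform(0.0, 2.0 * math.pi)
    phase_b = rng.uniform(0.0, 2.0 * math.pi)
    distances = []
    for frame in range(frames):
        # Two slow sine waves at different rates look less regular than one.
        # The weights sum to 1, so the wobble always stays within [-1, 1].
        wobble = (0.6 * math.sin(2.0 * math.pi * speed * frame + phase_a)
                  + 0.4 * math.sin(2.0 * math.pi * speed * 2.7 * frame + phase_b))
        distances.append(target_distance + max_offset * wobble)
    return distances


if __name__ == "__main__":
    # Focus target 3.0 units away, drifting by at most 0.1 units over 5 frames.
    for distance in focal_drift(3.0, frames=5, max_offset=0.1):
        print(round(distance, 4))
```

For a static shot, `max_offset` could be kept very small and `speed` very low, so the focus only breathes slightly instead of visibly hunting.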

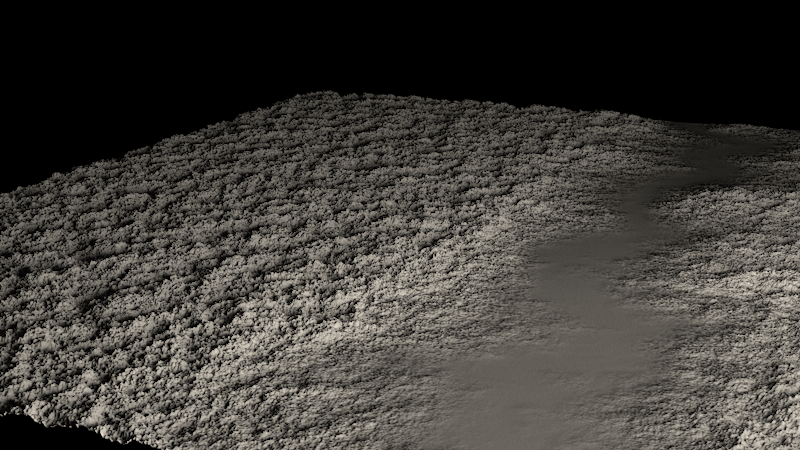

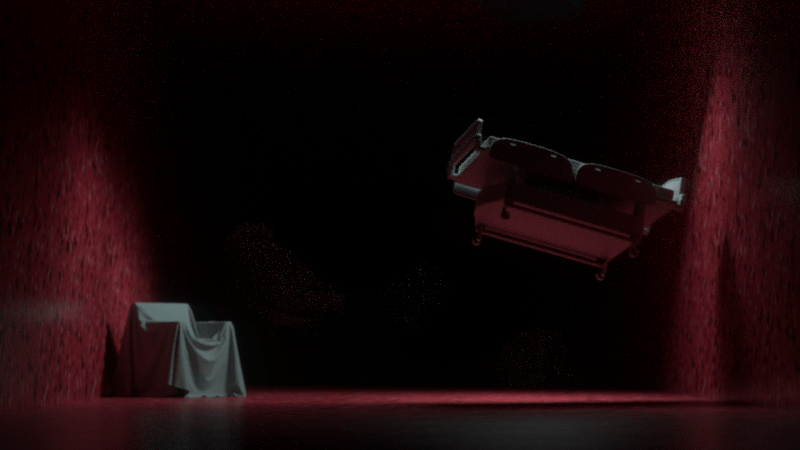

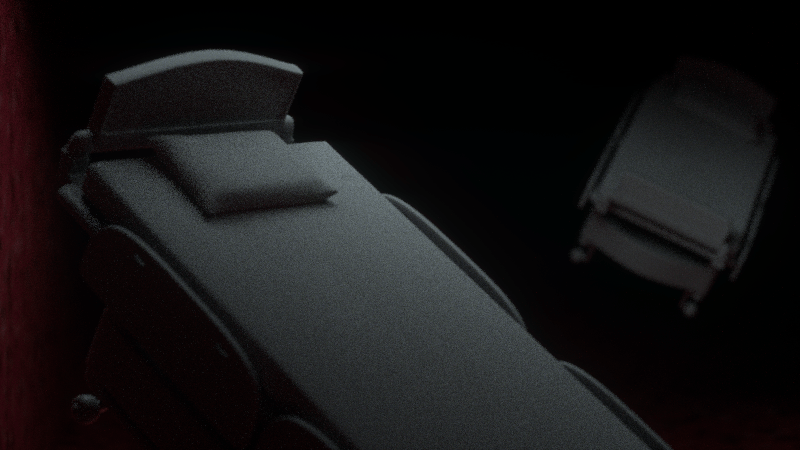

I also improved the lighting for a close-up shot of the beds behind, going from this style below.

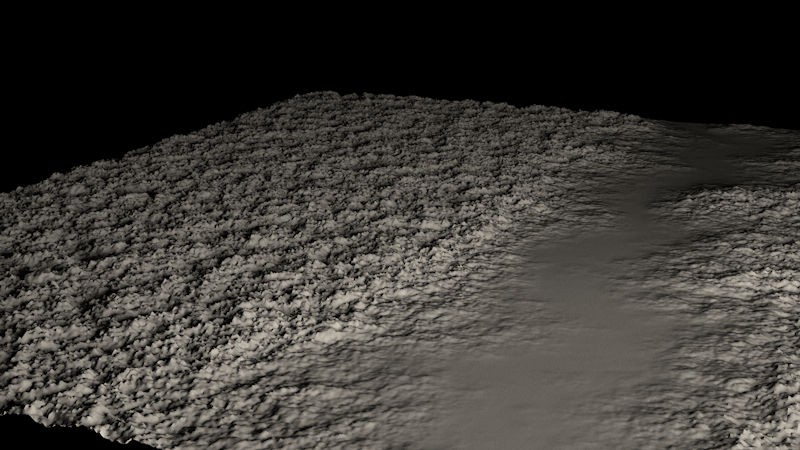

To this, which I’d say is a pretty good improvement. I’m also pretty proud of the visible material difference between the bed frame and the mattress fabric. Hopefully these material details are still texturally noticeable after the post-processing of Red and Blue. Additionally, I sculpted better pillows using the cloth simulator in C4D and then smoothed them out with a brush.

Sometimes I worry that I’m spending too much time on superfluous details like these, but I do think they’re noticeable, even if not entirely consciously, during the sort of quick viewing that happens at an FYP show.

Next up I’ll talk about the other dynamic simulations.