Hello! I’m back again with a new assignment!

So, this time round, we get to do a really cool project where we use objects to trigger sounds! And I finally found out the magic behind all those projects where our movement triggers sounds, wahahaha. The secret is simply… a camera. The camera does wonders, and now I’ve been introduced to a world of endless possibilities!!! Hahaha.

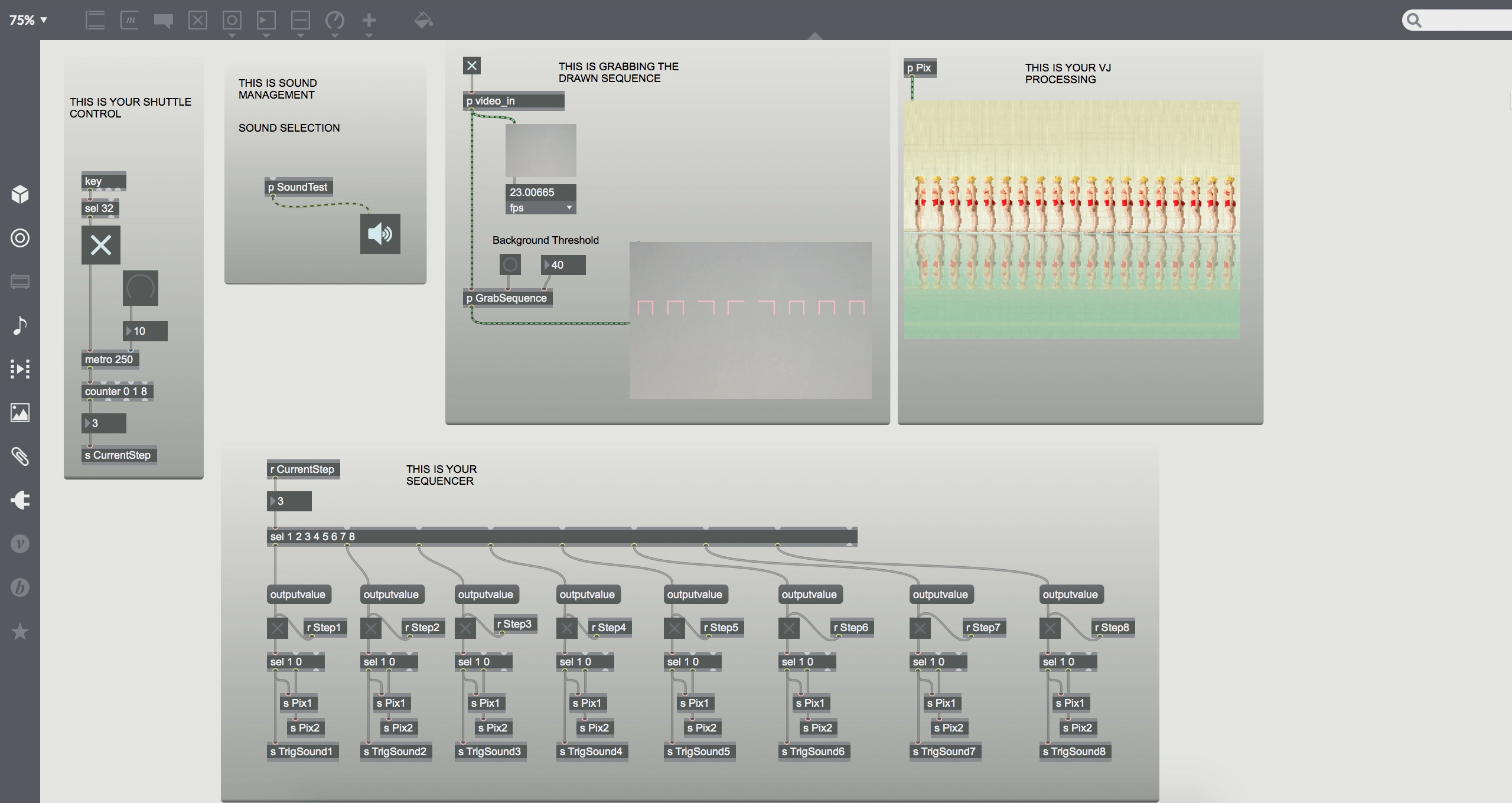

Alrighty then, here’s the final project!

pGrabSequence is where I adjusted the motion boxes. At first I was quite overwhelmed by the message that holds the coordinates, because of all the numbers, hahaha. It’s like numerical overload!! But I sort of figured it out: for ( 1 10 110 30 90 ), the first number is the box (Box 1) and the other four are its coordinates ( x, y, x, y ), I believe?? Something like that.
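To check my reading of that message, here’s a little Python sketch (it is not part of the patch itself). It assumes the first number is the box index and the other four are two opposite corners of the box. `parse_box` and `contains` are names I made up for illustration, and the real order of the coordinates may well be different.

```python
def parse_box(message):
    """Split a message like "1 10 110 30 90" into a box index and a rectangle.

    Assumption: the first number is the box index and the remaining four
    are two opposite corners (x, y, x, y). The real patch may differ.
    """
    index, xa, ya, xb, yb = (int(n) for n in message.split())
    # Sort each axis so it does not matter which corner comes first.
    left, right = sorted((xa, xb))
    top, bottom = sorted((ya, yb))
    return index, (left, top, right, bottom)


def contains(rect, x, y):
    """Return True if the point (x, y) lies inside the rectangle."""
    left, top, right, bottom = rect
    return left <= x <= right and top <= y <= bottom


index, rect = parse_box("1 10 110 30 90")
print(index, rect)  # 1 (10, 90, 30, 110)
print(contains(rect, 20, 100))  # True
```

So if my guess is right, anything the camera sees moving inside that rectangle would count as motion in Box 1.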

Next, pSoundTest. I didn’t use the playlist, as I couldn’t figure out how to assign the audio files to each box. So I resorted to the Send and Receive method instead: the audio plays when it receives TrigSound!

There’s one issue I faced: when I remove the item, the audio keeps playing and only stops once the track has finished. Is there a way to cut the music off halfway when I remove the item?
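One way I can think about it, as a rough sketch rather than a fix for the actual patch: watch for the moment a box goes from "item present" to "item gone" and send a stop at that moment, instead of only ever sending a start. The Python below just models that logic. `BoxPlayer`, `update` and the "start"/"stop" strings are hypothetical names, and how the stop gets wired up in the patch would depend on which playback object is used.

```python
class BoxPlayer:
    """Tracks one box and reports when its sound should start or stop.

    Hypothetical model of the logic only; it does not play any audio.
    """

    def __init__(self):
        self.present = False  # was an item in the box on the last update?

    def update(self, present_now):
        """Return "start", "stop", or None depending on the change."""
        action = None
        if present_now and not self.present:
            action = "start"  # item just appeared
        elif self.present and not present_now:
            action = "stop"  # item just removed: cut the track off
        self.present = present_now
        return action


player = BoxPlayer()
events = [player.update(p) for p in (False, True, True, False)]
print(events)  # [None, 'start', None, 'stop']
```

The idea is that the "stop" event is what would cut the track off halfway, while repeated updates with the item still in place do nothing.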

Overall, this project was fun, but it took me a while to figure out how to complete it. And thank you, Chloe, for lending me a helping hand on this too!!! :))

Here’s the final video for I Am VeJee! 😉