Project Brief: A Collection of Consciousness uses the method of question and answer to seek out one’s subconscious – psychological and emotional state alike. It then shows the participant a visual and auditory representation of their subconscious.

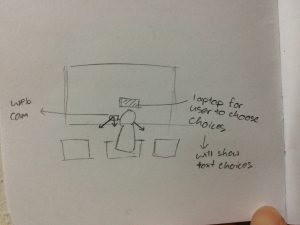

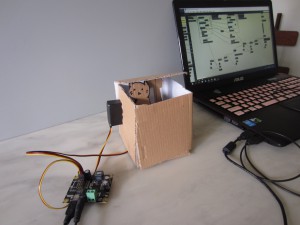

The setup: A total of 2 screens – one large projector screen, and a smaller computer screen that displays the questions. There is also a 3-step ‘dance pad’ for participants to select their answers.

An example of a computer graphic shown on screen:

The ‘dance pad’ was situated right in the centre – enjoying a vantage point of the screen. However, participants had to continually turn their head sideways to look for the questions.

Initial idea:

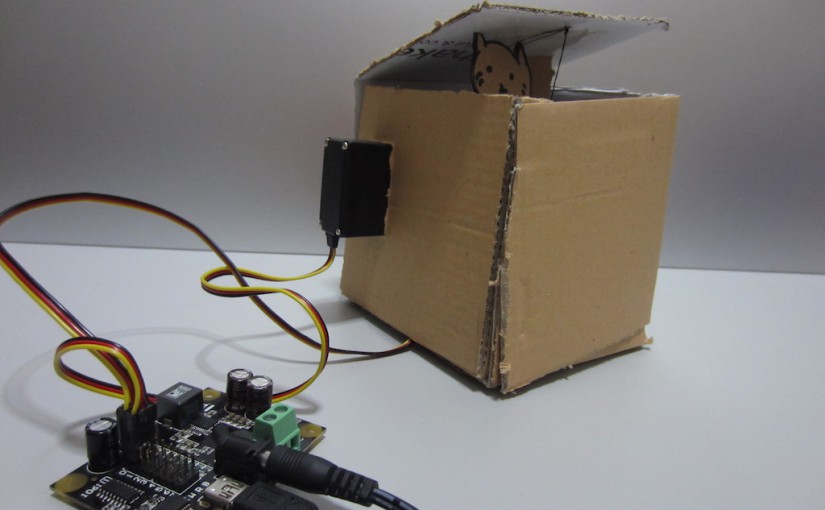

Initially, I planned on having the laptop right in front of the screen, but decided against it as it would block the visuals. I also planned on using motion sensing to trigger the selection of choices, but decided against it – past experience has taught me that different lighting affects the sensing, and that motion sensing as a whole might not be very accurate. Hence, I decided to use a touch sensor to trigger the feedback instead.

Questions

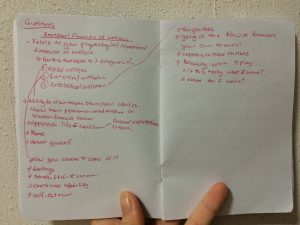

I also planned to have a total of 20 questions at the start. Later, I scaled it down to 7 questions as the patch was becoming too large. I based the style of my questions on the ISFG Personality test. I also researched the different determinants of one’s consciousness (or, to be more accurate, psychological state) and came up with the following:

Thus, I decided to base my questions on these 3 factors:

1. Mental Wellness

2. Emotional Wellness

3. Intellectual Wellness

I do understand that the limited number of questions might not yield an accurate assessment of one’s psychological state, but at present they manage to cover all 3 factors.

Here are my initially planned 10 ideas:

- How are you feeling today? Optimistic (faster), no opinion (slower), disengaged (more jazzy)

- You do not mind being the centre of attention. No, maybe, yes (change of beat)

- You get tensed up and worried that you cannot fulfil expectations (1-3) (change of colour and size, and zamp)

- You cannot finish your work on time, what do you do? You: Continue working hard on it past the deadline, give up, blame and berate self (ADD ON NEW SOUNDTRACK, faster, slower, becomes white noise etc)

- Choose one word. I, me, them (goes down to nil, pale, sound slowly goes down to a steady thump)

- You often feel as though you need to justify yourself. Disagree, no opinion, agree

- You are _____ that you will be able to achieve your dreams. Optimistic, objective, critical

- Your travel plans are usually well thought-out. Disagree, neutral, agree

- Do you know what you want over the next 5 years? Yes, it’s all planned out, gave it some thought, will see how it goes

- You tend to go with the crowd, rather than striking it out on your own. Disagree, mix, agree

- Do you like what you see? Yes, No but I want to continue improving, no I wish I could restart

- Only you yourself know what you want. Do you see yourself? -> restart

I planned for the user to finish playing the entire round of questions, and the different answers would add on different, unique layers onto the graphic – creating a different, unique shape and colour for each player at the end of the game.

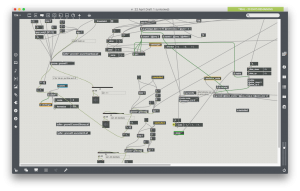

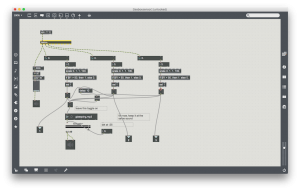

Technically, I used jit.gl.text and banged the questions (in messages) at intervals.

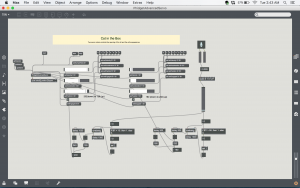

Here is a small portion of my subpatch for the questions, which were to be shown on the computer screen later during the actual artwork.

Graphics

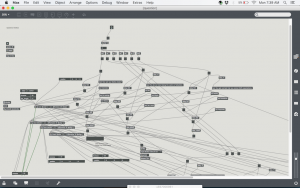

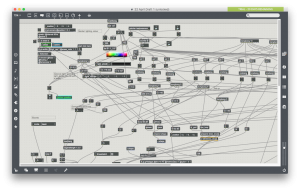

The changes in shape were made by sending messages (e.g. prepend shape, with the value torus) to each individual attrui. In brief, the following were altered: the shapes, the colours, how fast or slow the graphics ‘vibrate’, how far they expand, and how quickly they move. The graphics were also rendered and altered in real time.
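To illustrate the idea outside of Max, here is a minimal Python sketch of how an answer could be turned into attribute messages of the form "shape torus". It only mimics what a prepend object does; the table `ANSWER_LAYERS`, the function names and the particular shapes and colours are hypothetical and are not taken from the actual patch.

```python
# Hypothetical mapping from an answer (0, 1 or 2) to graphic attributes.
# The shapes and RGBA colours here are placeholders, not the patch's values.
ANSWER_LAYERS = {
    0: {"shape": "sphere", "color": (1.0, 0.0, 0.0, 1.0)},
    1: {"shape": "torus", "color": (0.0, 1.0, 0.0, 1.0)},
    2: {"shape": "cube", "color": (0.0, 0.0, 1.0, 1.0)},
}


def prepend(selector, value):
    """Mimic Max's prepend: put a selector in front of a value or list."""
    if isinstance(value, (tuple, list)):
        value = " ".join(str(v) for v in value)
    return f"{selector} {value}"


def messages_for_answer(answer):
    """Return the list of messages to send for one answer."""
    layer = ANSWER_LAYERS[answer]
    return [prepend(name, value) for name, value in layer.items()]


if __name__ == "__main__":
    for message in messages_for_answer(1):
        print(message)
```

Keeping the mapping in one table makes it easy to see which answer changes which attribute, which is roughly what the message boxes do in the patch.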

To create 3D graphics, jit.gen, jit.gl.gridshape, jit.gl.render, jit.gl.mesh and jit.gl.anim were used. I initially planned to use jit.gl.mesh and plot out my own points to be animated (creating a more abstract, random shape), but after spending a total of 5 days trying to figure it out, I did not succeed. Hence, the final project turned out different from what I expected. However, I am still pleased with the final outcome.

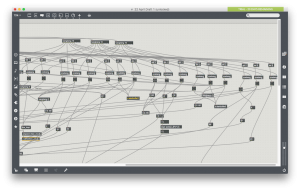

Here is my patch for reference.

The graphics were the most challenging portion of my entire project, making or breaking it. Perhaps it was not the most brilliant idea to foray into the untouched lands of OpenGL, but as I have always been interested in 3D graphics as a whole, it became an interesting experience for me.

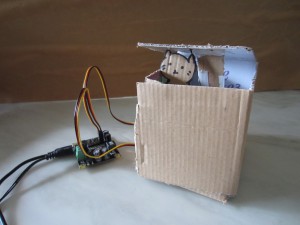

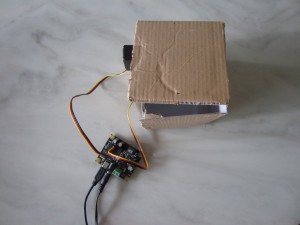

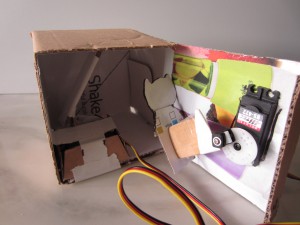

Sensors/Teabox

3 dance pads were used, allowing participants to send their chosen responses to the questions to Max/MSP. Only 3 options were allowed, as this seemed more intuitive to the common user.

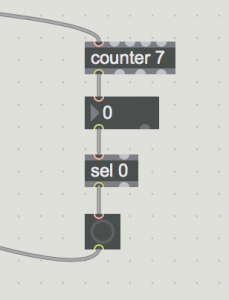

During the actual tryout on Friday 22nd April, I realised that the trigger was far too sensitive, and the questions were skipped through far too fast. Hence, that afternoon, I added the objects below to slow down the sensing:

The counter value is to be changed – depending on how fast or slow you prefer the trigger to be.
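For readers without Max, the general idea of slowing a trigger with a counter can be sketched in Python as follows. This is an assumption about the approach rather than a copy of the patch: the class `CounterGate` and its `limit` parameter are hypothetical names, and the gate simply lets through every Nth press.

```python
class CounterGate:
    """Let only every `limit`-th trigger through (hypothetical helper)."""

    def __init__(self, limit):
        if limit < 1:
            raise ValueError("limit must be at least 1")
        self.limit = limit  # higher value = slower, less sensitive trigger
        self.count = 0

    def press(self):
        """Register one raw sensor trigger; return True if it should fire."""
        self.count += 1
        if self.count >= self.limit:
            self.count = 0  # start counting again for the next answer
            return True
        return False


if __name__ == "__main__":
    gate = CounterGate(limit=3)
    # Seven raw triggers in a row: only the 3rd and 6th get through.
    print([gate.press() for _ in range(7)])
```

Raising `limit` makes the pad less sensitive, and lowering it makes the questions advance faster.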

Sounds

As the graphic rendering and the sounds were tied to each other, I decided to alter the sounds for two reasons: firstly, to give the sense of being enveloped in the whole experience, and secondly, to strengthen the connection between sound and graphics. As mentioned, the vibration of the graphics follows how loud the sound is, in terms of decibels.
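The link between loudness and vibration can be sketched in Python as below. This is only one possible way to do it, under stated assumptions: the function names, the -60 dB floor and the linear mapping are all hypothetical choices for illustration and do not come from the patch.

```python
import math


def rms(samples):
    """Root-mean-square level of a block of audio samples (-1.0 to 1.0)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def amplitude_to_db(amplitude, floor_db=-60.0):
    """Convert a linear amplitude to decibels, clamped at an assumed floor."""
    if amplitude <= 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(amplitude))


def db_to_vibration(db, floor_db=-60.0, max_scale=1.0):
    """Map floor_db..0 dB linearly onto a vibration amount of 0..max_scale."""
    clamped = min(0.0, max(floor_db, db))
    return max_scale * (clamped - floor_db) / (0.0 - floor_db)


if __name__ == "__main__":
    block = [0.5, -0.5, 0.5, -0.5]  # a made-up block of samples
    level_db = amplitude_to_db(rms(block))
    print(round(level_db, 2), round(db_to_vibration(level_db), 3))
```

A louder block gives a value closer to `max_scale`, so the graphic would vibrate more as the sound gets louder.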

A total of 3 sounds were used. The longer the participants play, the ‘richer’ the sound becomes: it becomes more shrill (higher in pitch) and the speed of the soundtrack decreases, to name just two changes. The longer you play, the more inorganic and synthetic the sounds become. This ties in with the visuals, which start off as a red, beating object (that reminds one of the heart) but gradually take on inorganic, abstract forms. This is symbolic of the birth of a lifeform – firstly, you start off as a beating heart, but life’s experiences will gradually shape you into a unique being.

Here are the 3 sounds used:

See the final artwork in action: