After doing my research, I decided to start small – making just a single bubble. However, I want the singular bubble to pulsate, as though it’s a living organism. When it later bursts, I want the feeling of loss to be more pronounced: having first thought of it as a living being, one would (hopefully) feel pity, a sense of loss, and sadness, much as one would if a real living being died.

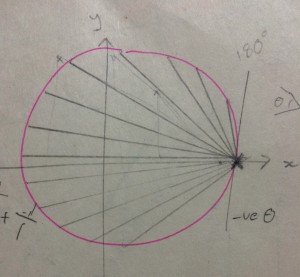

Honestly speaking, when I started thinking about the idea of a singular bubble, the image of the atomic bomb on Hiroshima during WWII came immediately to mind (I hope this isn’t offensive; it isn’t meant to be):

This is partly because it did indeed cause many deaths and much destruction, but also because this symbolic model was very arresting – the bright red contrasting with the vast city landscape.

I did consider placing a huge singular bubble in a small room, where people would have to squeeze past it to get to the other side. It seems fun, but they might accidentally touch the bubble and burst it, which would make the idea impractical after a while. At the same time, the bubble would pulsate like a pumping heart, giving it life-like qualities.

Thus, remembering Prof Randall’s words to ‘crawl first before I run’, I decided to start small and create a small bubble before envisioning it in the whole space. That being said, small seems okay to start with: in my previous research, the artwork The Long Now by Verena Friedrich also starts small, yet the result was very effective.

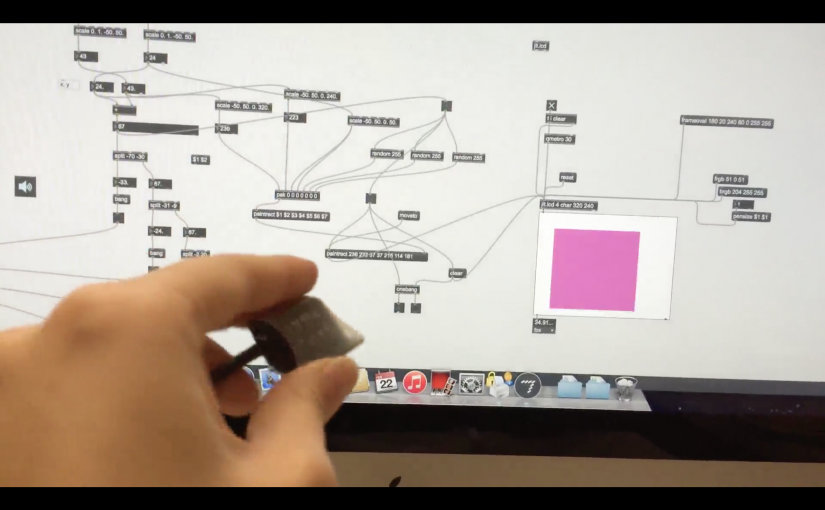

Before I thought of this idea, I did a few experiments with DMX lighting. I did want to try projecting onto the bubbles, but decided to postpone it as I didn’t yet have the fog ready to intensify the projection (I am borrowing the projector again today to test it out this weekend).

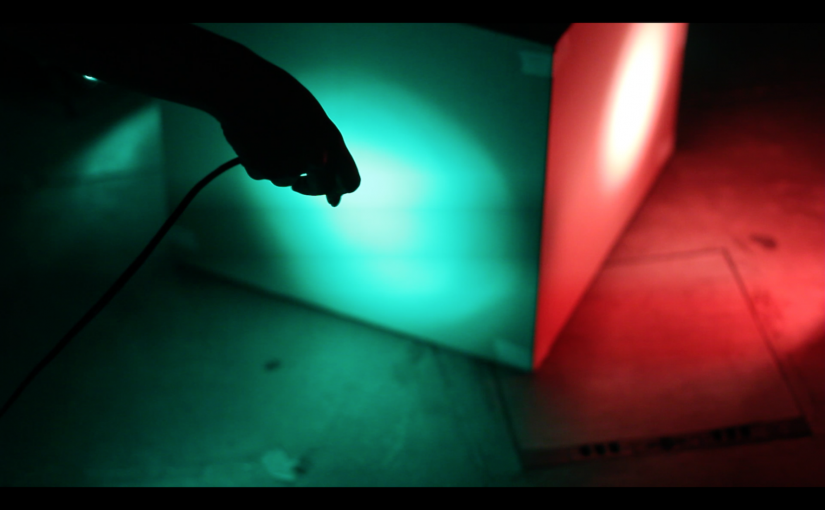

DMX Chauvet Lighting and Bubbles

I first wanted to beam the light sideways, but the leftover light shone onto the background wall, which was very distracting. In addition, there was too much surrounding light from the Chauvet lights, so it was hard to pick out the lighting of the bubbles themselves. So, I pointed the Chauvet light upwards instead (and risked the cables getting wet, but shower caps are always a lifesaver).

I also did experiment with the flickering lights, as seen below. From my tests, a slow strobe could dramatise the effect of the bubbles, but it really was not what I envisioned for the experiment.

Caution! Strobe lighting in the video below!

(Please mute the above video while watching it; the sound in the video does not correspond with the visuals – I’ll explain why later in the post)

Here, there are two different ways of strobing: fast and slow. I played with different light colours (purple, white, blue) to test out the effects, and liked purple the most of the three.
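For anyone who wants to try the two strobe speeds in software, here is a minimal sketch of how the on/off pattern could be generated as 8-bit RGB values (DMX channels take values from 0 to 255). Everything named here is an assumption for illustration: the `strobe_frames` helper, the colour values, the flash rates, the 40 frames per second, and the idea that the fixture exposes three channels in R, G, B order. It does not talk to any real fixture or reflect the exact settings I used.

```python
# Hypothetical sketch: colour values, flash rates and frame rate are
# illustrative assumptions, not the settings used in the experiment.

COLOURS = {
    "purple": (160, 0, 255),
    "white": (255, 255, 255),
    "blue": (0, 0, 255),
}

OFF = (0, 0, 0)


def strobe_frames(colour, flashes_per_second, duration_s, frame_rate=40, duty=0.5):
    """Return one (R, G, B) tuple per frame for a simple on/off strobe.

    colour             -- (R, G, B) tuple, each value 0-255
    flashes_per_second -- how many on/off cycles happen each second
    duration_s         -- total length of the sequence in seconds
    frame_rate         -- assumed number of frames sent per second
    duty               -- fraction of each cycle during which the light is on
    """
    total_frames = int(duration_s * frame_rate)
    frames = []
    for i in range(total_frames):
        # Position inside the current flash cycle, from 0.0 up to (not including) 1.0.
        phase = (i * flashes_per_second / frame_rate) % 1.0
        frames.append(colour if phase < duty else OFF)
    return frames


# Assumed rates: 10 flashes per second for "fast", 1 per second for "slow".
fast = strobe_frames(COLOURS["purple"], flashes_per_second=10, duration_s=1)
slow = strobe_frames(COLOURS["purple"], flashes_per_second=1, duration_s=1)
```

With these assumed numbers, the fast sequence switches on and off every two frames, while the slow one stays on for half a second and off for the other half. Each tuple would still need to be sent to the light by whatever DMX controller or software is in use.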

One thing to note is that while it was resplendently pretty, lighting was a considerable issue – the surroundings had to be STARK black, or else the surrounding light would wash out the lights of the bubble. Another issue was that the medium is simply hard to capture on camera – the shimmer of the bubbles, how airy they were, and the glint and floaty-ness they had. This is truly an experience that one has to feel first-hand.

In this experiment, pure red lighting was chosen as I envisioned that the strong lighting would translate into visually powerful bubbles exuding a single colour.

To add on to my previous point, only placing the bubbles directly on top of the light itself could really bring out their reflective quality. However, this would mean that the bubble has to either be suspended over the light or sit on a flat surface right above it, in which case the bubble could no longer keep its spherical shape.

I did love the reflections of the bubble, particularly here:

However, it was very hard to angle one’s sight to view this reflection, and the structure of the Chauvet lights dictates that each individual RGB colour is seen separately, rather than a blend of R, G and B forming a new colour. This is especially so in the case of the bubbles, where the light has to be very close to the bubble and has no distance over which to blend. I suppose this limitation could be overcome by wrapping coloured cellophane paper directly over the light, so it is not an important consideration for now.

I tried using many small bubbles, and one singular bubble, to play with the lights. I concluded that while the small bubbles really gave off an airy feel, I would rather use larger singular bubbles as I could play with the bubble medium more properly. A single bubble would also be easier to control, and would set my project apart from the conventional bubble explosion scene.

Sounds of.. Making Bubbles?

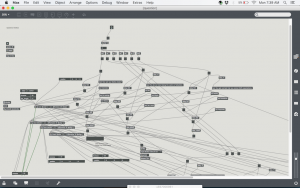

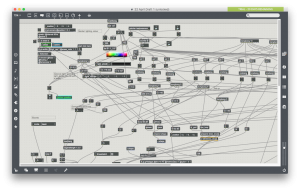

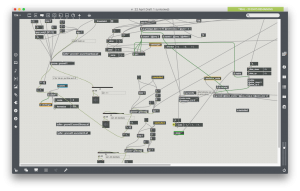

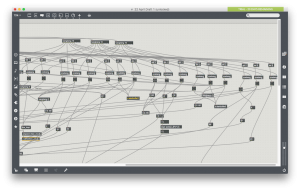

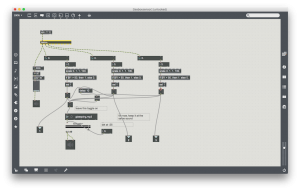

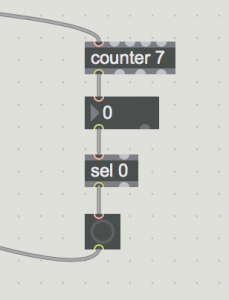

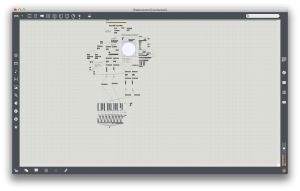

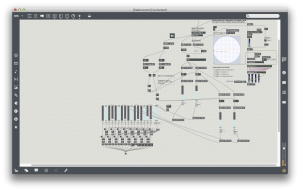

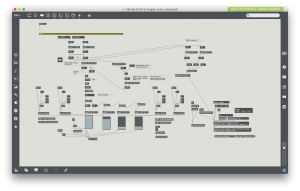

I recorded the sound of bubbling and edited it via Audacity. I will show a few samples.

(Please un-mute the video while watching it)

The edited sounds are included in the video, and there are 3 tracks in total.

Track 1: 00:00 – 00:19

Track 2: 00:20 – 01:05

Track 3: 01:06 – 14:20

Next Steps!

To do: make fog machine

Attempt projection surface tryout with singular large bubble

Make a singular bubble machine

Inspiration: