Fabian Kang and Zhou Yang, Bubble-Down!, 2018, laser-cut medium-density fibreboard, sound sensors, LED lights, powered by Arduino.

Concept

The idea is for players to play the game on a sheet of bubble wrap, because its touch and feel was what we wanted to be integral to the interaction. The bubble wrap also becomes an expendable medium that has to be ‘refilled’ after each game.

Bubble wrap works like a physical button: it gives haptic feedback as the trapped air is squeezed, and it emits a pop when the bubble bursts.

Bubble wrap is usually something that people press without much thought, and it can indeed be somewhat addictively mindless. Hence we wondered: what if each press of a bubble had to be considered very carefully? With Bubble-Down! (2018), we invite players to battle it out in a minesweeper-meets-battleships game of suspense, interacting with bubble wrap in an unconventional way.

Gameplay

- Players will plant their bombs without the knowledge of their opponents. They should take note of the positions they have rigged.

- Players will swap places.

- The game commences with players having 5 ‘lives’ each.

- Players will take turns to pop the bubbles. It is mandatory to pop one bubble on each turn. Should a light go on, the player loses a ‘life’.

- The game continues until either player has exhausted all 5 of his or her ‘lives’.
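The turn structure above can be sketched in a few lines of plain C++. This is only an illustration of the rules as listed; the write-up does not say whether lives were tracked in software or by the players themselves, and every name here (`GameState`, `takeTurn`, `winner`) is hypothetical.

```cpp
#include <array>
#include <cassert>

// Hypothetical model of the rules; not taken from the project's own code.
constexpr int kStartingLives = 5;  // from the rules: 5 'lives' each

struct GameState {
    std::array<int, 2> lives{kStartingLives, kStartingLives};
    int currentPlayer = 0;  // 0 or 1: whose turn it is to pop
};

// True once either player has run out of lives.
bool isOver(const GameState& g) {
    return g.lives[0] == 0 || g.lives[1] == 0;
}

// Apply one mandatory pop. `lightWentOn` is true if the pop hit a 'bomb'.
// Does nothing once the game is over.
void takeTurn(GameState& g, bool lightWentOn) {
    assert(g.currentPlayer == 0 || g.currentPlayer == 1);
    if (isOver(g)) return;
    if (lightWentOn) --g.lives[g.currentPlayer];
    g.currentPlayer = 1 - g.currentPlayer;  // pass the turn
}

// Index of the winning player, or -1 while the game is still running.
int winner(const GameState& g) {
    if (g.lives[0] == 0) return 1;
    if (g.lives[1] == 0) return 0;
    return -1;
}
```

Keeping the rules in a small state struct like this would make it easy to check the end condition separately from the sensor hardware.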

Documentation

Fabian Kang and Zhou Yang, Bubble-Down! Documentation, 2018.

The short video above showcases the process our team went through, from ideation to execution.

Design

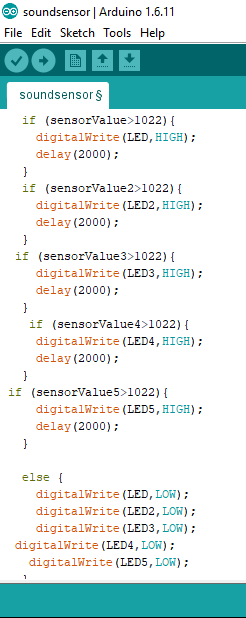

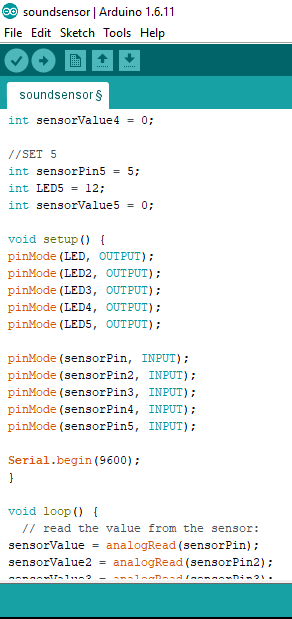

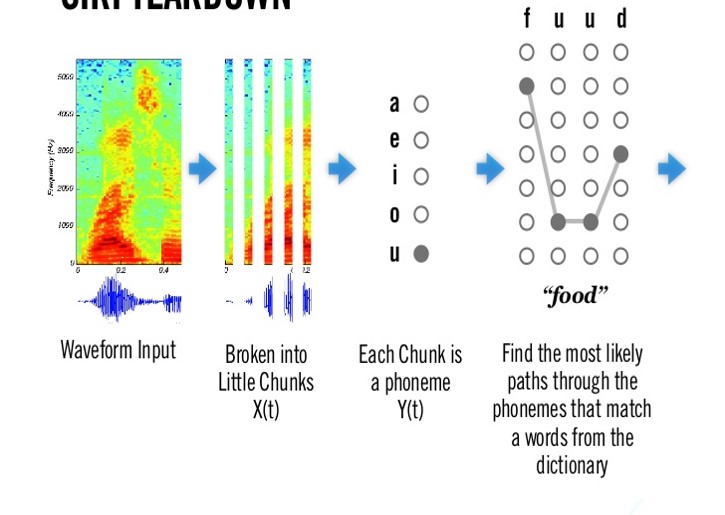

The main challenge we faced was understanding how a sound sensor works and what inputs the Arduino was recognizing. We realized that although we tried to get an analogue input, the Arduino was consistently picking up the bubble wrap ‘pop’ as a value of 1023, the top of the analogue range (analogue inputs have only 1024 possible values, from 0 to 1023). This meant that the analogue inputs were no different from a binary digital input: a reading was recognized either as a sound or as silence.
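Since the readings were effectively binary, one way to treat them is a simple threshold plus rising-edge detection, so that one pop is counted once rather than on every loop pass while the reading stays high. The sketch below is plain C++ and is not the project's code; the threshold value and the names `isPop` and `PopDetector` are assumptions. On a board, the `reading` argument would come from Arduino's `analogRead()`.

```cpp
#include <cassert>

constexpr int kAdcMax = 1023;       // top of the 0..1023 analogue range
constexpr int kPopThreshold = 512;  // assumed cut-off; would need calibrating

// Collapse an analogue reading into "sound" (true) or "silence" (false).
bool isPop(int reading) {
    assert(reading >= 0 && reading <= kAdcMax);
    return reading >= kPopThreshold;
}

// Reports a pop only on the transition from silence to sound.
struct PopDetector {
    bool wasLoud = false;

    bool update(int reading) {
        const bool loud = isPop(reading);
        const bool rising = loud && !wasLoud;
        wasLoud = loud;
        return rising;
    }
};
```

With readings that jump straight to 1023, the exact threshold matters little; the edge detection is what stops a single pop from registering several times.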

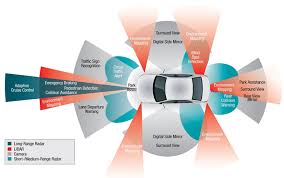

Hence, much of the design focused on the contact areas between the bubble wrap and the medium-density fibreboard (MDF). We had to ensure a layer of separation between the cubes that had ‘bombs’ in them and those that did not. We chose MDF because it allowed the sounds to travel to the sound sensor. We realized that each sensor had to be in direct contact with the board surface, so we improvised a solution using clothes pegs to secure the sensors in place.

Here are some images of the design process:

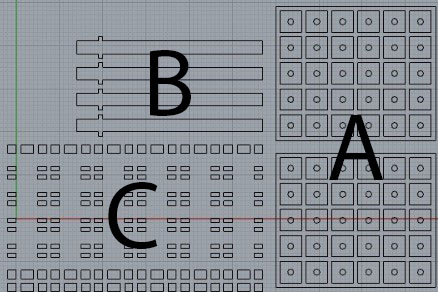

The design solution was to separate Part A from Part B, and to separate both from the table surface as well. This was done with the small pieces of Part C, which were stacked to create stilts under the bases of Parts A and B. We also calculated the exact length of the ‘bombs’ so that each stick would make contact between Part A and Part B.

Without 'bomb' With 'bomb'

The key takeaway from this Final Project is that when working with something like a sound sensor, which is very dependent on the environment and the interactive situation, one needs to be wary of the effort required to calibrate the hardware. We did realise the immense difficulty at some point, but decided to push ahead simply because we liked the whole process loop: the haptic touch of the bubble wrap, the popping sounds it produces, and those sounds being picked up by the sound sensors. It felt right to work towards this and stay true to the concept we had agreed upon.

Programming

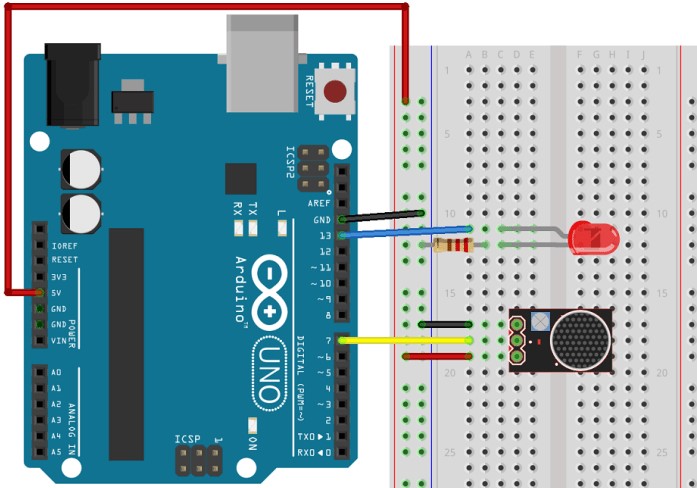

This was the basic circuitry we were working with:

We then scaled it up to 10 sound sensors and 10 lights, making sure that the input from each sound sensor is directed only to the output of its corresponding light.

After we did that, we realized that it would be cumbersome to have the players move the actual sound sensors around the board. The design therefore had to keep the sensors static, which was when we incorporated the idea of moving the ‘bombs’ around instead of the sensors.
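The one-to-one routing between sensors and lights can be sketched as a pure function over ten channels. This is a hardware-independent illustration in plain C++, not the project's sketch: the threshold and the names `Readings`, `Lights` and `lightsFor` are assumptions, and it assumes a light is simply on while its own sensor reads loud.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

constexpr std::size_t kChannels = 10;   // 10 sensors and 10 lights
constexpr int kChannelThreshold = 512;  // assumed cut-off on the 0..1023 range

using Readings = std::array<int, kChannels>;
using Lights = std::array<bool, kChannels>;

// Light i depends only on sensor i; no channel affects another.
Lights lightsFor(const Readings& readings) {
    Lights lights{};
    for (std::size_t i = 0; i < kChannels; ++i) {
        assert(readings[i] >= 0 && readings[i] <= 1023);
        lights[i] = readings[i] >= kChannelThreshold;
    }
    return lights;
}
```

On the board, each reading would presumably come from a sensor pin and each light state would be written to the matching LED pin with `digitalWrite()`; the pin assignments are not given in the write-up.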

Extras

Lastly, it is always about the process, as in these final timelapse videos we would like to share:

With that, I think we shall look forward to a fruitful second half of the FYP year!