Progress Thus Far

- Done research & reading on the cyberpunk genre

- Haven't done the moodboard yet (rescheduling it for later, since after reviewing my plans it isn't a priority right now)

- Sorted out and filtered the relevant journal papers

- Made an eye-to-eye periscope; working on the vibrawatch prototype

- Read Sensory Pathways for the Plastic Mind by Aisen Chacin

- Realised I’m doing something very similar to her work, but with different intentions. She wanted to critique the idea of sensory substitution devices as assistive technology and to normalise their use. I want to go beyond normalising: I want to pretend that using them is already the norm, and imagine what people would do with them.

Next focus

- Finish vibrawatch prototype

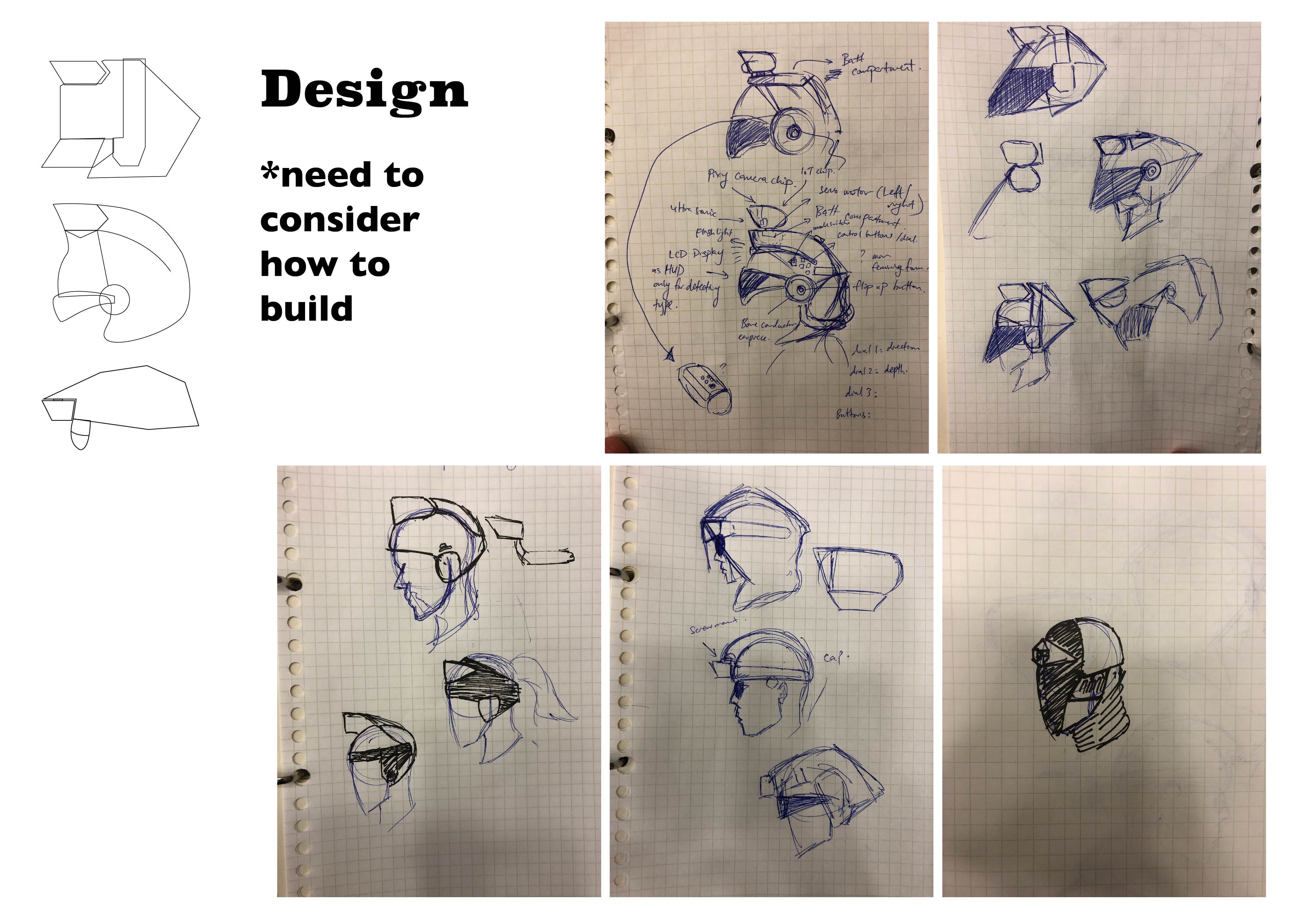

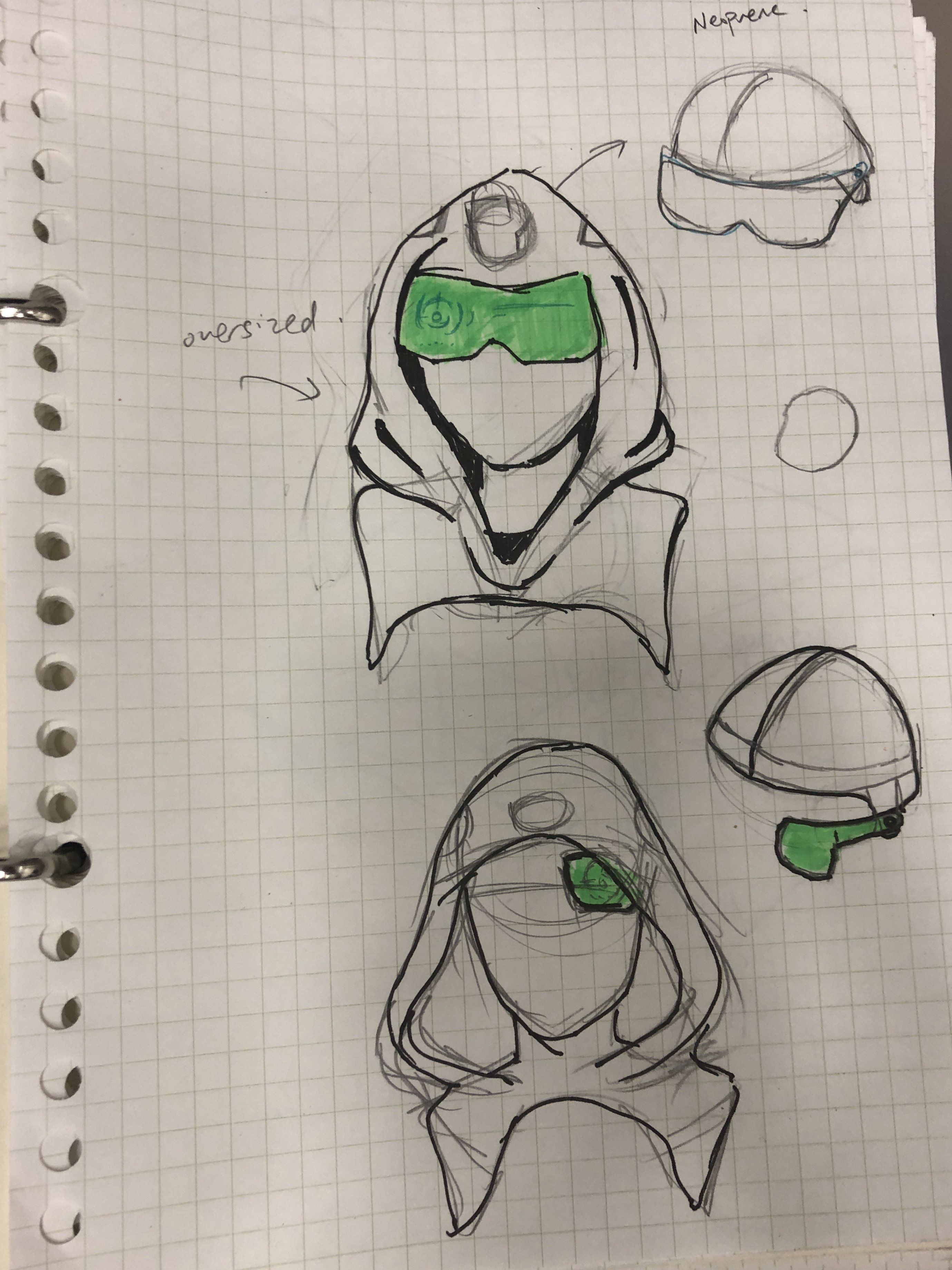

- Sketch ideas so I can visualise and present them

- Play with building those ideas and test them

- Read when I have time

- Focus on sensory experiments and exploration with my current few ideas

I have shifted my focus from research to hands-on work after talking to Bao and Zi Feng, who gave me a lot of good advice!

Cyberpunk

I researched the cyberpunk genre a bit more deeply, mainly by reading Tokyo Cyberpunk: Posthumanism in Japanese Visual Culture. To be honest, it was not very helpful, as most of it is in-depth textual analysis of films and anime that tackle different cyberpunk themes. What I did find useful, in terms of aesthetics, were its references to specific films and anime that I can look up online for video clips or stills.

After reading the book, I realised there is more to the genre than just its aesthetics (obviously, I just hadn't really thought about it before). I then watched some videos on the topic and came away with a few insights:

- The cyberpunk genre revolves around themes such as megacorporations, “high tech, low life”, surveillance, the internet of things, identity in a posthuman world (man / cyborg / machine: where is the line drawn?), and humanity and the human condition in a tech-driven world.

- Contrary to what I previously thought, the aesthetics are not the main point of the cyberpunk genre. It is all about the themes, which is why many “cyberpunk” films lack the iconic look of neon holographic signs, megacities, flying cars, etc. A cyberpunk story can be set in the era we live in now; it does not necessarily have to be set in the future.

- It might be more realistic to base the “look” on the current world rather than on the 1980s vision of the future, which is what most cyberpunk depictions look like.

So why is this information useful for me?

I think it’s good that I actually tried to find out what cyberpunk really is and got at least a rough idea of it. I can try referencing certain themes that apply to Singapore and see what works best. What would Singapore be like in a cyberpunk world? This affects not only the aesthetics of my installation but also the theme I’ll be covering, which can narrow my scope a bit more. In the end, I hope the installation will be both realistic and aesthetically convincing.

Also, I got my hands sort of dirty

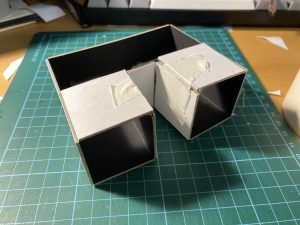

I had the idea of a periscope that lets you see your own eye. This is more of a perception experiment than anything really important.

As a thought experiment, I wondered what we would see if one eye looked at the other, and vice versa. Turns out, nothing special: you see your eyes as you would in a mirror, except the image is not flipped. I can’t think of an application, but it is fun to play with. If you close one eye, you can see it closed with your other eye.

I showed this to Zi Feng and Bao, and Zi Feng mentioned that if the mirrors were the size of a face, the device would show your true, unflipped reflection, with only a line down the middle. I think that is an interesting application: a mirror that shows your actual appearance (how other people see you).

But yeah, it’s not anything super interesting. I just wanted to start working on something haha.

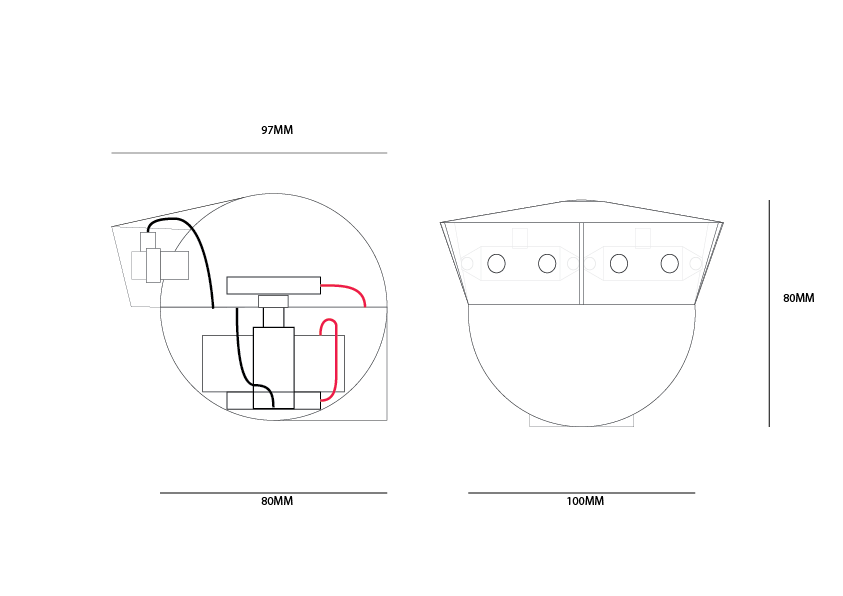

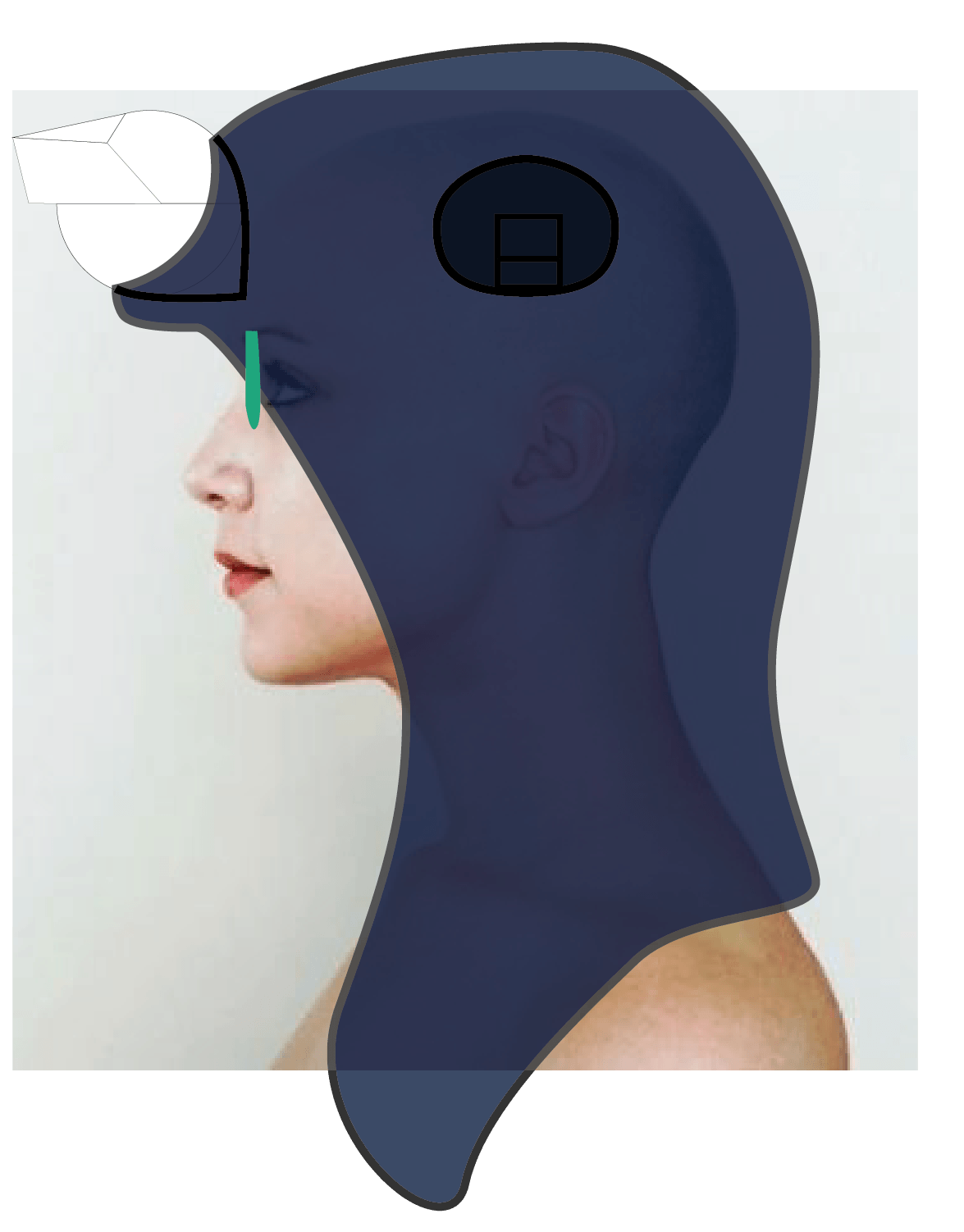

Anyway, I’m also working on the vibrawatch, but it’s still WIP.

What’s next?

I’m gonna start experimenting with making sensory prototypes. Right after I finish the vibrawatch, I’m also starting on a device that responds to the weather.