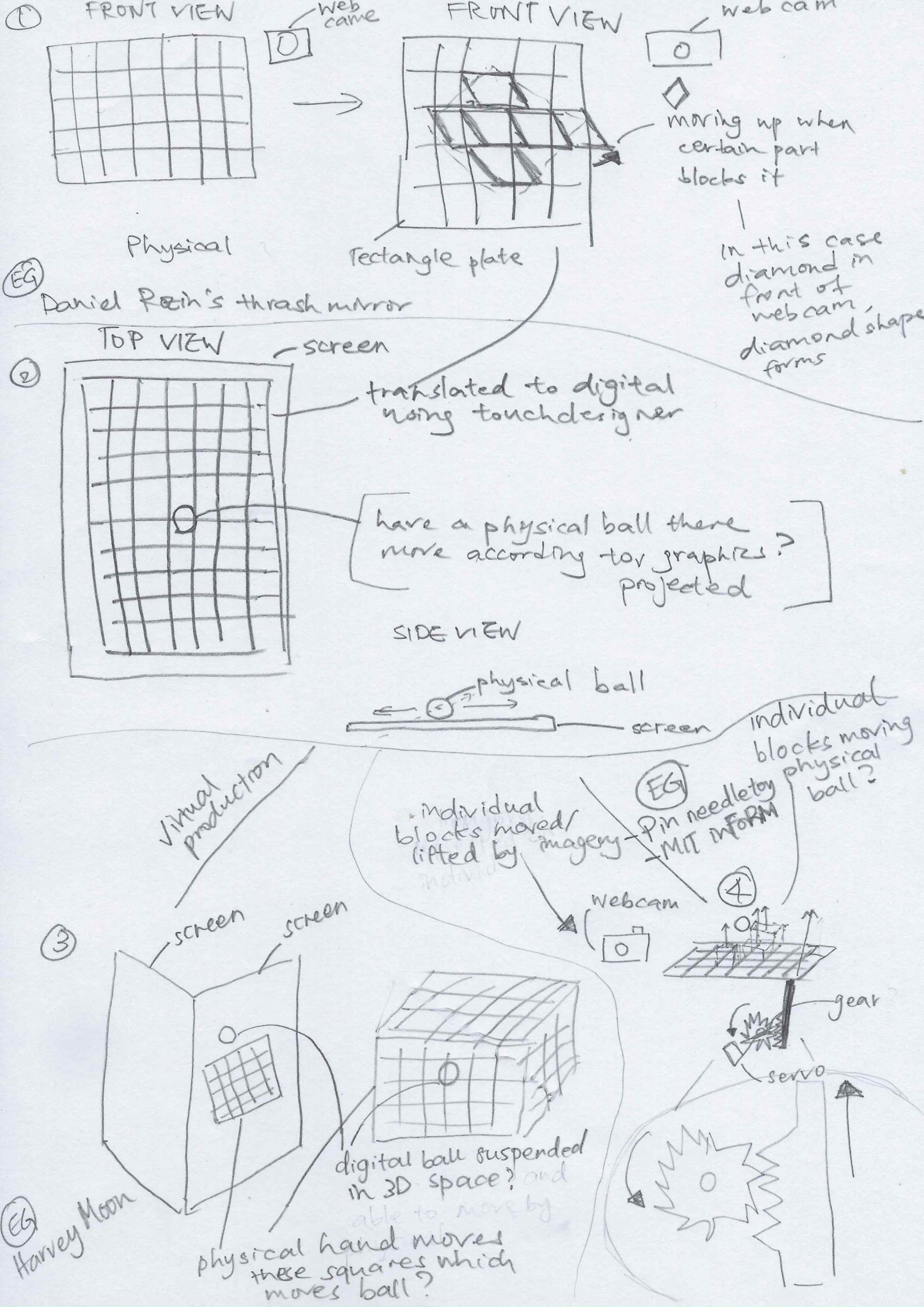

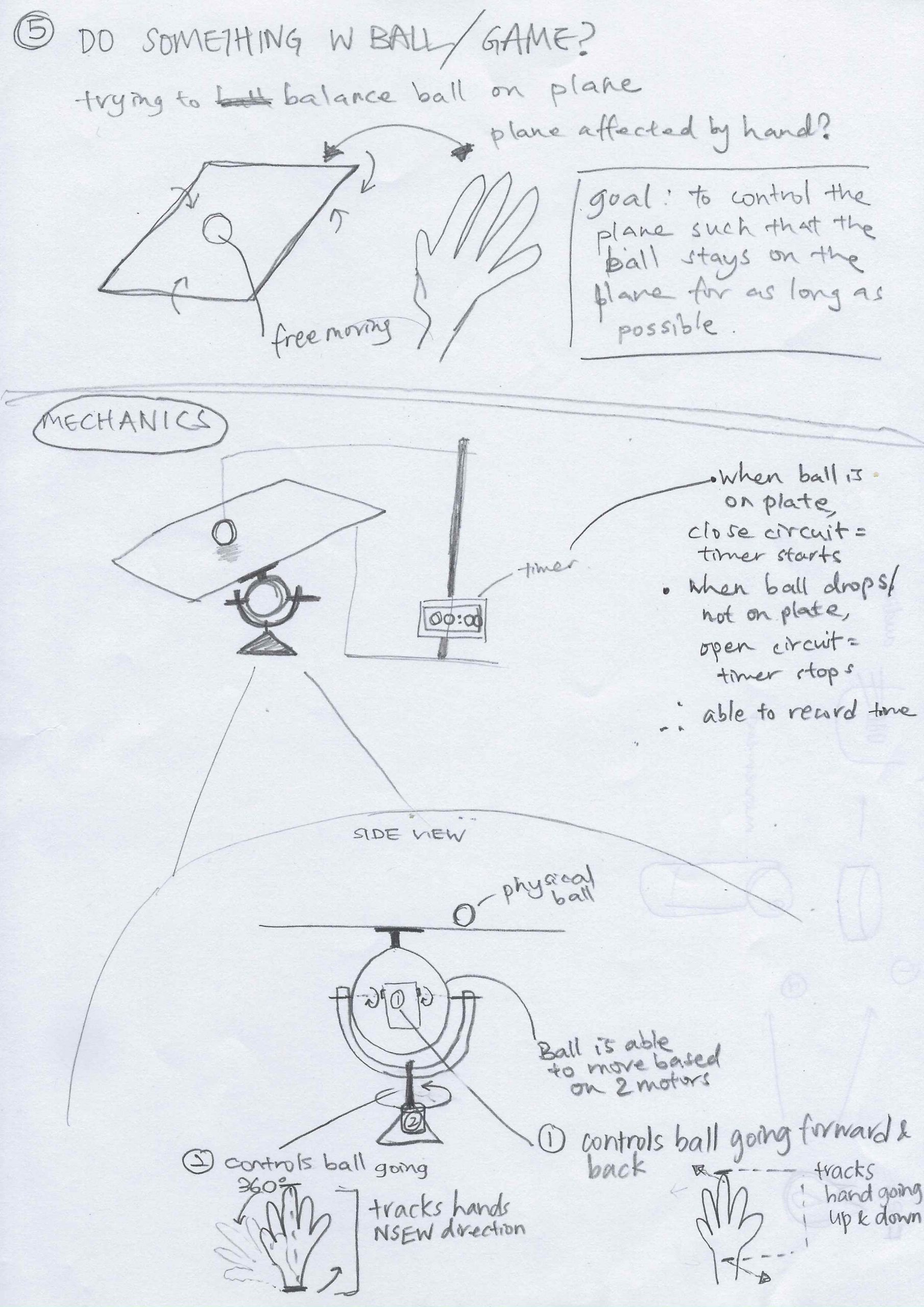

The goal of BALLance is to balance a ball for as long as possible without physically touching it. By redefining the idea of physical toys, BALLance is made to exemplify the idea of telepresence: it gives participants the illusion of being present at a place other than their true location. Because BALLance can be played remotely, the system renders distance meaningless.

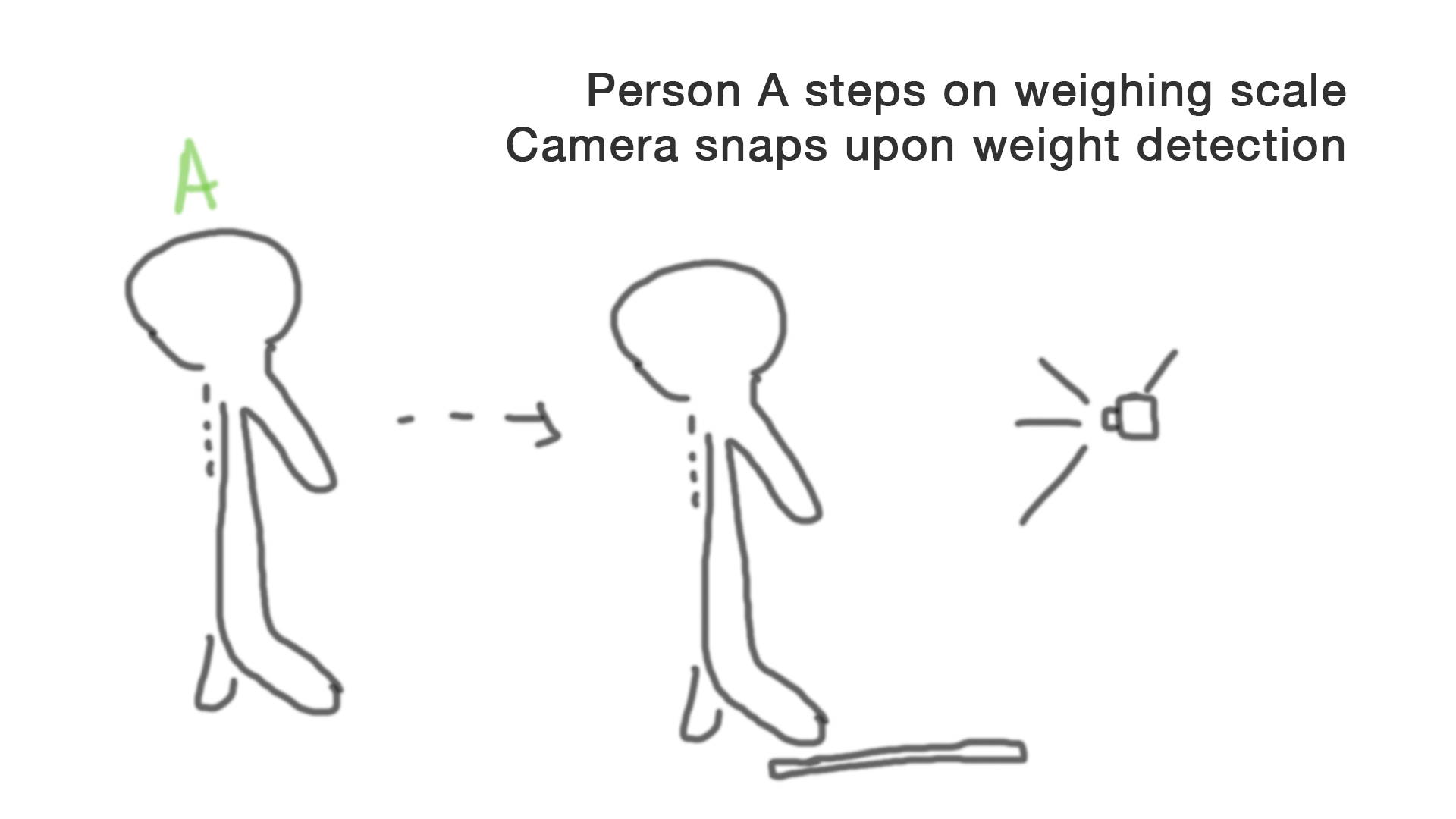

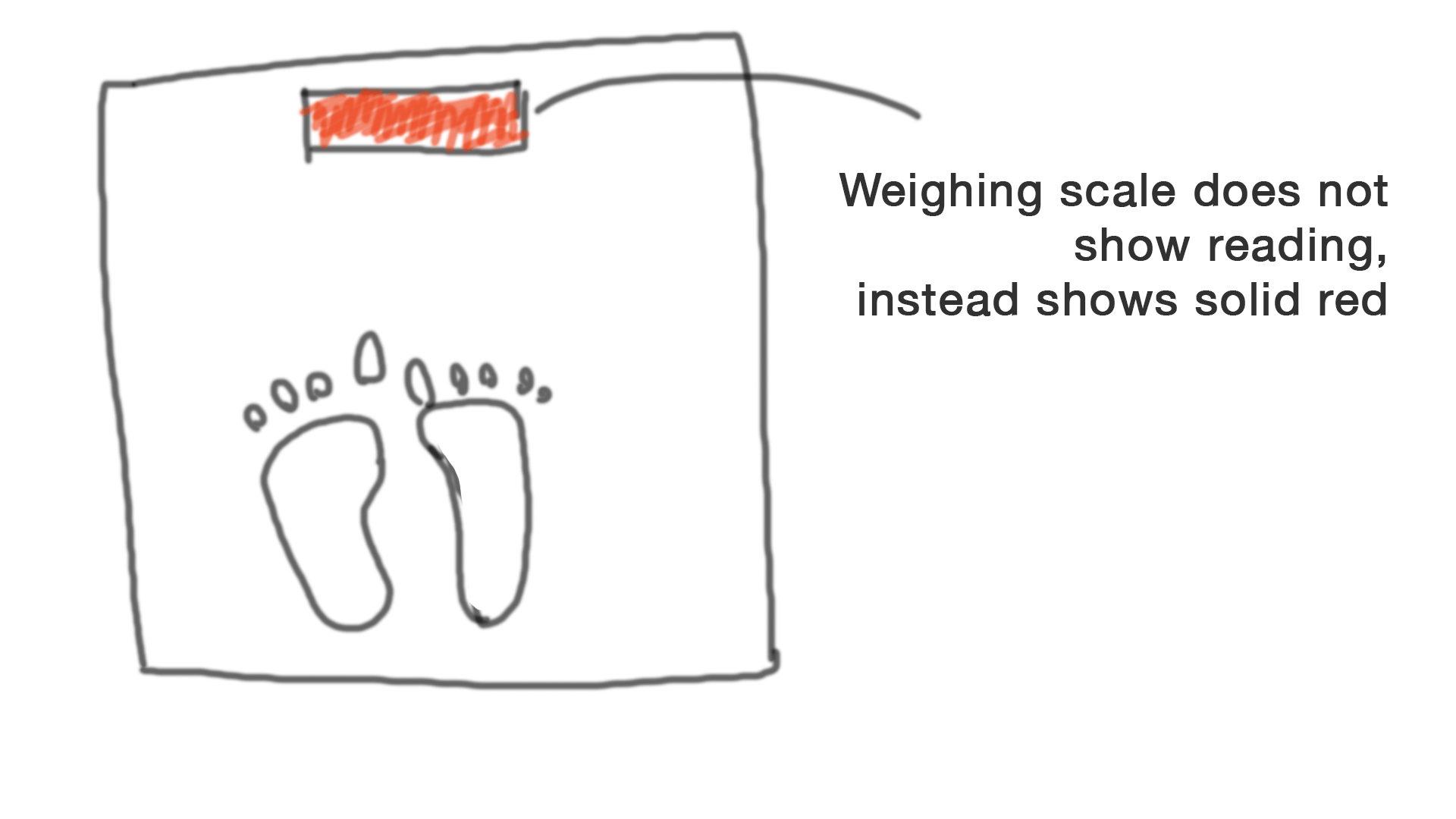

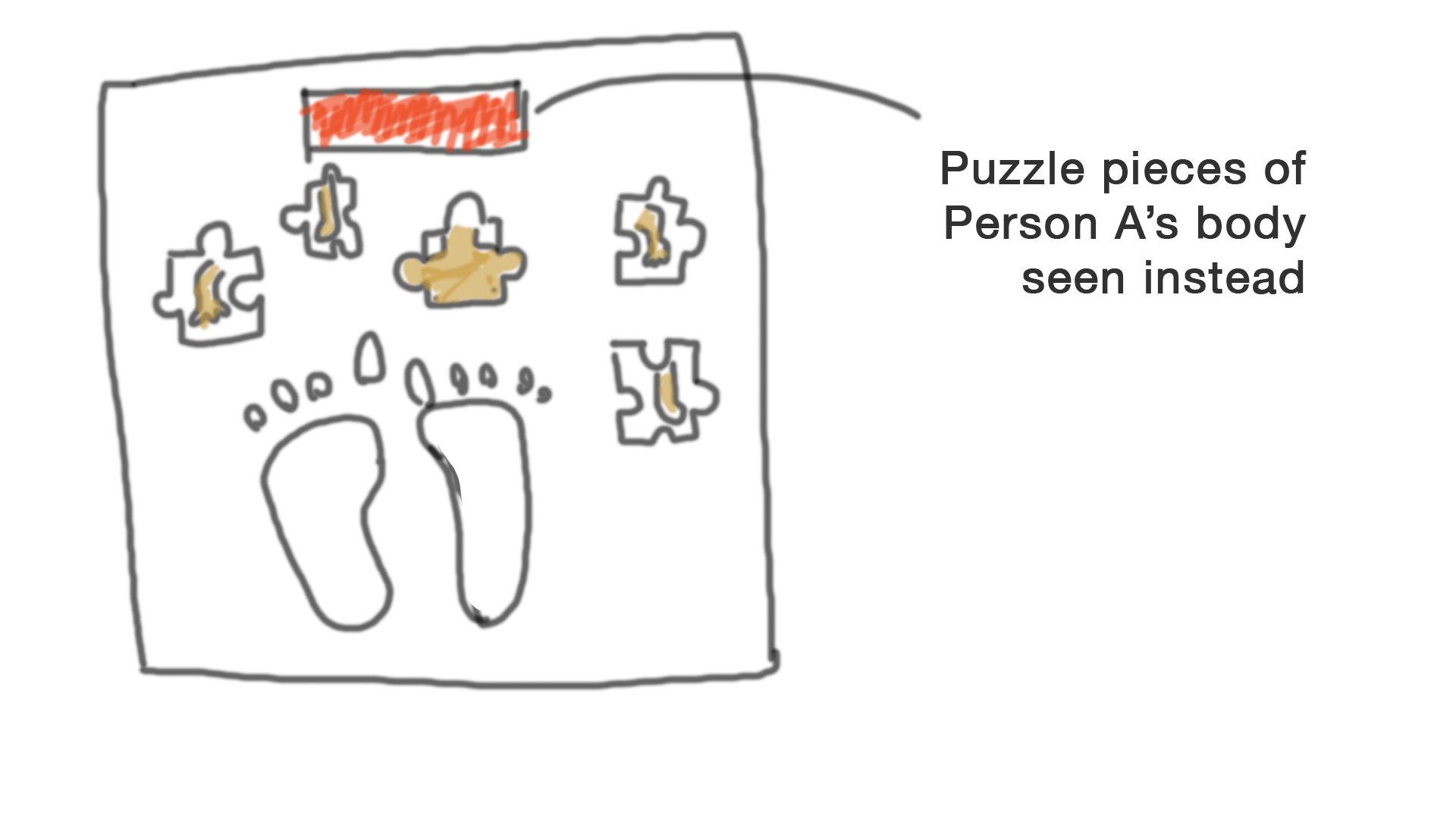

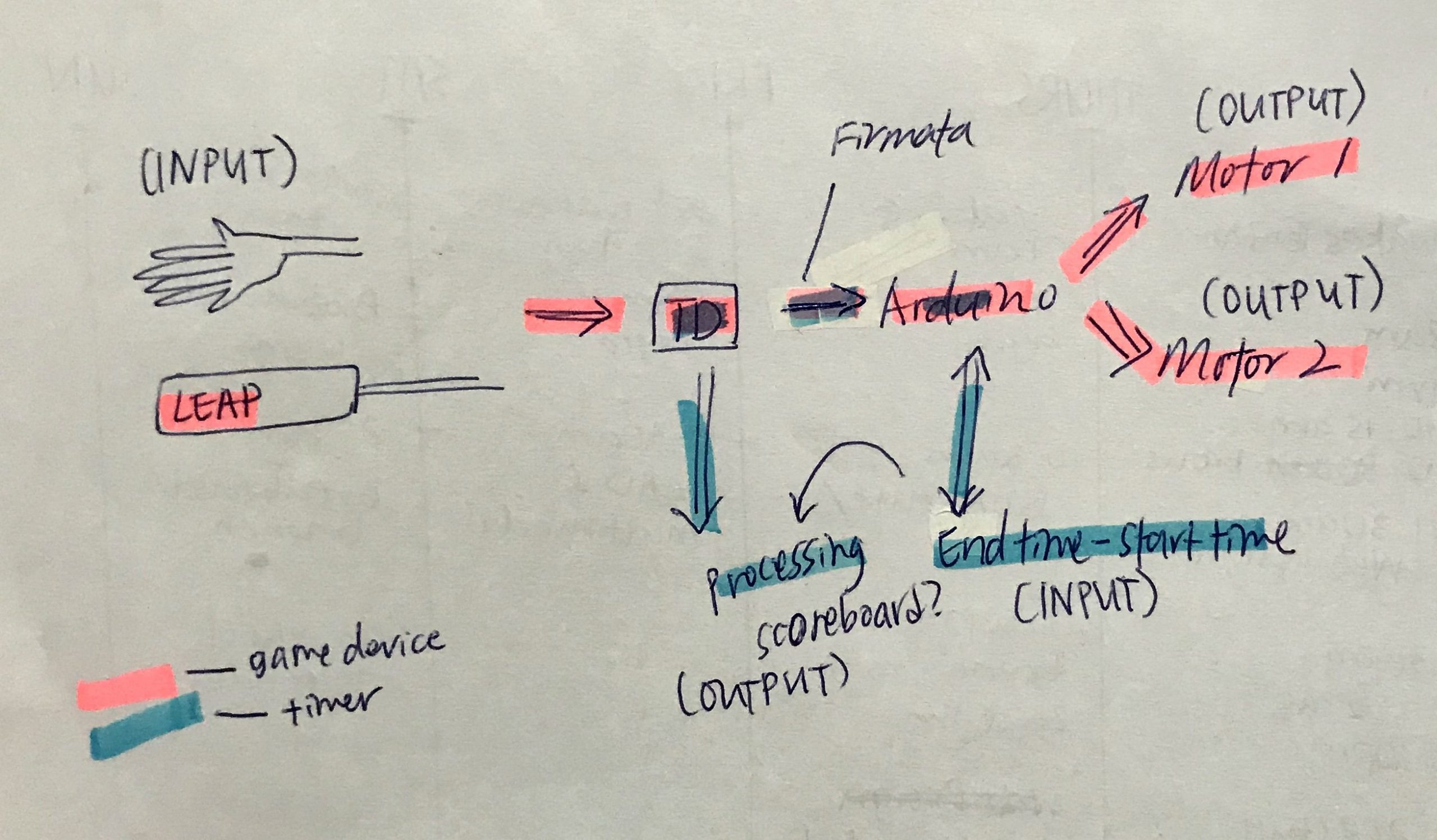

General flow of the setup:

The Leap Motion controller reads:

– the hand's pitch angle (HandPitch), which translates to the front-and-back movement of Servo motor 1

– the x position of the middle finger, which translates to the left-and-right movement of Servo motor 2
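The hand-to-servo mapping can be sketched as a clamped linear rescale, much like what Processing's `map()` and `constrain()` functions do. This is a minimal Python sketch, not the project's actual code: the input ranges (±45° of pitch, ±150 mm of finger travel) and the 0 to 180° servo range are assumptions that would need tuning to the real Leap Motion setup and motors, and the function and constant names are hypothetical.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from [in_min, in_max] to [out_min, out_max],
    clamping it to the input range first."""
    value = max(in_min, min(in_max, value))
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)

# Hypothetical ranges: the real ones depend on the Leap Motion setup and servos.
PITCH_RANGE_DEG = (-45.0, 45.0)      # hand pitch, degrees
FINGER_X_RANGE_MM = (-150.0, 150.0)  # middle-finger x position, millimetres
SERVO_RANGE_DEG = (0.0, 180.0)       # typical hobby-servo travel

def hand_to_servo_angles(hand_pitch_deg, middle_finger_x_mm):
    """Return (servo1, servo2) angles: pitch drives front/back (Servo 1),
    middle-finger x drives left/right (Servo 2)."""
    servo1 = map_range(hand_pitch_deg, *PITCH_RANGE_DEG, *SERVO_RANGE_DEG)
    servo2 = map_range(middle_finger_x_mm, *FINGER_X_RANGE_MM, *SERVO_RANGE_DEG)
    return round(servo1), round(servo2)
```

With the hand flat and centred, both servos sit at mid-travel: `hand_to_servo_angles(0, 0)` returns `(90, 90)`. Clamping keeps an over-tilted hand from driving a servo past its end stops.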

In order to release a ball:

– When a pinch is detected, Servo motor 3 is activated.
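Because a pinch stays detected across many frames, something has to make sure one pinch releases only one ball. A minimal sketch, assuming the tracking data provides a pinch strength between 0 and 1 on every frame: the two thresholds (0.8 to trigger, 0.5 to re-arm) and the `PinchTrigger` class are hypothetical, and the actual command sent to Servo motor 3 is left out.

```python
class PinchTrigger:
    """Fires once per pinch. Two thresholds (hysteresis) stop a pinch
    strength that hovers around a single cut-off from firing repeatedly."""

    def __init__(self, press_threshold=0.8, release_threshold=0.5):
        self.press_threshold = press_threshold      # hypothetical value
        self.release_threshold = release_threshold  # hypothetical value
        self.pinched = False

    def update(self, pinch_strength):
        """Return True only on the frame where a new pinch begins,
        i.e. the moment Servo motor 3 should be activated."""
        if not self.pinched and pinch_strength >= self.press_threshold:
            self.pinched = True
            return True
        if self.pinched and pinch_strength <= self.release_threshold:
            self.pinched = False  # hand relaxed: ready for the next pinch
        return False
```

Feeding it the strengths `[0.1, 0.85, 0.9, 0.7, 0.85, 0.3, 0.95]` fires on the second and last frames only, since the hand has to relax below 0.5 before another pinch counts.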

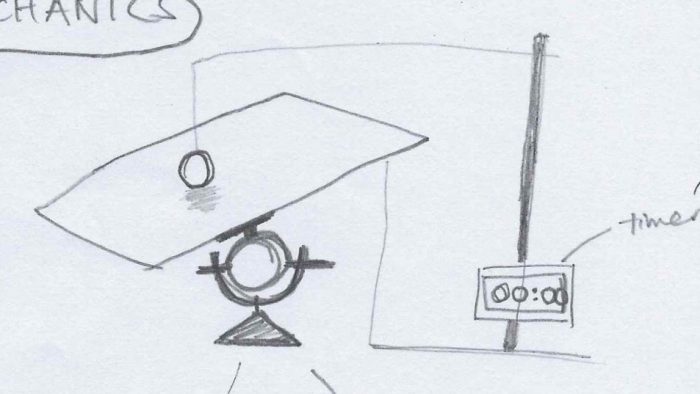

Tracking High Score:

– When an orange colour pixel is detected, the timer starts. When an orange pixel is no longer detected, the timer stops.
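Detecting "an orange pixel" can be sketched as finding the pixel whose colour is closest to a target orange and accepting it only if it is close enough. This minimal sketch assumes a frame given as rows of `(r, g, b)` tuples and uses plain Euclidean distance in RGB space; the target colour `(255, 140, 0)`, the tolerance of 60 and the function names are hypothetical and would depend on the ball and the lighting.

```python
import math

# Hypothetical target colour and tolerance, to be tuned to the real ball.
TARGET_ORANGE = (255, 140, 0)
MAX_DISTANCE = 60.0

def find_orange_pixel(frame, target=TARGET_ORANGE, max_distance=MAX_DISTANCE):
    """Return (x, y) of the pixel closest to the target colour, or None if
    even the closest pixel is further than max_distance from it."""
    best_pos, best_dist = None, float("inf")
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            d = math.dist(pixel, target)  # Euclidean distance in RGB space
            if d < best_dist:
                best_pos, best_dist = (x, y), d
    return best_pos if best_dist <= max_distance else None
```

Returning `None` rather than the closest pixel matters: without the tolerance, some pixel is always "closest to orange", so the timer would never stop.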

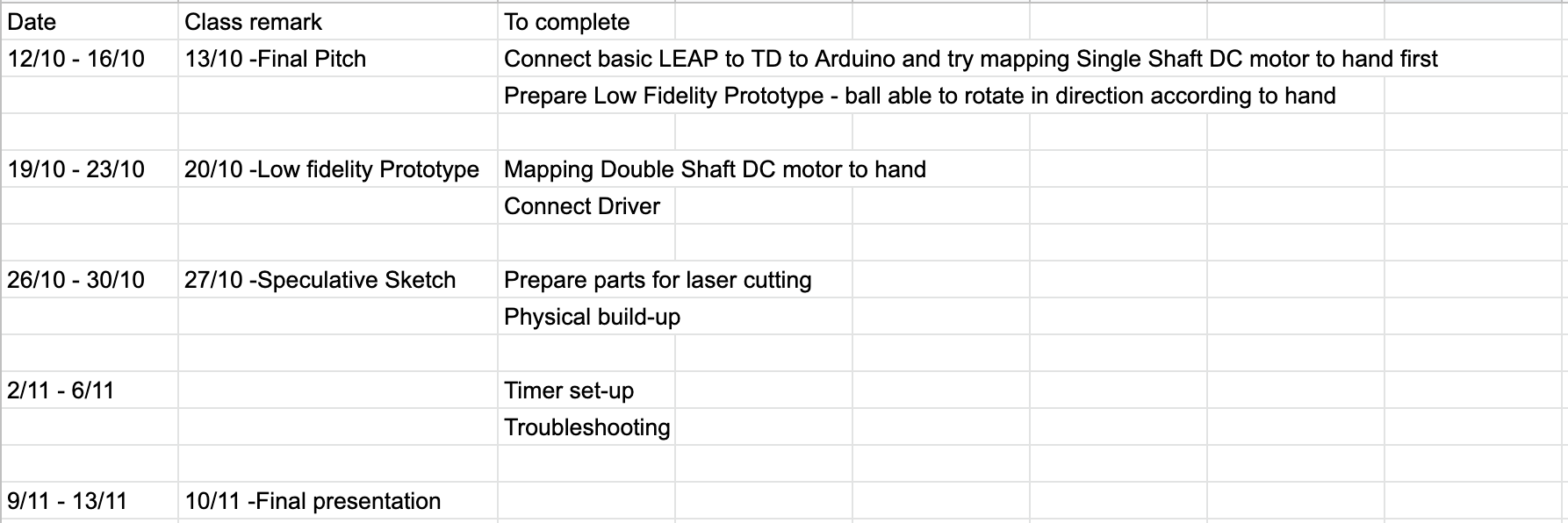

Process:

Structure

Picking up from where I left off with the low-fidelity prototype, here are the updates to the structure:

The main issue with this structure was that I totally forgot the body of the motor needs to be mounted on the structure so that only the rod turns. That's why, in the photo, it is held on by rubber bands. After I dealt with the mounting, the corners were in the way and had to be shaved down.

Also, the snout of the motor was SO tiny that the rod barely held on, so I needed to find another way to attach it.

Changes made:

The other motor, for the front-and-back movement.

Calibrating the board

As seen in the video above, the next alteration, following advice from LP, was to add a cloth, and that's what I eventually settled on. Works great!

High score

Basically, it works like this: look for the pixel that is orange (or closest to orange), and start the timer when that pixel is found, i.e. when the ball rolls in. The timer stops when the pixel is gone, i.e. when the ball rolls away. This time is then compared to the previous high score; if it is longer, it becomes the new high score.
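The start/stop/compare logic above maps onto a small state machine. A minimal sketch, assuming it is called once per frame with whether an orange pixel was found in that frame and the current time in seconds; the `HighScoreTimer` class and its method names are hypothetical.

```python
class HighScoreTimer:
    """Times how long the ball stays in view and keeps the longest run."""

    def __init__(self):
        self.start_time = None  # set while a run is being timed
        self.high_score = 0.0   # longest run so far, in seconds

    def update(self, ball_visible, now):
        """Call once per frame. Returns the length of a run that just
        ended, or None if no run ended on this frame."""
        if ball_visible and self.start_time is None:
            self.start_time = now        # ball rolled in: timer starts
            return None
        if not ball_visible and self.start_time is not None:
            run = now - self.start_time  # ball rolled away: timer stops
            self.start_time = None
            if run > self.high_score:
                self.high_score = run    # longer than before: new high score
            return run
        return None
```

In a live loop, `now` could come from `time.monotonic()`, which is not affected by changes to the system clock. Passing the time in, rather than reading it inside the class, also makes the logic easy to check with fixed timestamps.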

I initially had some problems setting up a camera, but solved them by using Processing 4. I also managed to set up a DSLR as a virtual webcam using Camera Live and CamTwist through Syphon.

Final thoughts:

This project was so, SO much trial and error. Because there was no single fixed result I was looking for, I was sometimes unsure why the ball was rolling off: was the ramp at the wrong angle? Was the board too small? Or was I simply lousy at the game and lacking hand-eye coordination? I had to try many different calibrations, picking the balls up again and again, to find a good in-between. I also had to brainstorm a way to get the ball onto the device without physically touching it, since I felt that placing it by hand could vary the gameplay depending on how the ball was placed.

Overall, given more time and the feedback from user testing, I believe that if I could somehow calibrate the board for the Z-axis, this game could be a lot more convincing.

Placing the devices around me also meant I couldn't conceal a huge bulk of wire, and I needed to make a lot of "long" wires to reach from the controller to the user's neck. Mess.



In case of fire, the sensors placed around the house would detect heat and smoke. The system would then alert the user through the speaker on the fire extinguisher and guide them on how to use it.


When the fire extinguisher is activated, the nozzle can rotate and automatically locate the source of the fire using the heat-detection camera.