http://mattrichardson.com/Descriptive-Camera/

In this post, I will be talking about the Descriptive Camera, created by Matt Richardson in 2012.

Have you ever gone into an expensive restaurant, opened the menu and, instead of seeing beautiful pictures of exquisite food, found nothing but a bunch of fancy culinary terms strung together, as if you were somehow expected to know what to order?

In my opinion, the Descriptive Camera reflects that. After the shutter is pressed, you would expect to see the photo taken, yet all you get is black-and-white text. It’s then up to your interpretation skills to decipher what was taken, just like how you would have to imagine what your food was going to look like.

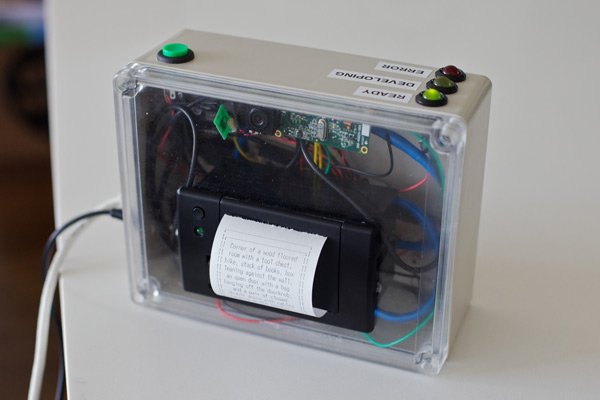

In my own (simplified) words, this is how the Descriptive Camera was built. We have a USB webcam, a thermal printer, 3 LEDs, 1 button (acting as a shutter button) and a BeagleBone (a small single-board Linux computer), all tied together by a series of Python scripts. When the shutter button is pressed, it triggers the webcam to take a photo. The photo is then sent via the Internet to a platform where there are people waiting to complete tasks. This platform is called Amazon Mechanical Turk, and it’s almost like a 24/7 workforce. The photo is sent together with a task, which is to describe what is in the photo. Someone out there does exactly that and sends a description back over the Internet. This output is then printed by the thermal printer.
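To make that flow a bit more concrete, here is a rough Python sketch of a single shutter press. This is my own illustration and not Richardson's actual scripts: the four callables (`capture_photo`, `submit_task`, `fetch_result`, `print_text`) are hypothetical stand-ins for the webcam, the Mechanical Turk request, the check for a worker's answer, and the thermal printer, and the polling interval and timeout are numbers I made up.

```python
import time
from typing import Callable, Optional


def run_descriptive_camera(
    capture_photo: Callable[[], bytes],
    submit_task: Callable[[bytes, str], str],
    fetch_result: Callable[[str], Optional[str]],
    print_text: Callable[[str], None],
    poll_seconds: float = 10.0,
    timeout_seconds: float = 600.0,
) -> Optional[str]:
    """Handle one shutter press: capture, ask a human, print the answer.

    All four callables are hypothetical placeholders for the real hardware
    and the crowdsourcing service; none of them is a real library API.
    """
    # 1. The shutter button was pressed, so grab a frame from the webcam.
    photo = capture_photo()

    # 2. Send the photo together with the task and remember the task id.
    task_id = submit_task(photo, "Describe what is in this photo.")

    # 3. Keep checking until a worker has answered or we give up.
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        description = fetch_result(task_id)
        if description is not None:
            # 4. Print the description on the thermal printer.
            print_text(description)
            return description
        time.sleep(poll_seconds)

    # Nobody answered in time, so nothing is printed.
    return None
```

Passing the hardware and network steps in as functions means the flow can be tried out on a laptop with fake stand-ins before any wiring is done.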

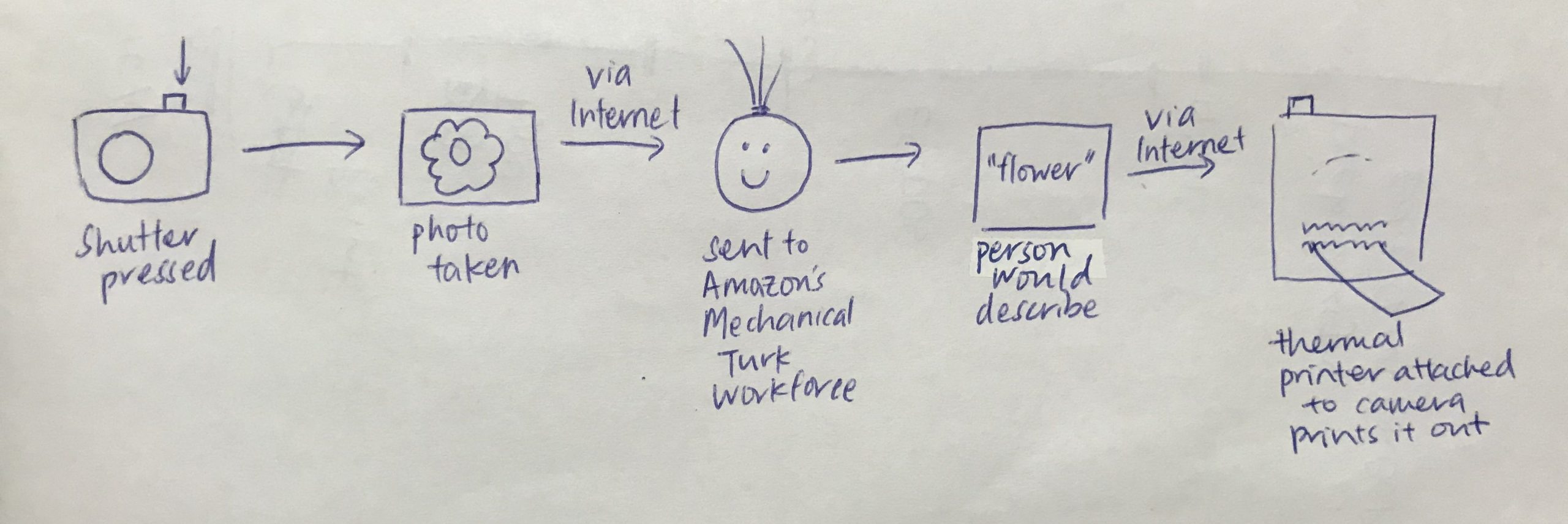

To all my visually-inclined readers who have no idea what they just read, here’s a visual aid:

These days, when a photo is taken, a lot of data comes with it: the date and time it was taken, where it was taken, and the camera settings used. What is not so often captured is the content of the photo, such as what the people in it are doing, their environment, or adjectives that describe the scene. The Descriptive Camera was created with this in mind, to output the latter kind of data. Richardson also believes this could be incredibly useful for searching, filtering, and cross-referencing photo collections.

These are my thoughts:

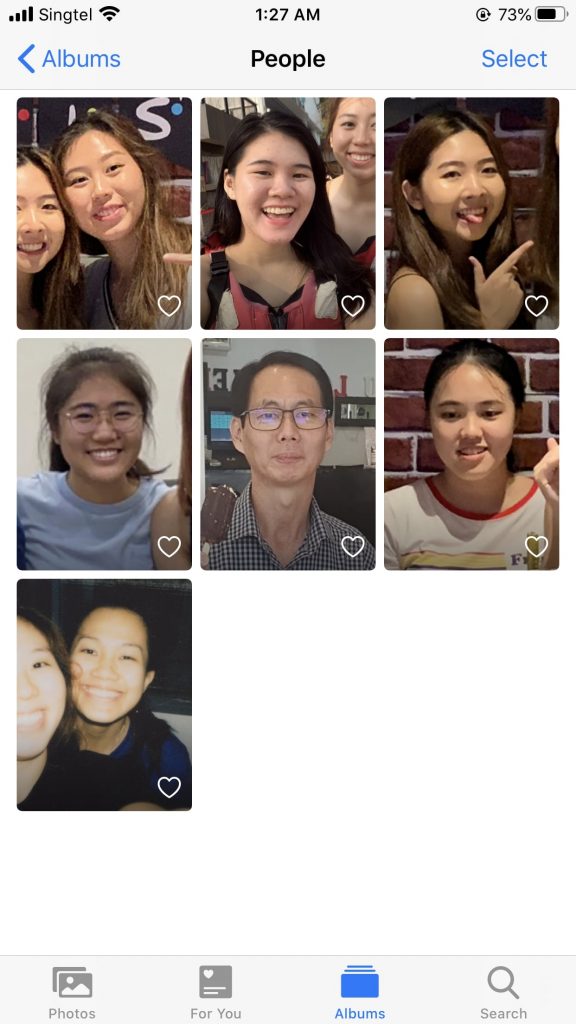

Considering that this was created in 2012, a good 8 years ago, I think he really foreshadowed what we actually see today. On our iPhones, Apple automatically uses machine learning to identify recurring faces in our Photos app and collects them into the People album. Here’s an example of what I see in my gallery:

So this camera, though it doesn’t do this itself, speaks of a concept that is very relevant and practical.

I also appreciated the fact that Richardson decided to build a camera form instead of just using a smartphone’s built-in camera. The phone itself contains a truckload of data, and with his intention of streamlining data, using a phone camera could compromise just that. And though this might sound shallow, having the tactility of a physical camera is just fun! Personally, the sensation of clicking cameras is very… satisfying.

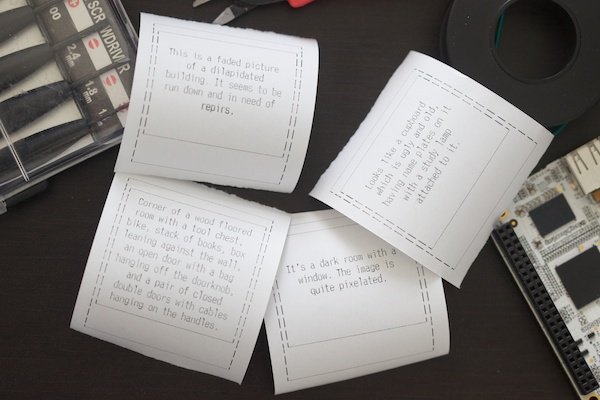

The description receipt that comes out at the end also feels sort of like a reward, and the whole thing seems to resemble a Polaroid camera. The novelty of having a physical print, away from the boring digital pixel compound, excites me!

On the flip side, Richardson did mention that each print costs money, because you need to pay the person who is describing the image, especially when you want it done right away. There is also an apparent lag time of 3 to 6 minutes.

I think with technology these days, if there was a chance to reconfigure the system, we could definitely look at face recognition using OpenCV, Python and deep learning, which seems to have gained traction only a couple of years back. Accompanying this should be object recognition AI, which is an even more recent technology. Overall, I believe this would eliminate the need for any humans at all and shorten the time before feedback.
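As a rough idea of what the last step of such a system might look like, here is a small Python sketch that turns recognition results into a printable sentence. The detector itself is left out on purpose. I am assuming, purely for illustration, that it hands back `(label, confidence)` pairs; the function name `describe_from_labels` and the 0.5 cut-off are my own hypothetical choices, not part of OpenCV or any other library.

```python
from typing import Iterable, List, Tuple


def describe_from_labels(
    detections: Iterable[Tuple[str, float]],
    min_confidence: float = 0.5,
) -> str:
    """Build a one-sentence description from (label, confidence) pairs.

    The input format is an assumption; a real face or object recognizer
    would need a small adapter to produce it.
    """
    # Keep confident detections only, dropping repeated labels.
    kept: List[str] = []
    for label, confidence in detections:
        if confidence >= min_confidence and label not in kept:
            kept.append(label)

    if not kept:
        return "Nothing recognizable in this photo."
    if len(kept) == 1:
        return f"This photo shows {kept[0]}."
    # Join as "a, b and c" so it reads like a sentence on the receipt.
    return "This photo shows " + ", ".join(kept[:-1]) + " and " + kept[-1] + "."


# Example with made-up detections:
print(describe_from_labels([("a person", 0.9), ("a dog", 0.8), ("a kite", 0.2)]))
```

The result would obviously be far less charming than what a human writes, but it would come back in well under the 3 to 6 minutes the original takes.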

In conclusion, the Descriptive Camera is a paradox in many ways: you expect photos but you get text, and it has a touch of rustic-ness yet speaks of an idea so pertinent to the present. All in all, this work got me thinking and I genuinely enjoyed learning more about it.