Video:

Short Essay:

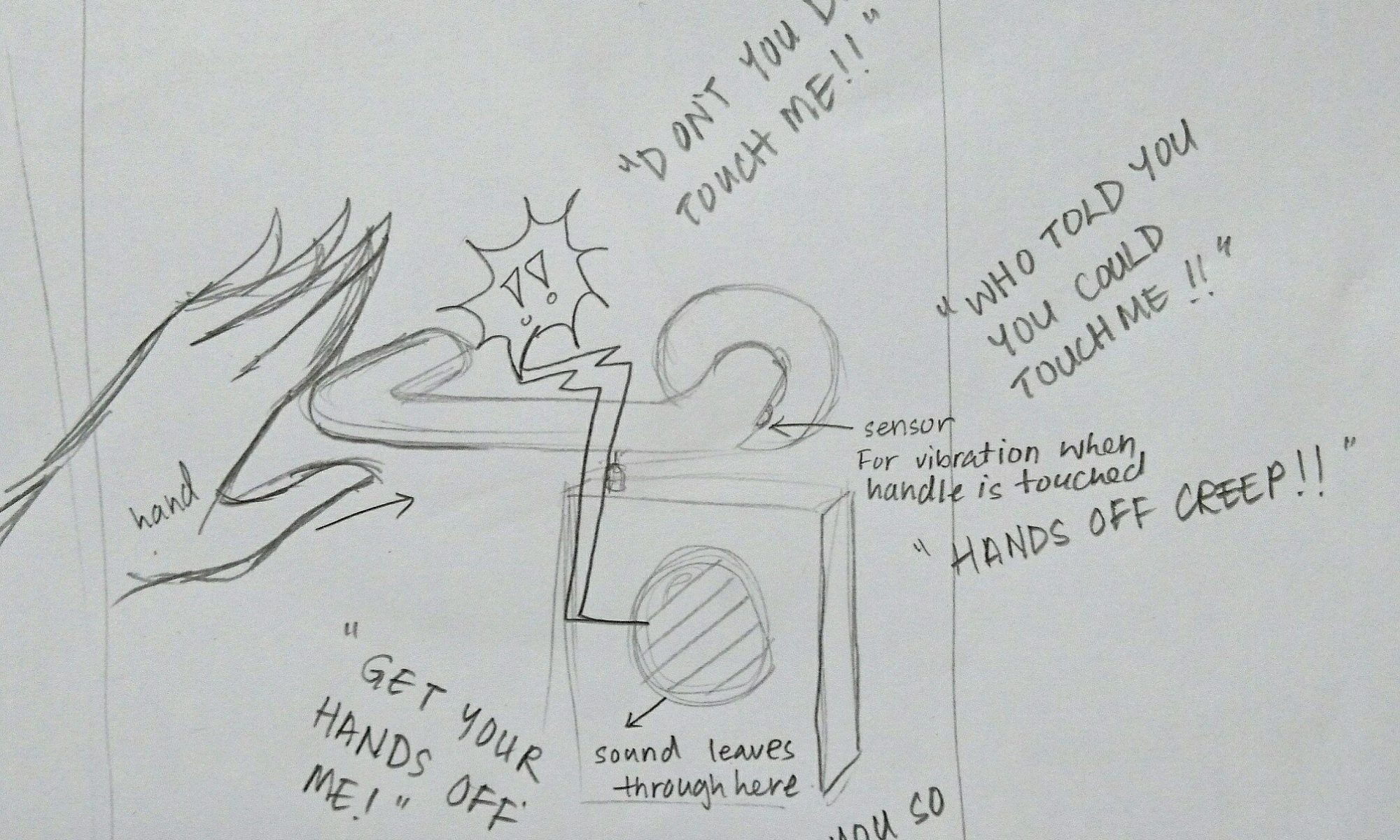

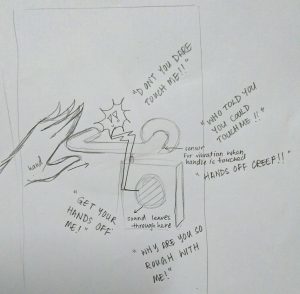

This project revolves around the idea of the gaps between noise and sound, so we created a portable device that samples the surrounding sound and lights an LED in a corresponding colour. The colour is calculated so that ‘red’ is volume, ‘green’ is pitch (regardless of octave) and ‘blue’ is pitch (exact octave). Red and blue were scaled to fit a range of 0 to 255. For green, however, we created 5 ranges, skewed so that the range for a humanly possible pitch is larger than the range for a pitch that is not humanly possible. The code uses an array to store the colour data for each pixel until all nine pixels have been used, after which the stored information is overwritten one pixel at a time.
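The scaling and the nine-pixel array described above can be sketched roughly as follows. This is a minimal illustration in plain C++, not our actual Arduino sketch: the names `Colour`, `scaleToByte`, `PixelHistory` and `kPixelCount` are made up for this example, and the input ranges passed to `scaleToByte` are assumptions. The five skewed green ranges are left out because their boundaries are not listed in this post.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// One stored reading per pixel (hypothetical layout).
struct Colour {
    std::uint8_t red;    // volume
    std::uint8_t green;  // pitch, regardless of octave
    std::uint8_t blue;   // pitch, exact octave
};

// Linearly rescale `value` from [inMin, inMax] to 0..255.
// Out-of-range input is clamped so the result always fits in one byte.
std::uint8_t scaleToByte(float value, float inMin, float inMax) {
    assert(inMax > inMin);
    if (value <= inMin) return 0;
    if (value >= inMax) return 255;
    return static_cast<std::uint8_t>((value - inMin) * 255.0f / (inMax - inMin));
}

constexpr std::size_t kPixelCount = 9;  // nine pixels, as in the post

// Fixed-size history: once all nine slots are filled, the next reading
// wraps around and overwrites slot 0, then slot 1, and so on.
class PixelHistory {
public:
    // Stores the colour and returns the index of the pixel it was written to.
    std::size_t push(const Colour& c) {
        const std::size_t written = next_;
        pixels_[written] = c;
        next_ = (next_ + 1) % kPixelCount;
        return written;
    }

    const Colour& at(std::size_t i) const { return pixels_[i]; }

private:
    std::array<Colour, kPixelCount> pixels_{};
    std::size_t next_ = 0;
};
```

On the device itself, each stored `Colour` would then be sent to the matching pixel on the strip using the NeoPixel library linked in the references below.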

References for the code:

- Origin of basic-ass code (which is no longer here): https://www.teachmemicro.com/arduino-microphone/

- Origin of getAmplitude code: https://learn.adafruit.com/adafruit-microphone-amplifier-breakout/measuring-sound-levels

- Origin of getFrequensea code: https://www.norwegiancreations.com/2017/08/what-is-fft-and-how-can-you-implement-it-on-an-arduino/

- Origin of NeoPixel code: https://learn.adafruit.com/adafruit-neopixel-uberguide/arduino-library-use

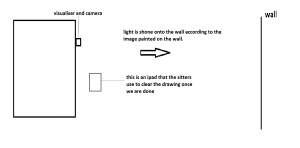

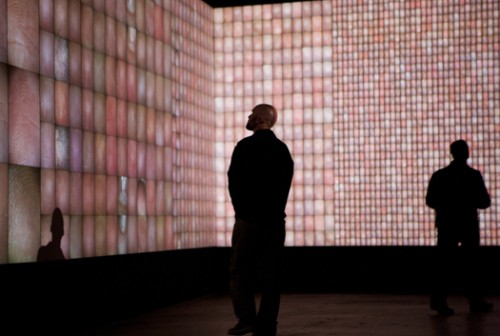

Our work references works like ‘Pulse Index’ by Rafael Lozano-Hemmer. It is similar in that it records the viewers’ input (thumbprints in their case, sound in ours) on a visual plane to show the changes over time.

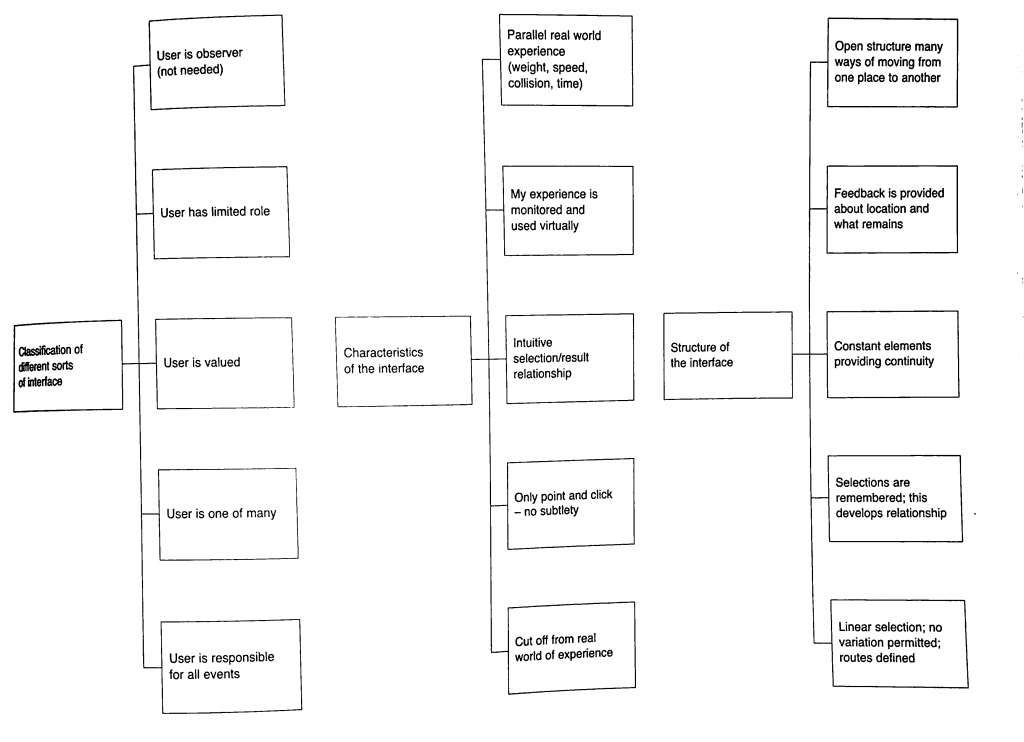

Characteristics of Interface:

Classification of interface:

Our project falls under ‘User is one of Many’ and ‘User is valued’. It values the unity of the environmental sound: your sound is captured as part of this collective, and you can’t discern which sound is yours and which is the environment’s, hence ‘User is one of Many’. However, ‘User is valued’ is also present, in that the user is the anomaly that creates the most change when they interact with the device directly.

Characteristics of interface:

Our project falls under ‘Monitored and reflected experience’ as well as ‘Intuitive selection/results relationship’. For the former, the device collects the environmental sound and shows a colour representation, so all interactions are mirrored and shown directly, based on the sounds that you make. The latter is true because, when viewers see the light changing in response to sound, they automatically try to interact with it to see how far it will change. This leads them to look for the gaps between the sounds they make as they see the different coloured representations of each sound.

Structure of Interface:

Based on the flow chart, our project complies with everything except the last one, ‘Linear Selection’. The first idea, ‘Open structure’, is seen in the way we made our device portable. The second idea, ‘Feedback provided’, takes the form of LED lights lit in accordance with the sound of the environment and of the people interacting with it. The third idea is ‘Constant elements providing continuity’, since the set-up is designed to reflect the sound at every (how many seconds). Finally, selections are recorded in nine LED pixels, showing 8 seconds of the recently past environmental sounds.

(Liz finally answered the question yay)

Who did what:

The coding for this project was done by En Cui and the physical fabrication of the device was done by me (Elizabeth) (but you know, in the end Liz kind of screwed up a lot of the soldering and stuff and needed En Cui and Lei’s help to put it together. Thank youuu)

Process:

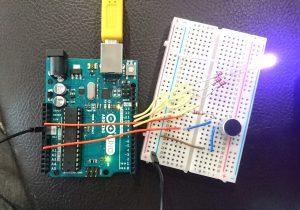

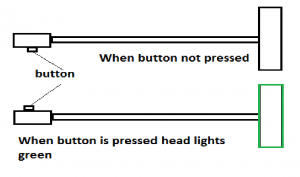

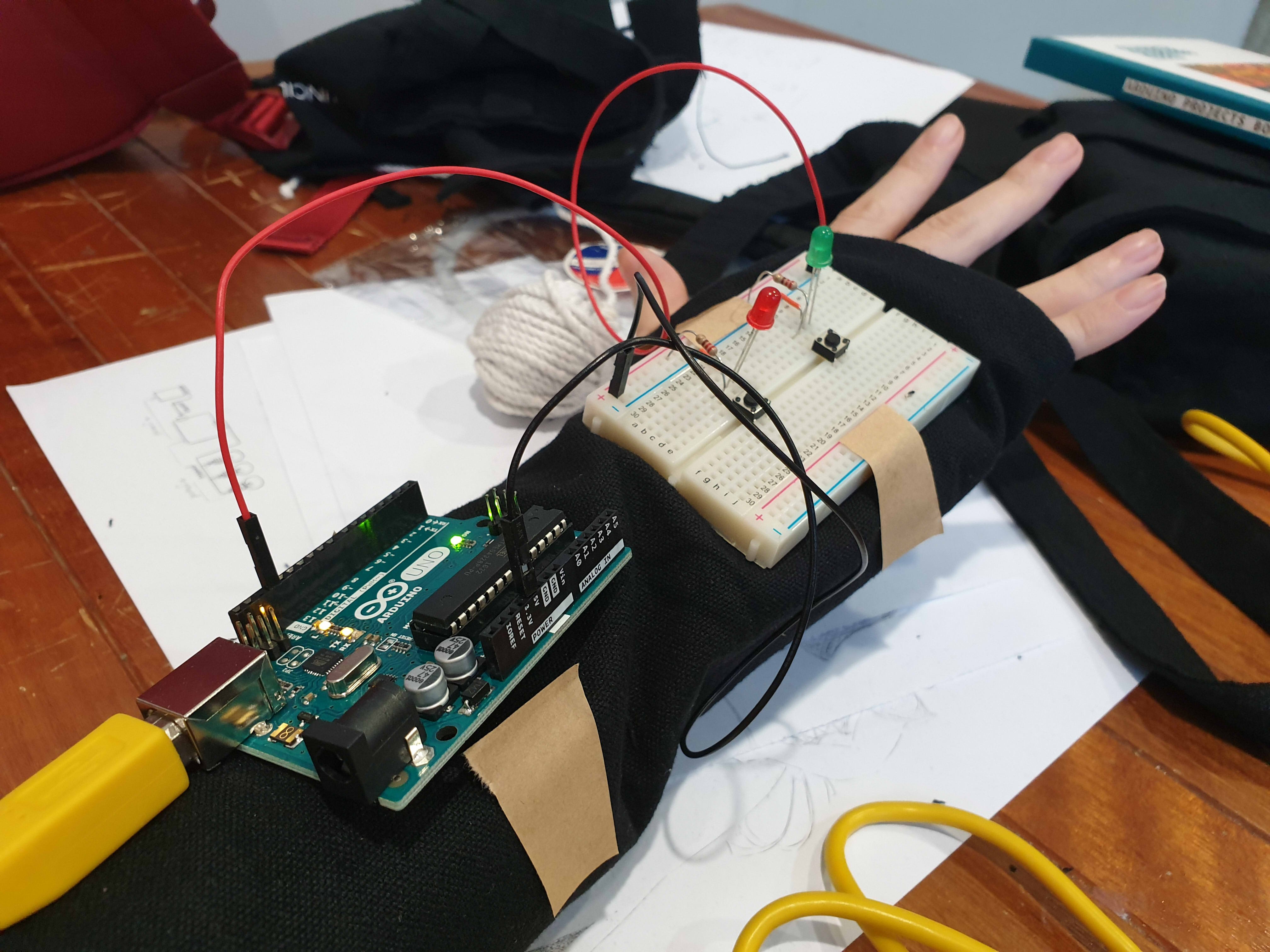

From the initial stage of manually making LEDs light up by pressing buttons whenever someone made a sound, we moved on to a circuit where the LED lights up in a certain colour according to the environmental sound.

After that, we used this circuit as a reference and moved from a single RGB LED to an LED strip. That way we could create a set-up where the colour of a certain period of time could be recorded and compared to the previous period of time.

yay the LED lights up.

Measuring the length of wire for the glove.

This is where problems started surfacing on the soldering part so there was a redo. (soldering wise and circuit wise sob)

Testing out the Circuit.

Yay it’s done.

After Review:

Everyone reacted to the work as we hoped they would, despite there being only two participants. The others crowded around and tried to add their own input by making noises around the two. We did get comments, though, that the feedback is not fast enough to show the exact inflection of a voice as someone is speaking, so the effect is not very obvious. We forgot to mention this during the review, but the delay is also constrained by technical limitations. If we reduce the delay, we will need more LEDs to represent the same amount of time, and the Arduino memory overloads at 13 LEDs. Additionally, even at delay(0), the Arduino still cannot run fast enough to get the desired result.

As a result of the delay, our theme might not be very obvious for viewers to pick up on. The eventual solution may thus be to use something with more processing power.
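The trade-off between delay and LED count can be worked through with a little arithmetic. The sketch below is only an illustration: `ledsNeeded`, `fitsInMemory` and `kMaxSafeLeds` are hypothetical names, the one-second interval is inferred from "nine pixels showing 8 seconds" rather than stated anywhere, and treating 12 as the largest safe count is an assumption based on the overload at 13.

```cpp
#include <cassert>

// Assumption: memory overloads at 13 LEDs, so 12 is taken as the largest safe count.
constexpr int kMaxSafeLeds = 12;

// Pixels needed to show `windowMs` of history when one pixel is written
// every `intervalMs`. The "+ 1" follows the post's own figures (nine pixels
// for 8 seconds), read here as eight past intervals plus the current reading.
int ledsNeeded(int windowMs, int intervalMs) {
    assert(windowMs >= 0 && intervalMs > 0);
    const int intervals = (windowMs + intervalMs - 1) / intervalMs;  // round up
    return intervals + 1;
}

// True if the strip length stays under the count where memory ran out.
bool fitsInMemory(int leds) {
    return leds <= kMaxSafeLeds;
}
```

With these assumptions, `ledsNeeded(8000, 1000)` gives 9 pixels, which fits, while halving the delay with `ledsNeeded(8000, 500)` gives 17 pixels, which is past the point where the memory ran out.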

There were also comments that participants were working very hard to satisfy the device. Some said it seemed like a prop for band or choir performances, or a tool for training to hit an exact pitch.

Summary Reflection:

EC needs to actually recognise when something is not possible, rather than “maybe possible”.

Liz should not be so innovative. Liz is just not good with technology.

We should have thought out the final form better.

Extended Concluding thoughts (if you want to read about our woes):

En Cui’s Reflection:

Concept-wise, the challenge was that the core concept and form were not well-aligned. While we talked out several issues, there’s still the challenge of the interstice being unclear. But I think, in the end, the clarity of the message depends on how you interact with the wearable. For example, the distinction is much clearer if you experience the wearable in multiple contexts, than just one.

Regarding the code and circuit, it was actually mostly okay. While things didn’t always work, the only solution needed was to observe the problem, deduce what could be possible reasons for its occurrence, then test out my hypotheses one by one. Examples include mathematical errors and faulty wiring. I also did soldering part 2 for the microphone, and honestly the solution was just learning to recognise patterns of problems and solutions based on past mistakes, such as the solder not sticking to the iron (wiping more), or getting fingers burnt (plasters).

I also realise after a full day of reflection that I’m just incompetent at doing group work efficiently. Leaving me in charge is a generally bad idea.

Elizabeth’s Reflection:

For the most part I felt very challenged by the project, especially since it was the first time we were using and putting together components to make a circuit. For the physical fabrication portion, it was the first time I had used a soldering iron, and my circuit looked very ugly after that, and I don’t really think I improved much in that aspect even after multiple attempts 🙁 When using the hot glue gun to insulate the exposed solder I think I made the circuit worse, because there was already a build-up of solder.

Also, I apparently did not solder the circuit the right way. You can only solder your wires to one side of the LED because they are fickle and like to have their electrical charge flowing in one direction. Also, do not solder and hot glue your circuit until you are 100% sure it works; it saves you a lot of heartache and time (thank you Lei and En Cui for dealing with my screw-ups D;).

I also made a few mistakes by accidentally piercing the LED strip’s digital pins, thinking I could sew it down that way. Thinking about it now, I should have known better than to try piercing any part of the components.

Speaking of computers, I feel very attacked by my own computer. I think it has issues running the code we shared over Google Docs, and it gave me a heart attack that I might have short-circuited the only RGB LED in the starter pack; the circuit still refused to light even after I confirmed that I had not. I think there is something wrong with my computer DX. I should either leave the computer testing to En Cui or find a school computer for this (pick the right computer, since not all computers have Arduino).

If we had had a bit more time and I had a bit more skill in soldering, we would have liked to have more LED lights to reflect the change in sound.