Butterflies

My fashion concept revolves around visualising the feeling of anxiety. Anxiety is sometimes described as butterflies in your stomach, so I wanted to work with butterflies on my outfit. The interactivity of the dress is meant to show the phases of being anxious. In the first phase, the butterflies come alive, driven by servo motors that are loud like the blood rushing in your ears. The second phase is the calm of overcoming that anxiety, where the butterflies settle and there is light.

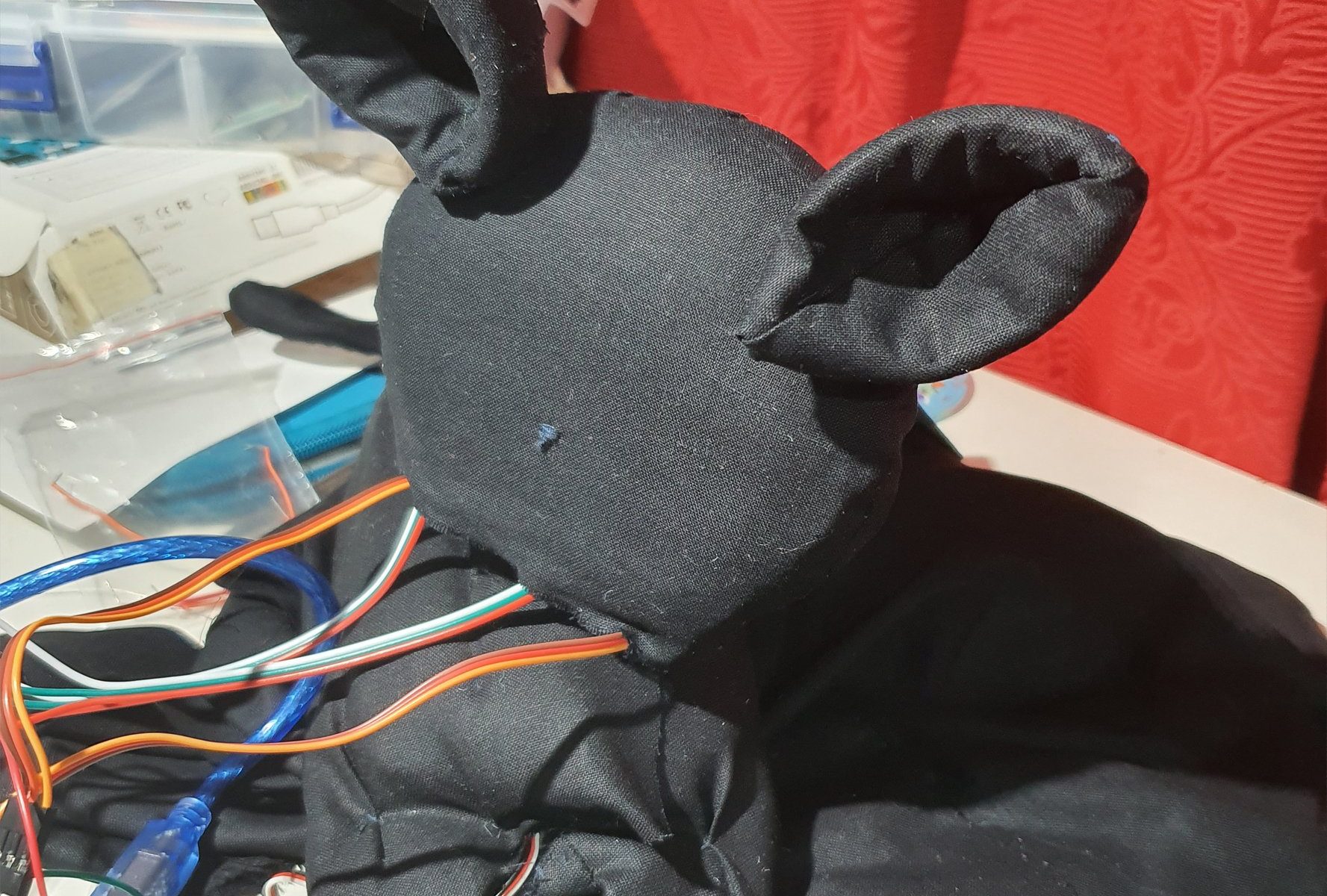

The dress was supposed to be dark, representing the things that lurk in the shadows where you cannot really see them, hence I chose black fabric. Eventually, though, I added some red accents to liven up the dress, so that everything is not just one dark mess.

Technology:

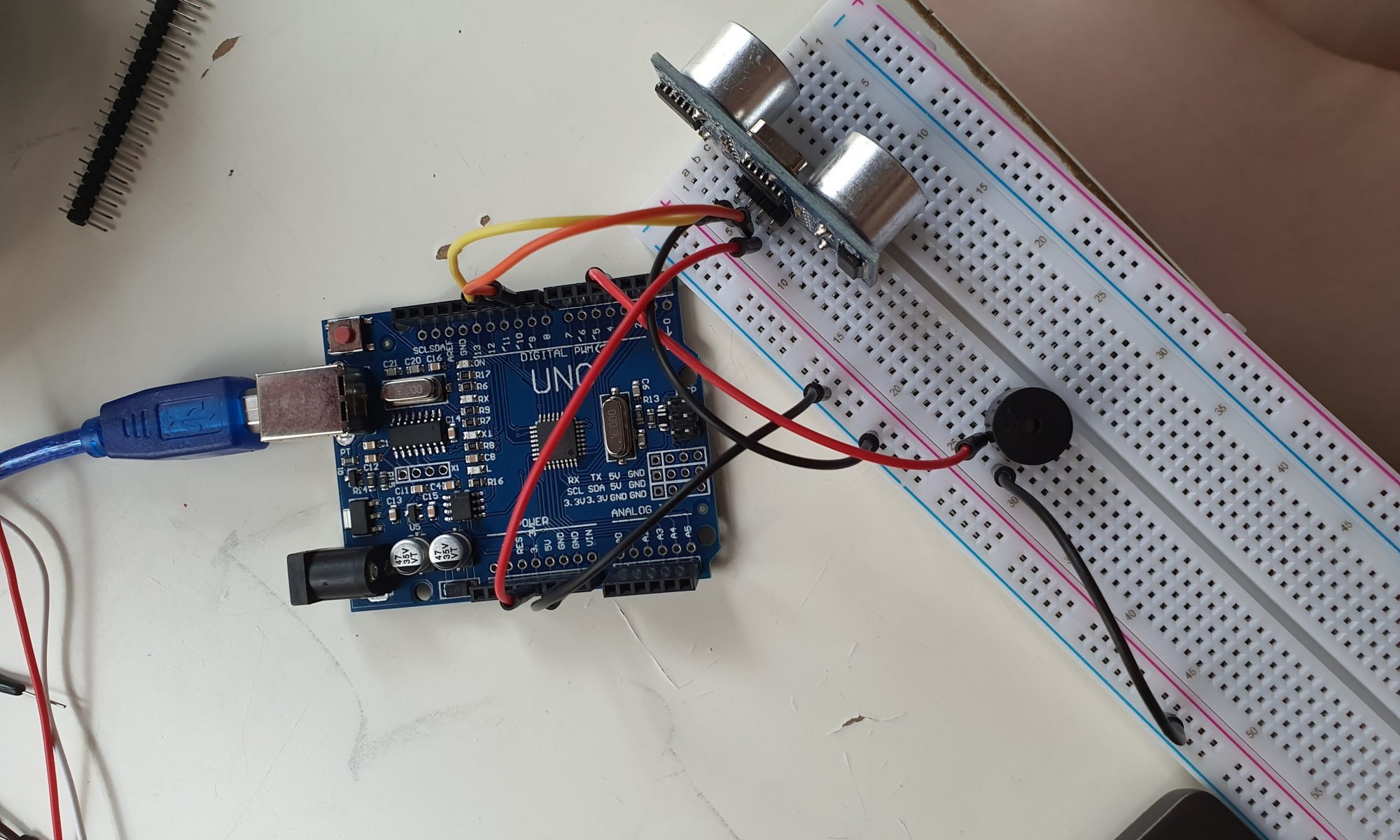

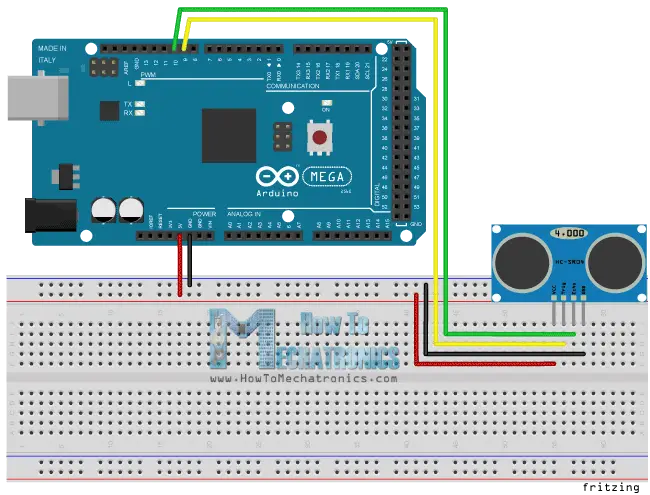

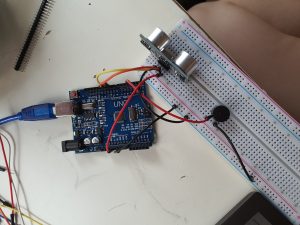

I separated the interactivity into two circuits.

The first is the servo motor, which works like a pulley system: it pulls the butterfly wings up, and gravity brings them back down.
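A minimal sketch of the timing behind this flapping, not the actual code running on the dress. The function name `wing_angle`, the 600 ms flap period and the 60 degree pull angle are all assumptions made up for illustration. The servo pulls the string for the first half of each cycle and releases it for the second half, so that gravity can drop the wings.

```python
def wing_angle(t_ms, period_ms=600, up_angle=60):
    """Return the servo target angle (degrees) at time t_ms.

    Assumed behaviour: the servo holds the wings up for the first half
    of each period, then returns to 0 so gravity lets the wings fall.
    period_ms and up_angle are illustrative values, not measured ones.
    """
    phase = (t_ms % period_ms) / period_ms  # position in the cycle, 0.0 to 1.0
    return up_angle if phase < 0.5 else 0


# Print the target angle every 150 ms across two flaps.
for t in range(0, 1200, 150):
    print(t, wing_angle(t))
```

On a microcontroller the returned angle would be sent to the servo on every loop, with the time taken from the board's millisecond clock.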

The second is the lights, which pulse like a heartbeat. I felt that a pulsing light would be nicer than a steady one, as it is softer and calmer.
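One way to get that soft pulse is to fade the brightness along a smooth wave instead of switching the light on and off. The sketch below is only an illustration under assumptions: `pulse_brightness` is a hypothetical name, 60 beats per minute is a guessed resting rate, and 0 to 255 is the usual 8-bit range for LED dimming.

```python
import math


def pulse_brightness(t_ms, bpm=60, max_level=255):
    """Return an LED brightness (0 to max_level) at time t_ms.

    Uses a raised cosine so the light fades in and out smoothly
    once per beat. bpm is an assumed value, not a sensor reading.
    """
    period_ms = 60_000 / bpm                 # length of one beat in ms
    phase = (t_ms % period_ms) / period_ms   # position in the beat, 0.0 to 1.0
    level = 0.5 - 0.5 * math.cos(2 * math.pi * phase)  # 0 at the start, 1 mid-beat
    return round(max_level * level)


# Sample one beat at quarter-beat steps.
for t in (0, 250, 500, 750):
    print(t, pulse_brightness(t))
```

The brightness starts at zero, peaks halfway through each beat and fades back, which reads as calmer than a hard blink.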

If this project existed on its own, I had thought it could respond to the wearer's heartbeat using a heartbeat sensor. For the fashion show, I wanted to do a collaboration with Pei Wen: when her dress comes close, it would trigger the second part of my dress, where it lights up. This represents the comfort a close friend gives, which results in the calm.
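The switch between the two phases could be sketched as a simple distance check. This assumes some kind of proximity sensor that reports how far away the other dress is in centimetres; how the sensing would actually be done was not decided, so `dress_state` and the 50 cm threshold are hypothetical.

```python
def dress_state(distance_cm, threshold_cm=50):
    """Pick which part of the dress is active.

    distance_cm is an assumed sensor reading of how far away the
    other dress is. Within the threshold the dress is 'calm'
    (lights pulse); otherwise it is 'anxious' (butterflies flap).
    """
    return "calm" if distance_cm <= threshold_cm else "anxious"


# Pei Wen's dress approaching step by step.
for d in (200, 120, 50, 20):
    print(d, dress_state(d))
```

In the anxious state only the servo circuit would run, and in the calm state only the light circuit, matching the two separate circuits.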

Improvements:

I need a bit more support for the butterflies. As the mechanism relies on gravity to let the wings fall, each butterfly has to perch horizontally rather than on the side of a wall. So I would like to either remake the butterfly system or make some kind of support.

I would also like to conceal my wires and the butterfly stand better, maybe by making cloth flowers to hide them.

I would also like to expand the butterfly circuit to the hat portion, so hopefully I can cut or find another way to make more butterflies.

For the Fashion Show:

My role was originally to help out with the photo shoot. I didn't really think about it too much because the role sounds straightforward enough. I could help out with the other areas too if help is needed.