Continuing from my previous post, Process 2.

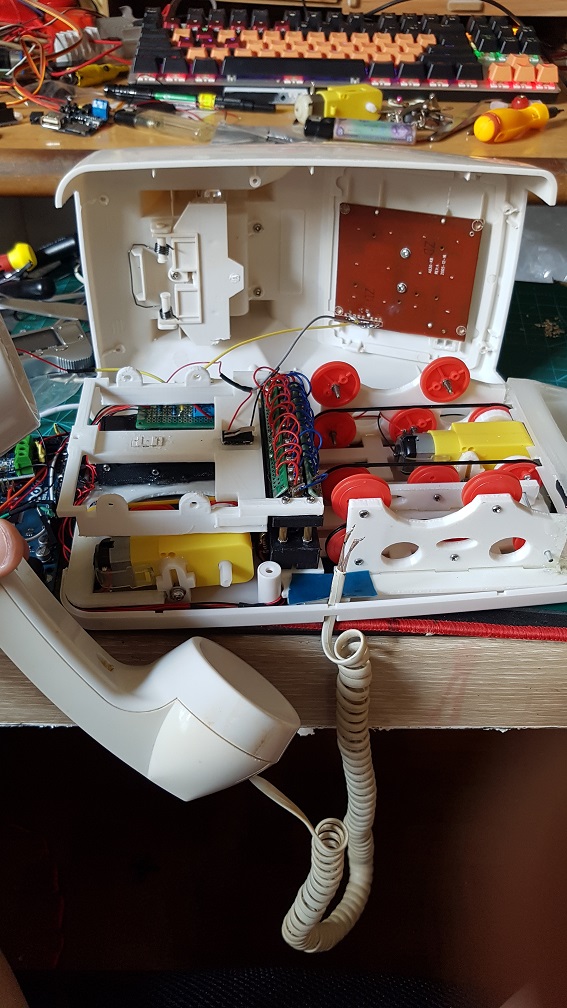

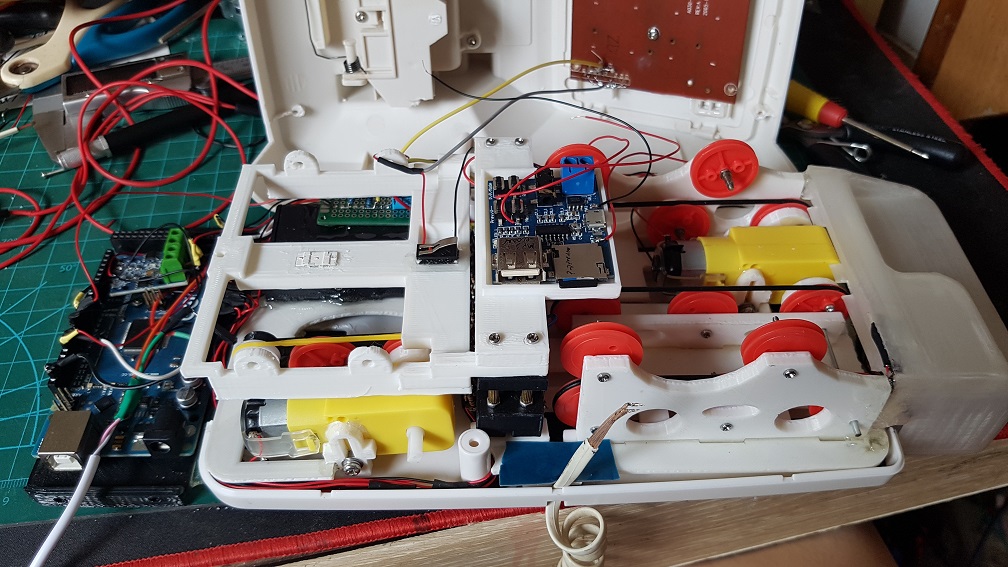

Again, I have progressed much further since that post, mainly in designing a workable electrical and mechanical system that fits into the telephone and in writing the Arduino code.

First, let's start with the final video!

Back into the Process

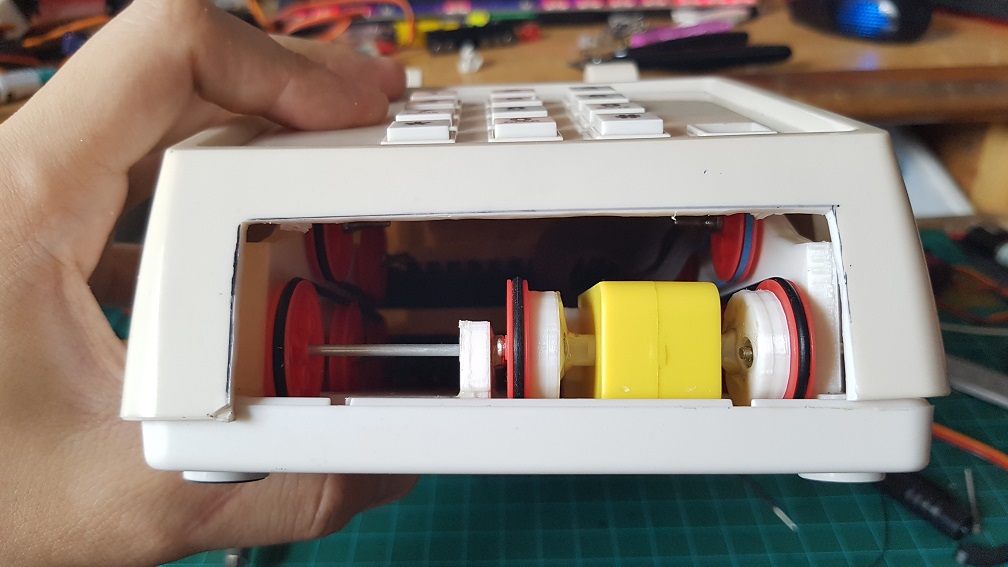

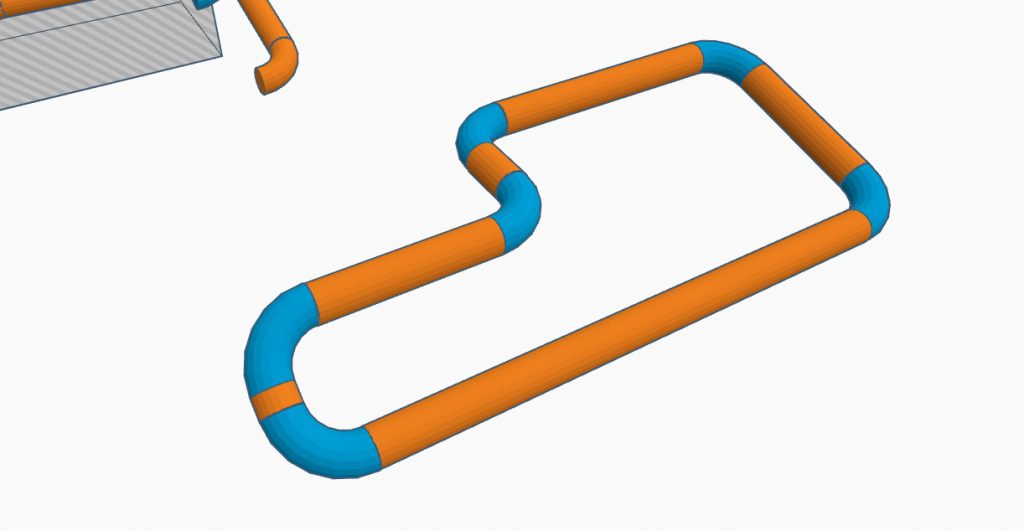

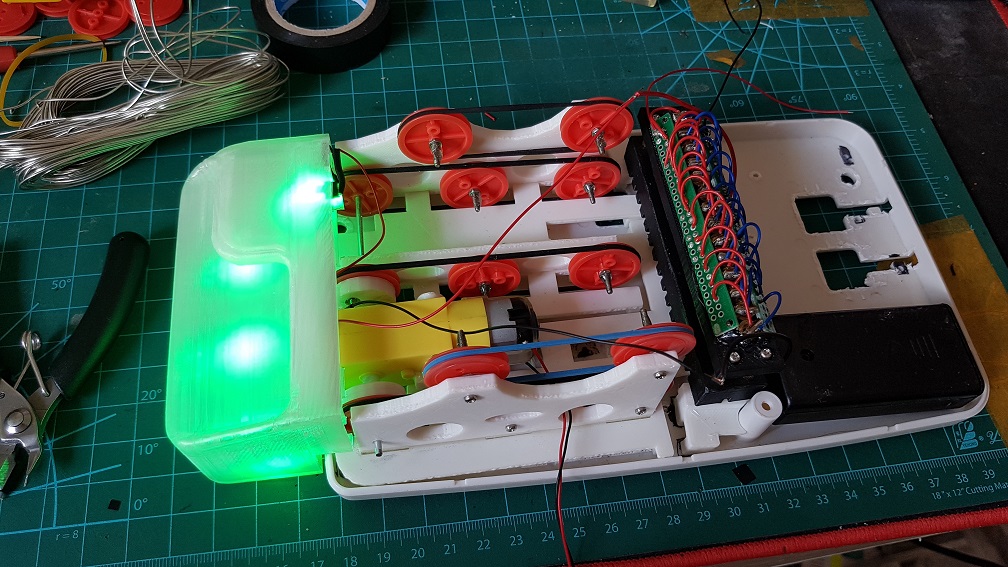

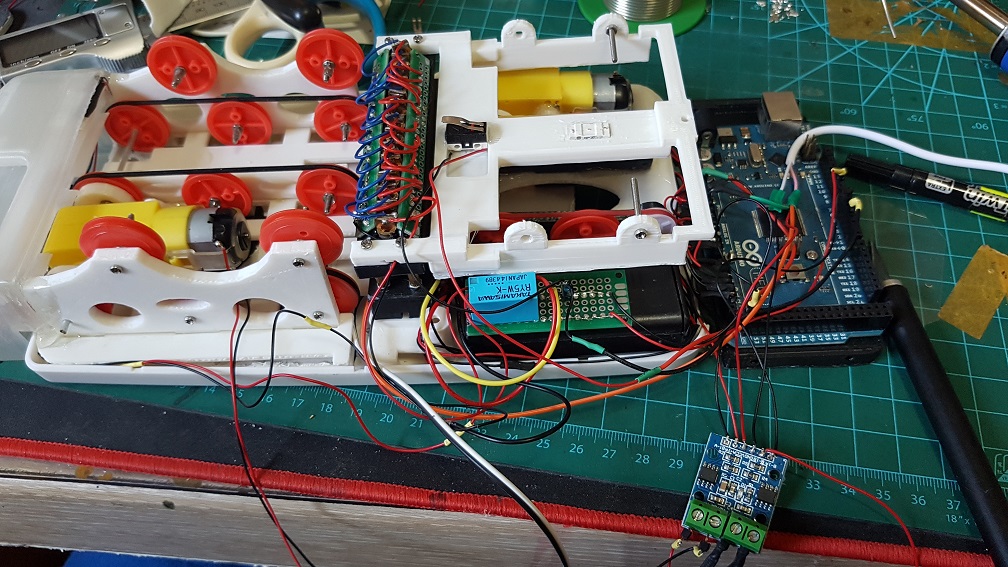

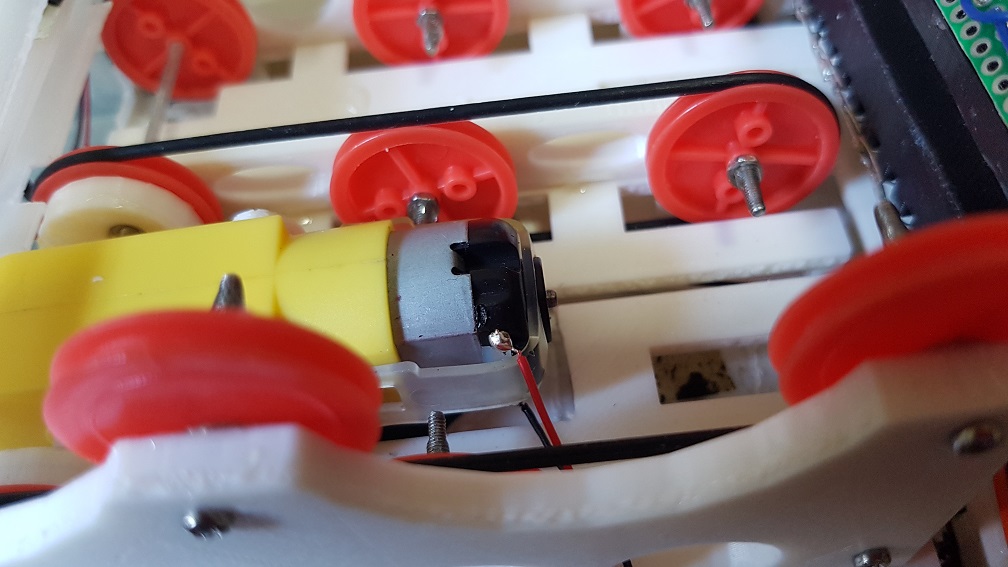

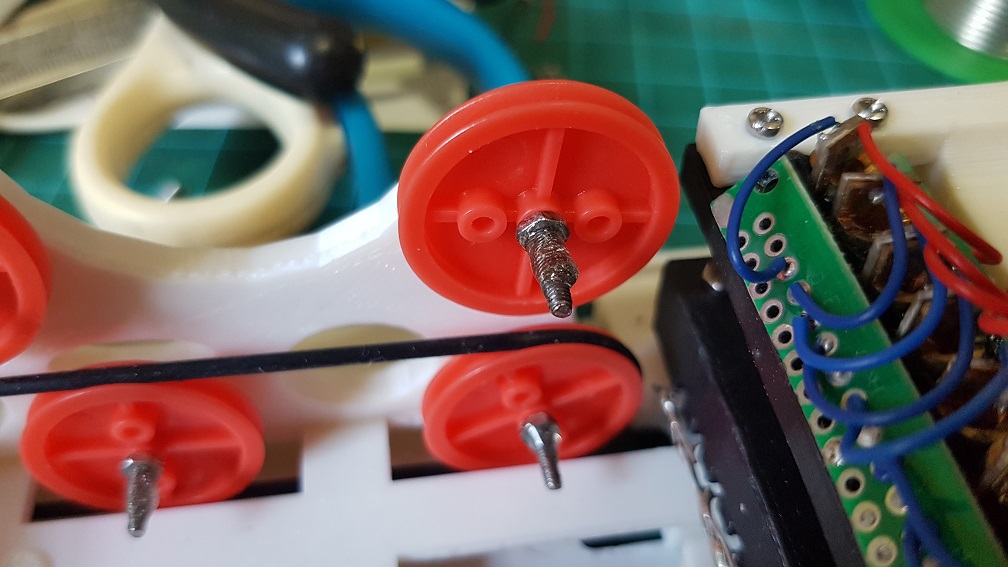

Since the previous post, in which I roughly built the belt system that drives the Music Card into the laser reader, I have added the motor to the system and tried it. At this point it seemed to work, and I thought all I needed was to slow the motor down and it would be all right.

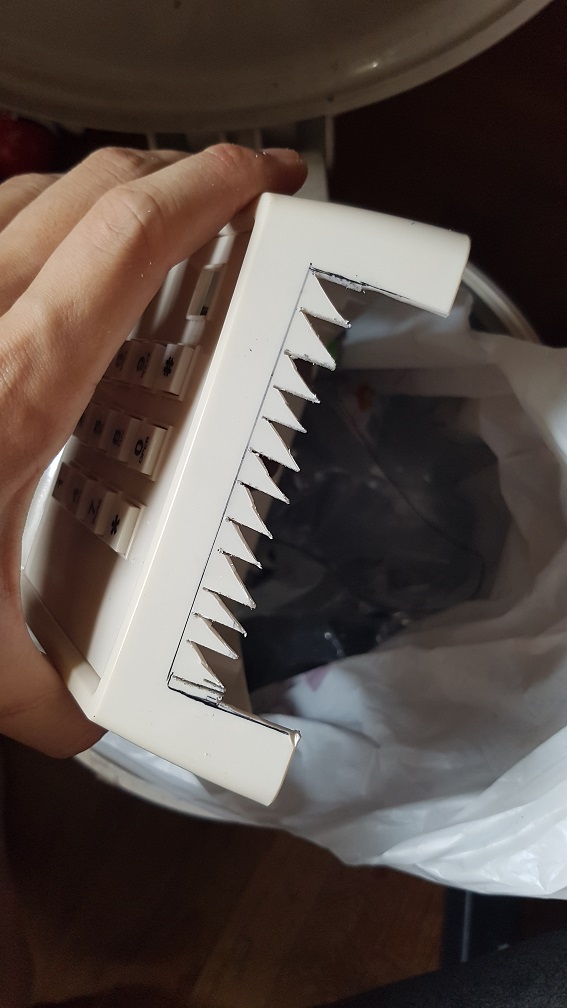

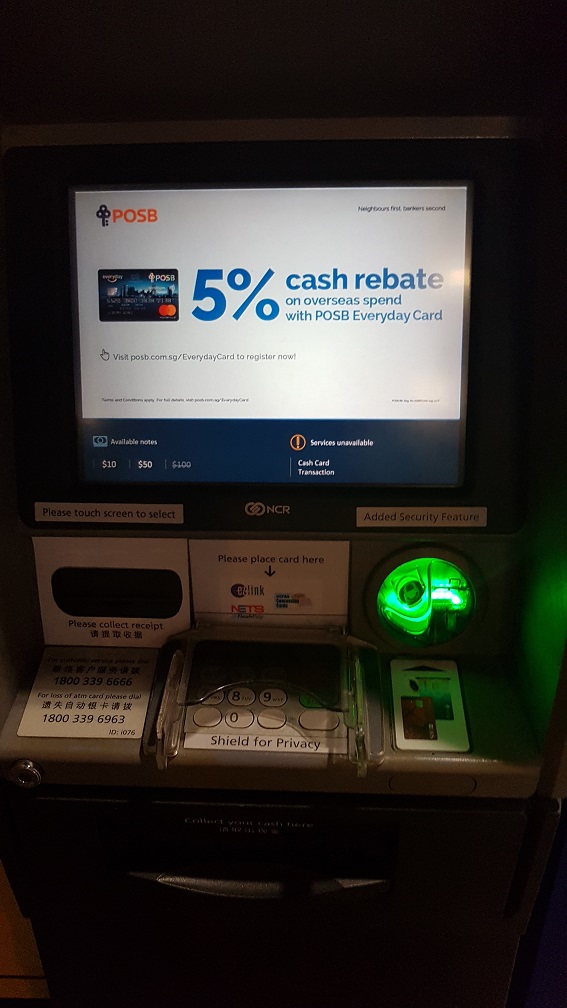

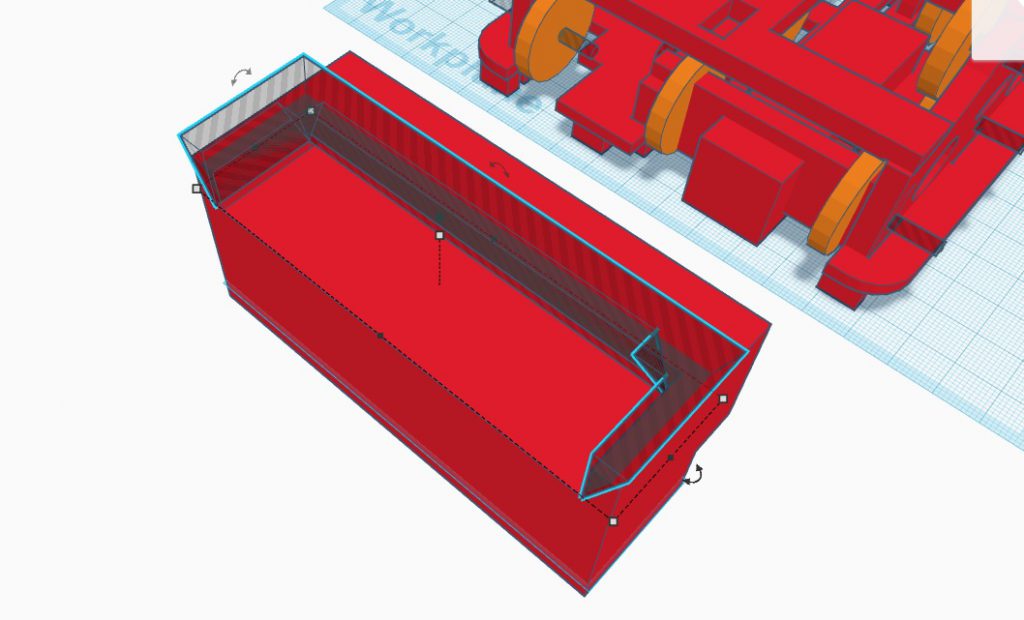

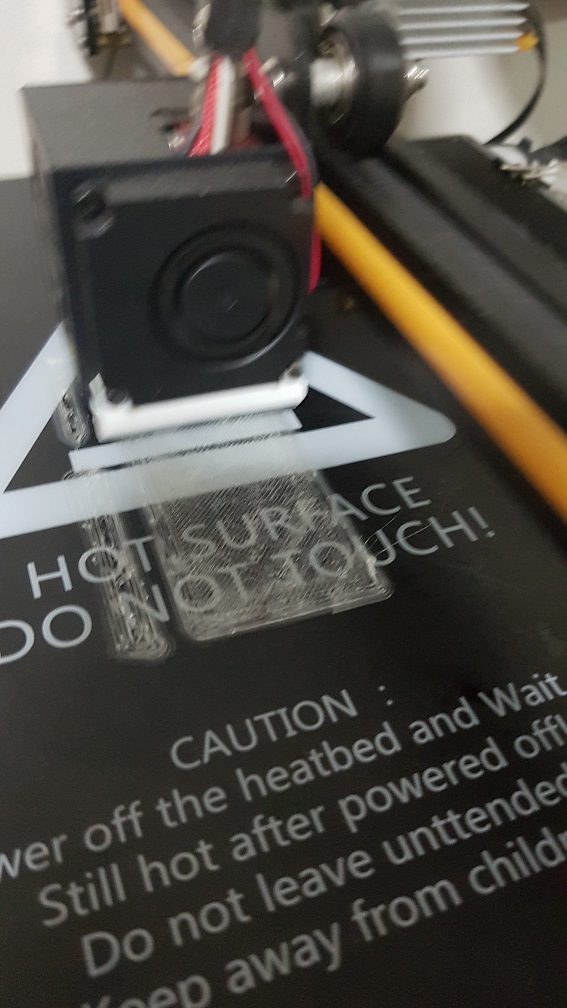

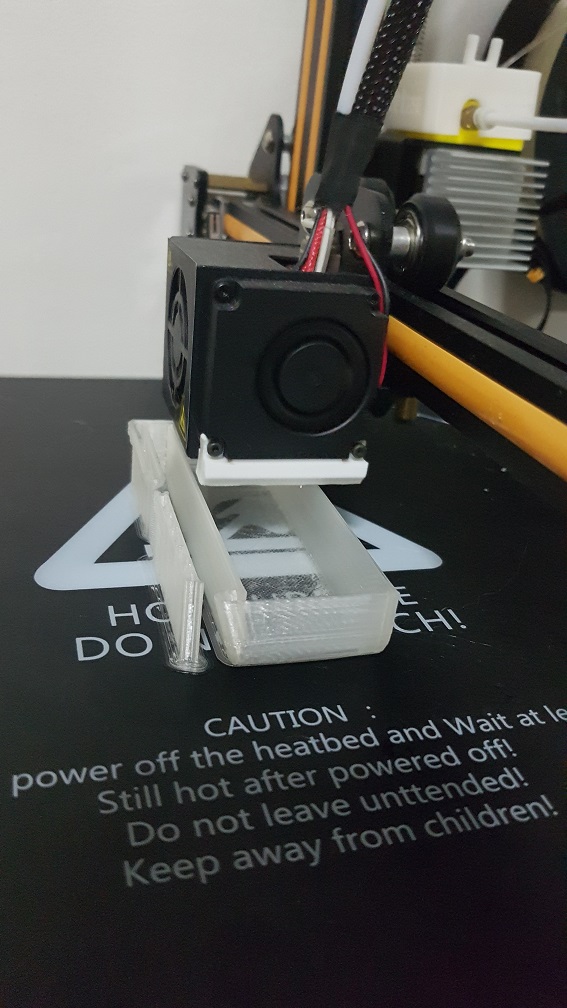

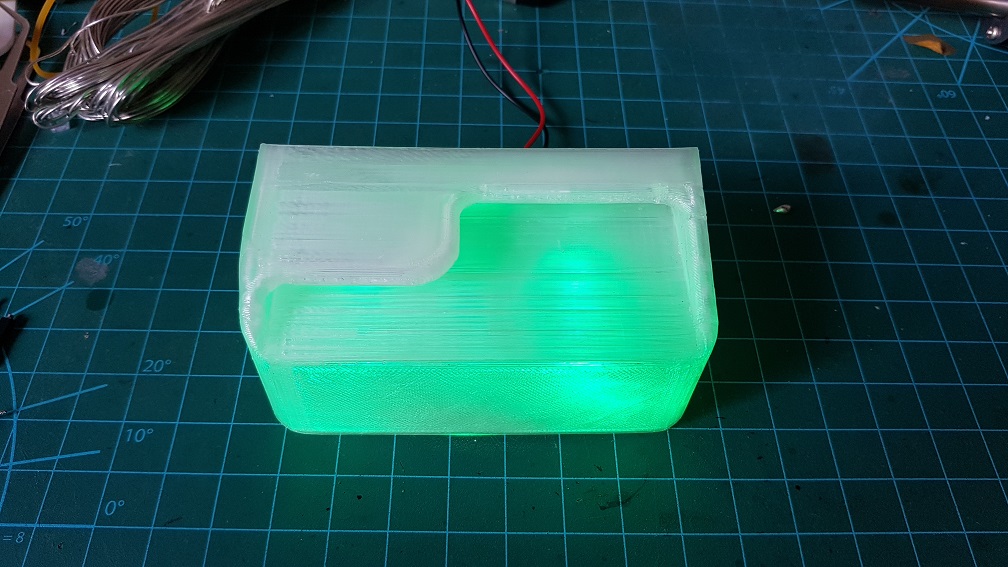

After I cut the hole, I proceeded to model the card slot. I took inspiration from ATMs so that users would have something familiar and would know how to insert the Music Card without being instructed, since I assumed that they had used an ATM at some point in their lives.

After the modelling, which I am really happy with, I proceeded to print it.

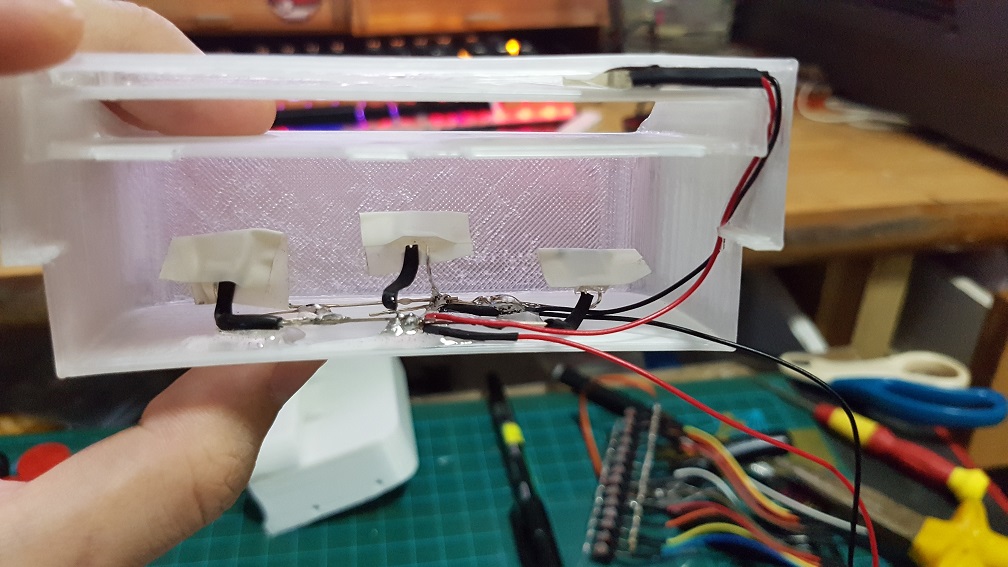

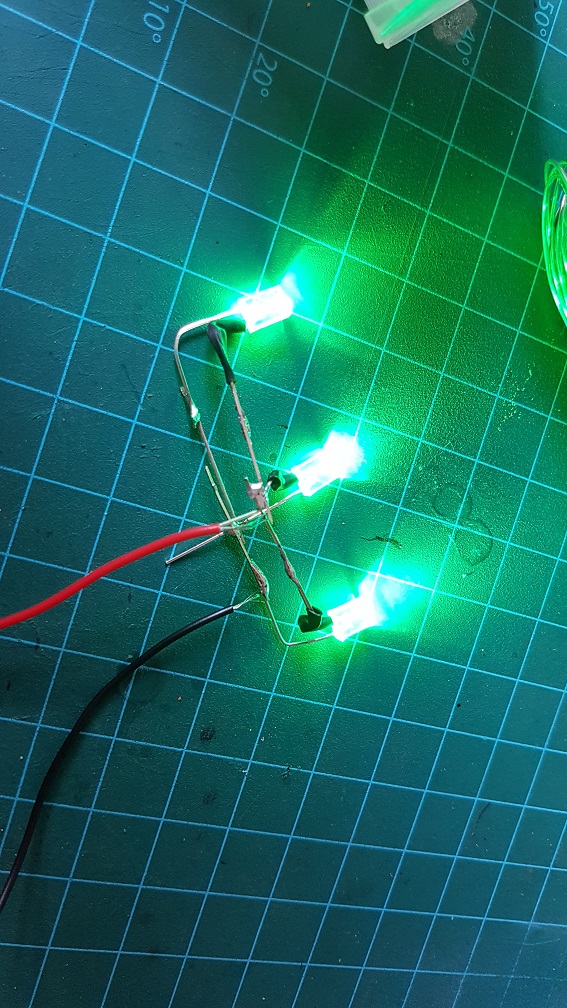

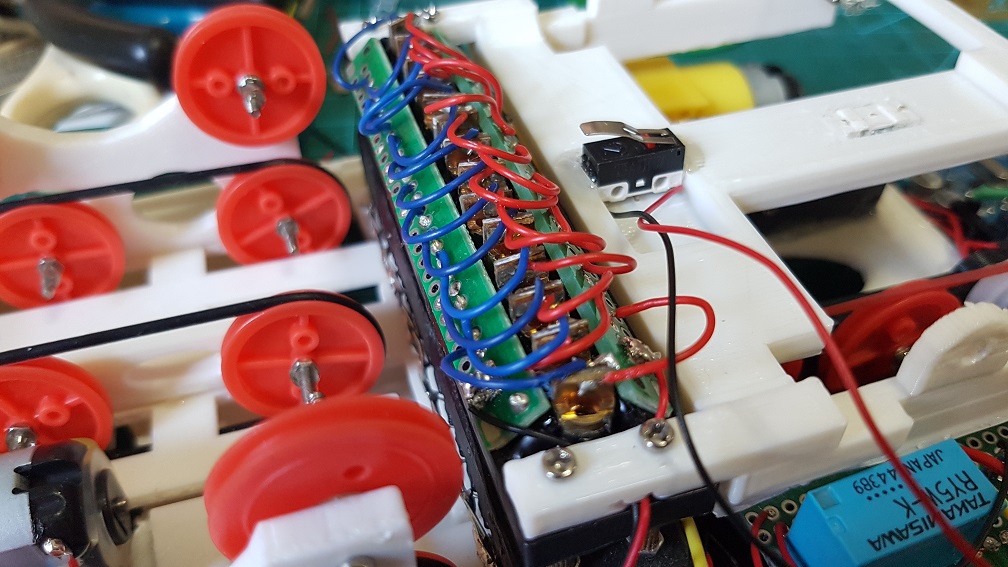

Since it was looking good, I went ahead and made a nicer LED system for it by soldering 4 LEDs (3 on the bottom and one on the top).

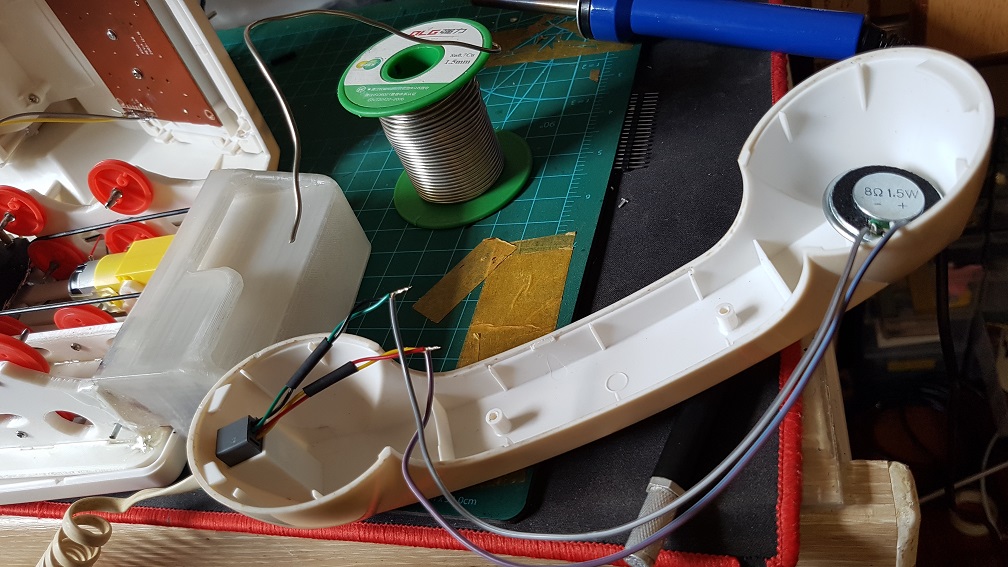

Next, I epoxied the speaker onto the bottom of the front belt drive, since there is already a hole in the bottom shell for the speaker.

This is an 8 ohm, 0.5 W speaker that will be plugged directly into the Arduino.

I also epoxied the 4 LEDs into the card slot to prevent them from sliding around.

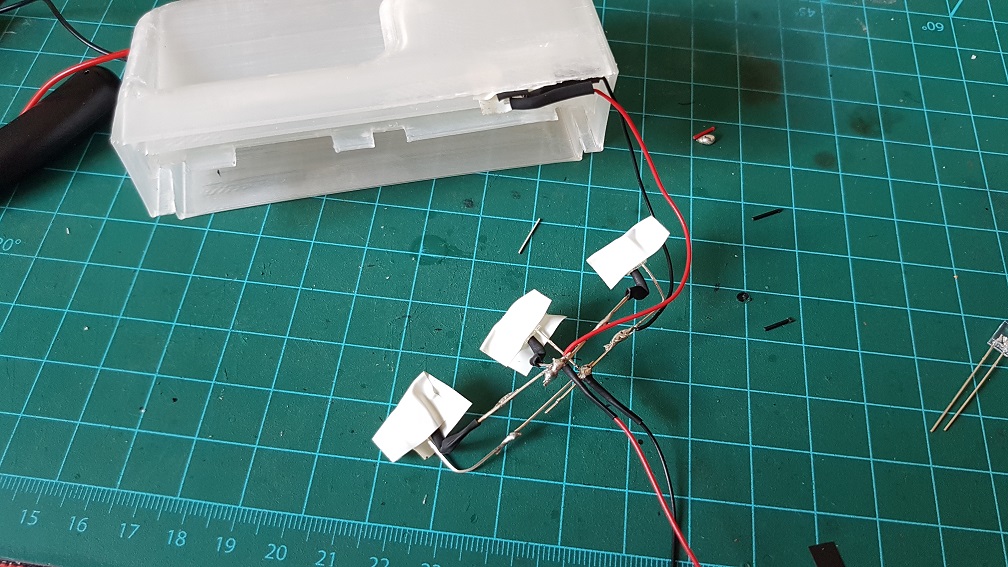

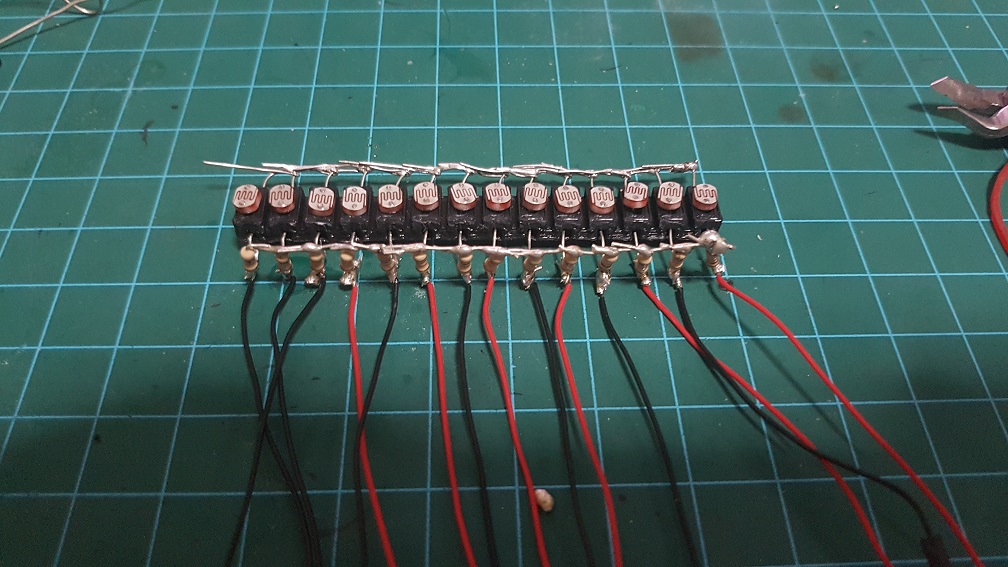

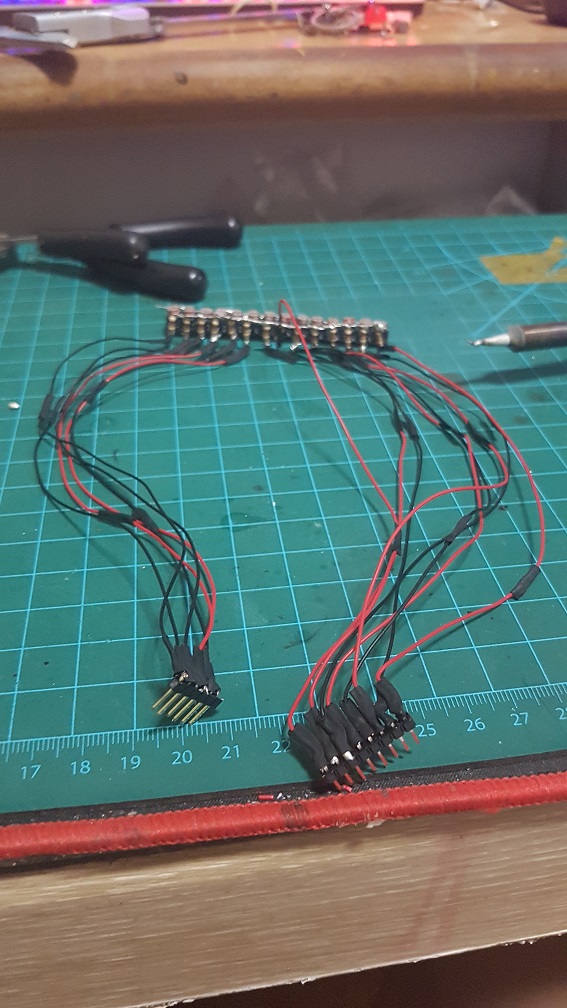

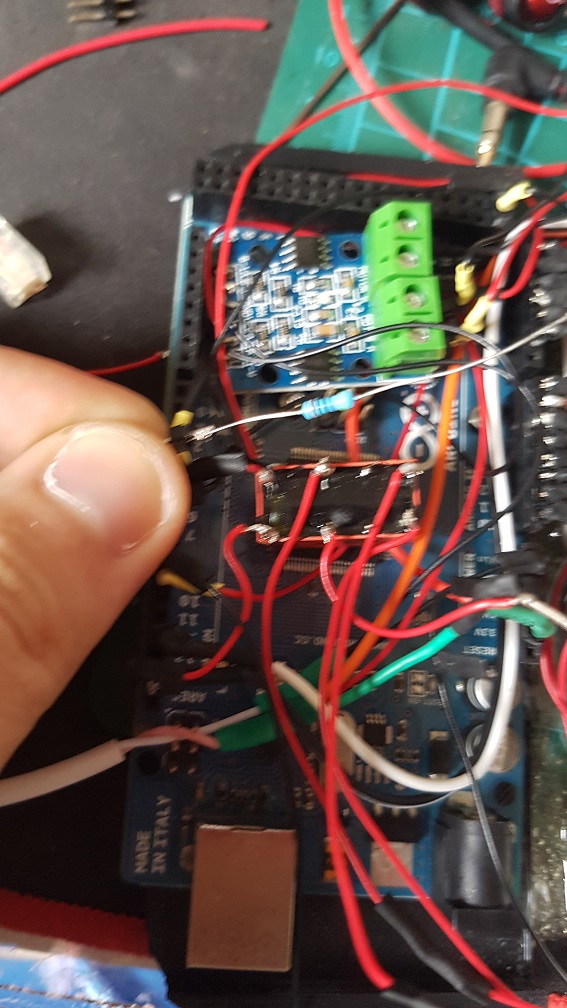

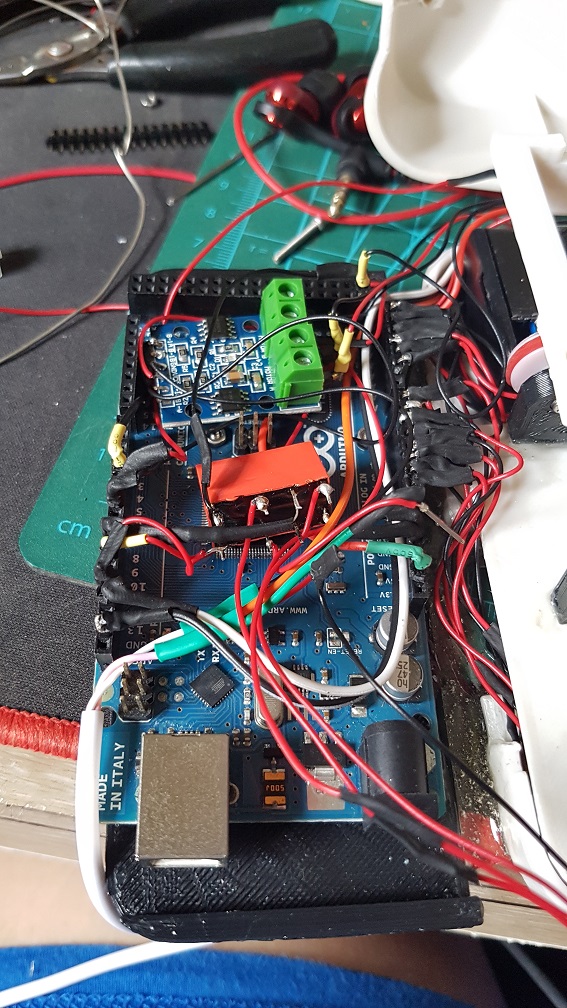

And then came the soldering party.

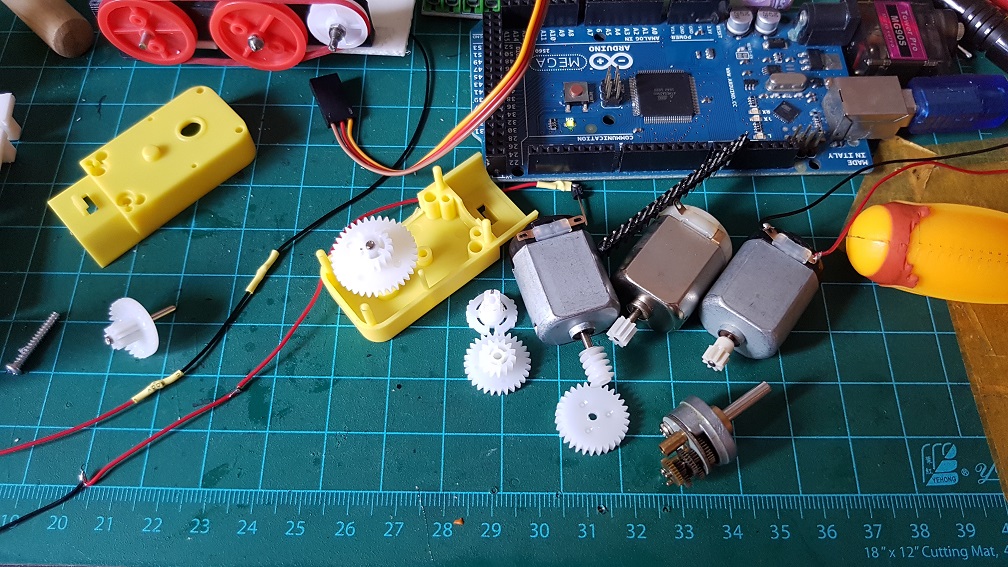

It was at this point that I realized that if I reduced the speed of my DC motor to the speed of the music, I wouldn't have enough torque to pull the card in.

After an afternoon of panicking, looking for an alternative motor, and even thinking of redesigning my whole belt system…

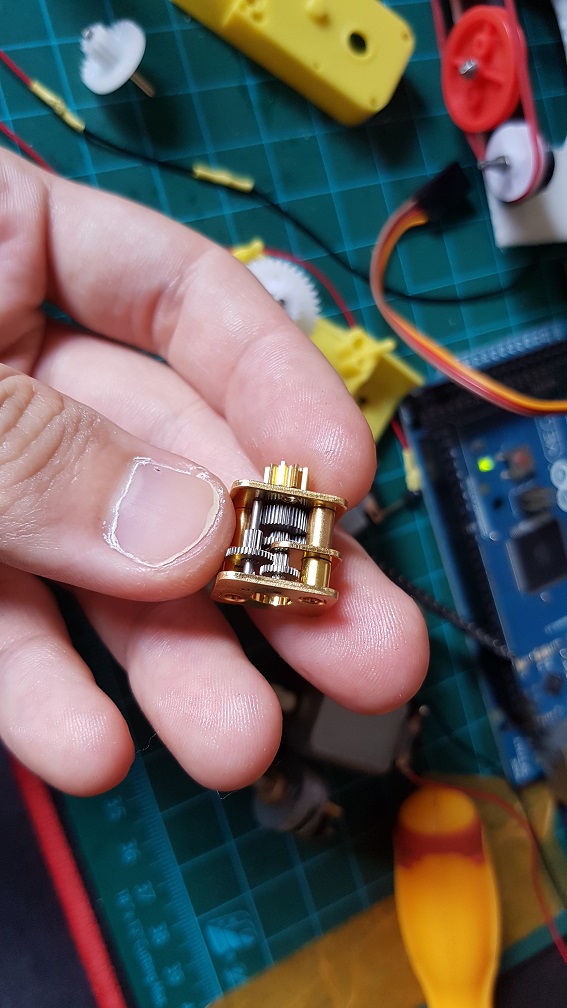

And then I found that I had a longer DC motor with a metal gearbox built into it, and I tried to figure out if I could incorporate this gearbox into my current system. That was also rather impossible, as the ratio for this gearbox is about 1:45 when I only need about 1:5 to 1:8. If I used it, the belt drive would run too slowly.

The same goes for this one, but its ratio is 1:250… even slower.
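To put those ratios side by side, here is a rough Python sketch of the arithmetic. The 6000 RPM motor speed is a placeholder assumption, not a measured value, and the helper names are made up for illustration; only the ratios come from the gearboxes described above.

```python
# Rough comparison of gearbox output speeds.
# MOTOR_RPM is a placeholder assumption, not a measured value.
MOTOR_RPM = 6000.0


def output_rpm(motor_rpm: float, reduction: float) -> float:
    """Output shaft speed for a 1:reduction gearbox."""
    return motor_rpm / reduction


def times_too_slow(available: float, needed: float) -> float:
    """How many times slower the available gearbox is than the needed one."""
    return available / needed


if __name__ == "__main__":
    # Ratios from the post: 1:5 to 1:8 needed, 1:45 and 1:250 on hand.
    for reduction in (5, 8, 45, 250):
        print(f"1:{reduction:<3} -> {output_rpm(MOTOR_RPM, reduction):7.1f} RPM")
    # Compare the 1:45 gearbox against both ends of the needed range.
    print(f"1:45 vs 1:8: {times_too_slow(45, 8):.1f}x slower")
    print(f"1:45 vs 1:5: {times_too_slow(45, 5):.1f}x slower")
```

Whatever the real motor speed is, the 1:45 gearbox comes out several times slower than anything in the 1:5 to 1:8 range, and the 1:250 one is slower still.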

So to solve this problem, I tried a medium speed, which is faster than the song should be and still got stuck about 30% of the time, and I removed the buttons (which detected the card when the user inserted it and triggered the motor to turn the belt) because they caused extra friction. I also jump-start the motor by spinning it at full speed for half a second to overcome the initial force required when the motor starts.
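The jump-start idea can be sketched as a small function that picks the motor power from the time since start-up. This is a Python illustration of the logic only, not the Arduino code from the project: the half second comes from the description above, 255 is the maximum 8-bit PWM value that Arduino's `analogWrite` accepts, and `CRUISE_DUTY` is a hypothetical stand-in for the "medium speed".

```python
KICK_MS = 500      # from the post: full speed for half a second
FULL_DUTY = 255    # 8-bit PWM maximum, as used by Arduino analogWrite
CRUISE_DUTY = 150  # hypothetical "medium speed" value, not from the post


def motor_duty(elapsed_ms: int) -> int:
    """PWM duty to apply elapsed_ms after the motor is switched on."""
    if elapsed_ms < 0:
        return 0           # motor has not been started yet
    if elapsed_ms < KICK_MS:
        return FULL_DUTY   # kick phase: overcome the starting friction
    return CRUISE_DUTY     # afterwards, drop to the playback speed


if __name__ == "__main__":
    for t in (0, 250, 499, 500, 2000):
        print(f"{t:5d} ms -> duty {motor_duty(t)}")
```

On the Arduino itself, the same decision would presumably be made inside the main loop from a start timestamp, with the result written to the motor driver's PWM pin.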

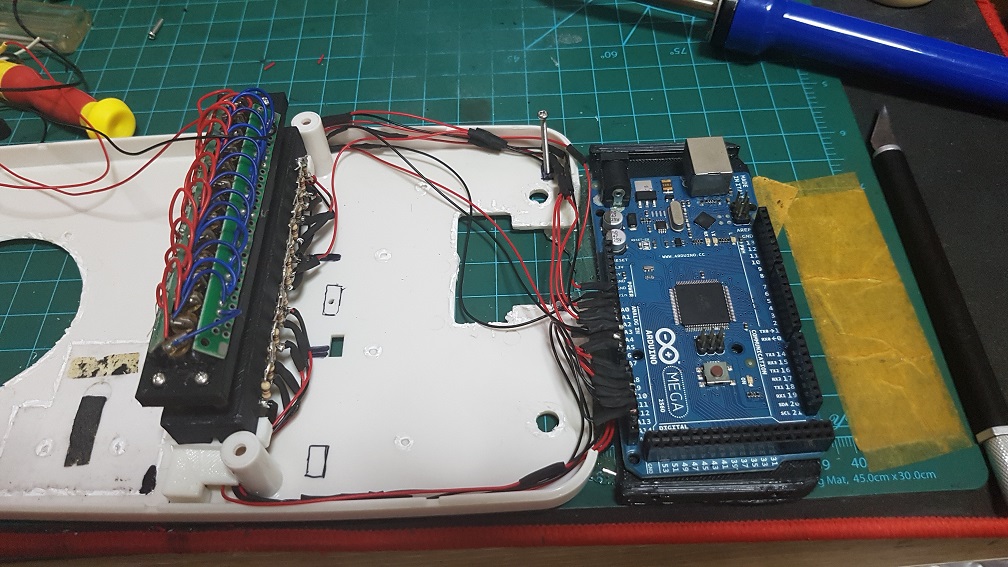

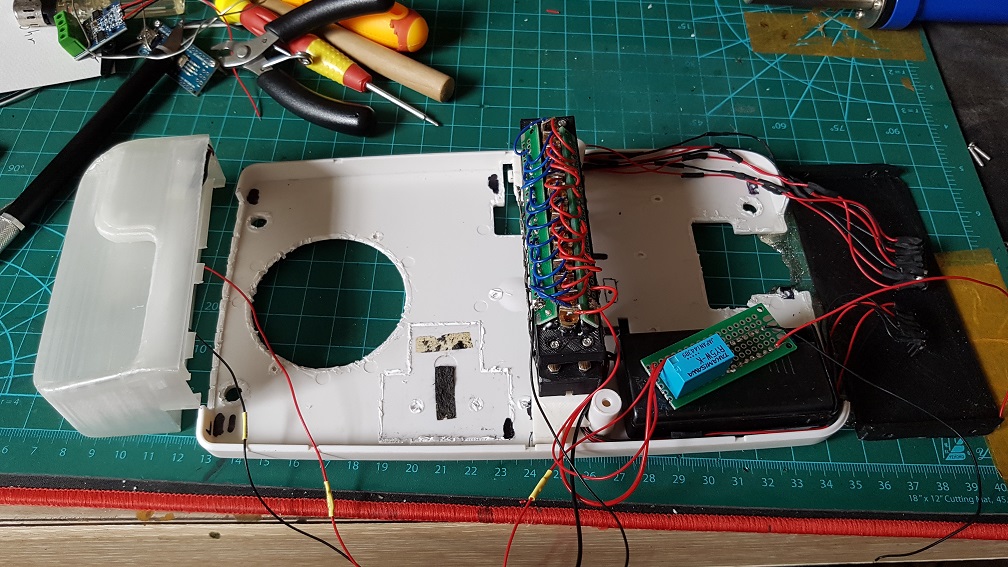

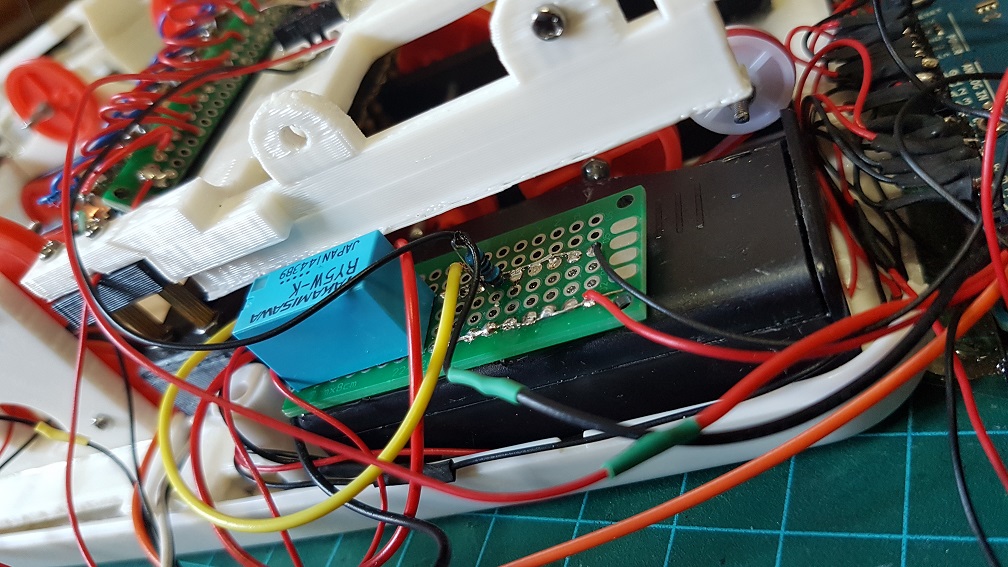

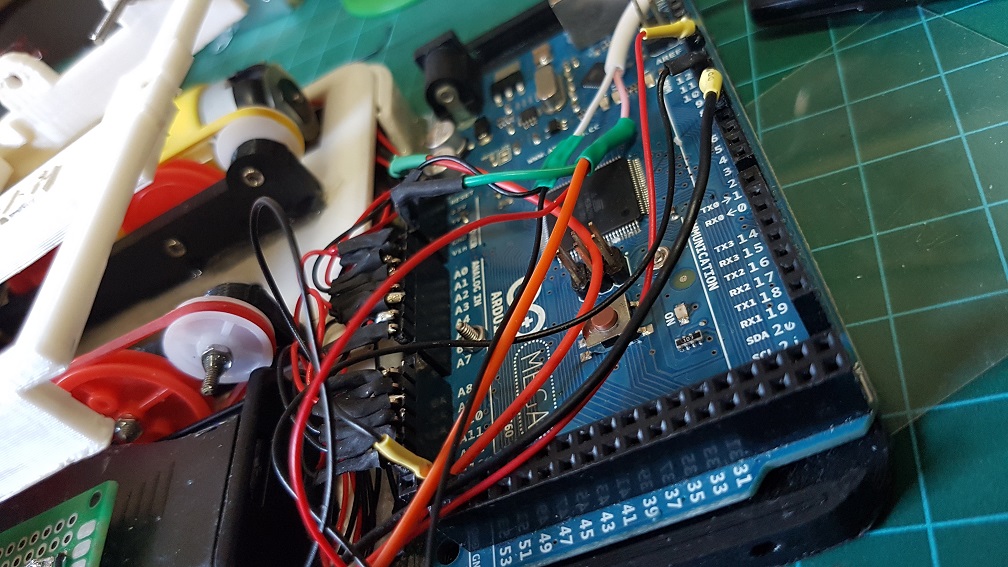

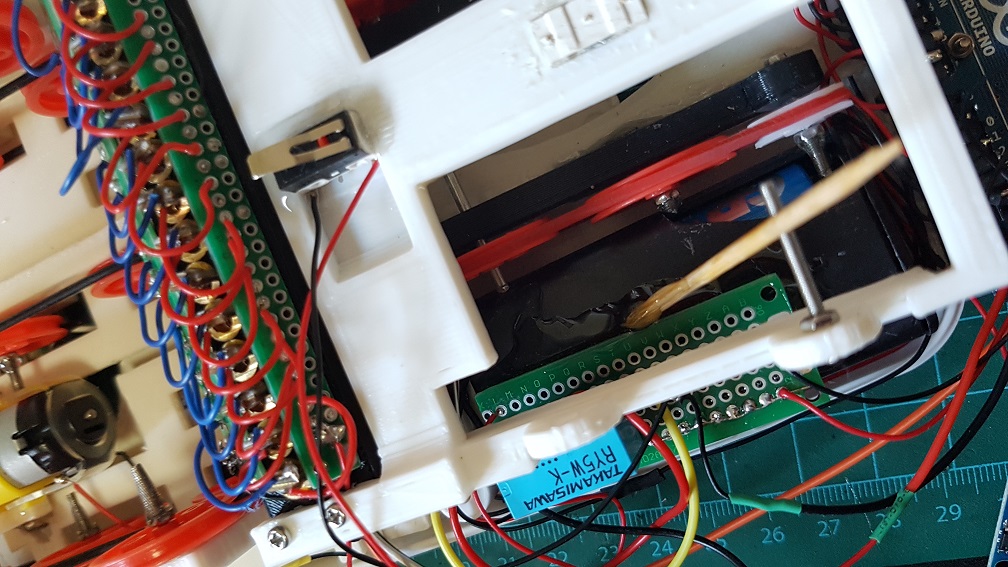

The messy configuration, components and wiring.

It took me some time to sort out the messy wiring and make sure that none of the wires interfered with the track that the Music Card travels along.

This was after finding a workable speed for the sound and fixing the getting-stuck problem by removing the buttons.

After this, I tried to put the majority of the code together.

I did not expect this to work so well, and I am really excited about it!

Towards the end of the project.

Some may wonder how I made the Music Cards.

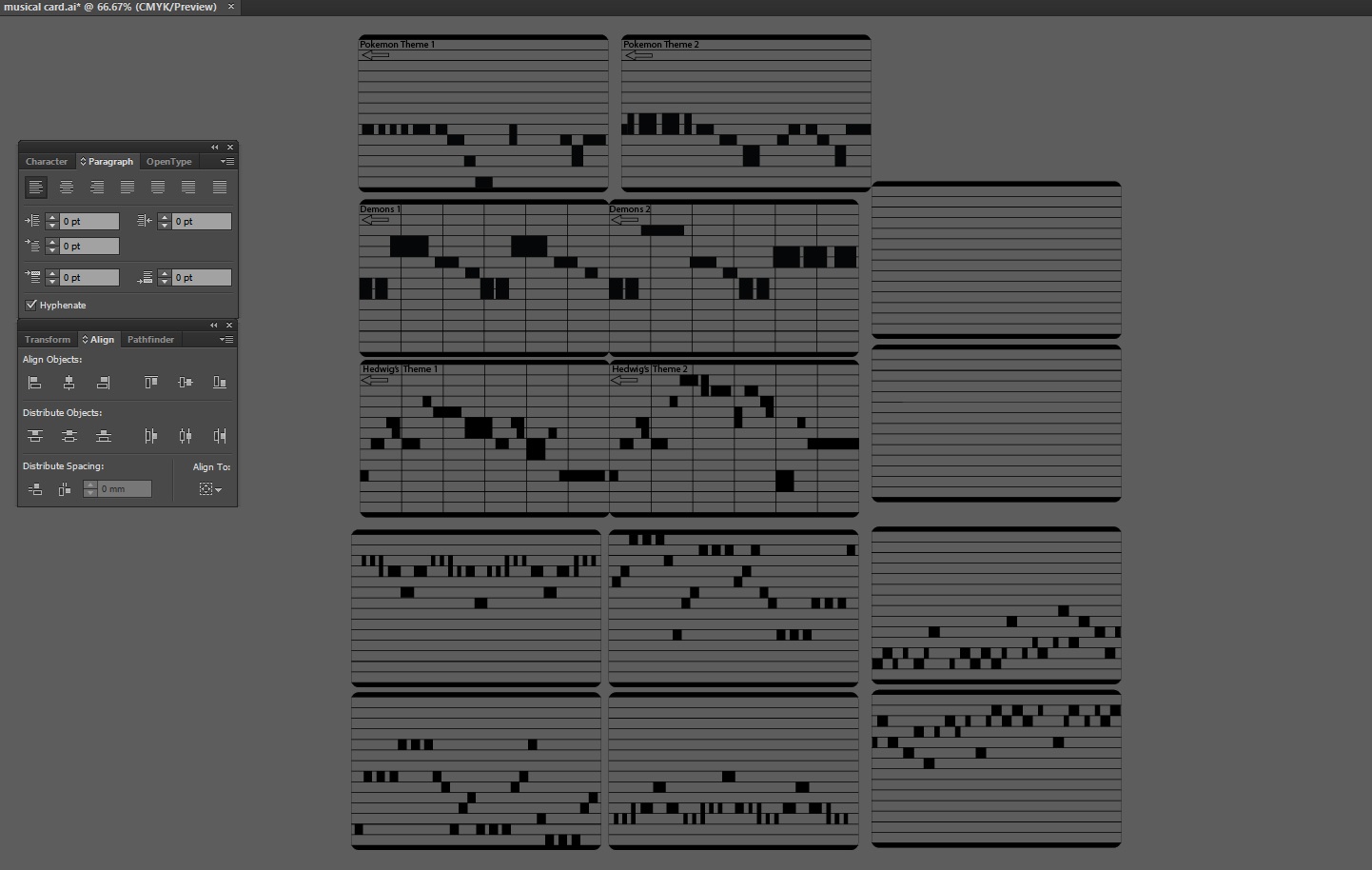

I copied a few from online, like Demons by Imagine Dragons, Hedwig's Theme from Harry Potter, and the Pokémon theme song. These are labeled on the cards; the ones that aren't labeled are what I composed myself. Since I have no music background, I composed by trial and error until it sounded like a tune.

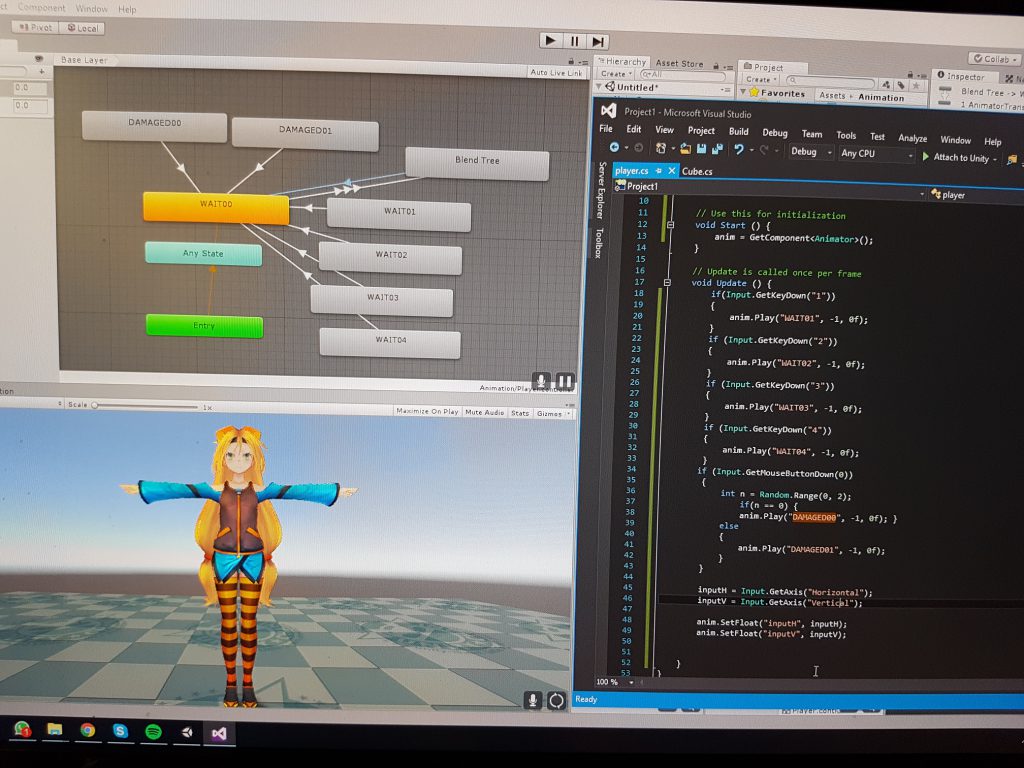

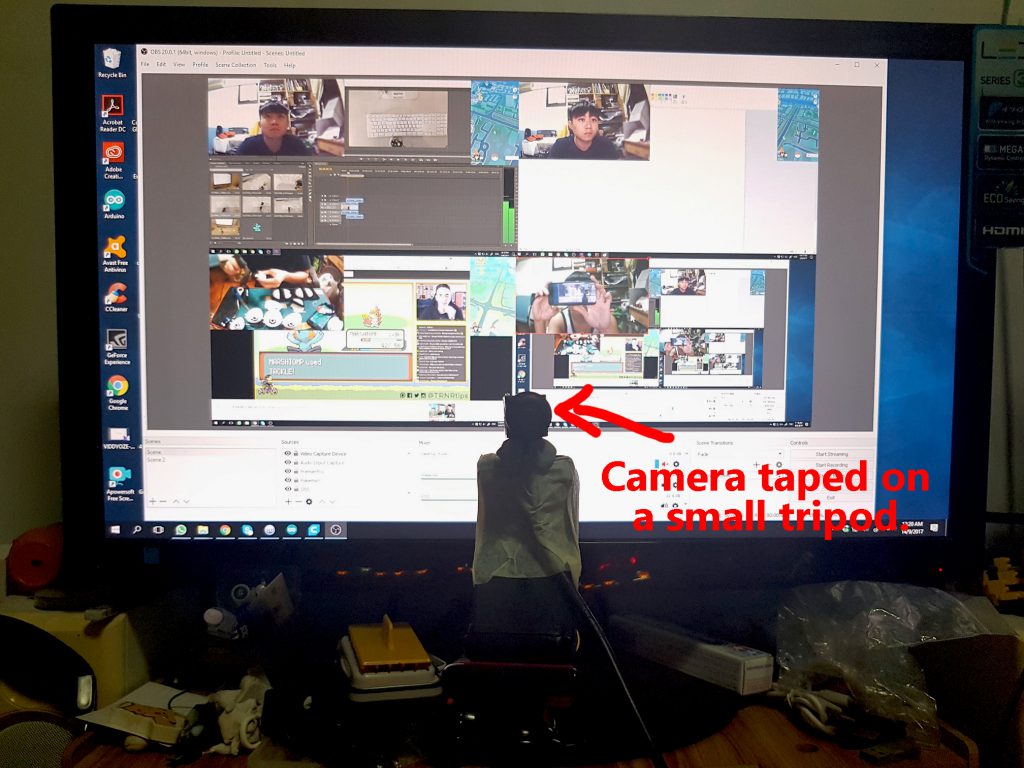

This was screen recorded when I tried to compose my 4th tune for this project:

After this was completed, I took a screenshot and imported it into Illustrator to trace it onto the card layout that I made.

And this was how the cards were made.

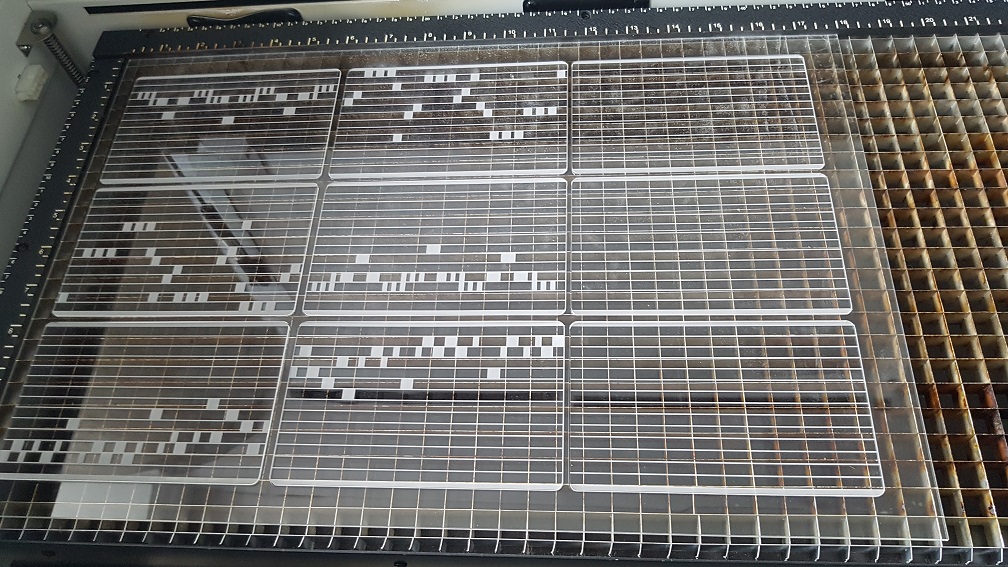

Laser rastered and cut in school on 2 mm acrylic.

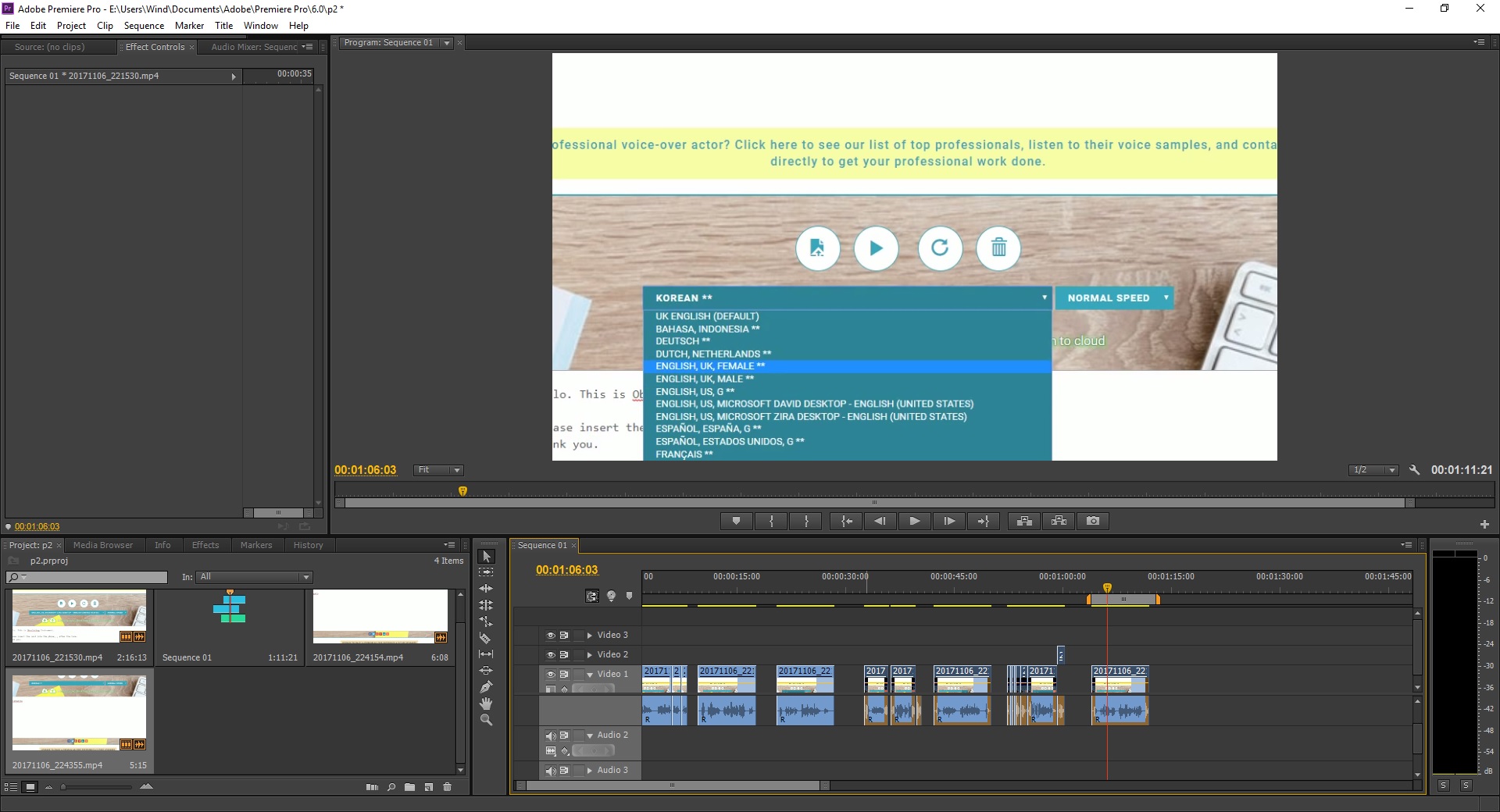

And how about the voice commands in 7 different accents?

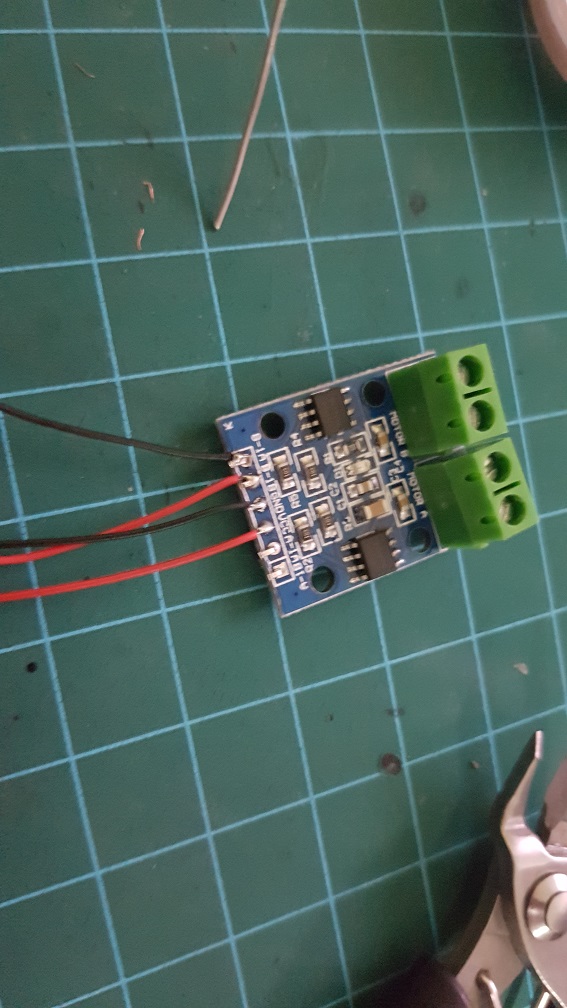

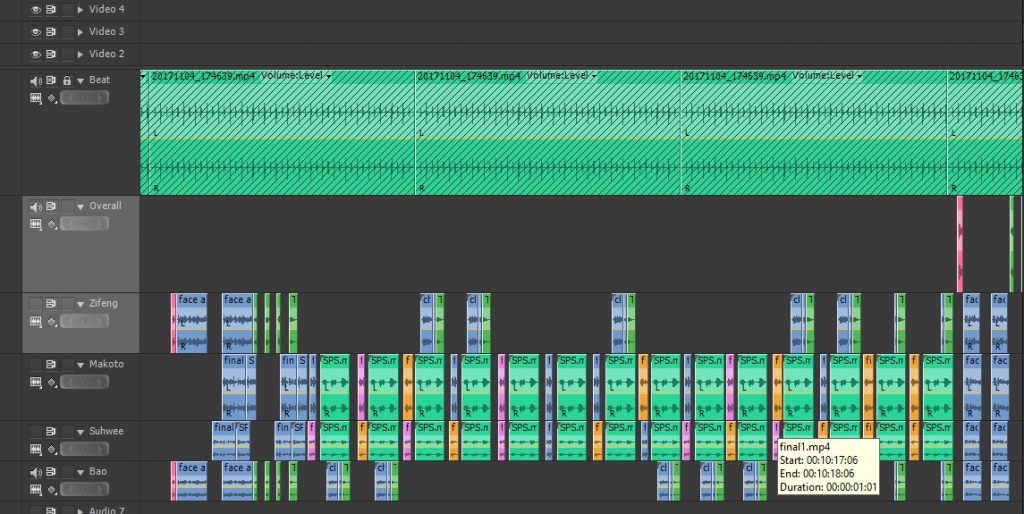

Well, this is relatively simple: I typed whatever I wanted into a web-based text-to-speech reader, had it read the text out in different accents, edited the clips in Premiere Pro to cut them to exactly the same length (9 seconds), and put them on the SD card in the Obseleting Instrument's MP3 decoder.

I really like the Japanese and Korean accents; they're really funny!

Why did I make it speak in different accents? It was to engage users and make them feel like there was really life in the system, as if they had called or received a call from a real person. For example, if they discussed it with a friend who said there was an Indian accent while what they heard was a British accent, they might want to try Obseleting Instrument a few more times. The accents are there to add variability to the system.
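One way the accent picking could work is a simple random choice among the 7 clips. This is a Python sketch under assumptions: the post does not say how a clip is actually chosen, and the file names below are hypothetical. Only the count of 7 accents and the 9-second clip length come from the description above.

```python
import random

# File names are hypothetical; the post only says there are 7 accent clips,
# each trimmed to the same 9-second length.
ACCENT_TRACKS = [f"accent_{i}.mp3" for i in range(1, 8)]
CLIP_SECONDS = 9


def pick_accent(rng: random.Random) -> str:
    """Choose one of the accent clips at random (assumed selection method)."""
    return rng.choice(ACCENT_TRACKS)


if __name__ == "__main__":
    rng = random.Random()
    for call in range(3):
        print(f"call {call + 1}: play {pick_accent(rng)} for {CLIP_SECONDS} s")
```

Because every clip has the same length, the rest of the sequence can wait a fixed 9 seconds regardless of which accent was picked.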

In Conclusion

Throughout this project, I've learnt many things, like how to model objects in Tinkercad and take measurements properly. Everything I modelled failed a few times before it worked, which is why 3D printing is a good prototyping process: I print a part and test whether it works. If it doesn't, I shave off some material to see if it fits; if it then does, I take new measurements for the edited model.

Also, starting with a telephone case and scaling everything to fit inside it was really a challenge, especially at the start, when I could not measure how big the interior was and could only guess and print some test pieces to try it out.

In this project, I realized that for a project requiring multiple fields of knowledge, like mechanical and electrical, it was better that I did not know how hard it would be. Had I known that every part of the project would be something I didn't know, I would have been too afraid to jump in. I did something, realized that it didn't work, found a solution to that single problem, carried on, and faced another problem. Solving and learning one problem at a time led me to the completion of the project.

Now that I have completed the project and am looking back, Obseleting Instrument is really a complicated project as a whole. But thinking about it, I am just putting many small systems into one project: using one laser diode and a photoresistor as a switch, playing a tune when triggered, a physical button to sense if the phone was picked up, using a relay to control circuits of different voltages, running two DC motors at the same time, and so on. Obseleting Instrument is just a collection of small systems, which I personally think is what made my journey through this project really interesting, because I explored the basics of these components and learnt a whole lot from it.

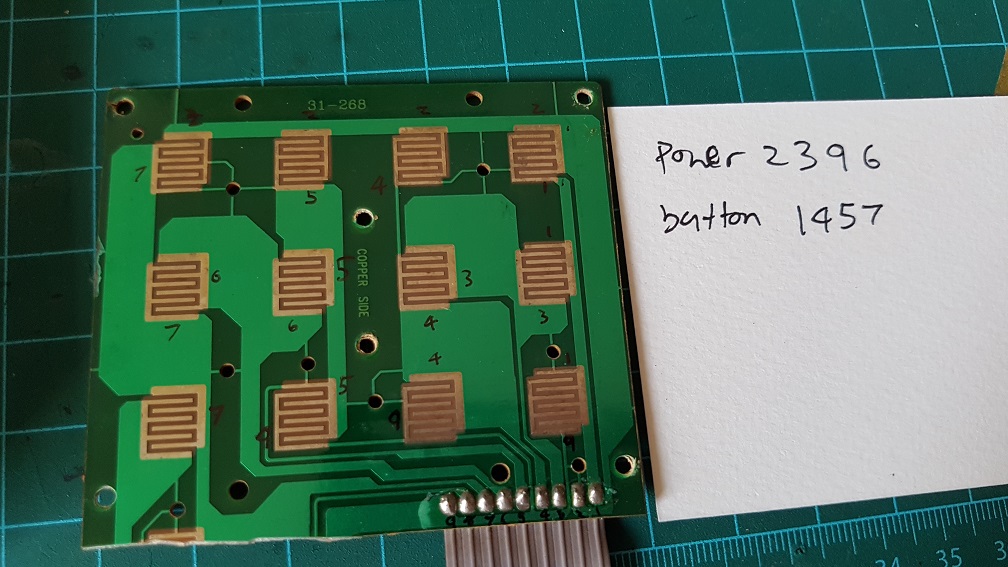

To make use of the original buttons on the phone, I figured out that the 12 buttons run on 2 different circuits, which I could simply solder together to turn all 12 buttons into one. No matter which button the user presses, it is registered as one button press.

Because I cut off the Redial button on the phone to make space for my belt drive system, I epoxied the Redial button back onto the case, as there is no PCB supporting it.

I am really glad that this many pieces worked well together, and this was the biggest challenge. Since there are so many components working together (electrical and mechanical), if even one of the parts failed, it would not work as well as it does now. So I consider myself really lucky that the parts happened to work well even when there are misalignments everywhere.

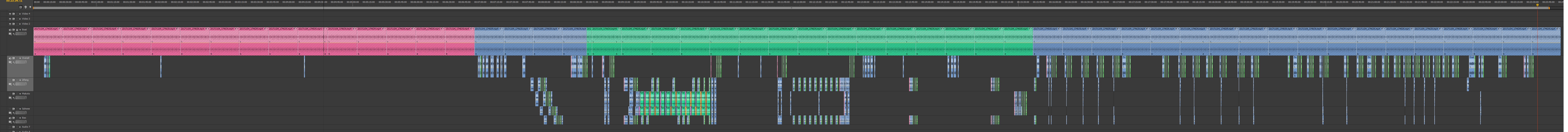

At the end of the scissors-paper-stone segment, we all synchronized on the same action: paper, while Bao and I were counting 5, so we were all showing our palms.

“Live” me flying over to point at “third space” me

The top right (pre-recorded) and bottom right (live) both go to the desktop at around the same time, and you can see the changes that happened on my desktop within those few days.

I was talking about how Korean manga is usually in a long strip format, but the website where I read it (MangaFox) cuts it into a page format.

Thank you!!! =D